【TiDB Environment】Production

【TiDB Version】v5.4.2

【Reproduction Steps】None

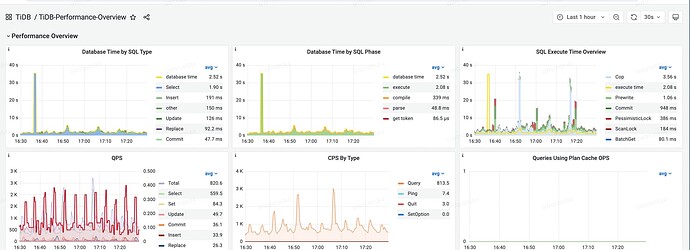

【Problem Encountered: Symptoms and Impact】

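Help needed: two TiDB nodes were OOM-killed one after the other, and physical memory usage on the host peaked at 100%. TiDB and PD are deployed together on the same machine.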

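This happened outside business peak hours: the cluster was running steadily when the OOM was suddenly triggered.

What approach and steps should I follow to find the cause? Thanks a lot!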

【Resource Configuration】Go to TiDB Dashboard > Cluster Info > Hosts and take a screenshot of this page

【Attachments: Screenshots/Logs/Monitoring】

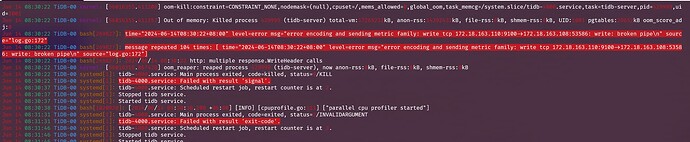

Logs:

```
[Fri Jun 14 08:24:59 2024] [ 429999] 1001 429999 4316303 3598108 30363648 0 0 tidb-server
[Fri Jun 14 08:24:59 2024] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0,global_oom,task_memcg=/system.slice/tidb-4000.service,task=tidb-server,pid=429999,uid=1001
[Fri Jun 14 08:24:59 2024] Out of memory: Killed process 429999 (tidb-server) total-vm:17265212kB, anon-rss:14392432kB, file-rss:0kB, shmem-rss:0kB, UID:1001 pgtables:29652kB oom_score_adj:0
[Fri Jun 14 08:24:59 2024] oom_reaper: reaped process 429999 (tidb-server), now anon-rss:0kB, file-rss:0kB, shmem-rss:0kB
```
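A quick cross-check of the kernel log, assuming the usual x86_64 page size of 4 KiB: the page-based columns in the OOM killer's task row convert exactly to the `total-vm`, `anon-rss`, and `pgtables` values in the `Killed process` line, so tidb-server itself held roughly 13.7 GiB of anonymous memory when it was killed. The column layout in the comment and the `parse_oom_row` helper are illustrative assumptions, not part of any TiDB tooling.

```python
# Row from the kernel OOM-killer task dump above. Assumed column layout:
# [ pid ]  uid  tgid  total_vm  rss  pgtables_bytes  swapents  oom_score_adj  name
OOM_ROW = "[ 429999] 1001 429999 4316303 3598108 30363648 0 0 tidb-server"

PAGE_KB = 4  # assumption: 4 KiB pages (x86_64 default)


def parse_oom_row(row, page_kb=PAGE_KB):
    """Hypothetical helper: convert the page-based columns of one row to kB."""
    pid_part, rest = row.split("]", 1)
    _uid, _tgid, total_vm, rss, pgtables_bytes, _swapents, oom_score_adj, name = rest.split()
    return {
        "pid": int(pid_part.strip("[ ")),
        "name": name,
        "total_vm_kb": int(total_vm) * page_kb,  # compare with total-vm
        "rss_kb": int(rss) * page_kb,  # compare with anon-rss
        "pgtables_kb": int(pgtables_bytes) // 1024,  # compare with pgtables
        "oom_score_adj": int(oom_score_adj),
    }


if __name__ == "__main__":
    info = parse_oom_row(OOM_ROW)
    print(info)
    print(f"RSS at kill time: {info['rss_kb'] / 1024 ** 2:.2f} GiB")
```

Because the converted values line up with the `Killed process` line, the memory was resident in tidb-server's own heap rather than in page cache or shared memory (`file-rss` and `shmem-rss` are both 0).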

tidb_stderr.log:

```
panic: runtime error: invalid memory address or nil pointer dereference
[signal SIGSEGV: segmentation violation code=0x1 addr=0x0 pc=0x28148d7]

goroutine 1 [running]:
github.com/pingcap/tidb/ddl.(*ddl).close(0xc00082f180)
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/ddl/ddl.go:399 +0x77
github.com/pingcap/tidb/ddl.(*ddl).Stop(0xc00082f180, 0x0, 0x0)
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/ddl/ddl.go:327 +0x8a
github.com/pingcap/tidb/domain.(*Domain).Close(0xc000828140)
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/domain/domain.go:695 +0x377
github.com/pingcap/tidb/session.(*domainMap).Get.func1(0x1000001685fc5, 0x7f10440521c8, 0x98)
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/tidb.go:86 +0x69e
github.com/pingcap/tidb/util.RunWithRetry(0x1e, 0x1f4, 0xc001f07a60, 0x18, 0x6468280)
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/util/misc.go:65 +0x7f
github.com/pingcap/tidb/session.(*domainMap).Get(0x642b450, 0x4538850, 0xc0001dbef0, 0xc000828140, 0x0, 0x0)
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/tidb.go:71 +0x1f0
github.com/pingcap/tidb/session.createSessionWithOpt(0x4538850, 0xc0001dbef0, 0x0, 0x3e04200, 0xc000d205a0, 0xc000051980)
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/session.go:2767 +0x59
github.com/pingcap/tidb/session.createSession(...)
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/session.go:2763
github.com/pingcap/tidb/session.BootstrapSession(0x4538850, 0xc0001dbef0, 0x0, 0x0, 0x0)
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/session.go:2598 +0xfe
main.createStoreAndDomain(0x64312a0, 0x3ff6a97, 0x2c)
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:296 +0x189
main.main()
	/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:202 +0x29e
```

```
[2024/06/14 08:29:32.728 +08:00] [WARN] [memory_usage_alarm.go:140] ["tidb-server has the risk of OOM. Running SQLs and heap profile will be recorded in record path"] ["is server-memory-quota set"=false] ["system memory total"=16244236288] ["system memory usage"=13190623232] ["tidb-server memory usage"=9654420392] [memory-usage-alarm-ratio=0.8] ["record path"="/tmp/1001_tidb/MC4wLjAuMDo0MDAwLzAuMC4wLjA6MTAwODA=/tmp-storage/record"]
```
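The figures in the alarm line can be checked the same way. Assuming that, with `server-memory-quota` unset (as the log reports), the alarm compares whole-host memory usage against `memory-usage-alarm-ratio` × total memory, host usage was about 81.2% of the machine's ~15.1 GiB (just over the 0.8 threshold). tidb-server accounted for about 59.4%, and roughly 3.3 GiB was used by other processes on the same host. `alarm_breakdown` is a hypothetical helper for illustration only.

```python
# Figures copied from the memory_usage_alarm.go WARN line above (bytes).
SYSTEM_MEMORY_TOTAL = 16244236288
SYSTEM_MEMORY_USAGE = 13190623232
TIDB_MEMORY_USAGE = 9654420392
ALARM_RATIO = 0.8  # memory-usage-alarm-ratio

GIB = 1024 ** 3


def alarm_breakdown(total, system_usage, tidb_usage, ratio):
    """Hypothetical helper. Assumption: with server-memory-quota unset,
    the alarm fires when whole-host usage exceeds ratio * total."""
    threshold = total * ratio
    return {
        "system_ratio": system_usage / total,
        "tidb_ratio": tidb_usage / total,
        "threshold_gib": threshold / GIB,
        # Memory used by everything except tidb-server (other components, OS, ...).
        "non_tidb_gib": (system_usage - tidb_usage) / GIB,
        "alarm": system_usage > threshold,
    }


if __name__ == "__main__":
    result = alarm_breakdown(
        SYSTEM_MEMORY_TOTAL, SYSTEM_MEMORY_USAGE, TIDB_MEMORY_USAGE, ALARM_RATIO
    )
    for key, value in result.items():
        print(f"{key}: {value:.3f}" if isinstance(value, float) else f"{key}: {value}")
```

Separating tidb-server's share from the rest of the host's usage shows how much headroom the co-located processes take away.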