【TiDB environment】Test

【TiDB version】v7.1.5

【Reproduction path】Upgraded from v7.1.4 to v7.1.5

【Problem encountered: symptoms and impact】

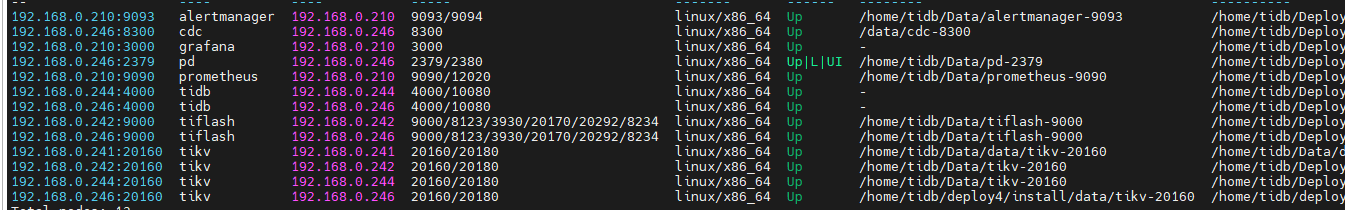

A TiKV node is reporting errors, yet the cluster status shows as normal:

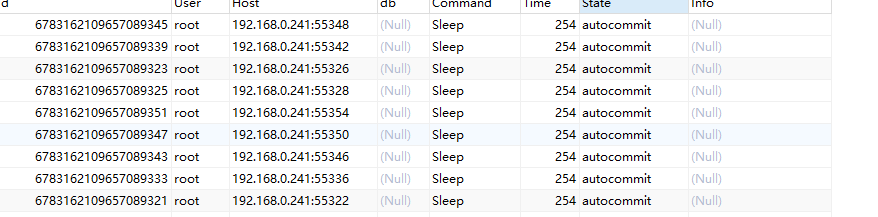

However, when I checked the running threads, they were stuck committing the whole time:

Then I checked the log of one of the TiKV nodes:

> [2024/06/11 04:04:01.635 -04:00] [INFO] [<unknown>] ["Subchannel 0x7f08e136c580: Retry in 1000 milliseconds"]

> [2024/06/11 04:04:01.635 -04:00] [WARN] [raft_client.rs:296] ["RPC batch_raft fail"] [err="Some(RpcFailure(RpcStatus { status: 14-UNAVAILABLE, details: Some(\"failed to connect to all addresses\") }))"] [sink_err="Some(RpcFinished(Some(RpcStatus { status: 14-UNAVAILABLE, details: Some(\"failed to connect to all addresses\") })))"] [to_addr=192.168.0.241:20161]

> [2024/06/11 04:04:01.638 -04:00] [WARN] [raft_client.rs:199] ["send to 192.168.0.241:20161 fail, the gRPC connection could be broken"]

> [2024/06/11 04:04:01.638 -04:00] [ERROR] [transport.rs:163] ["send raft msg err"] [err="Other(\"[src/server/raft_client.rs:208]: RaftClient send fail\")"]

> [2024/06/11 04:04:01.638 -04:00] [INFO] [transport.rs:144] ["resolve store address ok"] [addr=192.168.0.241:20161] [store_id=1]

> [2024/06/11 04:04:01.638 -04:00] [INFO] [raft_client.rs:48] ["server: new connection with tikv endpoint"] [addr=192.168.0.241:20161]

> [2024/06/11 04:04:01.638 -04:00] [INFO] [<unknown>] ["Connect failed: {\"created\":\"@1718093041.638676386\",\"description\":\"Failed to connect to remote host: Connection refused\",\"errno\":111,\"file\":\"/rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.5.3/grpc/src/core/lib/iomgr/tcp_client_posix.cc\",\"file_line\":200,\"os_error\":\"Connection refused\",\"syscall\":\"connect\",\"target_address\":\"ipv4:192.168.0.241:20161\"}"]

The log is full of errors like these, and it is being written extremely fast, amounting to hundreds of GB.
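Since the errors all seem to point at a single address, one way to confirm that without opening a multi-hundred-GB file in an editor is to stream the log and count failures per destination. A minimal Python sketch, assuming the line format matches the excerpts above; `summarize_failures` and `summarize_log_file` are hypothetical helpers, not part of any TiDB tool:

```python
import re
from collections import Counter

# Destination in raft_client.rs lines such as "RPC batch_raft fail ... [to_addr=IP:PORT]".
TO_ADDR = re.compile(r"\[to_addr=([\d.]+:\d+)\]")
# Destination in gRPC "Connect failed" lines; the embedded JSON is backslash-escaped in the log.
TARGET_ADDR = re.compile(r'target_address\\?":\\?"ipv4:([\d.]+:\d+)')


def summarize_failures(lines):
    """Count how many failure lines point at each destination ip:port."""
    counts = Counter()
    for line in lines:
        match = TO_ADDR.search(line) or TARGET_ADDR.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def summarize_log_file(path):
    """Stream a TiKV log file line by line so even a huge file is never fully loaded."""
    with open(path, encoding="utf-8", errors="replace") as f:
        return summarize_failures(f)
```

For example, `summarize_log_file("/path/to/tikv.log").most_common()` would list each destination with its failure count (the path is a placeholder). Lines that only mention an address without failing, such as `resolve store address ok`, are deliberately not counted.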

Checking the log on the .241 machine (192.168.0.241):

> [2024/06/11 04:04:59.416 -04:00] [INFO] [apply.rs:1699] ["execute admin command"] [command="cmd_type: ChangePeerV2 change_peer_v2 { changes { peer { id: 144727509 store_id: 1 } } changes { change_type: AddLearnerNode peer { id: 144053190 store_id: 874002 role: Learner } } }"] [index=147] [term=85] [peer_id=144558754] [region_id=144053189]

> [2024/06/11 04:04:59.416 -04:00] [INFO] [apply.rs:2292] ["exec ConfChangeV2"] [epoch="conf_ver: 96 version: 445"] [kind=EnterJoint] [peer_id=144558754] [region_id=144053189]

> [2024/06/11 04:04:59.416 -04:00] [INFO] [apply.rs:2473] ["conf change successfully"] ["current region"="id: 144053189 start_key: 7480000000000001FFED5F698000000000FF00000A0380000000FF00001D5303800000FF000000005E0419A5FFED17030000000380FF000000058B0CF600FE end_key: 7480000000000001FFED5F698000000000FF00000A0380000000FF00001D7503800000FF000000005E0419A7FF1B47030000000380FF00000003181D2300FE region_epoch { conf_ver: 98 version: 445 } peers { id: 144053190 store_id: 874002 role: DemotingVoter } peers { id: 144558754 store_id: 887001 } peers { id: 144718902 store_id: 4 } peers { id: 144727509 store_id: 1 role: IncomingVoter }"] ["original region"="id: 144053189 start_key: 7480000000000001FFED5F698000000000FF00000A0380000000FF00001D5303800000FF000000005E0419A5FFED17030000000380FF000000058B0CF600FE end_key: 7480000000000001FFED5F698000000000FF00000A0380000000FF00001D7503800000FF000000005E0419A7FF1B47030000000380FF00000003181D2300FE region_epoch { conf_ver: 96 version: 445 } peers { id: 144053190 store_id: 874002 } peers { id: 144558754 store_id: 887001 } peers { id: 144718902 store_id: 4 } peers { id: 144727509 store_id: 1 role: Learner }"] [changes="[peer { id: 144727509 store_id: 1 }, change_type: AddLearnerNode peer { id: 144053190 store_id: 874002 role: Learner }]"] [peer_id=144558754] [region_id=144053189]

> [2024/06/11 04:04:59.417 -04:00] [INFO] [raft.rs:2660] ["switched to configuration"] [config="Configuration { voters: Configuration { incoming: Configuration { voters: {144727509, 144558754, 144718902} }, outgoing: Configuration { voters: {144558754, 144718902, 144053190} } }, learners: {}, learners_next: {144053190}, auto_leave: false }"] [raft_id=144558754] [region_id=144053189]

> [2024/06/11 04:04:59.419 -04:00] [INFO] [apply.rs:1699] ["execute admin command"] [command="cmd_type: ChangePeerV2 change_peer_v2 {}"] [index=148] [term=85] [peer_id=144558754] [region_id=144053189]

> [2024/06/11 04:04:59.419 -04:00] [INFO] [apply.rs:2292] ["exec ConfChangeV2"] [epoch="conf_ver: 98 version: 445"] [kind=LeaveJoint] [peer_id=144558754] [region_id=144053189]

> [2024/06/11 04:04:59.419 -04:00] [INFO] [apply.rs:2503] ["leave joint state successfully"] [region="id: 144053189 start_key: 7480000000000001FFED5F698000000000FF00000A0380000000FF00001D5303800000FF000000005E0419A5FFED17030000000380FF000000058B0CF600FE end_key: 7480000000000001FFED5F698000000000FF00000A0380000000FF00001D7503800000FF000000005E0419A7FF1B47030000000380FF00000003181D2300FE region_epoch { conf_ver: 100 version: 445 } peers { id: 144053190 store_id: 874002 role: Learner } peers { id: 144558754 store_id: 887001 } peers { id: 144718902 store_id: 4 } peers { id: 144727509 store_id: 1 }"] [peer_id=144558754] [region_id=144053189]
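These conf-change entries show region 144053189 promoting peer 144727509 on store_id 1 to a voter (IncomingVoter during the joint state, a plain voter after LeaveJoint), while the first TiKV log resolves store_id 1 to 192.168.0.241:20161. To cross-check which stores a region's peers live on, a small parser for the `peers { ... }` part of a region descriptor may help. A sketch, assuming the descriptor format printed in these apply.rs lines; `peers_by_store` is a hypothetical helper:

```python
import re

# One "peers { id: N store_id: M [role: R] }" entry of a region descriptor,
# as printed in the apply.rs log lines above.
PEER = re.compile(r"peers \{ id: (\d+) store_id: (\d+)(?: role: (\w+))? \}")


def peers_by_store(region_text):
    """Map store_id -> (peer_id, role) for one region descriptor string.

    A peer printed without a role field is reported as "Voter", because the
    protobuf text output omits the default (Voter) role value.
    """
    return {
        int(store): (int(peer), role or "Voter")
        for peer, store, role in PEER.findall(region_text)
    }
```

Applied to the `leave joint state successfully` line, this would map store 1 to peer 144727509 as a voter, which ties the region directly to the store behind the refused 20161 connections.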

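These log entries don't look like much of a problem to me. How do I fix this? I have already restarted once, and nothing changed.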

What's strange is that all my configuration files use port 20160, so why is it trying to connect to port 20161?
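On the 20160 vs 20161 question: TiKV resolves other stores' addresses through PD (the `resolve store address ok ... [store_id=1]` line in the first log), so the address that matters is the one PD has on record for each store, not what the local configuration file of the sending node says. One way to see what PD holds is to list the stores and flag any whose port is not 20160. A minimal sketch, assuming PD's HTTP endpoint `/pd/api/v1/stores` (the same data `pd-ctl store` prints) and its usual `{"stores": [{"store": {...}}]}` JSON layout; `fetch_stores` and `stores_with_unexpected_port` are hypothetical helpers:

```python
import json
import urllib.request


def fetch_stores(pd_addr):
    """Fetch store metadata from PD's HTTP API (assumed endpoint: /pd/api/v1/stores)."""
    url = f"http://{pd_addr}/pd/api/v1/stores"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def stores_with_unexpected_port(payload, expected_port=20160):
    """Return (store_id, address, state_name) for stores not registered on expected_port."""
    result = []
    for item in payload.get("stores", []):
        store = item.get("store", {})
        addr = store.get("address", "")
        # The port is whatever follows the last ':' in "ip:port".
        port = addr.rsplit(":", 1)[-1]
        if port != str(expected_port):
            result.append((store.get("id"), addr, store.get("state_name")))
    return result
```

For example, `stores_with_unexpected_port(fetch_stores("<pd-host>:2379"))`, where 2379 is PD's default client port. TiFlash stores, if the cluster has any, register on their own port and would be listed as well.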