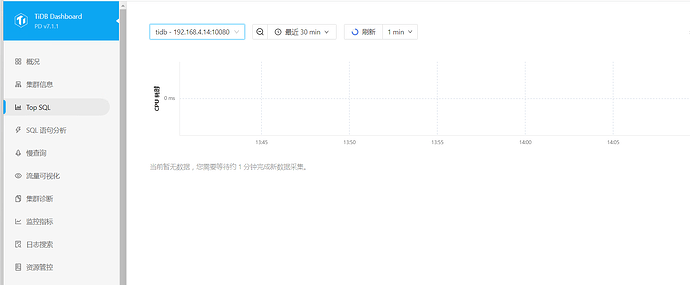

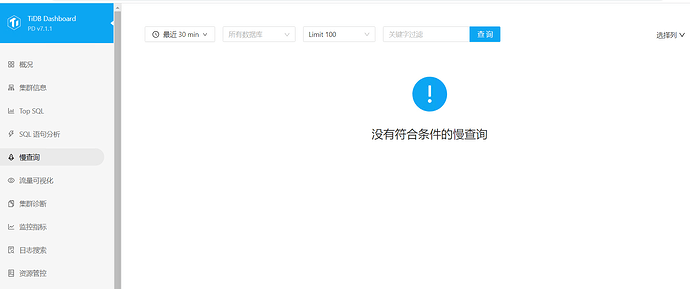

The TiDB monitoring UI is not showing any SQL statements.

Is this a newly deployed cluster?

No, it used to show them.

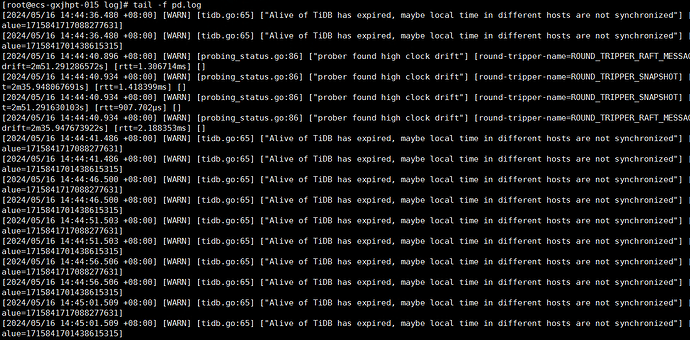

Check the logs of the Dashboard node.

OK. I suspect that node is the problem, but restarting it didn't fix anything.

Also compare against Grafana and check whether the UI node shows anything abnormal.

Did you disable the Top SQL feature?

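I haven't done anything like that. The bigger issue is that the metric graphs in the monitoring UI no longer have any data either.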

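Look at the log of the PD whose status is Up|UI, not the Grafana log. Dashboard runs on one of the PD nodes: |L in the status means that PD is the Leader, and |UI means that PD is running TiDB Dashboard.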
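To make the |UI rule above concrete, here is a minimal Python sketch that picks out the PD instance carrying the UI flag from a status listing. The function name `find_dashboard_pd`, the column layout (ID, Role, Host, Ports, OS/Arch, Status, ...), and the sample addresses are all assumptions for illustration, not output taken from this cluster; adjust the column index if your listing differs.

```python
def find_dashboard_pd(display_output: str) -> list[str]:
    """Return the IDs of PD instances whose status column carries the UI flag."""
    nodes = []
    for line in display_output.splitlines():
        fields = line.split()
        # Assumed layout: ID, Role, Host, Ports, OS/Arch, Status, ...
        if len(fields) < 6 or fields[1] != "pd":
            continue
        # A status such as "Up|L|UI" is split into its individual flags.
        status_flags = fields[5].split("|")
        if "UI" in status_flags:
            nodes.append(fields[0])
    return nodes


# Hypothetical listing, for illustration only.
sample = """\
ID              Role  Host      Ports       OS/Arch       Status  Data Dir  Deploy Dir
10.0.0.1:2379   pd    10.0.0.1  2379/2380   linux/x86_64  Up|L    /data/pd  /deploy/pd
10.0.0.2:2379   pd    10.0.0.2  2379/2380   linux/x86_64  Up|UI   /data/pd  /deploy/pd
10.0.0.3:4000   tidb  10.0.0.3  4000/10080  linux/x86_64  Up      -         /deploy/tidb
"""

print(find_dashboard_pd(sample))
```

The instance it returns is the one whose PD log is worth reading for Dashboard problems; the Leader (|L) may be a different node.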

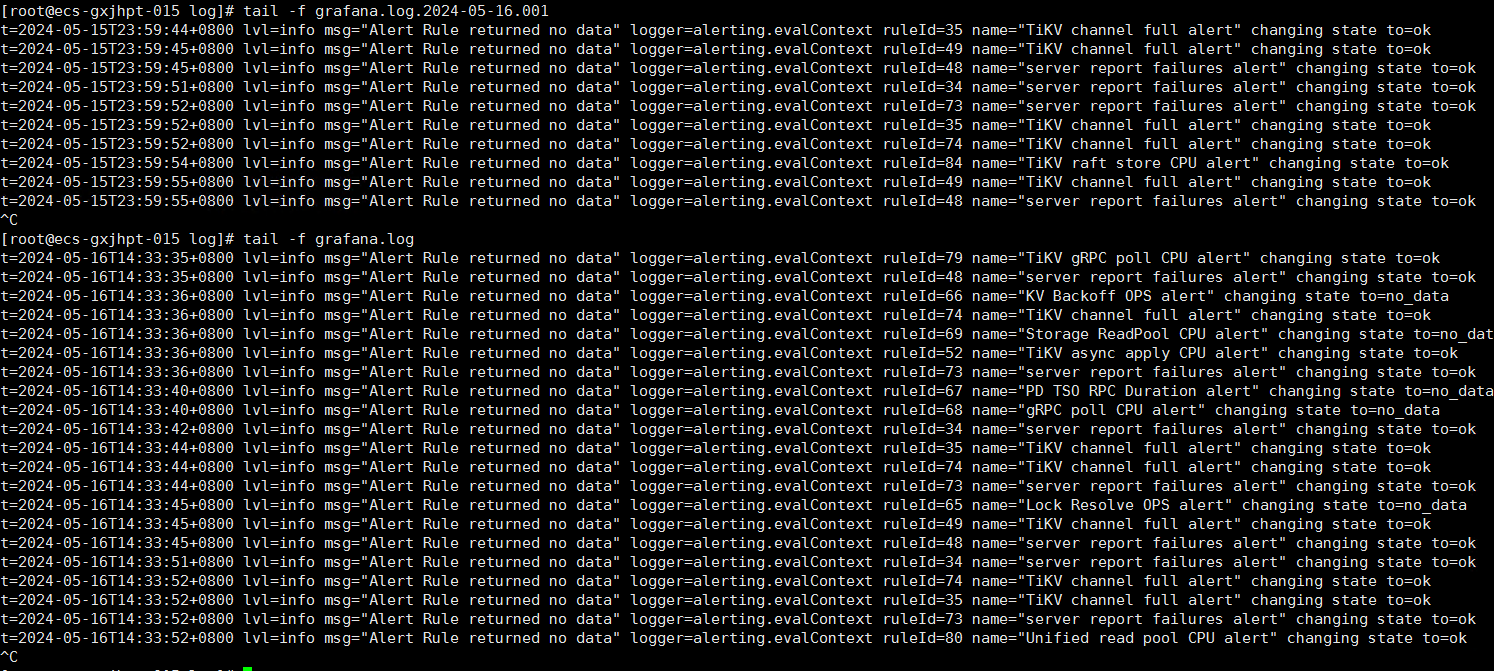

Isn't this the Grafana log? You need to look at the log of the corresponding PD node.

OK, I'll take a look now.

Top SQL can be switched on and off online; check whether it has been turned off.

It's turned on, but there is no data.

Is the cluster itself healthy right now?

Everything in the cluster is normal.

Have you made any changes recently?

No, I haven't done anything to the cluster.

Grafana isn't showing any monitoring data at all.

t=2024-05-16T15:00:16+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=24 name="Scheduler worker CPU alert" changing state to=no_data

t=2024-05-16T15:00:18+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=68 name="gRPC poll CPU alert" changing state to=no_data

t=2024-05-16T15:00:18+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=39 name="Store writer CPU alert" changing state to=ok

t=2024-05-16T15:00:18+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=77 name="TiKV async apply CPU alert" changing state to=ok

t=2024-05-16T15:00:18+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=67 name="PD TSO RPC Duration alert" changing state to=no_data

t=2024-05-16T15:00:18+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=78 name="TiKV scheduler worker CPU alert" changing state to=ok

t=2024-05-16T15:00:18+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=40 name="TiKV gRPC poll CPU alert" changing state to=ok

t=2024-05-16T15:00:18+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=27 name="Append log duration alert" changing state to=no_data

t=2024-05-16T15:00:20+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=30 name="Async apply CPU alert" changing state to=no_data

t=2024-05-16T15:00:21+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=31 name="region health alert" changing state to=keep_state

t=2024-05-16T15:00:21+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=34 name="server report failures alert" changing state to=ok

t=2024-05-16T15:00:24+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=74 name="TiKV channel full alert" changing state to=ok

t=2024-05-16T15:00:24+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=35 name="TiKV channel full alert" changing state to=ok

t=2024-05-16T15:00:24+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=73 name="server report failures alert" changing state to=ok

t=2024-05-16T15:00:24+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=36 name="approximate region size alert" changing state to=no_data

t=2024-05-16T15:00:25+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=59 name="Connection Count alert" changing state to=no_data

t=2024-05-16T15:00:25+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=49 name="TiKV channel full alert" changing state to=ok

t=2024-05-16T15:00:25+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=37 name="TiKV raft store CPU alert" changing state to=ok

t=2024-05-16T15:00:25+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=48 name="server report failures alert" changing state to=ok

t=2024-05-16T15:00:26+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=42 name="TiKV Storage ReadPool CPU alert" changing state to=ok

t=2024-05-16T15:00:26+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=43 name="Unified read pool CPU alert" changing state to=ok

t=2024-05-16T15:00:26+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=66 name="KV Backoff OPS alert" changing state to=no_data
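Every line in the log above carries the message "Alert Rule returned no data"; only the resulting state differs from rule to rule. To summarize a longer log of this kind, a small Python sketch along these lines could tally the target states. The helper name `tally_states` and the regular expression are my own assumptions, not part of Grafana, and the pattern accepts both straight and curly quotes because forum software tends to rewrite them.

```python
import re
from collections import Counter

# Matches name="..." (straight or curly quotes) followed by the target state.
PATTERN = re.compile(
    r'name=["“](?P<name>[^"”]+)["”].*?changing state to=(?P<state>\w+)'
)


def tally_states(log_text: str) -> Counter:
    """Count how many alert rules moved to each state in the given log text."""
    counts = Counter()
    for line in log_text.splitlines():
        match = PATTERN.search(line)
        if match:
            counts[match.group("state")] += 1
    return counts


# Three lines copied from the log in this thread.
sample_log = """\
t=2024-05-16T15:00:16+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=24 name="Scheduler worker CPU alert" changing state to=no_data
t=2024-05-16T15:00:18+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=39 name="Store writer CPU alert" changing state to=ok
t=2024-05-16T15:00:21+0800 lvl=info msg="Alert Rule returned no data" logger=alerting.evalContext ruleId=31 name="region health alert" changing state to=keep_state
"""

for state, count in sorted(tally_states(sample_log).items()):
    print(state, count)
```

If every evaluated rule reports no data, as seems to be the case here, that would suggest the data source behind Grafana has stopped receiving metrics, rather than a fault in any single panel or alert rule.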