【TiDB environment】Production

【TiDB version】v6.5.9

【Reproduction steps】What operations led to the problem

The Kafka disk filled up, so data could no longer be written to Kafka.

【Problem encountered: symptoms and impact】

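Because the Kafka disk was full, the changefeed could not write any data. TiCDC stopped the task on its own and the changefeed went into the `warning` state with the following error: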

    {
      "upstream_id": 7358011227008144308,
      "namespace": "default",
      "id": "sms-record-sync",
      "state": "warning",
      "checkpoint_tso": 449549867346296837,
      "checkpoint_time": "2024-05-05 16:10:49.728",
      "error": {
        "time": "2024-05-08T11:51:07.591737751+08:00",
        "addr": "172.22.147.102:8300",
        "code": "CDC:ErrProcessorUnknown",
        "message": "table sink stuck"
      }
    }
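
The `checkpoint_tso` can be decoded to see how far the changefeed had fallen behind when the error was raised. A TiDB/PD TSO keeps Unix time in milliseconds in its high bits and an 18-bit logical counter in its low bits. The Go sketch below assumes that layout; `decodeTSO` is a hypothetical helper written for this post, not a TiCDC API. Decoding gives 2024-05-05 16:10:49.728 (UTC+8) with logical counter 5, matching the reported `checkpoint_time`, so the checkpoint had not advanced for about 67h40m when the error was recorded on 2024-05-08.

```go
package main

import (
	"fmt"
	"time"
)

// physicalShiftBits is the number of low-order bits a TSO reserves for its
// logical counter (assumed layout); the high bits hold Unix time in ms.
const physicalShiftBits = 18

// decodeTSO is a hypothetical helper that splits a TSO into its wall-clock
// time and its logical counter.
func decodeTSO(tso uint64) (time.Time, uint64) {
	physicalMs := int64(tso >> physicalShiftBits)
	logical := tso & ((1 << physicalShiftBits) - 1)
	return time.UnixMilli(physicalMs), logical
}

func main() {
	cst := time.FixedZone("UTC+8", 8*60*60)

	// checkpoint_tso reported by the changefeed above.
	checkpoint, logical := decodeTSO(449549867346296837)

	// error.time reported by the changefeed above.
	errTime, err := time.Parse(time.RFC3339Nano, "2024-05-08T11:51:07.591737751+08:00")
	if err != nil {
		panic(err)
	}

	fmt.Println("checkpoint:", checkpoint.In(cst).Format("2006-01-02 15:04:05.000"), "logical:", logical)
	fmt.Println("checkpoint lag at error time:", errTime.Sub(checkpoint))
}
```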

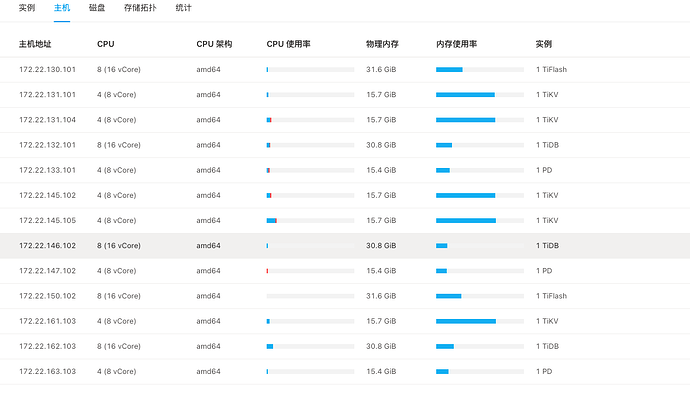

【Resource configuration】Go to TiDB Dashboard > Cluster Info > Hosts and take a screenshot of this page

【Attachments: screenshots/logs/monitoring】

The relevant TiCDC error logs are as follows:

    [2024/05/08 11:55:38.178 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7269] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.161.103:20160] [storeID=2] [streamID=19852] [error="context canceled"] [errorVerbose="context canceled\ngithub.com/pingcap/errors.AddStack\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/errors.go:174\ngithub.com/pingcap/errors.Trace\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/juju_adaptor.go:15\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).eventHandler\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:480\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).run.func4\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:654\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\tgolang.org/x/sync@v0.5.0/errgroup/errgroup.go:75\nruntime.goexit\n\truntime/asm_amd64.s:1594"]
    [2024/05/08 11:55:38.178 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7269] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.145.102:20160] [storeID=3] [streamID=19886] [error="context canceled"] [errorVerbose="context canceled\ngithub.com/pingcap/errors.AddStack\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/errors.go:174\ngithub.com/pingcap/errors.Trace\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/juju_adaptor.go:15\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).eventHandler\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:480\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).run.func4\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:654\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\tgolang.org/x/sync@v0.5.0/errgroup/errgroup.go:75\nruntime.goexit\n\truntime/asm_amd64.s:1594"]
    [2024/05/08 11:55:38.178 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7269] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.131.104:20160] [storeID=11] [streamID=19897] [error="context canceled"] [errorVerbose="context canceled\ngithub.com/pingcap/errors.AddStack\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/errors.go:174\ngithub.com/pingcap/errors.Trace\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/juju_adaptor.go:15\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).resolveLock\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:300\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).run.func3\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:651\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\tgolang.org/x/sync@v0.5.0/errgroup/errgroup.go:75\nruntime.goexit\n\truntime/asm_amd64.s:1594"]
    [2024/05/08 11:55:38.178 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7269] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.131.101:20160] [storeID=10] [streamID=19869] [error="context canceled"] [errorVerbose="context canceled\ngithub.com/pingcap/errors.AddStack\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/errors.go:174\ngithub.com/pingcap/errors.Trace\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/juju_adaptor.go:15\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).eventHandler\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:480\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).run.func4\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:654\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\tgolang.org/x/sync@v0.5.0/errgroup/errgroup.go:75\nruntime.goexit\n\truntime/asm_amd64.s:1594"]
    [2024/05/08 11:55:38.178 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7269] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.145.105:20160] [storeID=1] [streamID=19833] [error="context canceled"] [errorVerbose="context canceled\ngithub.com/pingcap/errors.AddStack\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/errors.go:174\ngithub.com/pingcap/errors.Trace\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/juju_adaptor.go:15\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).eventHandler\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:480\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).run.func4\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:654\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\tgolang.org/x/sync@v0.5.0/errgroup/errgroup.go:75\nruntime.goexit\n\truntime/asm_amd64.s:1594"]
    [2024/05/08 11:55:38.236 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7140] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.131.104:20160] [storeID=11] [streamID=19707] [error="context canceled"] [errorVerbose="context canceled\ngithub.com/pingcap/errors.AddStack\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/errors.go:174\ngithub.com/pingcap/errors.Trace\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/juju_adaptor.go:15\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).resolveLock\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:300\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).run.func3\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:651\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\tgolang.org/x/sync@v0.5.0/errgroup/errgroup.go:75\nruntime.goexit\n\truntime/asm_amd64.s:1594"]
    [2024/05/08 11:55:38.236 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7140] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.131.101:20160] [storeID=10] [streamID=19690] [error="context canceled"] [errorVerbose="context canceled\ngithub.com/pingcap/errors.AddStack\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/errors.go:174\ngithub.com/pingcap/errors.Trace\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/juju_adaptor.go:15\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).resolveLock\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:300\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).run.func3\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:651\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\tgolang.org/x/sync@v0.5.0/errgroup/errgroup.go:75\nruntime.goexit\n\truntime/asm_amd64.s:1594"]
    [2024/05/08 11:55:38.237 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7140] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.145.105:20160] [storeID=1] [streamID=19670] [error="context canceled"] [errorVerbose="context canceled\ngithub.com/pingcap/errors.AddStack\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/errors.go:174\ngithub.com/pingcap/errors.Trace\n\tgithub.com/pingcap/errors@v0.11.5-0.20220729040631-518f63d66278/juju_adaptor.go:15\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).resolveLock\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:300\ngithub.com/pingcap/tiflow/cdc/kv.(*regionWorker).run.func3\n\tgithub.com/pingcap/tiflow/cdc/kv/region_worker.go:651\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\tgolang.org/x/sync@v0.5.0/errgroup/errgroup.go:75\nruntime.goexit\n\truntime/asm_amd64.s:1594"]
    [2024/05/08 11:55:38.237 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7140] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.161.103:20160] [storeID=2] [streamID=19675] [error="context canceled"]
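
With many near-identical entries, it can help to tally the bracketed `key=value` fields to see which tables and error values are involved. The Go sketch below assumes every entry follows the `[key=value]` layout shown in these logs; `parseFields` is a hypothetical helper, and the sample lines are abbreviated copies of two entries with `errorVerbose` dropped. Across all the entries above, every region worker on tables 7269 and 7140 reports `error="context canceled"`, which suggests the workers stopped because their context was cancelled (for example, while the table or changefeed was being torn down) rather than recording the root cause themselves.

```go
package main

import (
	"fmt"
	"regexp"
	"sort"
)

// fieldRe matches bracketed key=value fields such as [tableID=7269] or
// [error="context canceled"]. Quoted values may contain escaped characters
// (e.g. \n and \t in errorVerbose); unquoted values run up to the next ']'.
var fieldRe = regexp.MustCompile(`\[(\w+)=("(?:[^"\\]|\\.)*"|[^\]]*)\]`)

// parseFields is a hypothetical helper: it returns the key=value fields of one
// log line, keeping any surrounding quotes on the value.
func parseFields(line string) map[string]string {
	fields := make(map[string]string)
	for _, m := range fieldRe.FindAllStringSubmatch(line, -1) {
		fields[m[1]] = m[2]
	}
	return fields
}

func main() {
	// Abbreviated copies of two entries from the logs above (errorVerbose dropped).
	lines := []string{
		`[2024/05/08 11:55:38.178 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7269] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.161.103:20160] [storeID=2] [streamID=19852] [error="context canceled"]`,
		`[2024/05/08 11:55:38.236 +08:00] [ERROR] [client.go:1068] ["region worker exited with error"] [namespace=default] [changefeed=sms-record-sync] [tableID=7140] [tableName=itnio_sms_record.tbsendrcd] [store=172.22.131.104:20160] [storeID=11] [streamID=19707] [error="context canceled"]`,
	}

	// Count entries per (tableID, error) pair.
	counts := make(map[string]int)
	for _, line := range lines {
		f := parseFields(line)
		counts[fmt.Sprintf("tableID=%s error=%s", f["tableID"], f["error"])]++
	}

	// Print the tallies in a stable order.
	keys := make([]string, 0, len(counts))
	for k := range counts {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	for _, k := range keys {
		fmt.Printf("%s count=%d\n", k, counts[k])
	}
}
```

Feeding the full log file line by line (for example via `bufio.Scanner`) instead of the two hard-coded samples would give the same kind of summary for the whole incident window.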