【 TiDB 使用环境】生产环境

【 TiDB 版本】V4.0.9

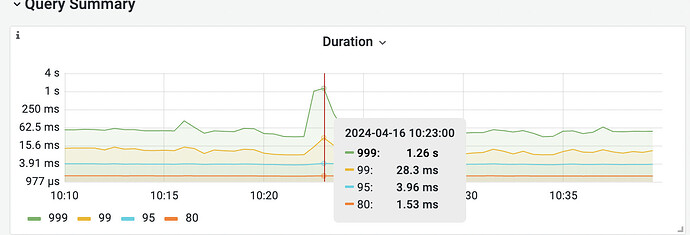

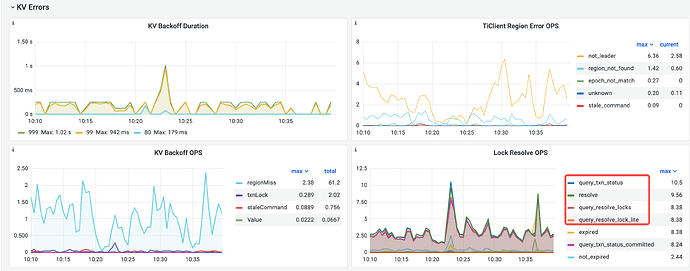

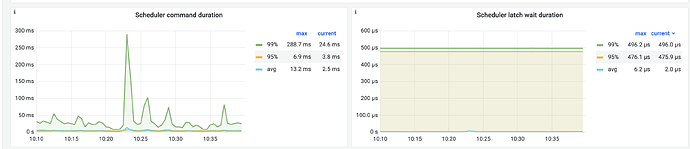

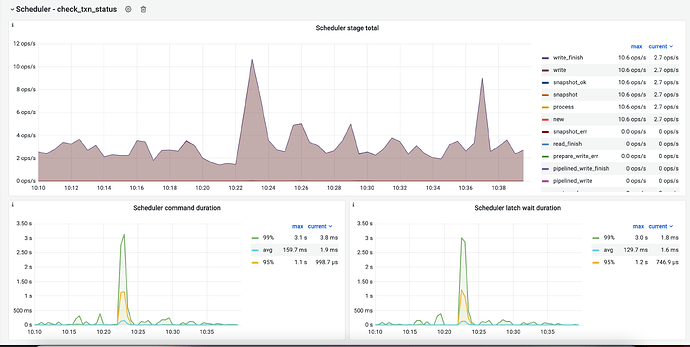

【复现路径】乐观事务模式下集群抖动

【遇到的问题:问题现象及影响】

业务使用乐观事务进行DML操作,每天会有偶发数据库抖动,通过日志发现是key is locked导致SQL执行操作3秒(MAX_EXECUTION_TIME设置为3秒)被集群强制KILL导致业务抖动。

疑问一:导致这种情况的根因没有找到。

疑问二:乐观事务下,在监控和日志中没有出现txnLockFast关键字,是不是就意味着没有读写冲突?

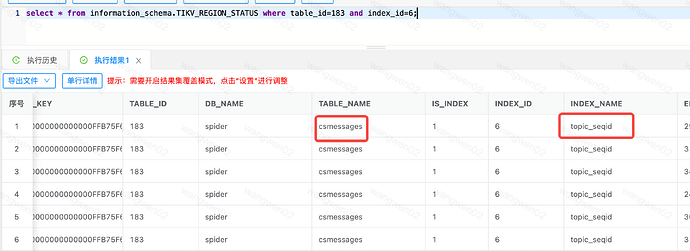

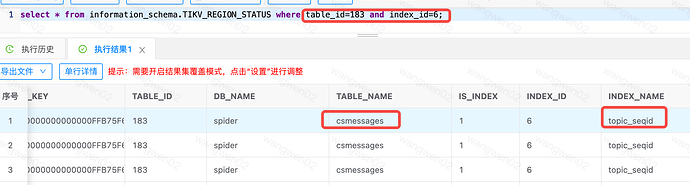

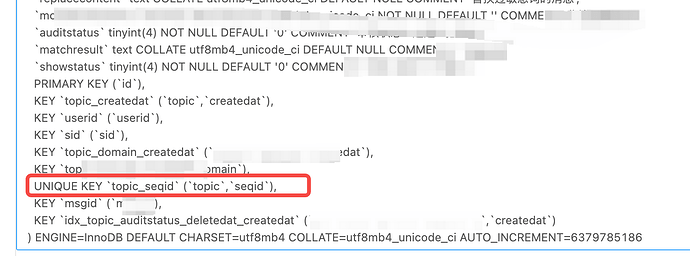

疑问三:csmessage表有主键和唯一约束,并存在7个索引和json类型字段,单条insert大小5K左右,这种insert耗时在prewrite阶段,如果改成悲观事务,会有改善嘛?

【资源配置】进入到 TiDB Dashboard -集群信息 (Cluster Info) -主机(Hosts) 截图此页面

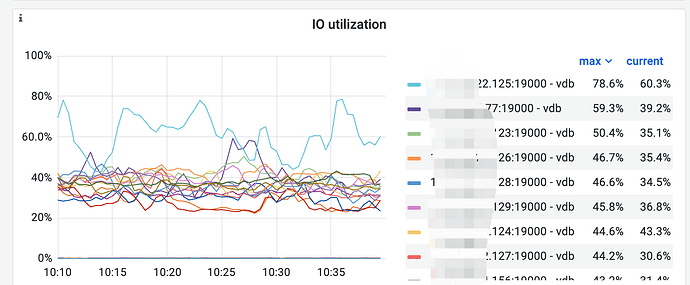

8个TiDB Server(16C64G)+3个PD Server(8C32G)+15个TiKV(16C64G2T)

【附件:截图/日志/监控】

TiKV日志:

[2024/04/16 10:22:18.146 +08:00] [INFO] [apply.rs:1653] [“add learner successfully”] [region=“id: 3918269 start_key: 7480000000000000FF8D5F698000000000FF0000020167727079FF51337964FF693748FF6762550000FD0380FF0000000000000003FF8000000000000084FF038000000006F979FFD400000000000000F8 end_key: 7480000000000000FF8D5F698000000000FF0000020167727079FF575F3835FF716D49FF7844410000FD0380FF0000000000000003FF8000000000000000FF038000000004B48EFF5800000000000000F8 region_epoch { conf_ver: 4169 version: 523 } peers { id: 72440460 store_id: 49536096 } peers { id: 72562810 store_id: 49536098 } peers { id: 72570023 store_id: 22204 }”] [peer=“id: 72589510 store_id: 8992685 is_learner: true”] [peer_id=72562810] [region_id=3918269]

[2024/04/16 10:22:18.155 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 7480000000000000B75F69800000000000000101677270434941332DFF62355F624F340000FD0419B320258F09BC3003800000017B88469D lock_version: 449114049285981951 key: 7480000000000000B75F698000000000000007016664363036343162FF2D333665312D3433FF64632D626538312DFF3363393665333762FF6131366200000000FB03800000017B88469D lock_ttl: 3000 txn_size: 1”]

[2024/04/16 10:22:18.160 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 74800000000000008B5F728000000046F32FF1 lock_version: 449114049508802669 key: 74800000000000008B5F728000000046F32FF1 lock_ttl: 3000 txn_size: 1”]

[2024/04/16 10:22:18.193 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 74800000000000008B5F728000000046F32FF1 lock_version: 449114049508802669 key: 74800000000000008B5F728000000046F32FF1 lock_ttl: 3000 txn_size: 1”]

[2024/04/16 10:22:18.317 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 74800000000000008B5F72800000000048561E lock_version: 449114049836482580 key: 74800000000000008B5F72800000000048561E lock_ttl: 3000 txn_size: 1”]

[2024/04/16 10:22:18.408 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 74800000000000008B5F69800000000000000101677270694B4C3534FF4364367969550000FD038AD89484A6002000 lock_version: 449114049390839117 key: 74800000000000008B5F69800000000000000201677270694B4C3534FF4364367969550000FD03800000004BF8C87A lock_ttl: 3000 txn_size: 1”]

[2024/04/16 10:22:18.918 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 7480000000000000B75F69800000000000000101677270694E6B4676FF70514346656B0000FD0419B32025920C9A9003800000017B87D7BC lock_version: 449114050164165210 key: 7480000000000000B75F69800000000000000601677270694E6B4676FF70514346656B0000FD038000000000000050 lock_ttl: 3000 txn_size: 1”]

[2024/04/16 10:22:19.101 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 7480000000000000B75F698000000000000001016772706C4A4B6A63FF5475546B4C510000FD0419B320259303EBE803800000017B876A54 lock_version: 449114050190379217 key: 7480000000000000B75F698000000000000006016772706C4A4B6A63FF5475546B4C510000FD03800000000000001C lock_ttl: 3000 txn_size: 1”]

[2024/04/16 10:22:19.119 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 7480000000000000B75F698000000000000001016772706C4A4B6A63FF5475546B4C510000FD0419B320259303EBE803800000017B876A54 lock_version: 449114050190379217 key: 7480000000000000B75F698000000000000006016772706C4A4B6A63FF5475546B4C510000FD03800000000000001C lock_ttl: 3000 txn_size: 1”]

[2024/04/16 10:22:19.180 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 7480000000000000B75F698000000000000001016772707A7A713052FF48656B474A730000FD0419B32025930449A803800000017B88473D lock_version: 449114050242807795 key: 7480000000000000B75F698000000000000006016772707A7A713052FF48656B474A730000FD038000000000000008 lock_ttl: 3000 txn_size: 1”]

[2024/04/16 10:22:19.189 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 7480000000000000B75F698000000000000001016772705569655566FF596F79356F300000FD0419B3202593049F9803800000017B88ABED lock_version: 449114050242806941 key: 7480000000000000B75F698000000000000006016772705569655566FF596F79356F300000FD0380000000000003A4 lock_ttl: 3000 txn_size: 1”]

[2024/04/16 10:22:19.190 +08:00] [WARN] [endpoint.rs:530] [error-response] [err=“Key is locked (will clean up) primary_lock: 74800000000000008B5F728000000034931AAA lock_version: 449114050190378030 key: 74800000000000008B5F728000000034931AAA lock_ttl: 3204 txn_size: 1”]

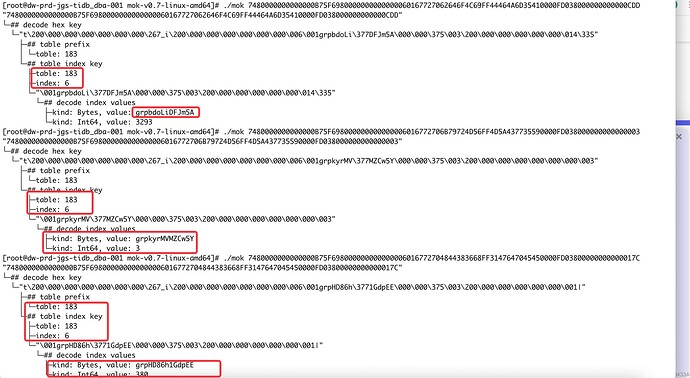

MOK信息

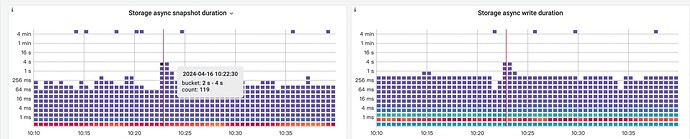

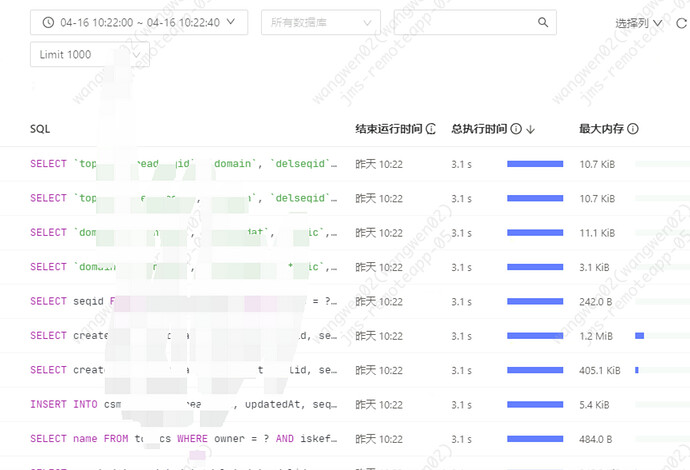

监控信息