GPT-written code that reads the NASDAQ-100 ticker symbols and stores the data in TiDB

import pandas as pd
import requests
import yfinance as yf
from sqlalchemy import create_engine

# List of NASDAQ-100 ticker symbols (filled in from the Nasdaq API below)
nasdaq_top_100 = []

# Set the request headers
headers = {
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.107 Safari/537.36"
}

# Send the GET request
res = requests.get("https://api.nasdaq.com/api/quote/list-type/nasdaq100", headers=headers, timeout=30)

# Parse the JSON data
main_data = res.json()['data']['data']['rows']

# Print each ticker symbol and collect it
for row in main_data:
    print(row['symbol'])
    nasdaq_top_100.append(row['symbol'])

# 创建一个空的列表来存储每家公司的DataFrame

dataframes = []

# 遍历股票代码列表

for ticker in nasdaq_top_100:

# 获取股票数据

stock = yf.Ticker(ticker)

# 获取财务报表数据

balance_sheet = stock.balance_sheet

income_statement = stock.financials

# 计算ROE

net_income = income_statement.loc['Net Income']

shareholder_equity = balance_sheet.loc['Stockholders Equity']

roe = net_income / shareholder_equity

# 创建一个DataFrame来存储当前公司的ROE

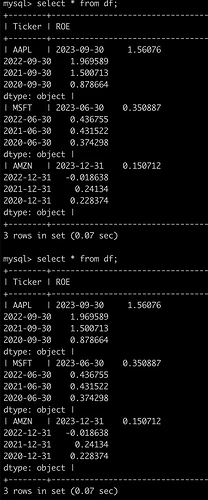

df = pd.DataFrame({'Ticker': [ticker], 'ROE': [roe]})

# 将DataFrame添加到列表中

dataframes.append(df)

# 使用pd.concat合并所有公司的DataFrame

roe_df = pd.concat(dataframes)

# 输出结果

print(roe_df)

# url = 'mysql+pymysql://username:password@hostname:port/dbname?charset=utf8'
# engine = create_engine(url, echo=False)

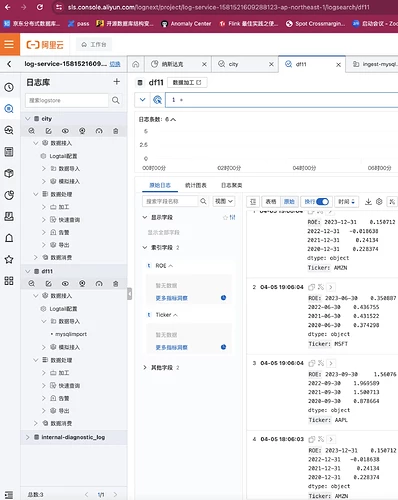

engine = create_engine('mysql+pymysql://username:password@ip/test')
roe_df.to_sql('df', engine, index=False, method="multi", chunksize=10000, if_exists='replace')
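The loop above stops with a `KeyError` as soon as one company's statements lack a `Net Income` or `Stockholders Equity` row, and a company with zero or negative equity produces a meaningless ratio. Here is a minimal sketch of a more defensive ROE calculation. The helper name `roe_by_period` is my own, not part of yfinance, and it only assumes the two statements are DataFrames with report dates as columns.

```python
import pandas as pd


def roe_by_period(income_statement: pd.DataFrame, balance_sheet: pd.DataFrame) -> pd.Series:
    """Return ROE per report date; empty Series if a required row is missing.

    Hypothetical helper for illustration, not part of yfinance.
    """
    if ("Net Income" not in income_statement.index
            or "Stockholders Equity" not in balance_sheet.index):
        return pd.Series(dtype=float)

    # Coerce to numbers so stray None/object values become NaN
    net_income = pd.to_numeric(income_statement.loc["Net Income"], errors="coerce")
    equity = pd.to_numeric(balance_sheet.loc["Stockholders Equity"], errors="coerce")

    # Zero or negative equity makes the ratio meaningless, so mask it out
    equity = equity.where(equity > 0)

    # Division aligns on report date; drop periods where either side is missing
    return (net_income / equity).dropna()
```

Inside the loop, a ticker whose result is empty could simply be skipped with `continue` instead of aborting the whole run.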

Wrapping up

If there's something you don't understand, just ask GPT.

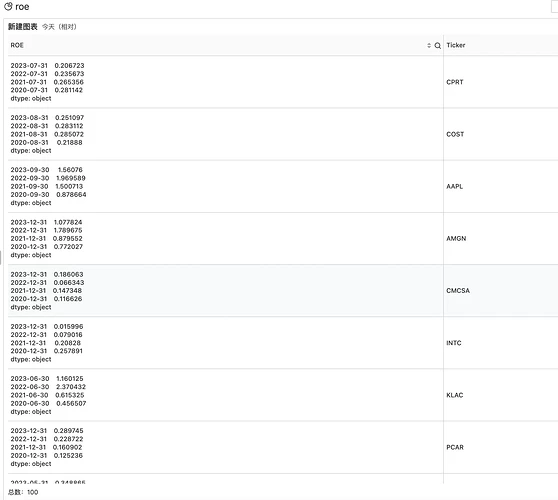

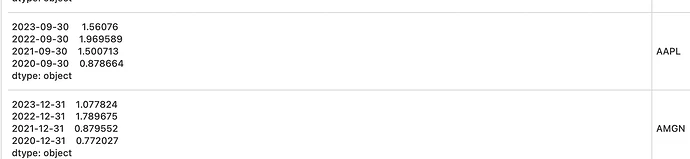

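Before this, I only knew that Microsoft and NVIDIA were highly profitable. Running this code showed me that NASDAQ biotech companies are also extremely profitable, with numbers as scary as Apple's. One quick query on the results, and biopharma comes out at No. 1.

Data analysis really can help us get better returns.

Writing code like this used to be quite hard. With GPT, it has become remarkably easy.

So the seven big US growth and tech stocks that the market calls the "Magnificent Seven" (Apple, Microsoft, Alphabet, Amazon, NVIDIA, Tesla, and Meta Platforms) need biopharma added to the list.

Next, I'll import the NASDAQ index candlestick (K-line) data into TiDB.

Once I also write in my own investment amounts, I can calculate my returns myself.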
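As a rough sketch of that return calculation: assume each purchase is a (date, amount) pair and the closing prices are a pandas Series indexed by date, sorted oldest first. The function name `portfolio_return` and the input layout are my own assumptions, and the result is a simple return on total money invested, not an annualized or time-weighted figure.

```python
import pandas as pd


def portfolio_return(purchases, closes: pd.Series) -> float:
    """Simple return on the total amount invested.

    purchases: iterable of (date string, amount invested) pairs (hypothetical layout)
    closes:    closing prices indexed by date, sorted in ascending order
    """
    units = 0.0
    invested = 0.0
    for day, amount in purchases:
        # Last available close on or before the purchase date
        price = closes.asof(pd.Timestamp(day))
        units += amount / price
        invested += amount

    # Value all units at the most recent close
    current_value = units * closes.iloc[-1]
    return current_value / invested - 1
```

A purchase dated before the first price in `closes` yields NaN, so the price history has to reach back at least as far as the first purchase.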

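I'll think about other approaches later. Once I can open a brokerage account abroad, domestic funds will no longer get to squeeze management fees out of me. A 2% management fee is pretty outrageous.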
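To put a number on how much a 2% fee costs over time, here is a small sketch. It assumes a constant yearly return and a fee charged once a year on the year-end balance, which is a simplification (real funds typically accrue the fee daily), and the 10% return in the usage note is a made-up figure, not a forecast.

```python
def final_value(principal: float, annual_return: float, annual_fee: float, years: int) -> float:
    """Value after `years`, with the fee taken from the balance at each year end."""
    yearly_growth = (1 + annual_return) * (1 - annual_fee)
    return principal * yearly_growth ** years


def fee_cost(principal: float, annual_return: float, annual_fee: float, years: int) -> float:
    """Money given up to fees compared with the same investment at zero fee."""
    without_fee = final_value(principal, annual_return, 0.0, years)
    with_fee = final_value(principal, annual_return, annual_fee, years)
    return without_fee - with_fee
```

Calling `fee_cost(10000, 0.10, 0.02, 10)` shows how much of a 10,000 investment the fee would eat over ten years under these assumptions. The gap widens every year because each fee also removes the future growth of the money it takes.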

import pymysql
import requests
import yfinance as yf
from datetime import datetime, timedelta

# Define database connection parameters
db_params = {
    'host': 'ip',
    'user': 'username',
    'password': 'password',
    'db': 'test'
}

# Connect to the MySQL database
connection = pymysql.connect(**db_params)
cursor = connection.cursor()

# Create a new table for NASDAQ 100 data.
# The ticker column is added here so rows from different stocks can be told apart.
create_table_query = """
CREATE TABLE IF NOT EXISTS nasdaq_100s (
    ticker VARCHAR(16),
    date DATE,
    open FLOAT,
    high FLOAT,
    low FLOAT,
    close FLOAT,
    adj_close FLOAT,
    volume BIGINT
)
"""
cursor.execute(create_table_query)

# List of NASDAQ-100 ticker symbols (filled in from the Nasdaq API below)
nasdaq_top_100 = []

# Set the request headers
headers = {
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.107 Safari/537.36"
}

# Send the GET request
res = requests.get("https://api.nasdaq.com/api/quote/list-type/nasdaq100", headers=headers, timeout=30)

# Parse the JSON data
main_data = res.json()['data']['data']['rows']

# Print each ticker symbol and collect it
for row in main_data:
    print(row['symbol'])
    nasdaq_top_100.append(row['symbol'])

# Calculate the date 10 years ago from today
ten_years_ago = datetime.now() - timedelta(days=3650)

insert_query = """
INSERT INTO nasdaq_100s (ticker, date, open, high, low, close, adj_close, volume)
VALUES (%s, %s, %s, %s, %s, %s, %s, %s)
"""

for ticker_symbol in nasdaq_top_100:
    # Fetch the historical data from yfinance.
    # auto_adjust=False keeps the separate 'Adj Close' column.
    data = yf.download(ticker_symbol, start=ten_years_ago.strftime('%Y-%m-%d'), auto_adjust=False)

    # Insert the data into the MySQL table.
    # NumPy scalars are converted to plain Python numbers, which pymysql can serialize.
    for date, row in data.iterrows():
        cursor.execute(insert_query, (
            ticker_symbol, date.date(),
            float(row['Open']), float(row['High']), float(row['Low']),
            float(row['Close']), float(row['Adj Close']), int(row['Volume']),
        ))

# Commit changes and close the connection
connection.commit()
cursor.close()
connection.close()
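Calling `cursor.execute` once per row means one round trip per trading day per stock. A sketch of a batched variant: first turn each downloaded DataFrame into a list of plain tuples, then hand the whole list to `cursor.executemany`. The helper `to_rows`, the constant `INSERT_SQL`, and the `ticker` column in it are my own assumptions for illustration, and the database call itself is left as a comment because it needs a live connection.

```python
import pandas as pd

# Hypothetical statement; assumes the table has a ticker column
INSERT_SQL = """
INSERT INTO nasdaq_100s (ticker, date, open, high, low, close, adj_close, volume)
VALUES (%s, %s, %s, %s, %s, %s, %s, %s)
"""


def to_rows(ticker: str, data: pd.DataFrame) -> list:
    """Convert a price DataFrame (DatetimeIndex, OHLCV columns) into insertable tuples."""
    rows = []
    # Skip days with missing values, since int(NaN) would raise
    for day, row in data.dropna().iterrows():
        rows.append((
            ticker,
            day.date(),
            # Plain Python floats/ints instead of NumPy scalars
            float(row["Open"]), float(row["High"]), float(row["Low"]),
            float(row["Close"]), float(row["Adj Close"]), int(row["Volume"]),
        ))
    return rows


# Usage with an open pymysql cursor (not executed here):
# cursor.executemany(INSERT_SQL, to_rows(ticker_symbol, data))
```

Keeping the conversion in its own function also makes it easy to check the row format on a tiny hand-built DataFrame before touching the database.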

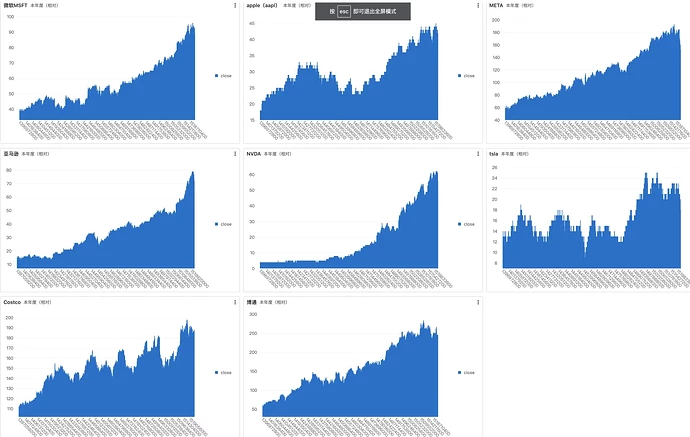

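The fund I bought is mostly spread across the following 10 stocks.

Charting the imported data

Once the data is cleaned up, the next step is plotting.

This is where my money went: Microsoft 10%, Apple 10%, NVDA 2%, Costco 2%, Broadcom 2%.

Of these, MSFT runs Azure, Amazon runs AWS, and Google runs Google Cloud: three clouds. NVDA is AI, Costco is supermarkets, Broadcom is chips, and Meta is social.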

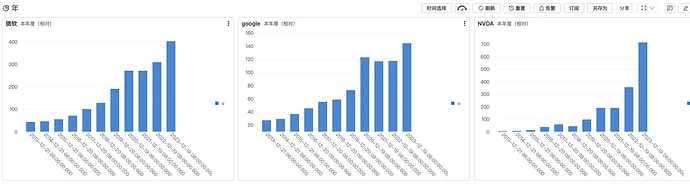

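What do these stocks look like when viewed year by year?

TiDB is, at heart, also a database cloud company.

Behind these charts, it all comes down to SQL statements: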

select FROM_UNIXTIME(UNIX_TIMESTAMP(date) - UNIX_TIMESTAMP(date) % 31536000) as stamp, avg(close) as v from nasdaq_100s group by stamp order by stamp limit 100000
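The same yearly roll-up can be checked on the pandas side before the data ever reaches the database. This is a sketch that assumes a DataFrame with `date` and `close` columns named like the table's; the function name is my own. Note that it groups by calendar year, whereas buckets of 31536000 seconds are fixed 365-day windows counted from the Unix epoch, so the two drift apart slightly because of leap days.

```python
import pandas as pd


def yearly_average_close(prices: pd.DataFrame) -> pd.Series:
    """Average closing price per calendar year.

    prices: DataFrame with a 'date' column and a 'close' column (assumed layout)
    """
    dates = pd.to_datetime(prices["date"])
    # Group rows by the calendar year of their date and average the close
    return prices.groupby(dates.dt.year)["close"].mean()
```

The result is indexed by year, so `yearly_average_close(df).loc[2023]` gives the 2023 average.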

To close the article, a recommendation for a serious book.

If you found this useful, give it a like.