As the title says: with TiDB 8.0 in PD microservice (ms) mode, tiup playground cannot be started a second time.

The first time I use PD ms mode, the cluster starts normally:

[tidb@shawnyan ~ 14:53:52]$ rm -rf .tiup/data/

[tidb@shawnyan ~ 14:53:57]$ tiup playground v8.0.0 --tag v8 --pd.mode ms --pd.api 1 --pd.tso 1 --pd.scheduling 1 --without-monitor --host 192.168.8.161

Start pd api instance:v8.0.0

Start pd tso instance:v8.0.0

Start pd scheduling instance:v8.0.0

Start pd resource_manager instance:v8.0.0

Start tikv instance:v8.0.0

Start tidb instance:v8.0.0

Waiting for tidb instances ready

192.168.8.161:4000 ... Done

Start tiflash instance:v8.0.0

userConfig map[flash:map[proxy:map[config:/home/tidb/.tiup/data/v8/tiflash-0/tiflash_proxy.toml]] logger:map[level:debug]]

Waiting for tiflash instances ready

192.168.8.161:3930 ... Done

🎉 TiDB Playground Cluster is started, enjoy!

Connect TiDB: mysql --comments --host 192.168.8.161 --port 4000 -u root

TiDB Dashboard: http://192.168.8.161:2379/dashboard

^CPlayground receive signal: interrupt

Got signal interrupt (Component: playground ; PID: 116918)

Wait tiflash(117126) to quit...

^CForce tiflash(117126) to quit...

Got signal interrupt (Component: playground ; PID: 116918)

tiflash quit

Force tidb(116977) to quit...

tidb quit

Force tikv(116955) to quit...

tikv quit

Force pd api(116928) to quit...

pd api quit

Force pd tso(116935) to quit...

pd tso quit

Force pd scheduling(116941) to quit...

pd scheduling quit

Force pd resource_manager(116948) to quit...

pd resource_manager quit

[tidb@shawnyan ~ 14:54:41]$

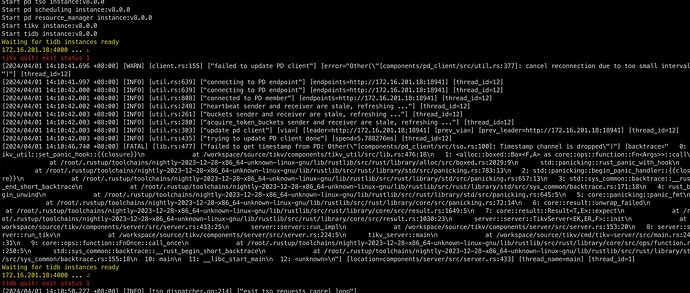

After stopping the cluster and starting it again, it fails with an error:

[tidb@shawnyan ~ 14:54:43]$ tiup playground v8.0.0 --tag v8 --pd.mode ms --pd.api 1 --pd.tso 1 --pd.scheduling 1 --without-monitor

Start pd api instance:v8.0.0

Start pd tso instance:v8.0.0

Start pd scheduling instance:v8.0.0

Start pd resource_manager instance:v8.0.0

Start tikv instance:v8.0.0

Start tidb instance:v8.0.0

Waiting for tidb instances ready

127.0.0.1:4000 ... ⠹

tikv quit: exit status 1

[2024/03/28 14:54:53.575 +08:00] [WARN] [client.rs:155] ["failed to update PD client"] [error="Other(\"[components/pd_client/src/util.rs:377]: cancel reconnection due to too small interval\")"] [thread_id=12]

[2024/03/28 14:54:53.876 +08:00] [INFO] [util.rs:639] ["connecting to PD endpoint"] [endpoints=http://127.0.0.1:2379] [thread_id=12]

[2024/03/28 14:54:53.877 +08:00] [INFO] [util.rs:639] ["connecting to PD endpoint"] [endpoints=http://127.0.0.1:2379] [thread_id=12]

[2024/03/28 14:54:53.877 +08:00] [INFO] [util.rs:808] ["connected to PD member"] [endpoints=http://127.0.0.1:2379] [thread_id=12]

[2024/03/28 14:54:53.877 +08:00] [INFO] [util.rs:248] ["heartbeat sender and receiver are stale, refreshing ..."] [thread_id=12]

[2024/03/28 14:54:53.877 +08:00] [INFO] [util.rs:261] ["buckets sender and receiver are stale, refreshing ..."] [thread_id=12]

[2024/03/28 14:54:53.877 +08:00] [INFO] [util.rs:280] ["acquire_token_buckets sender and receiver are stale, refreshing ..."] [thread_id=12]

[2024/03/28 14:54:53.877 +08:00] [INFO] [util.rs:303] ["update pd client"] [via=] [leader=http://127.0.0.1:2379] [prev_via=] [prev_leader=http://127.0.0.1:2379] [thread_id=12]

[2024/03/28 14:54:53.877 +08:00] [INFO] [util.rs:435] ["trying to update PD client done"] [spend=1.787786ms] [thread_id=12]

[2024/03/28 14:54:59.338 +08:00] [FATAL] [lib.rs:477] ["failed to get timestamp from PD: Other(\"[components/pd_client/src/tso.rs:100]: Timestamp channel is dropped\")"] [backtrace=" 0: tikv_util::set_panic_hook::{{closure}}\n at /workspace/source/tikv/components/tikv_util/src/lib.rs:476:18\n 1: <alloc::boxed::Box<F,A> as core::ops::function::Fn<Args>>::call\n at /root/.rustup/toolchains/nightly-2023-12-28-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/alloc/src/boxed.rs:2029:9\n std::panicking::rust_panic_with_hook\n at /root/.rustup/toolchains/nightly-2023-12-28-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/panicking.rs:783:13\n 2: std::panicking::begin_panic_handler::{{closure}}\n at /root/.rustup/toolchains/nightly-2023-12-28-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/panicking.rs:657:13\n 3: std::sys_common::backtrace::__rust_end_short_backtrace\n at /root/.rustup/toolchains/nightly-2023-12-28-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/sys_common/backtrace.rs:171:18\n 4: rust_begin_unwind\n at /root/.rustup/toolchains/nightly-2023-12-28-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/panicking.rs:645:5\n 5: core::panicking::panic_fmt\n at /root/.rustup/toolchains/nightly-2023-12-28-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/core/src/panicking.rs:72:14\n 6: core::result::unwrap_failed\n at /root/.rustup/toolchains/nightly-2023-12-28-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/core/src/result.rs:1649:5\n 7: core::result::Result<T,E>::expect\n at /root/.rustup/toolchains/nightly-2023-12-28-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/core/src/result.rs:1030:23\n server::server::TikvServer<EK,ER,F>::init\n at /workspace/source/tikv/components/server/src/server.rs:433:25\n server::server::run_impl\n at /workspace/source/tikv/components/server/src/server.rs:153:20\n 8: server::server::run_tikv\n at /workspace/source/tikv/components/server/src/server.rs:224:5\n 
tikv_server::main\n at /workspace/source/tikv/cmd/tikv-server/src/main.rs:249:31\n 9: core::ops::function::FnOnce::call_once\n at /root/.rustup/toolchains/nightly-2023-12-28-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/core/src/ops/function.rs:250:5\n std::sys_common::backtrace::__rust_begin_short_backtrace\n at /root/.rustup/toolchains/nightly-2023-12-28-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/sys_common/backtrace.rs:155:18\n 10: main\n 11: __libc_start_main\n 12: <unknown>\n"] [location=components/server/src/server.rs:433] [thread_name=main] [thread_id=1]

Waiting for tidb instances ready

127.0.0.1:4000 ... ⠋

tidb quit: exit status 1
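To make the relevant entries easier to spot in output like the above, here is a minimal Python sketch that pulls the WARN/ERROR/FATAL entries out of TiKV log lines. It assumes every log line starts with the `[timestamp] [LEVEL] [file:line] ["message"]` header seen in the output above; the names `parse_log_line` and `severe_entries` are hypothetical helpers, not part of any TiDB or TiUP tooling. The sample lines are copied from the log above, with the long backtrace field of the FATAL line left out for brevity.

```python
import re

# Header layout assumed from the log lines above:
# [timestamp] [LEVEL] [file:line] ["message"] ...further fields...
# The message is a double-quoted string that may contain escaped quotes (\").
LOG_HEADER = re.compile(
    r'^\[(?P<ts>[^\]]+)\] \[(?P<level>[A-Z]+)\] \[(?P<source>[^\]]+)\] '
    r'\["(?P<msg>(?:[^"\\]|\\.)*)"\]'
)


def parse_log_line(line):
    """Return ts/level/source/msg as a dict, or None if the line has no log header."""
    match = LOG_HEADER.match(line)
    return match.groupdict() if match else None


def severe_entries(lines, levels=("WARN", "ERROR", "FATAL")):
    """Collect parsed entries whose level is in `levels`, in input order."""
    entries = []
    for line in lines:
        entry = parse_log_line(line)
        if entry is not None and entry["level"] in levels:
            entries.append(entry)
    return entries


# Lines copied from the log above (backtrace field of the FATAL line omitted).
SAMPLE = r'''[2024/03/28 14:54:53.575 +08:00] [WARN] [client.rs:155] ["failed to update PD client"] [error="Other(\"[components/pd_client/src/util.rs:377]: cancel reconnection due to too small interval\")"] [thread_id=12]
[2024/03/28 14:54:53.877 +08:00] [INFO] [util.rs:808] ["connected to PD member"] [endpoints=http://127.0.0.1:2379] [thread_id=12]
[2024/03/28 14:54:59.338 +08:00] [FATAL] [lib.rs:477] ["failed to get timestamp from PD: Other(\"[components/pd_client/src/tso.rs:100]: Timestamp channel is dropped\")"] [location=components/server/src/server.rs:433] [thread_name=main] [thread_id=1]
'''

if __name__ == "__main__":
    for entry in severe_entries(SAMPLE.splitlines()):
        print(entry["ts"], entry["level"], entry["source"], entry["msg"])
```

Lines without the bracketed header, such as `tikv quit: exit status 1`, are skipped, so the playground's own status messages can be mixed into the input.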

Also, why is the Rust toolchain in the TiKV backtrace a nightly version (nightly-2023-12-28)?

The v7.5 playground has no PD ms mode; after it is stopped, it can be started again normally.

[tidb@shawnyan ~ 18:51:58]$ tiup playground v7.5.0 --tag v7 --without-monitor

Start pd instance:v7.5.0

The component `pd` version v7.5.0 is not installed; downloading from repository.

download https://tiup-mirrors.pingcap.com/pd-v7.5.0-linux-amd64.tar.gz 49.97 MiB / 49.97 MiB 100.00% 11.50 MiB/s

Start tikv instance:v7.5.0

The component `tikv` version v7.5.0 is not installed; downloading from repository.

download https://tiup-mirrors.pingcap.com/tikv-v7.5.0-linux-amd64.tar.gz 290.85 MiB / 290.85 MiB 100.00% 11.19 MiB/s

Start tidb instance:v7.5.0

The component `tidb` version v7.5.0 is not installed; downloading from repository.

download https://tiup-mirrors.pingcap.com/tidb-v7.5.0-linux-amd64.tar.gz 78.51 MiB / 78.51 MiB 100.00% 11.41 MiB/s

Waiting for tidb instances ready

127.0.0.1:4000 ... Done

Start tiflash instance:v7.5.0

userConfig map[flash:map[proxy:map[config:/home/tidb/.tiup/data/v7/tiflash-0/tiflash_proxy.toml]] logger:map[level:debug]]

The component `tiflash` version v7.5.0 is not installed; downloading from repository.

download https://tiup-mirrors.pingcap.com/tiflash-v7.5.0-linux-amd64.tar.gz 250.76 MiB / 250.76 MiB 100.00% 11.15 MiB/s

Waiting for tiflash instances ready

127.0.0.1:3930 ... Done

🎉 TiDB Playground Cluster is started, enjoy!

Connect TiDB: mysql --comments --host 127.0.0.1 --port 4000 -u root

TiDB Dashboard: http://127.0.0.1:2379/dashboard

^CPlayground receive signal: interrupt

Got signal interrupt (Component: playground ; PID: 123357)

Wait tiflash(123539) to quit...

Wait tidb(123521) to quit...

tiflash quit

tidb quit

Wait tikv(123385) to quit...

tikv quit

Wait pd(123368) to quit...

pd quit

[tidb@shawnyan ~ 20:37:59]$

[tidb@shawnyan ~ 20:38:00]$

[tidb@shawnyan ~ 20:38:00]$ tiup playground v7.5.0 --tag v7 --without-monitor

Start pd instance:v7.5.0

Start tikv instance:v7.5.0

Start tidb instance:v7.5.0

Waiting for tidb instances ready

127.0.0.1:4000 ... Done

Start tiflash instance:v7.5.0

userConfig map[flash:map[proxy:map[config:/home/tidb/.tiup/data/v7/tiflash-0/tiflash_proxy.toml]] logger:map[level:debug]]

Waiting for tiflash instances ready

127.0.0.1:3930 ... Done

🎉 TiDB Playground Cluster is started, enjoy!

Connect TiDB: mysql --comments --host 127.0.0.1 --port 4000 -u root

TiDB Dashboard: http://127.0.0.1:2379/dashboard