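[TiDB environment] Production

[TiDB version] V6.5.8

[Problem: symptoms and impact] Hi everyone, the procedure at https://docs.pingcap.com/zh/tidb/stable/deploy-monitoring-services looks like it has quite a few steps. Is it possible to use TiUP to deploy only the monitoring components, separately from (not during) the TiDB cluster deployment?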

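Isn't that the manual deployment? When TiUP deploys Prometheus, it is one per cluster.

If you want to use TiUP to deploy monitoring, you still need to prepare the monitoring packages yourself, replace the ones bundled officially, and then use TiUP to deploy the replacements. For details, see: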

https://docs.pingcap.com/zh/tidb/stable/upgrade-monitoring-services

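Download the TiDB-community-server package from the download page on the TiDB website and extract it.

In the extracted files, find grafana-v{version}-linux-amd64.tar.gz and extract it.

When you deploy a TiDB cluster with TiUP, TiUP also automatically deploys monitoring components such as Prometheus, Grafana, and Alertmanager, and automatically adds monitoring configuration for new nodes when the cluster is scaled out. The monitoring components that TiUP deploys are not the latest versions of these third-party components. If you need the latest versions, you can upgrade the monitoring components you need by following the method in that document.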
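For context, TiUP deploys the monitoring components that are declared in the cluster topology file passed to `tiup cluster deploy`. The excerpt below is a sketch assuming a single-host test setup at 127.0.0.1 (hypothetical addresses). The section names `monitoring_servers`, `grafana_servers` and `alertmanager_servers` are standard TiUP topology sections, but check them against the topology reference for your TiUP version.

```yaml
# Hypothetical excerpt of a topology file for `tiup cluster deploy`.
# All hosts are placeholders for a single-machine test setup.
pd_servers:
  - host: 127.0.0.1
tidb_servers:
  - host: 127.0.0.1
tikv_servers:
  - host: 127.0.0.1

# Monitoring components: only the sections listed here are deployed.
monitoring_servers:
  - host: 127.0.0.1   # Prometheus (default port 9090)
grafana_servers:
  - host: 127.0.0.1   # Grafana (default port 3000)
alertmanager_servers:
  - host: 127.0.0.1   # Alertmanager (default web port 9093)
```

A cluster deployed from a file without the last three sections ends up in the situation described in this thread: exporters on each host, but no Prometheus or Grafana.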

TiUP deploys these automatically.

I'll read through this more carefully and try it out. It does look like a lot of steps, haha.

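Is this something you were asked to do at work, or are you just experimenting?

I'm not sure what you need. Do you have a cluster without monitoring and want to add monitoring to it? Or do you have nothing yet and want to deploy monitoring directly?

Adding monitoring to a cluster that doesn't have any.

Installing it separately really does take a whole pile of steps.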

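Is node_exporter installed by default when the cluster is deployed, even if monitoring is not deployed?

If you don't have a cluster, node_exporter shouldn't be deployed automatically, so you really would have to deploy it manually...

When a cluster is deployed without the Prometheus and Grafana components, is node_exporter still installed by default?

Yes. Even if you don't configure it, node_exporter is started by default.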
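The exporters are governed by the topology's `monitored` section, which TiUP applies to every host in the cluster whether or not Prometheus and Grafana are deployed. The sketch below uses the ports from the scale-out example later in this thread; the directory values are what I understand the usual defaults to be, so treat them as assumptions and verify them against the TiUP topology reference.

```yaml
# `monitored` configures the exporters TiUP installs on every cluster host.
# Ports match the scale-out example in this thread; the directories are
# assumed defaults, shown only for illustration.
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115
  deploy_dir: "/tidb-deploy/monitor-9100"
  data_dir: "/tidb-data/monitor-9100"
  log_dir: "/tidb-deploy/monitor-9100/log"
```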

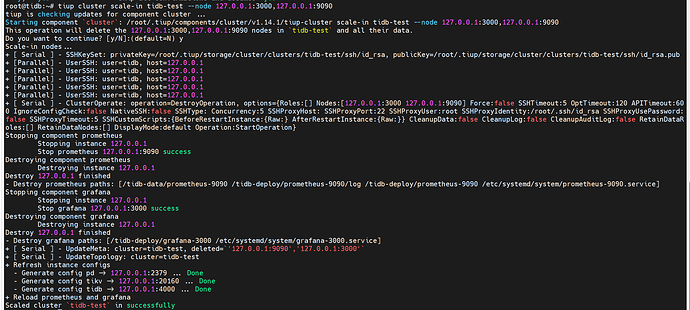

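Why deploy it manually? If monitoring isn't installed, just run a scale-out; the monitoring components can be scaled in and out like any other component.

Do you have detailed steps? Please share them.

Scaling out the monitoring components: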

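```
vi scale-out.yml
```

```yaml
# The `monitored` section is ignored during scale-out (see the warning in the
# output below); the existing cluster's exporter settings are used instead.
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115
monitoring_servers:
  - host: 127.0.0.1
grafana_servers:
  - host: 127.0.0.1
```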
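Since TiUP ignores `monitored` during scale-out, the scale-out file really only needs the server sections. Below is a minimal sketch that also adds Alertmanager, assuming the same single host and the default ports. `port`, `web_port` and `cluster_port` are TiUP topology fields; the host and the choice to include Alertmanager are illustrative.

```yaml
# Hypothetical scale-out.yml: add Prometheus, Grafana and Alertmanager to an
# existing cluster. Host 127.0.0.1 and the explicit ports are placeholders.
monitoring_servers:
  - host: 127.0.0.1
    port: 9090          # Prometheus
grafana_servers:
  - host: 127.0.0.1
    port: 3000          # Grafana
alertmanager_servers:
  - host: 127.0.0.1
    web_port: 9093      # Alertmanager web UI / API
    cluster_port: 9094  # Alertmanager cluster (gossip) port
```

It is applied the same way as in this thread, `tiup cluster scale-out tidb-test scale-out.yml -u root -p`, with `tidb-test` replaced by your cluster name.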

Run the scale-out:

```
root@tidb:~# tiup cluster scale-out tidb-test scale-out.yml -u root -p
tiup is checking updates for component cluster ...
Starting component `cluster`: /root/.tiup/components/cluster/v1.14.1/tiup-cluster scale-out tidb-test scale-out.yml -u root -p
You have one or more of ["global", "monitored", "server_configs"] fields configured in
    the scale out topology, but they will be ignored during the scaling out process.
    If you want to use configs different from the existing cluster, cancel now and
    set them in the specification fileds for each host.
Do you want to continue? [y/N]: (default=N) y
Input SSH password:
+ Detect CPU Arch Name
  - Detecting node 127.0.0.1 Arch info ... Done
+ Detect CPU OS Name
  - Detecting node 127.0.0.1 OS info ... Done
Please confirm your topology:
Cluster type:    tidb
Cluster name:    tidb-test
Cluster version: v7.6.0
Role        Host       Ports       OS/Arch       Directories
----        ----       -----       -------       -----------
prometheus  127.0.0.1  9090/12020  linux/x86_64  /tidb-deploy/prometheus-9090,/tidb-data/prometheus-9090
grafana     127.0.0.1  3000        linux/x86_64  /tidb-deploy/grafana-3000
Attention:
    1. If the topology is not what you expected, check your yaml file.
    2. Please confirm there is no port/directory conflicts in same host.
Do you want to continue? [y/N]: (default=N) y
+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub
+ [Parallel] - UserSSH: user=tidb, host=127.0.0.1
+ [Parallel] - UserSSH: user=tidb, host=127.0.0.1
+ [Parallel] - UserSSH: user=tidb, host=127.0.0.1
+ Download TiDB components
  - Download prometheus:v7.6.0 (linux/amd64) ... Done
  - Download grafana:v7.6.0 (linux/amd64) ... Done
+ Initialize target host environments
+ Deploy TiDB instance
  - Deploy instance prometheus -> 127.0.0.1:9090 ... Done
  - Deploy instance grafana -> 127.0.0.1:3000 ... Done
+ Copy certificate to remote host
+ Generate scale-out config
  - Generate scale-out config prometheus -> 127.0.0.1:9090 ... Done
  - Generate scale-out config grafana -> 127.0.0.1:3000 ... Done
+ Init monitor config
Enabling component prometheus
    Enabling instance 127.0.0.1:9090
    Enable instance 127.0.0.1:9090 success
Enabling component grafana
    Enabling instance 127.0.0.1:3000
    Enable instance 127.0.0.1:3000 success
Enabling component node_exporter
    Enabling instance 127.0.0.1
    Enable 127.0.0.1 success
Enabling component blackbox_exporter
    Enabling instance 127.0.0.1
    Enable 127.0.0.1 success
+ [ Serial ] - Save meta
+ [ Serial ] - Start new instances
Starting component prometheus
    Starting instance 127.0.0.1:9090
    Start instance 127.0.0.1:9090 success
Starting component grafana
    Starting instance 127.0.0.1:3000
    Start instance 127.0.0.1:3000 success
Starting component node_exporter
    Starting instance 127.0.0.1
    Start 127.0.0.1 success
Starting component blackbox_exporter
    Starting instance 127.0.0.1
    Start 127.0.0.1 success
+ Refresh components conifgs
  - Generate config pd -> 127.0.0.1:2379 ... Done
  - Generate config tikv -> 127.0.0.1:20160 ... Done
  - Generate config tidb -> 127.0.0.1:4000 ... Done
  - Generate config prometheus -> 127.0.0.1:9090 ... Done
  - Generate config grafana -> 127.0.0.1:3000 ... Done
+ Reload prometheus and grafana
+ [ Serial ] - UpdateTopology: cluster=tidb-test
Scaled cluster `tidb-test` out successfully
```

Very detailed steps, bookmarking this, haha