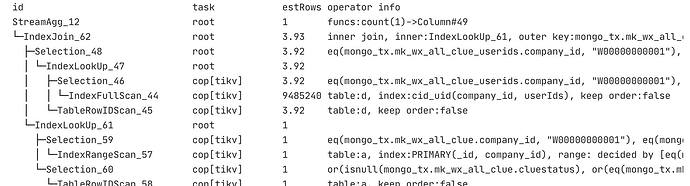

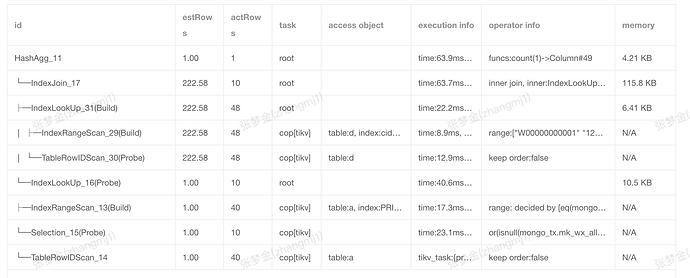

| └─IndexJoin_17 |

222.58 |

10 |

root |

|

time:63.7ms, loops:2, inner:{total:40.8ms, concurrency:5, task:1, construct:67.3µs, fetch:40.7ms, build:8.66µs}, probe:17.4µs |

inner join, inner:IndexLookUp_16, outer key:mongo_tx.mk_wx_all_clue_userids._id, inner key:mongo_tx.mk_wx_all_clue._id, equal cond:eq(mongo_tx.mk_wx_all_clue_userids._id, mongo_tx.mk_wx_all_clue._id) |

115.8 KB |

N/A |

| ├─IndexLookUp_31(Build) |

222.58 |

48 |

root |

|

time:22.2ms, loops:3, index_task: {total_time: 8.9ms, fetch_handle: 8.9ms, build: 681ns, wait: 1.58µs}, table_task: {total_time: 13ms, num: 1, concurrency: 5} |

|

6.41 KB |

N/A |

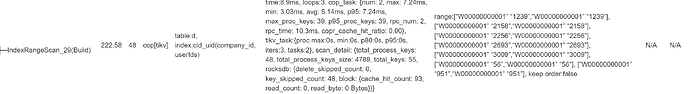

| │ ├─IndexRangeScan_29(Build) |

222.58 |

48 |

cop[tikv] |

table:d, index:cid_uid(company_id, userIds) |

time:8.9ms, loops:3, cop_task: {num: 2, max: 7.24ms, min: 3.03ms, avg: 5.14ms, p95: 7.24ms, max_proc_keys: 39, p95_proc_keys: 39, rpc_num: 2, rpc_time: 10.3ms, copr_cache_hit_ratio: 0.00}, tikv_task:{proc max:0s, min:0s, p80:0s, p95:0s, iters:3, tasks:2}, scan_detail: {total_process_keys: 48, total_process_keys_size: 4789, total_keys: 55, rocksdb: {delete_skipped_count: 0, key_skipped_count: 48, block: {cache_hit_count: 93, read_count: 0, read_byte: 0 Bytes}}} |

range:[“W00000000001” “1239”,“W00000000001” “1239”], [“W00000000001” “2158”,“W00000000001” “2158”], [“W00000000001” “2256”,“W00000000001” “2256”], [“W00000000001” “2693”,“W00000000001” “2693”], [“W00000000001” “3009”,“W00000000001” “3009”], [“W00000000001” “56”,“W00000000001” “56”], [“W00000000001” “951”,“W00000000001” “951”], keep order:false |

N/A |

N/A |

| │ └─TableRowIDScan_30(Probe) |

222.58 |

48 |

cop[tikv] |

table:d |

time:12.9ms, loops:2, cop_task: {num: 14, max: 6.68ms, min: 434.1µs, avg: 1.68ms, p95: 6.68ms, max_proc_keys: 7, p95_proc_keys: 7, tot_proc: 2ms, rpc_num: 14, rpc_time: 23.3ms, copr_cache_hit_ratio: 0.00}, tikv_task:{proc max:1ms, min:0s, p80:0s, p95:1ms, iters:14, tasks:14}, scan_detail: {total_process_keys: 48, total_process_keys_size: 5509, total_keys: 50, rocksdb: {delete_skipped_count: 0, key_skipped_count: 4, block: {cache_hit_count: 478, read_count: 0, read_byte: 0 Bytes}}} |

keep order:false |

N/A |

N/A |

| └─IndexLookUp_16(Probe) |

1.00 |

10 |

root |

|

time:40.6ms, loops:2, index_task: {total_time: 17.3ms, fetch_handle: 17.3ms, build: 662ns, wait: 1.85µs}, table_task: {total_time: 23.2ms, num: 1, concurrency: 5} |

|

10.5 KB |

N/A |

| ├─IndexRangeScan_13(Build) |

1.00 |

40 |

cop[tikv] |

table:a, index:PRIMARY(_id, company_id) |

time:17.3ms, loops:3, cop_task: {num: 11, max: 7.22ms, min: 497.9µs, avg: 3ms, p95: 7.22ms, max_proc_keys: 6, p95_proc_keys: 6, tot_proc: 3ms, tot_wait: 2ms, rpc_num: 11, rpc_time: 32.9ms, copr_cache_hit_ratio: 0.00}, tikv_task:{proc max:1ms, min:0s, p80:1ms, p95:1ms, iters:11, tasks:11}, scan_detail: {total_process_keys: 40, total_process_keys_size: 6720, total_keys: 80, rocksdb: {delete_skipped_count: 0, key_skipped_count: 40, block: {cache_hit_count: 548, read_count: 2, read_byte: 88.0 KB}}} |

range: decided by [eq(mongo_tx.mk_wx_all_clue._id, mongo_tx.mk_wx_all_clue_userids._id) eq(mongo_tx.mk_wx_all_clue.company_id, W00000000001)], keep order:false |

N/A |

N/A |

| └─Selection_15(Probe) |

1.00 |

10 |

cop[tikv] |

|

time:23.1ms, loops:2, cop_task: {num: 18, max: 5.89ms, min: 556.4µs, avg: 2.43ms, p95: 5.89ms, max_proc_keys: 7, p95_proc_keys: 7, tot_proc: 3ms, tot_wait: 3ms, rpc_num: 18, rpc_time: 43.6ms, copr_cache_hit_ratio: 0.00}, tikv_task:{proc max:1ms, min:0s, p80:0s, p95:1ms, iters:18, tasks:18}, scan_detail: {total_process_keys: 40, total_process_keys_size: 19774, total_keys: 40, rocksdb: {delete_skipped_count: 0, key_skipped_count: 0, block: {cache_hit_count: 635, read_count: 0, read_byte: 0 Bytes}}} |

or(isnull(mongo_tx.mk_wx_all_clue.cluestatus), or(eq(mongo_tx.mk_wx_all_clue.cluestatus, 0), eq(mongo_tx.mk_wx_all_clue.cluestatus, 1))) |

N/A |

N/A |

| └─TableRowIDScan_14 |

1.00 |

40 |

cop[tikv] |

table:a |

tikv_task:{proc max:1ms, min:0s, p80:0s, p95:1ms, iters:18, tasks:18} |

keep order:false |

N/A |

|
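To make the data flow of this plan easier to follow, here is a minimal Python sketch of what `IndexJoin_17` computes. It is a conceptual model under stated assumptions, not TiDB's implementation. Judging by the join keys, table `d` appears to be `mk_wx_all_clue_userids` and table `a` appears to be `mk_wx_all_clue`. The sample rows, the dict-based "indexes", and all function names are hypothetical. The real operator differs in two ways. It batches outer rows and fetches the inner side concurrently (`concurrency:5` above). It also evaluates `Selection_15` inside TiKV (`cop[tikv]`), so filtered rows never reach TiDB.

```python
from typing import Dict, Iterable, List, Tuple

Row = dict
Table = Dict[int, Row]          # row handle -> row
Index = Dict[Tuple, List[int]]  # index key tuple -> row handles


def build_index(table: Table, cols: Tuple[str, ...]) -> Index:
    """Build a hypothetical in-memory index: key columns -> list of row handles."""
    index: Index = {}
    for handle, row in table.items():
        index.setdefault(tuple(row[c] for c in cols), []).append(handle)
    return index


def index_lookup(index: Index, table: Table, keys: Iterable[Tuple]) -> List[Row]:
    """Model of IndexLookUp: point-scan the index to collect handles
    (IndexRangeScan), then fetch full rows by handle (TableRowIDScan)."""
    handles = [h for key in keys for h in index.get(key, [])]
    return [table[h] for h in handles]


def cluestatus_ok(row: Row) -> bool:
    """Mirrors Selection_15: cluestatus IS NULL OR cluestatus = 0 OR cluestatus = 1."""
    status = row.get("cluestatus")
    return status is None or status in (0, 1)


def index_join(outer_rows: List[Row], inner_index: Index, inner_table: Table,
               company_id: str) -> List[Tuple[Row, Row]]:
    """Model of IndexJoin: for each outer row, derive an inner point range from
    the join key plus the constant company_id, look it up, and keep the rows
    that pass the cluestatus filter."""
    result = []
    for outer in outer_rows:
        # "range: decided by" eq(a._id, d._id) and eq(a.company_id, <constant>)
        key = (outer["_id"], company_id)
        for inner in index_lookup(inner_index, inner_table, [key]):
            if cluestatus_ok(inner):
                result.append((outer, inner))
    return result


# Hypothetical sample data (not from the post): d ~ mk_wx_all_clue_userids, a ~ mk_wx_all_clue.
D_TABLE: Table = {
    1: {"_id": "c1", "company_id": "W00000000001", "userIds": "56"},
    2: {"_id": "c2", "company_id": "W00000000001", "userIds": "951"},
    3: {"_id": "c3", "company_id": "W00000000002", "userIds": "56"},
}
A_TABLE: Table = {
    10: {"_id": "c1", "company_id": "W00000000001", "cluestatus": None},
    11: {"_id": "c2", "company_id": "W00000000001", "cluestatus": 2},
}

company = "W00000000001"
user_ids = ["56", "951", "1239"]

# Build (outer) side, like IndexLookUp_31: point ranges on cid_uid(company_id, userIds).
outer = index_lookup(build_index(D_TABLE, ("company_id", "userIds")), D_TABLE,
                     [(company, u) for u in user_ids])
# Probe (inner) side, like IndexLookUp_16 + Selection_15: lookups on PRIMARY(_id, company_id).
joined = index_join(outer, build_index(A_TABLE, ("_id", "company_id")), A_TABLE, company)
print(len(outer), len(joined))  # 2 1
```

The sketch only illustrates the shape of the work. Each outer row turns into one inner point lookup. This is why the inner side (`fetch:40.7ms` of the join's `63.7ms`) dominates the join's time in the table above.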