【 TiDB 使用环境】生产环境 /测试/ Poc

【 TiDB 版本】

【复现路径】做过哪些操作出现的问题

【遇到的问题:问题现象及影响】

【资源配置】进入到 TiDB Dashboard -集群信息 (Cluster Info) -主机(Hosts) 截图此页面

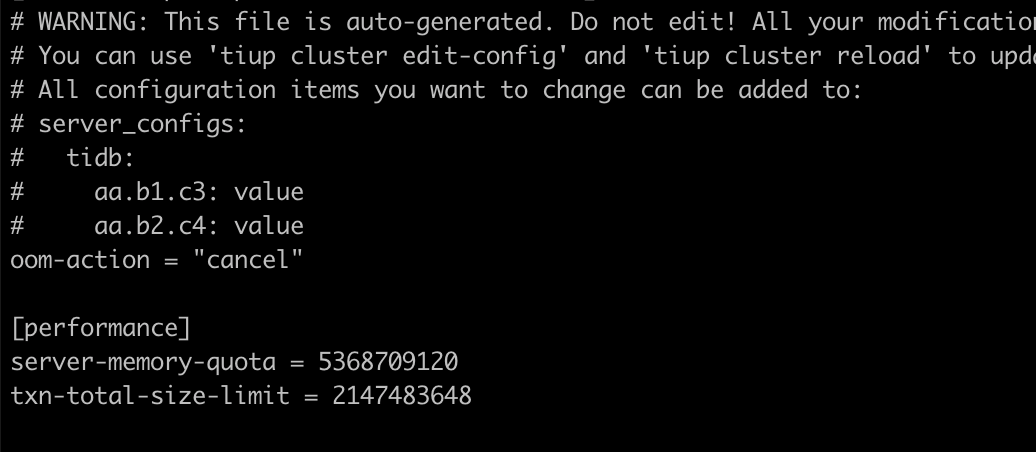

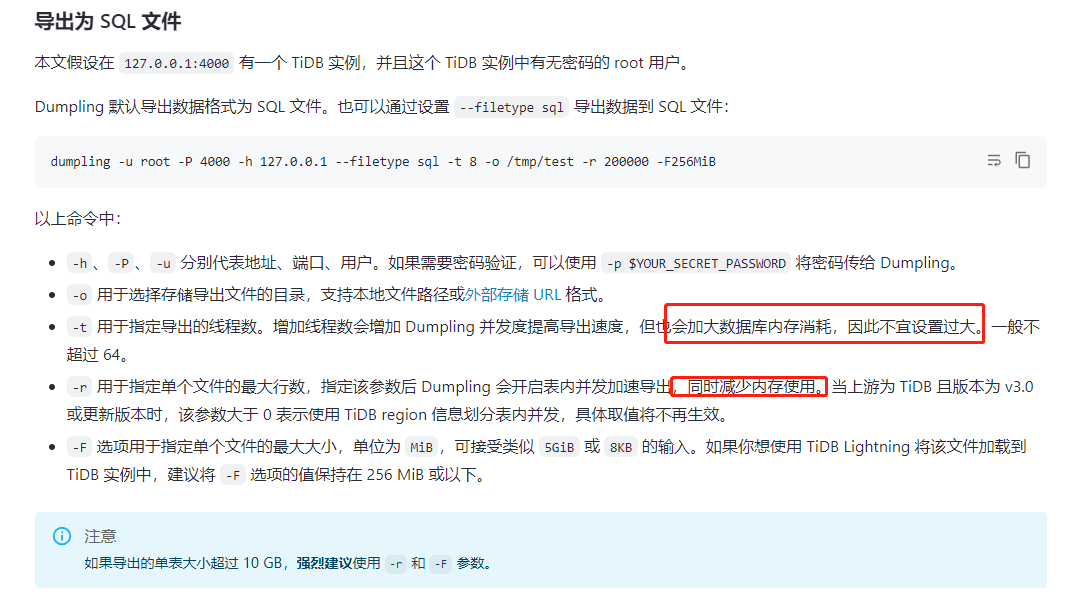

dumpling命令,分别使用了指定tidb_mem_quota_query和不指定tidb_mem_quota_query的情况都是一样的,tidb_mem_quota_query为什么没有生效

tiup dumpling -u root -P 4000 -h xxxx -p 'xxxxxxx' --filetype sql -o /data/export -F 500MiB -t 1 --params "tidb_distsql_scan_concurrency=1,tidb_mem_quota_query=209715200" --consistency=auto

报错信息

[mysql] 2024/03/05 13:36:59 packets.go:122: closing bad idle connection: EOF

[2024/03/05 13:36:59.640 +00:00] [INFO] [collector.go:224] ["units canceled"] [cancel-unit=0]

[2024/03/05 13:36:59.668 +00:00] [INFO] [collector.go:225] ["backup failed summary"] [total-ranges=1] [ranges-succeed=0] [ranges-failed=1] [unit-name="dump table data"] [error="invalid connection; sql: START TRANSACTION: sql: connection is already closed; dial tcp xxx.xxx.xxx.xxx:4000: connect: connection refused"] [errorVerbose="the following errors occurred:\n - invalid connection\n github.com/pingcap/errors.AddStack\n \tgithub.com/pingcap/errors@v0.11.5-0.20221009092201-b66cddb77c32/errors.go:174\n github.com/pingcap/errors.Trace\n \tgithub.com/pingcap/errors@v0.11.5-0.20221009092201-b66cddb77c32/juju_adaptor.go:15\n github.com/pingcap/tidb/dumpling/export.(*multiQueriesChunkIter).nextRows.func1\n \tgithub.com/pingcap/tidb/dumpling/export/ir_impl.go:87\n github.com/pingcap/tidb/dumpling/export.(*multiQueriesChunkIter).nextRows\n \tgithub.com/pingcap/tidb/dumpling/export/ir_impl.go:105\n github.com/pingcap/tidb/dumpling/export.(*multiQueriesChunkIter).Next\n \tgithub.com/pingcap/tidb/dumpling/export/ir_impl.go:153\n github.com/pingcap/tidb/dumpling/export.WriteInsert\n \tgithub.com/pingcap/tidb/dumpling/export/writer_util.go:247\n github.com/pingcap/tidb/dumpling/export.FileFormat.WriteInsert\n \tgithub.com/pingcap/tidb/dumpling/export/writer_util.go:660\n github.com/pingcap/tidb/dumpling/export.(*Writer).tryToWriteTableData\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:243\n github.com/pingcap/tidb/dumpling/export.(*Writer).WriteTableData.func1\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:228\n github.com/pingcap/tidb/br/pkg/utils.WithRetry\n \tgithub.com/pingcap/tidb/br/pkg/utils/retry.go:56\n github.com/pingcap/tidb/dumpling/export.(*Writer).WriteTableData\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:192\n github.com/pingcap/tidb/dumpling/export.(*Writer).handleTask\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:115\n github.com/pingcap/tidb/dumpling/export.(*Writer).run\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:93\n github.com/pingcap/tidb/dumpling/export.(*Dumper).startWriters.func4\n \tgithub.com/pingcap/tidb/dumpling/export/dump.go:376\n golang.org/x/sync/errgroup.(*Group).Go.func1\n \tgolang.org/x/sync@v0.1.0/errgroup/errgroup.go:75\n runtime.goexit\n \truntime/asm_amd64.s:1598\n - sql: connection is already closed\n sql: START TRANSACTION\n github.com/pingcap/tidb/dumpling/export.createConnWithConsistency\n \tgithub.com/pingcap/tidb/dumpling/export/sql.go:922\n github.com/pingcap/tidb/dumpling/export.(*Dumper).Dump.func4\n \tgithub.com/pingcap/tidb/dumpling/export/dump.go:234\n github.com/pingcap/tidb/dumpling/export.(*Dumper).Dump.func5\n \tgithub.com/pingcap/tidb/dumpling/export/dump.go:255\n github.com/pingcap/tidb/dumpling/export.(*Writer).WriteTableData.func1\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:204\n github.com/pingcap/tidb/br/pkg/utils.WithRetry\n \tgithub.com/pingcap/tidb/br/pkg/utils/retry.go:56\n github.com/pingcap/tidb/dumpling/export.(*Writer).WriteTableData\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:192\n github.com/pingcap/tidb/dumpling/export.(*Writer).handleTask\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:115\n github.com/pingcap/tidb/dumpling/export.(*Writer).run\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:93\n github.com/pingcap/tidb/dumpling/export.(*Dumper).startWriters.func4\n \tgithub.com/pingcap/tidb/dumpling/export/dump.go:376\n golang.org/x/sync/errgroup.(*Group).Go.func1\n \tgolang.org/x/sync@v0.1.0/errgroup/errgroup.go:75\n runtime.goexit\n \truntime/asm_amd64.s:1598\n - dial tcp xxx.xxx.xxx.xxx:4000: connect: connection refused\n github.com/pingcap/errors.AddStack\n \tgithub.com/pingcap/errors@v0.11.5-0.20221009092201-b66cddb77c32/errors.go:174\n github.com/pingcap/errors.Trace\n \tgithub.com/pingcap/errors@v0.11.5-0.20221009092201-b66cddb77c32/juju_adaptor.go:15\n github.com/pingcap/tidb/dumpling/export.createConnWithConsistency\n \tgithub.com/pingcap/tidb/dumpling/export/sql.go:903\n github.com/pingcap/tidb/dumpling/export.(*Dumper).Dump.func4\n \tgithub.com/pingcap/tidb/dumpling/export/dump.go:234\n github.com/pingcap/tidb/dumpling/export.(*Dumper).Dump.func5\n \tgithub.com/pingcap/tidb/dumpling/export/dump.go:255\n github.com/pingcap/tidb/dumpling/export.(*Writer).WriteTableData.func1\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:204\n github.com/pingcap/tidb/br/pkg/utils.WithRetry\n \tgithub.com/pingcap/tidb/br/pkg/utils/retry.go:56\n github.com/pingcap/tidb/dumpling/export.(*Writer).WriteTableData\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:192\n github.com/pingcap/tidb/dumpling/export.(*Writer).handleTask\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:115\n github.com/pingcap/tidb/dumpling/export.(*Writer).run\n \tgithub.com/pingcap/tidb/dumpling/export/writer.go:93\n github.com/pingcap/tidb/dumpling/export.(*Dumper).startWriters.func4\n \tgithub.com/pingcap/tidb/dumpling/export/dump.go:376\n golang.org/x/sync/errgroup.(*Group).Go.func1\n \tgolang.org/x/sync@v0.1.0/errgroup/errgroup.go:75\n runtime.goexit\n \truntime/asm_amd64.s:1598"]

[2024/03/05 13:36:59.708 +00:00] [INFO] [tso_dispatcher.go:214] ["exit tso dispatcher loop"]

[2024/03/05 13:36:59.708 +00:00] [INFO] [tso_dispatcher.go:162] ["exit tso requests cancel loop"]

[2024/03/05 13:36:59.708 +00:00] [INFO] [tso_dispatcher.go:375] ["[tso] stop fetching the pending tso requests due to context canceled"] [dc-location=global]

[2024/03/05 13:36:59.708 +00:00] [INFO] [tso_dispatcher.go:311] ["[tso] exit tso dispatcher"] [dc-location=global]

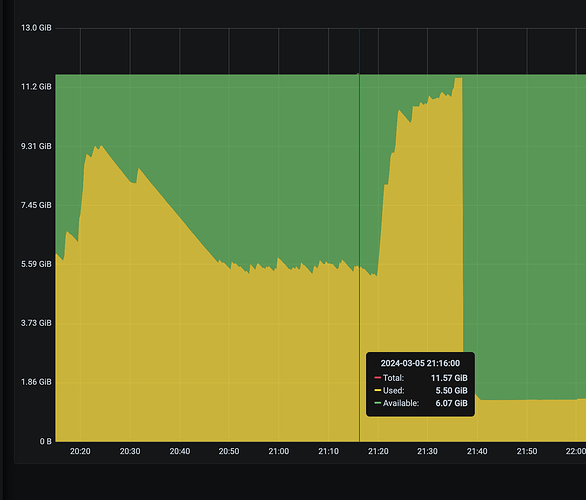

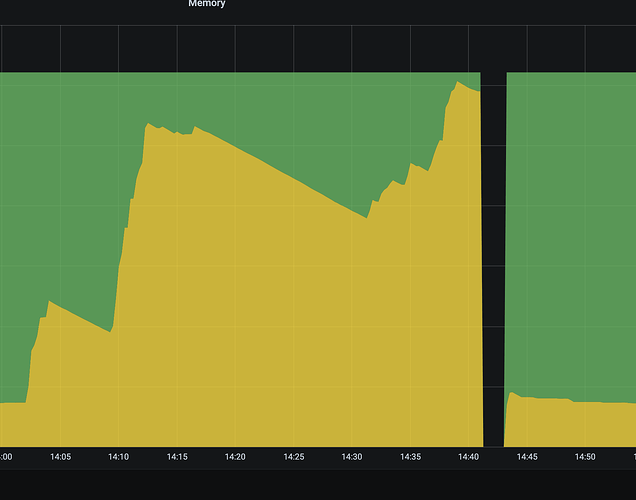

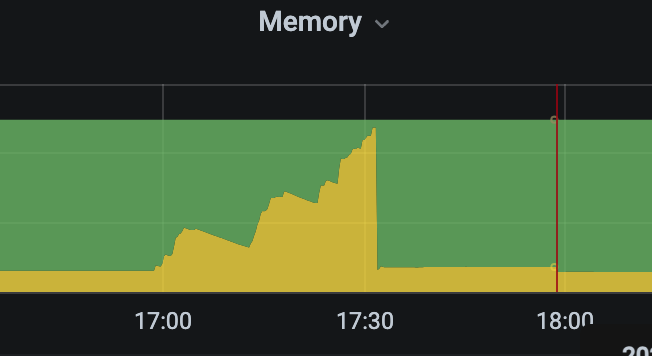

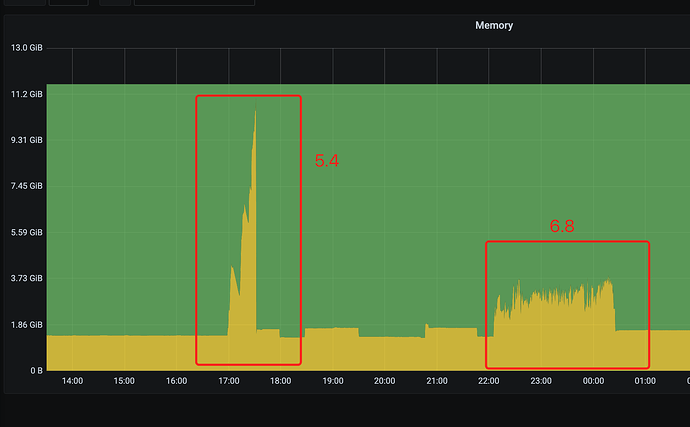

tidb的OOM

The 10 SQLs with the most memory usage for OOM analysis

SQL 0:

cost_time: 1.9202006489999999s

stats: xxx:448094468304535556

conn_id: 19

user: root

table_ids: [857]

txn_start_ts: 448171830564093953

mem_max: 295620 Bytes (288.7 KB)

sql: SELECT * FROM `xxx`.`xxx` WHERE `_tidb_rowid`>=70610415 and `_tidb_rowid`<70928387 ORDER BY `_tidb_rowid`

The 10 SQLs with the most time usage for OOM analysis

SQL 0:

cost_time: 1.9202006489999999s

stats: xxx:448094468304535556

conn_id: 19

user: root

table_ids: [857]

txn_start_ts: 448171830564093953

mem_max: 295620 Bytes (288.7 KB)

sql: SELECT * FROM `xxx`.`xxx` WHERE `_tidb_rowid`>=70610415 and `_tidb_rowid`<70928387 ORDER BY `_tidb_rowid`