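【TiDB Environment】Production

【TiDB Version】v6.5.5

【Reproduction Path】Nothing changed on the application side. Before the upgrade, with the maximum SQL execution time set to 30 s, slow queries did not time out; after the upgrade, slow queries are interrupted by timeouts.

【Problem: Symptoms and Impact】

Related alerts:

1. TiDB_server_panic_total fires frequently (it never fired before the upgrade)

2. TiDB_tikvclient_backoff_seconds_count fired 1 to 2 times (occasional, roughly on a monthly scale)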

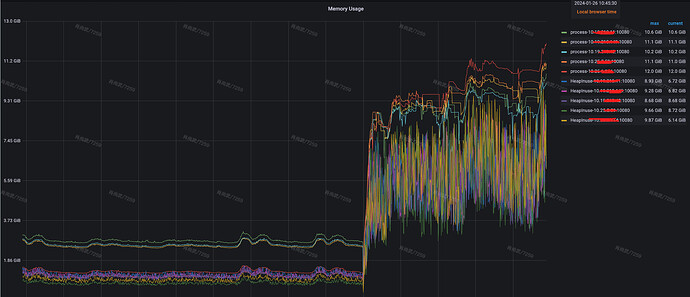

3. TiDB_memory_abnormal fired once (it never fired before the upgrade)

【Operations Performed】Ran ANALYZE TABLE to re-collect statistics

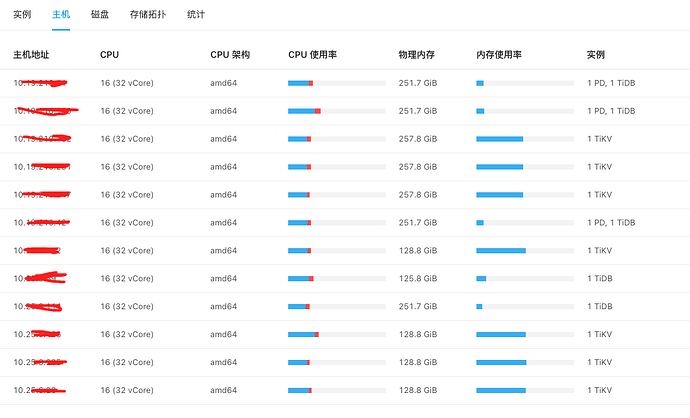

【Resource Configuration】

【Attachments: Screenshots/Logs/Monitoring】

[2024/01/24 09:01:02.866 +08:00] [ERROR] [client_batch.go:303] [batchSendLoop] [r={}] [stack="github.com/tikv/client-go/v2/internal/client.(*batchConn).batchSendLoop.func1

/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.4-0.20230912041415-9c163cc8574b/internal/client/client_batch.go:305

runtime.gopanic

/usr/local/go/src/runtime/panic.go:884

runtime.goPanicIndex

/usr/local/go/src/runtime/panic.go:113

github.com/pingcap/kvproto/pkg/tikvpb.encodeVarintTikvpb

/go/pkg/mod/github.com/pingcap/kvproto@v0.0.0-20230726063044-73d6d7f3756b/pkg/tikvpb/tikvpb.pb.go:5438

github.com/pingcap/kvproto/pkg/tikvpb.(*BatchCommandsRequest_Request_Coprocessor).MarshalToSizedBuffer

/go/pkg/mod/github.com/pingcap/kvproto@v0.0.0-20230726063044-73d6d7f3756b/pkg/tikvpb/tikvpb.pb.go:4325

github.com/pingcap/kvproto/pkg/tikvpb.(*BatchCommandsRequest_Request_Coprocessor).MarshalTo

/go/pkg/mod/github.com/pingcap/kvproto@v0.0.0-20230726063044-73d6d7f3756b/pkg/tikvpb/tikvpb.pb.go:4313

github.com/pingcap/kvproto/pkg/tikvpb.(*BatchCommandsRequest_Request).MarshalToSizedBuffer

/go/pkg/mod/github.com/pingcap/kvproto@v0.0.0-20230726063044-73d6d7f3756b/pkg/tikvpb/tikvpb.pb.go:3850

github.com/pingcap/kvproto/pkg/tikvpb.(*BatchCommandsRequest).MarshalToSizedBuffer

/go/pkg/mod/github.com/pingcap/kvproto@v0.0.0-20230726063044-73d6d7f3756b/pkg/tikvpb/tikvpb.pb.go:3808

github.com/pingcap/kvproto/pkg/tikvpb.(*BatchCommandsRequest).Marshal

/go/pkg/mod/github.com/pingcap/kvproto@v0.0.0-20230726063044-73d6d7f3756b/pkg/tikvpb/tikvpb.pb.go:3766

google.golang.org/protobuf/internal/impl.legacyMarshal

/go/pkg/mod/google.golang.org/protobuf@v1.28.1/internal/impl/legacy_message.go:402

google.golang.org/protobuf/proto.MarshalOptions.marshal

/go/pkg/mod/google.golang.org/protobuf@v1.28.1/proto/encode.go:166

google.golang.org/protobuf/proto.MarshalOptions.MarshalAppend

/go/pkg/mod/google.golang.org/protobuf@v1.28.1/proto/encode.go:125

github.com/golang/protobuf/proto.marshalAppend

/go/pkg/mod/github.com/golang/protobuf@v1.5.2/proto/wire.go:40

github.com/golang/protobuf/proto.Marshal

/go/pkg/mod/github.com/golang/protobuf@v1.5.2/proto/wire.go:23

google.golang.org/grpc/encoding/proto.codec.Marshal

/go/pkg/mod/google.golang.org/grpc@v1.51.0/encoding/proto/proto.go:45

google.golang.org/grpc.encode

/go/pkg/mod/google.golang.org/grpc@v1.51.0/rpc_util.go:595

google.golang.org/grpc.prepareMsg

/go/pkg/mod/google.golang.org/grpc@v1.51.0/stream.go:1708

google.golang.org/grpc.(*clientStream).SendMsg

/go/pkg/mod/google.golang.org/grpc@v1.51.0/stream.go:846

github.com/pingcap/kvproto/pkg/tikvpb.(*tikvBatchCommandsClient).Send

/go/pkg/mod/github.com/pingcap/kvproto@v0.0.0-20230726063044-73d6d7f3756b/pkg/tikvpb/tikvpb.pb.go:2068

github.com/tikv/client-go/v2/internal/client.(*batchCommandsClient).send

/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.4-0.20230912041415-9c163cc8574b/internal/client/client_batch.go:519

github.com/tikv/client-go/v2/internal/client.(*batchConn).getClientAndSend

/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.4-0.20230912041415-9c163cc8574b/internal/client/client_batch.go:381

github.com/tikv/client-go/v2/internal/client.(*batchConn).batchSendLoop

/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.4-0.20230912041415-9c163cc8574b/internal/client/client_batch.go:344"]
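
As a reading aid for the trace above: the `batchSendLoop.func1` frame sitting directly above `runtime.gopanic` is a deferred function that recovered the panic and logged it, and `runtime.goPanicIndex` means a slice index went out of range inside `encodeVarintTikvpb`. The Go sketch below only illustrates that general pattern. `encodeVarint`, `sizeVarint`, and `sendLoop` are hypothetical stand-ins written for this example, not the client-go or kvproto code, and the sketch makes no claim about the actual root cause in v6.5.5.

```go
package main

import (
	"fmt"
	"runtime/debug"
)

// sizeVarint returns how many bytes v occupies as a varint.
func sizeVarint(v uint64) int {
	n := 1
	for v >= 1<<7 {
		v >>= 7
		n++
	}
	return n
}

// encodeVarint is a hypothetical stand-in for a generated protobuf helper.
// It writes v so that it ends just before offset and returns the new start.
// If buf is smaller than the size the caller assumed, the computed index is
// out of range and the Go runtime panics (runtime.goPanicIndex).
func encodeVarint(buf []byte, offset int, v uint64) int {
	offset -= sizeVarint(v)
	base := offset
	for v >= 1<<7 {
		buf[offset] = uint8(v&0x7f | 0x80)
		v >>= 7
		offset++
	}
	buf[offset] = uint8(v)
	return base
}

// sendLoop mimics a background loop guarded by a deferred recover: the panic
// does not crash the process, it is captured together with its stack.
func sendLoop(buf []byte, offset int, v uint64) (recovered interface{}, stack string) {
	defer func() {
		if r := recover(); r != nil {
			recovered = r
			stack = string(debug.Stack())
		}
	}()
	encodeVarint(buf, offset, v)
	return nil, ""
}

func main() {
	// A 1-byte buffer cannot hold the 2-byte varint for 300, so this panics
	// inside encodeVarint and the deferred function recovers it.
	r, stack := sendLoop(make([]byte, 1), 0, 300)
	fmt.Printf("recovered: %v\n", r)
	fmt.Printf("stack captured: %v\n", len(stack) > 0)
}
```

In this sketch the panic is triggered deliberately by passing a buffer that is too small. In the real trace, the mismatch between the buffer size and the encoded request would have to come from somewhere else, which is the part that still needs to be diagnosed.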

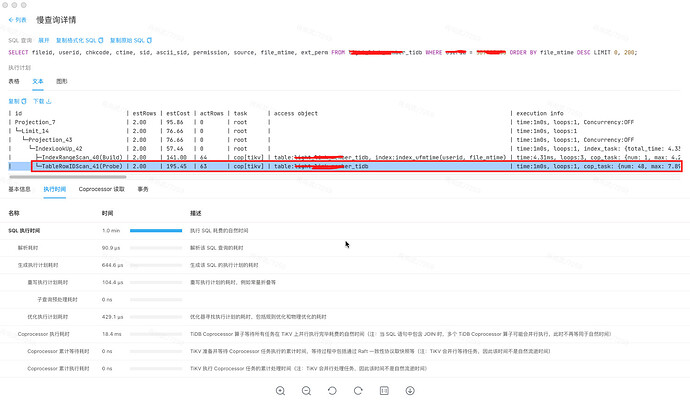

Slow query

select fileid, userid, chkcode, ctime, sid, ascii_sid, permission, source, file_mtime, ext_perm from xxxx where xxxid = xxxx order by file_mtime desc limit xxx,xxx;

xxxid and mtime are indexed.