After running compact, the execution plan is unstable

MySQL [(none)]> use hotel_product

Database changed

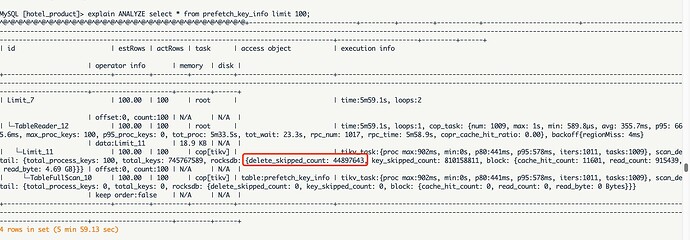

MySQL [hotel_product]> explain ANALYZE select * from prefetch_key_info limit 100;

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

| id | estRows | actRows | task | access object | execution info | operator info | memory | disk |

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

| Limit_7 | 100.00 | 100 | root | | time:3m2.9s, loops:2 | offset:0, count:100 | N/A | N/A |

| └─TableReader_12 | 100.00 | 100 | root | | time:3m2.9s, loops:1, cop_task: {num: 806, max: 742.3ms, min: 922.4µs, avg: 226.8ms, p95: 448.7ms, max_proc_keys: 100, p95_proc_keys: 0, tot_proc: 2m47.9s, tot_wait: 14.2s, rpc_num: 808, rpc_time: 3m2.8s, copr_cache_hit_ratio: 0.00}, backoff{regionMiss: 2ms} | data:Limit_11 | 18.9 KB | N/A |

|   └─Limit_11 | 100.00 | 100 | cop[tikv] | | tikv_task:{proc max:620ms, min:0s, p80:298ms, p95:423ms, iters:808, tasks:806}, scan_detail: {total_process_keys: 100, total_keys: 423330044, rocksdb: {delete_skipped_count: 51187119, key_skipped_count: 474516358, block: {cache_hit_count: 6711, read_count: 294819, read_byte: 829.3 MB}}} | offset:0, count:100 | N/A | N/A |

|     └─TableFullScan_10 | 100.00 | 100 | cop[tikv] | table:prefetch_key_info | tikv_task:{proc max:620ms, min:0s, p80:298ms, p95:423ms, iters:808, tasks:806}, scan_detail: {total_process_keys: 0, total_keys: 0, rocksdb: {delete_skipped_count: 0, key_skipped_count: 0, block: {cache_hit_count: 0, read_count: 0, read_byte: 0 Bytes}}} | keep order:false | N/A | N/A |

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

4 rows in set (3 min 3.78 sec)

MySQL [hotel_product]> ANALYZE table prefetch_key_info;

Query OK, 0 rows affected (20 min 43.13 sec)

MySQL [hotel_product]> explain ANALYZE select * from prefetch_key_info limit 100;

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

| id | estRows | actRows | task | access object | execution info | operator info | memory | disk |

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

| Limit_7 | 100.00 | 100 | root | | time:2m37s, loops:2 | offset:0, count:100 | N/A | N/A |

| └─TableReader_12 | 100.00 | 100 | root | | time:2m37s, loops:1, cop_task: {num: 802, max: 675.2ms, min: 518.6µs, avg: 195.7ms, p95: 431.4ms, max_proc_keys: 100, p95_proc_keys: 0, tot_proc: 2m24.8s, tot_wait: 11.4s, rpc_num: 802, rpc_time: 2m36.9s, copr_cache_hit_ratio: 0.08} | data:Limit_11 | 18.9 KB | N/A |

|   └─Limit_11 | 100.00 | 100 | cop[tikv] | | tikv_task:{proc max:574ms, min:0s, p80:280ms, p95:404ms, iters:804, tasks:802}, scan_detail: {total_process_keys: 100, total_keys: 387235317, rocksdb: {delete_skipped_count: 47320693, key_skipped_count: 434555272, block: {cache_hit_count: 276457, read_count: 18, read_byte: 382.9 KB}}} | offset:0, count:100 | N/A | N/A |

|     └─TableFullScan_10 | 100.00 | 100 | cop[tikv] | table:prefetch_key_info | tikv_task:{proc max:574ms, min:0s, p80:280ms, p95:404ms, iters:804, tasks:802}, scan_detail: {total_process_keys: 0, total_keys: 0, rocksdb: {delete_skipped_count: 0, key_skipped_count: 0, block: {cache_hit_count: 0, read_count: 0, read_byte: 0 Bytes}}} | keep order:false | N/A | N/A |

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

4 rows in set (2 min 37.80 sec)

MySQL [hotel_product]> explain ANALYZE select * from prefetch_key_info limit 100;

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

| id | estRows | actRows | task | access object | execution info | operator info | memory | disk |

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

| Limit_7 | 100.00 | 100 | root | | time:7.7s, loops:2 | offset:0, count:100 | N/A | N/A |

| └─TableReader_12 | 100.00 | 100 | root | | time:7.7s, loops:1, cop_task: {num: 802, max: 433.9ms, min: 393.4µs, avg: 9.55ms, p95: 2.06ms, max_proc_keys: 100, p95_proc_keys: 0, tot_proc: 7.03s, tot_wait: 293ms, rpc_num: 804, rpc_time: 7.65s, copr_cache_hit_ratio: 0.95}, backoff{regionMiss: 2ms} | data:Limit_11 | 18.9 KB | N/A |

|   └─Limit_11 | 100.00 | 100 | cop[tikv] | | tikv_task:{proc max:574ms, min:0s, p80:280ms, p95:404ms, iters:804, tasks:802}, scan_detail: {total_process_keys: 100, total_keys: 19583148, rocksdb: {delete_skipped_count: 1814115, key_skipped_count: 21397225, block: {cache_hit_count: 13487, read_count: 7, read_byte: 316.2 KB}}} | offset:0, count:100 | N/A | N/A |

|     └─TableFullScan_10 | 100.00 | 100 | cop[tikv] | table:prefetch_key_info | tikv_task:{proc max:574ms, min:0s, p80:280ms, p95:404ms, iters:804, tasks:802}, scan_detail: {total_process_keys: 0, total_keys: 0, rocksdb: {delete_skipped_count: 0, key_skipped_count: 0, block: {cache_hit_count: 0, read_count: 0, read_byte: 0 Bytes}}} | keep order:false | N/A | N/A |

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

4 rows in set (7.74 sec)

MySQL [hotel_product]> explain ANALYZE select * from prefetch_key_info limit 100;

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

| id | estRows | actRows | task | access object | execution info | operator info | memory | disk |

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

| Limit_7 | 100.00 | 100 | root | | time:709.4ms, loops:2 | offset:0, count:100 | N/A | N/A |

| └─TableReader_12 | 100.00 | 100 | root | | time:709.4ms, loops:1, cop_task: {num: 802, max: 4.63ms, min: 381.5µs, avg: 846.7µs, p95: 1.76ms, tot_proc: 2ms, tot_wait: 366ms, rpc_num: 802, rpc_time: 671.2ms, copr_cache_hit_ratio: 1.00} | data:Limit_11 | 18.9 KB | N/A |

|   └─Limit_11 | 100.00 | 100 | cop[tikv] | | tikv_task:{proc max:574ms, min:0s, p80:280ms, p95:404ms, iters:804, tasks:802}, scan_detail: {total_process_keys: 0, total_keys: 3, rocksdb: {delete_skipped_count: 0, key_skipped_count: 0, block: {cache_hit_count: 40, read_count: 3, read_byte: 143.9 KB}}} | offset:0, count:100 | N/A | N/A |

|     └─TableFullScan_10 | 100.00 | 100 | cop[tikv] | table:prefetch_key_info | tikv_task:{proc max:574ms, min:0s, p80:280ms, p95:404ms, iters:804, tasks:802}, scan_detail: {total_process_keys: 0, total_keys: 0, rocksdb: {delete_skipped_count: 0, key_skipped_count: 0, block: {cache_hit_count: 0, read_count: 0, read_byte: 0 Bytes}}} | keep order:false | N/A | N/A |

+----------------------------+---------+---------+-----------+-------------------------+----------------+---------------------+---------+------+

4 rows in set (0.74 sec)
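
The plan itself is identical in all four runs; what changes is how many keys TiKV reports scanning and how often the coprocessor cache is hit. To make that easier to compare, here is a rough sketch that pulls the integer counters out of the `scan_detail` text of each `Limit_11` row. The `RUNS` labels and the `parse_scan_detail` helper are my own names for illustration, not anything provided by TiDB, and the strings are simply copied from the output above.

```python
import re

# scan_detail text copied from the Limit_11 row of each run above.
RUNS = {
    "run 1": "scan_detail: {total_process_keys: 100, total_keys: 423330044, rocksdb: {delete_skipped_count: 51187119, key_skipped_count: 474516358, block: {cache_hit_count: 6711, read_count: 294819, read_byte: 829.3 MB}}}",
    "run 2": "scan_detail: {total_process_keys: 100, total_keys: 387235317, rocksdb: {delete_skipped_count: 47320693, key_skipped_count: 434555272, block: {cache_hit_count: 276457, read_count: 18, read_byte: 382.9 KB}}}",
    "run 3": "scan_detail: {total_process_keys: 100, total_keys: 19583148, rocksdb: {delete_skipped_count: 1814115, key_skipped_count: 21397225, block: {cache_hit_count: 13487, read_count: 7, read_byte: 316.2 KB}}}",
    "run 4": "scan_detail: {total_process_keys: 0, total_keys: 3, rocksdb: {delete_skipped_count: 0, key_skipped_count: 0, block: {cache_hit_count: 40, read_count: 3, read_byte: 143.9 KB}}}",
}


def parse_scan_detail(text):
    """Return the `name: integer` counters found in a scan_detail string.

    Values with units (for example `read_byte: 829.3 MB`) are skipped,
    because the lookahead only accepts an integer followed by `,` or `}`.
    """
    pairs = re.findall(r"(\w+): (\d+)(?=[,}])", text)
    return {name: int(value) for name, value in pairs}


if __name__ == "__main__":
    for label, text in RUNS.items():
        counters = parse_scan_detail(text)
        print(label, counters["total_keys"], counters["delete_skipped_count"])
```

Read this way, runs 1 and 2 each scan several hundred million keys (tens of millions of them delete-skipped) to return 100 rows, while runs 3 and 4 report `copr_cache_hit_ratio` of 0.95 and 1.00 and scan far fewer keys.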