【TiDB Environment】Production / Test / PoC

【TiDB Version】7.5

【Reproduction Steps】What operations led to the problem

【Problem Encountered: Symptoms and Impact】

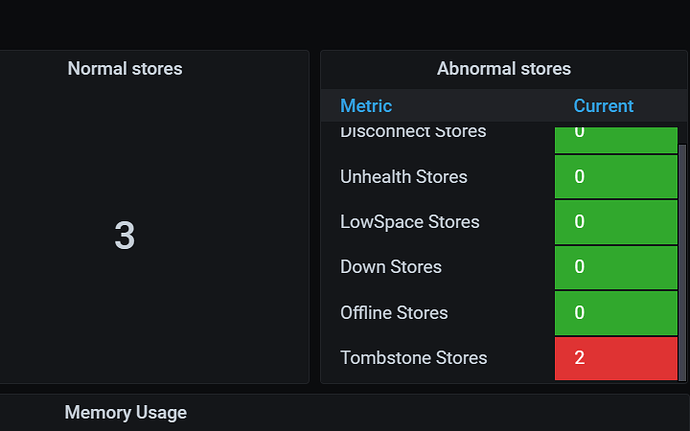

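The Grafana dashboard shows the above. Is this just a Grafana display issue, or does the cluster still contain leftovers from TiKV nodes that were removed with scale-in and not fully cleaned up?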

Did you take these two TiKV nodes offline? You probably skipped the last step; check the documentation.

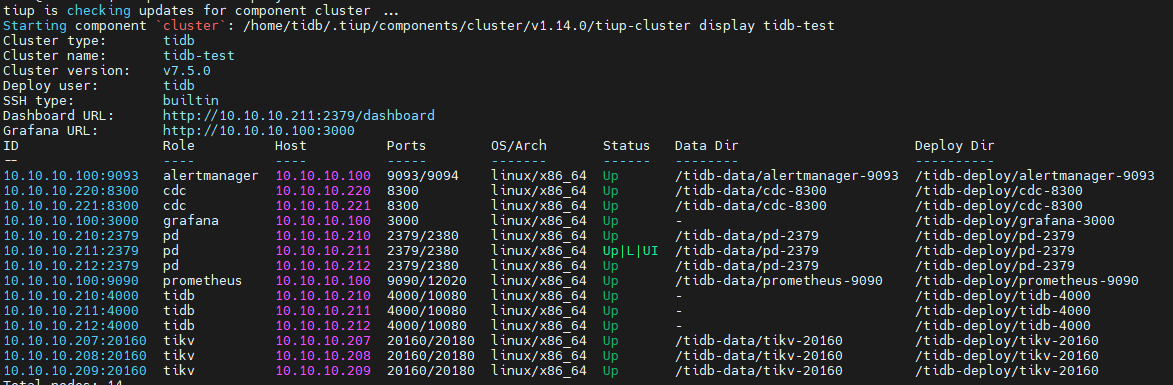

I did. The nodes no longer appear in `tiup cluster display`.

You probably haven't deleted the records of those two offline TiKV nodes from PD.

How do I delete them?

I've run into this before: the server hosting the TiDB node had become unresponsive.

You can handle it with the command above; otherwise, log in to the machine and kill the process.

Oh right, now I remember, haha.

I checked the data in PD and can only see 3 TiKV stores, so it still looks like a monitoring issue:

```
» store
{
  "count": 3,
  "stores": [
    {
      "store": {
        "id": 2,
        "address": "10.10.10.207:20160",
        "version": "7.5.0",
        "peer_address": "10.10.10.207:20160",
        "status_address": "10.10.10.207:20180",
        "git_hash": "bd8a0aabd08fd77687f788e0b45858ccd3516e4d",
        "start_timestamp": 1702543376,
        "deploy_path": "/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1704941589829448281,
        "state_name": "Up"
      },
      "status": {
        "capacity": "296.8GiB",
        "available": "173.3GiB",
        "used_size": "91.12GiB",
        "leader_count": 743,
        "leader_weight": 1,
        "leader_score": 743,
        "leader_size": 68598,
        "region_count": 2222,
        "region_weight": 1,
        "region_score": 482468.09045993385,
        "region_size": 206588,
        "slow_score": 1,
        "slow_trend": {
          "cause_value": 250030.06666666668,
          "cause_rate": 0,
          "result_value": 11,
          "result_rate": 0
        },
        "start_ts": "2023-12-14T16:42:56+08:00",
        "last_heartbeat_ts": "2024-01-11T10:53:09.829448281+08:00",
        "uptime": "666h10m13.829448281s"
      }
    },
    {
      "store": {
        "id": 7,
        "address": "10.10.10.209:20160",
        "version": "7.5.0",
        "peer_address": "10.10.10.209:20160",
        "status_address": "10.10.10.209:20180",
        "git_hash": "bd8a0aabd08fd77687f788e0b45858ccd3516e4d",
        "start_timestamp": 1702543289,
        "deploy_path": "/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1704941590878681522,
        "state_name": "Up"
      },
      "status": {
        "capacity": "296.8GiB",
        "available": "169.6GiB",
        "used_size": "97.08GiB",
        "leader_count": 735,
        "leader_weight": 1,
        "leader_score": 735,
        "leader_size": 68643,
        "region_count": 2222,
        "region_weight": 1,
        "region_score": 485647.8300894471,
        "region_size": 206588,
        "slow_score": 1,
        "slow_trend": {
          "cause_value": 250046.5,
          "cause_rate": 0,
          "result_value": 4,
          "result_rate": 0
        },
        "start_ts": "2023-12-14T16:41:29+08:00",
        "last_heartbeat_ts": "2024-01-11T10:53:10.878681522+08:00",
        "uptime": "666h11m41.878681522s"
      }
    },
    {
      "store": {
        "id": 1,
        "address": "10.10.10.208:20160",
        "version": "7.5.0",
        "peer_address": "10.10.10.208:20160",
        "status_address": "10.10.10.208:20180",
        "git_hash": "bd8a0aabd08fd77687f788e0b45858ccd3516e4d",
        "start_timestamp": 1702572040,
        "deploy_path": "/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1704941591831615481,
        "state_name": "Up"
      },
      "status": {
        "capacity": "296.8GiB",
        "available": "171.9GiB",
        "used_size": "95.82GiB",
        "leader_count": 744,
        "leader_weight": 1,
        "leader_score": 744,
        "leader_size": 69347,
        "region_count": 2222,
        "region_weight": 1,
        "region_score": 483825.71011686197,
        "region_size": 206588,
        "slow_score": 1,
        "slow_trend": {
          "cause_value": 250012.09166666667,
          "cause_rate": 0,
          "result_value": 33.5,
          "result_rate": 0
        },
        "start_ts": "2023-12-15T00:40:40+08:00",
        "last_heartbeat_ts": "2024-01-11T10:53:11.831615481+08:00",
        "uptime": "658h12m31.831615481s"
      }
    }
  ]
}
```
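To double-check a reading like this, the `store` output can be compared against the TiKV addresses that `tiup cluster display` lists. The following is a minimal Python sketch, assuming you have saved the pd-ctl JSON output as text and copied the expected addresses by hand; `compare_stores` and its inputs are hypothetical helpers, not part of TiUP or pd-ctl.

```python
import json


def compare_stores(store_json: str, expected_addrs: set[str]) -> dict:
    """Compare the stores PD reports with the TiKV addresses we expect.

    store_json: JSON text printed by pd-ctl's `store` command.
    expected_addrs: TiKV addresses copied from `tiup cluster display`
    (a hypothetical, hand-typed input).
    """
    data = json.loads(store_json)
    # Map each reported store address to its state_name ("Up", "Down", ...).
    pd_stores = {
        s["store"]["address"]: s["store"].get("state_name", "")
        for s in data.get("stores", [])
    }
    return {
        "count": data.get("count", len(pd_stores)),
        # Stores PD still reports but the deployed topology no longer has.
        "extra_in_pd": sorted(set(pd_stores) - expected_addrs),
        # Topology nodes that PD does not report at all.
        "missing_in_pd": sorted(expected_addrs - set(pd_stores)),
        # Any reported store whose state is not "Up".
        "not_up": sorted(a for a, state in pd_stores.items() if state != "Up"),
    }
```

If all three lists come back empty for the output above, PD and the topology agree, which supports the "monitoring issue" reading.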

As I recall, those stores showed `"state_name": "Down"`.

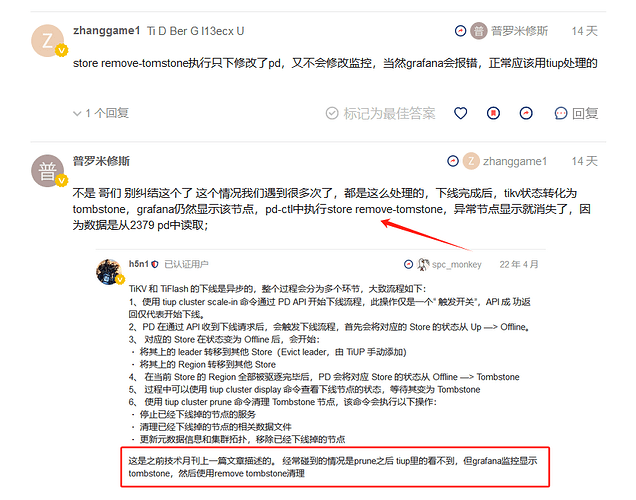

I ran `store delete 14493` and then `store remove-tombstone`.

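This isn't a problem. PD simply retains the records of offline (scaled-in) stores, so monitoring picks them up and displays them; they are kept only as a record of how many nodes have been scaled in over time. The methods mentioned above are the ones that delete this information from PD.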
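The reply's point can be illustrated with a toy model: PD keeps a record for each scaled-in store in the Tombstone state, so a per-state count (the kind of number a store-status monitoring panel shows) includes those records until `store remove-tombstone` drops them. This is a simplified Python sketch, not PD's actual code; the `Store` type, both functions, and any sample IDs or addresses you feed it are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Store:
    # Hypothetical, simplified view of a PD store record.
    store_id: int
    address: str
    state: str  # e.g. "Up", "Offline", "Tombstone"


def state_counts(stores: list[Store]) -> dict[str, int]:
    """Count stores per state, roughly what a store-status panel would report."""
    return dict(Counter(s.state for s in stores))


def remove_tombstone(stores: list[Store]) -> list[Store]:
    """Model of `store remove-tombstone`: drop every record in the Tombstone state."""
    return [s for s in stores if s.state != "Tombstone"]
```

With three Up stores and two Tombstone records, `state_counts` reports both states; after `remove_tombstone` only the three Up stores remain, which matches the dashboard returning to normal once the tombstones were removed.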

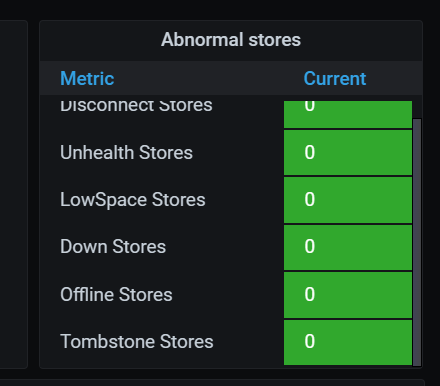


It's back to normal now.

How did you get it back to normal?

Run:

```shell
tiup ctl:v7.5.0 pd -u http://10.10.10.211:2379 -i
```

to enter the interactive console, then run:

```
store remove-tombstone
```
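The same steps can also be scripted. pd-ctl accepts a command directly on the command line (single-command mode) instead of opening the `-i` console. The Python sketch below assumes `tiup` is on `PATH` and reuses the PD address from this thread; `pd_ctl_argv` and `run_pd_ctl` are hypothetical helper names.

```python
import subprocess


def pd_ctl_argv(pd_url: str, *command: str, version: str = "v7.5.0") -> list[str]:
    """Build the argv for one pd-ctl command run through TiUP (no shell involved)."""
    return ["tiup", f"ctl:{version}", "pd", "-u", pd_url, *command]


def run_pd_ctl(pd_url: str, *command: str, version: str = "v7.5.0") -> str:
    """Run one pd-ctl command and return its stdout.

    Raises subprocess.CalledProcessError if the command exits non-zero.
    """
    result = subprocess.run(
        pd_ctl_argv(pd_url, *command, version=version),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

For example, `run_pd_ctl("http://10.10.10.211:2379", "store", "remove-tombstone")` corresponds to typing `store remove-tombstone` in the console; passing the arguments as a list avoids shell-quoting issues.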

Just run the prune command (for example, `tiup cluster prune <cluster-name>`).

This topic was automatically closed 60 days after the last reply. New replies are no longer allowed.