【TiDB Environment】Production

【TiDB Version】Upgraded from v5 to v7.1.2

【Reproduction Path】Reproducible with either tiup upgrade on the cluster or tiup restart -R prometheus

【Problem: Symptoms and Impact】

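1. During the cluster upgrade, essentially all components were upgraded successfully. At the final step, restarting node_exporter, one node failed and the upgrade exited.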

2. The dashboard shows the cluster version and the tidb, pd, and tikv versions as v7.

3. tiup display still shows the cluster version as v5.

4. Repeated attempts with tiup restart -R prometheus all time out while restarting node_exporter:

Error: failed to start: failed to start: a.b.c.x7 node_exporter-9100.service, please check the instance's log() for more detail.: timed out waiting for port 9100 to be started after 2m0s

5. The end of the upgrade output, including the final error, is as follows:

Upgrading component tidb

Restarting instance a.b.c.x1:4000

Restart instance a.b.c.x1:4000 success

Restarting instance a.b.c.x2:4000

Restart instance a.b.c.x2:4000 success

Restarting instance a.b.c.x1:4071

Restart instance a.b.c.x1:4071 success

Upgrading component prometheus

Restarting instance a.b.c.x7:9090

Restart instance a.b.c.x7:9090 success

Upgrading component grafana

Restarting instance a.b.c.x7:3000

Restart instance a.b.c.x7:3000 success

Upgrading component alertmanager

Restarting instance a.b.c.x7:9093

Restart instance a.b.c.x7:9093 success

Stopping component node_exporter

Stopping instance a.b.c.x1

Stopping instance a.b.c.x1

Stopping instance a.b.c.x2

Stopping instance a.b.c.x4

Stopping instance a.b.c.x7

Stopping instance a.b.c.x0

Stopping instance a.b.c.x6

Stopping instance a.b.c.x5

Stopping instance a.b.c.x8

Stopping instance a.b.c.x3

Stop a.b.c.x3 success

Stop a.b.c.x5 success

Stop a.b.c.x8 success

Stop a.b.c.x7 success

Stop a.b.c.x6 success

Stop a.b.c.x2 success

Stop a.b.c.x1 success

Stop a.b.c.x4 success

Stop a.b.c.x1 success

Stop a.b.c.x0 success

Stopping component blackbox_exporter

Stopping instance a.b.c.x1

Stopping instance a.b.c.x2

Stopping instance a.b.c.x5

Stopping instance a.b.c.x0

Stopping instance a.b.c.x1

Stopping instance a.b.c.x7

Stopping instance a.b.c.x3

Stopping instance a.b.c.x4

Stopping instance a.b.c.x8

Stopping instance a.b.c.x6

Stop a.b.c.x5 success

Stop a.b.c.x3 success

Stop a.b.c.x8 success

Stop a.b.c.x7 success

Stop a.b.c.x6 success

Stop a.b.c.x4 success

Stop a.b.c.x2 success

Stop a.b.c.x1 success

Stop a.b.c.x1 success

Stop a.b.c.x0 success

Starting component node_exporter

Starting instance a.b.c.x4

Starting instance a.b.c.x5

Starting instance a.b.c.x8

Starting instance a.b.c.x0

Starting instance a.b.c.x6

Starting instance a.b.c.x2

Starting instance a.b.c.x7

Starting instance a.b.c.x1

Starting instance a.b.c.x3

Starting instance a.b.c.x1

Start a.b.c.x5 success

Start a.b.c.x3 success

Start a.b.c.x8 success

Start a.b.c.x1 success

Start a.b.c.x6 success

Start a.b.c.x4 success

Start a.b.c.x2 success

Start a.b.c.x1 success

Start a.b.c.x0 success

Error: failed to start: a.b.c.x7 node_exporter-9100.service, please check the instance's log() for more detail.: timed out waiting for port 9100 to be started after 2m0s

On the node named in the error (a.b.c.x7), running systemctl restart node_exporter-9100 manually works without any problem.

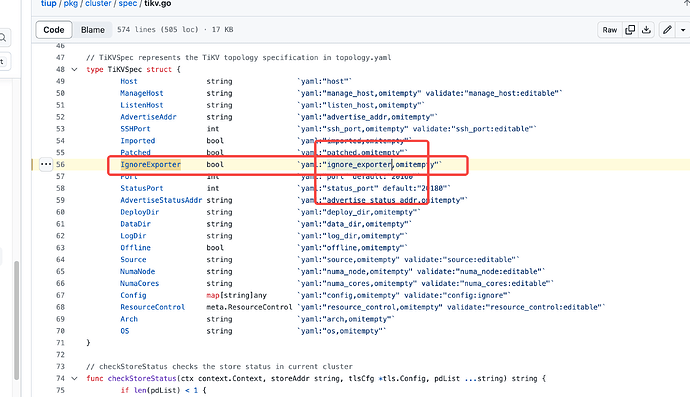
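Since the service restarts cleanly by hand while tiup still reports a timeout on port 9100, one thing worth ruling out is whether anything is actually accepting connections on that port. Below is a minimal Python sketch of a "wait for port" probe. It is only an illustration: `wait_for_port` is a hypothetical helper, not part of tiup, and it probes over the network from wherever it is run. I am not certain how tiup performs its own check, so a success here does not prove tiup's check would pass.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 120.0,
                  interval: float = 1.0) -> bool:
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass.

    Returns True as soon as one connection attempt succeeds, False otherwise.
    The 120-second default mirrors the "2m0s" in the error message; that
    mapping is an assumption made for illustration only.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError (refused, unreachable,
            # timed out, name resolution failure) when the port is not open.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Example call (replace the masked address with the real one):
#   wait_for_port("a.b.c.x7", 9100)
```

If this returns True right after a manual `systemctl restart node_exporter-9100` but tiup still times out, the difference is more likely in how tiup starts or detects the service (unit file, scripts, or paths on x7) than in node_exporter itself.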

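Suspicion: this cluster was originally deployed with ansible (starting at v2, then v3, then on tiup through v4 and v5, and now v7), so the bin and script directories on the failing node (x7) may not follow the standard layout.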