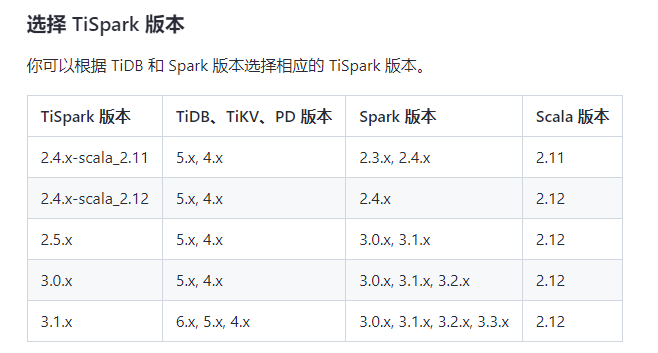

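TiDB version: 7.1.2

TiSpark version: `tispark-assembly-3.3_2.12-3.2.2.jar`

Spark version: 3.3.1

I ran an `INSERT INTO ... SELECT` statement three times. Only one run succeeded; the other two failed with the following error: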

operating ExecuteStatement: org.apache.spark.SparkException: Job aborted due to stage failure: Task 1 in stage 46.0 failed 4 times, most recent failure: Lost task 1.3 in stage 46.0 (TID 2248) (hadoop-0003 executor 2): org.tikv.common.exception.TiBatchWriteException: Execution exception met.

at org.tikv.txn.TwoPhaseCommitter.doPrewriteSecondaryKeys(TwoPhaseCommitter.java:308)

at org.tikv.txn.TwoPhaseCommitter.prewriteSecondaryKeys(TwoPhaseCommitter.java:259)

at com.pingcap.tispark.utils.TwoPhaseCommitHepler.$anonfun$prewriteSecondaryKeyByExecutors$1(TwoPhaseCommitHepler.scala:102)

at com.pingcap.tispark.utils.TwoPhaseCommitHepler.$anonfun$prewriteSecondaryKeyByExecutors$1$adapted(TwoPhaseCommitHepler.scala:90)

at org.apache.spark.rdd.RDD.$anonfun$foreachPartition$2(RDD.scala:1011)

at org.apache.spark.rdd.RDD.$anonfun$foreachPartition$2$adapted(RDD.scala:1011)

at org.apache.spark.SparkContext.$anonfun$runJob$5(SparkContext.scala:2268)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:136)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:548)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1504)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:551)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.util.concurrent.ExecutionException: org.tikv.common.exception.TiBatchWriteException: > max retry number 3, oldRegion={Region[9855557] ConfVer[7425] Version[419822] Store[2853733] KeyRange[t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0005\377\000M\000A\0000\0002\377\0000\0009\000G\0006\377\0004\000J\000\000\000\000\373]:[t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0008\377\0007\0002\0003\0009\377\0006\0009\0003\0007\377\0006\000Y\000\000\000\000\373]}, currentRegion={Region[9857771] ConfVer[7425] Version[419823] Store[2853733] KeyRange[t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0005\377\000M\000A\0000\0002\377\0000\0009\000G\0006\377\0004\000J\000\000\000\000\373]:[t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0005\377\000M\000A\0000\0005\377\000D\000A\000B\0008\377\0005\000Y\000\000\000\000\373]}

at java.util.concurrent.FutureTask.report(FutureTask.java:122)

at java.util.concurrent.FutureTask.get(FutureTask.java:192)

at org.tikv.txn.TwoPhaseCommitter.doPrewriteSecondaryKeys(TwoPhaseCommitter.java:285)

… 14 more

Caused by: org.tikv.common.exception.TiBatchWriteException: > max retry number 3, oldRegion={Region[9855557] ConfVer[7425] Version[419822] Store[2853733] KeyRange[t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0005\377\000M\000A\0000\0002\377\0000\0009\000G\0006\377\0004\000J\000\000\000\000\373]:[t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0008\377\0007\0002\0003\0009\377\0006\0009\0003\0007\377\0006\000Y\000\000\000\000\373]}, currentRegion={Region[9857771] ConfVer[7425] Version[419823] Store[2853733] KeyRange[t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0005\377\000M\000A\0000\0002\377\0000\0009\000G\0006\377\0004\000J\000\000\000\000\373]:[t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0005\377\000M\000A\0000\0005\377\000D\000A\000B\0008\377\0005\000Y\000\000\000\000\373]}

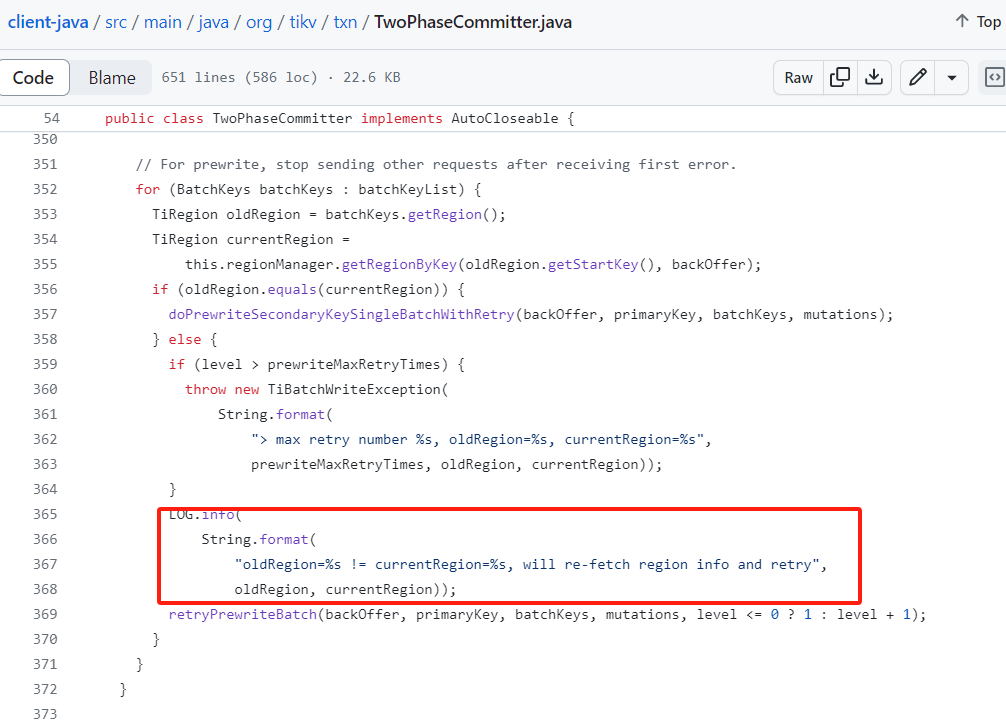

at org.tikv.txn.TwoPhaseCommitter.doPrewriteSecondaryKeysInBatchesWithRetry(TwoPhaseCommitter.java:361)

at org.tikv.txn.TwoPhaseCommitter.retryPrewriteBatch(TwoPhaseCommitter.java:390)

at org.tikv.txn.TwoPhaseCommitter.doPrewriteSecondaryKeysInBatchesWithRetry(TwoPhaseCommitter.java:369)

at org.tikv.txn.TwoPhaseCommitter.retryPrewriteBatch(TwoPhaseCommitter.java:390)

at org.tikv.txn.TwoPhaseCommitter.doPrewriteSecondaryKeysInBatchesWithRetry(TwoPhaseCommitter.java:369)

at org.tikv.txn.TwoPhaseCommitter.retryPrewriteBatch(TwoPhaseCommitter.java:390)

at org.tikv.txn.TwoPhaseCommitter.doPrewriteSecondaryKeysInBatchesWithRetry(TwoPhaseCommitter.java:369)

at org.tikv.txn.TwoPhaseCommitter.retryPrewriteBatch(TwoPhaseCommitter.java:390)

at org.tikv.txn.TwoPhaseCommitter.doPrewriteSecondaryKeysInBatchesWithRetry(TwoPhaseCommitter.java:369)

at org.tikv.txn.TwoPhaseCommitter.lambda$doPrewriteSecondaryKeys$0(TwoPhaseCommitter.java:292)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

… 3 more
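The `oldRegion`/`currentRegion` key ranges in the exception are TiDB index keys printed with 3-digit octal escapes. A small Python sketch like the one below can decode them to see which table and index the failing prewrite was touching. It is only a diagnostic aid (not part of TiSpark or TiDB) and rests on assumptions: the standard TiDB index-key layout `t{tableID}_i{indexID}{encodedValues}`, int64 IDs stored big-endian with the sign bit flipped, and string values stored with the memcomparable encoding (8-byte groups, each followed by a marker byte `0xFF - padding`). The readable `as_text` output additionally assumes the indexed column's collation produces a 2-byte-per-character sort key with a zero high byte for these ASCII characters.

```python
import re

# Keys copied verbatim from the "> max retry number 3" message above.
OLD_START = r"t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0005\377\000M\000A\0000\0002\377\0000\0009\000G\0006\377\0004\000J\000\000\000\000\373"
OLD_END = r"t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0008\377\0007\0002\0003\0009\377\0006\0009\0003\0007\377\0006\000Y\000\000\000\000\373"
CUR_END = r"t\200\000\000\000\000\020\262\335_i\200\000\000\000\000\000\000\001\001\0009\0001\0001\0001\377\0000\0001\0000\0005\377\000M\000A\0000\0005\377\000D\000A\000B\0008\377\0005\000Y\000\000\000\000\373"

SIGN_MASK = 1 << 63
BYTES_FLAG = 0x01  # assumed TiDB datum flag for memcomparable-encoded bytes


def unescape(s: str) -> bytes:
    """Turn a key printed with 3-digit octal escapes (e.g. \\200) into raw bytes."""
    out = bytearray()
    i = 0
    while i < len(s):
        if s[i] == "\\" and re.fullmatch(r"[0-7]{3}", s[i + 1:i + 4]):
            out.append(int(s[i + 1:i + 4], 8))
            i += 4
        else:
            out.append(ord(s[i]))
            i += 1
    return bytes(out)


def decode_int(b: bytes) -> int:
    """Decode a comparable int64: big-endian with the sign bit flipped."""
    v = int.from_bytes(b, "big") ^ SIGN_MASK
    return v - (1 << 64) if v >= SIGN_MASK else v


def decode_memcmp_bytes(b: bytes) -> tuple[bytes, bytes]:
    """Decode 8-byte groups; each marker byte is 0xFF minus the padding count."""
    data = bytearray()
    i = 0
    while True:
        group, marker = b[i:i + 8], b[i + 8]
        pad = 0xFF - marker
        data += group[:8 - pad]
        i += 9
        if pad:  # a padded group ends the value
            return bytes(data), b[i:]


def decode_index_key(key: bytes):
    """Split t{table_id}_i{index_id}{values} into its parts (bytes values only)."""
    assert key[:1] == b"t" and key[9:11] == b"_i", "not an index key"
    table_id = decode_int(key[1:9])
    index_id = decode_int(key[11:19])
    rest, values = key[19:], []
    while rest and rest[0] == BYTES_FLAG:
        value, rest = decode_memcmp_bytes(rest[1:])
        values.append(value)
    return table_id, index_id, values


def as_text(sort_key: bytes) -> str:
    """ASSUMPTION: 2-byte collation weights whose high byte is 0 for ASCII."""
    return sort_key[1::2].decode("ascii")


for name, k in [("old start", OLD_START), ("old end", OLD_END), ("current end", CUR_END)]:
    table_id, index_id, values = decode_index_key(unescape(k))
    print(f"{name}: table_id={table_id} index_id={index_id} value={as_text(values[0])}")
```

With these keys, all three decode to table ID 1094365 and index ID 1. The old region spans `91110105MA0209G64J` to `91110108723969376Y`, while the current region spans `91110105MA0209G64J` to `91110105MA05DAB85Y`. Because the current range is a prefix of the old one, and `Version` went from 419822 to 419823 while `ConfVer` stayed at 7425, the pattern is consistent with (though not proof of) this index region being split while TiSpark was retrying the secondary-key prewrite. The table itself can be looked up with `SELECT table_schema, table_name FROM information_schema.tables WHERE tidb_table_id = 1094365;`.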