[TiDB environment] Production

[TiDB version] v7.1.2

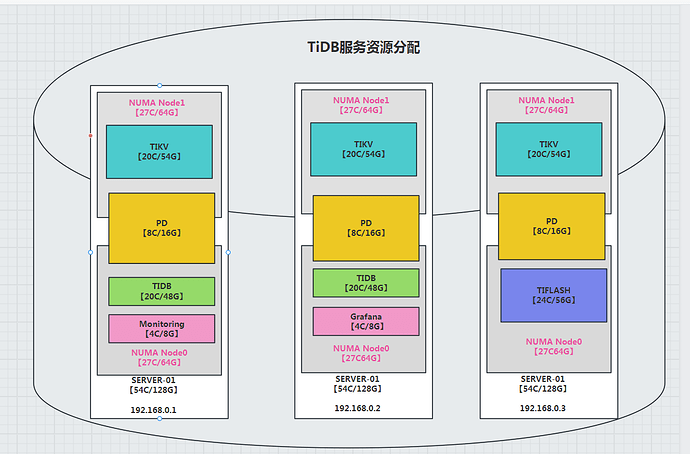

We have prepared 3 physical machines for the production environment.

Each server has 54 cores, 128 GB of RAM, and 2 NUMA nodes.

Planned resource allocation per instance:

TiDB: 20C/48G

PD: 8C/16G

TiKV: 20C/54G

TiFlash: 24C/54G

Monitoring: 4C/8G

Grafana: 4C/8G

Number of instances per component:

TiDB: 2

PD: 3

TiKV: 3

TiFlash: 1

Monitoring: 1

Grafana: 1

The plan is a hybrid deployment across the 3 machines, isolating the components with NUMA binding plus cgroup limits.
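As a quick sanity check on the numbers above, the planned allocation can be summed against total cluster capacity. This is only a minimal sketch: the figures are copied from the lists above, and the names `PER_INSTANCE`, `COUNTS`, and `cluster_totals` are made up for illustration.

```python
# Planned per-instance allocation as (cores, memory in GB), copied from the post.
PER_INSTANCE = {
    "TiDB": (20, 48),
    "PD": (8, 16),
    "TiKV": (20, 54),
    "TiFlash": (24, 54),
    "Monitoring": (4, 8),
    "Grafana": (4, 8),
}

# Planned instance counts, copied from the post.
COUNTS = {"TiDB": 2, "PD": 3, "TiKV": 3, "TiFlash": 1, "Monitoring": 1, "Grafana": 1}

# Three physical machines, each with 54 cores and 128 GB of RAM.
HOSTS, HOST_CORES, HOST_MEM_GB = 3, 54, 128


def cluster_totals():
    """Return (requested_cores, requested_mem_gb) summed over all instances."""
    cores = sum(PER_INSTANCE[name][0] * n for name, n in COUNTS.items())
    mem = sum(PER_INSTANCE[name][1] * n for name, n in COUNTS.items())
    return cores, mem


if __name__ == "__main__":
    cores, mem = cluster_totals()
    print(f"CPU: {cores} of {HOSTS * HOST_CORES} cores requested")
    print(f"Memory: {mem} of {HOSTS * HOST_MEM_GB} GB requested")
```

With these figures the plan requests 156 of 162 cores and 376 of 384 GB cluster-wide, so very little is left over for the operating system, page cache, and node/blackbox exporters.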

Deployment diagram:

Topology file:

    # Global variables are applied to all deployments and used as the default value of
    # the deployments if a specific deployment value is missing.
    global:
      user: "tidb"
      ssh_port: 22
      deploy_dir: "/home/tidb/tidb-deploy"
      data_dir: "/home/tidb/tidb-data"

    monitored:
      node_exporter_port: 9100
      blackbox_exporter_port: 9115

    server_configs:
      tidb:
        log.slow-threshold: 300
        binlog.enable: false
        binlog.ignore-error: false
        performance.max-procs: 20
      tikv:
        readpool.storage.use-unified-pool: false
        readpool.coprocessor.use-unified-pool: true
      pd:
        schedule.leader-schedule-limit: 4
        schedule.region-schedule-limit: 2048
        schedule.replica-schedule-limit: 64

    pd_servers:
      - host: 192.168.0.1
        resource_control:
          memory_limit: "16G"
          cpu_quota: "800%"
      - host: 192.168.0.2
        resource_control:
          memory_limit: "16G"
          cpu_quota: "800%"
      - host: 192.168.0.3
        resource_control:
          memory_limit: "16G"
          cpu_quota: "800%"

    tidb_servers:
      - host: 192.168.0.1
        numa_node: "0"
        resource_control:
          memory_limit: "48G"
          cpu_quota: "2000%"
      - host: 192.168.0.2
        numa_node: "0"
        resource_control:
          memory_limit: "48G"
          cpu_quota: "2000%"

    tikv_servers:
      - host: 192.168.0.1
        numa_node: "1"
        resource_control:
          memory_limit: "54G"
          cpu_quota: "2000%"
      - host: 192.168.0.2
        numa_node: "1"
        resource_control:
          memory_limit: "54G"
          cpu_quota: "2000%"
      - host: 192.168.0.3
        numa_node: "1"
        resource_control:
          memory_limit: "54G"
          cpu_quota: "2000%"

    tiflash_servers:
      - host: 192.168.0.3
        numa_node: "0"
        resource_control:
          memory_limit: "56G"
          cpu_quota: "2400%"

    monitoring_servers:
      - host: 192.168.0.1
        numa_node: "0"
        resource_control:
          memory_limit: "8G"
          cpu_quota: "400%"

    grafana_servers:
      - host: 192.168.0.2
        numa_node: "0"
        resource_control:
          memory_limit: "8G"
          cpu_quota: "400%"
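To make the placement in the topology file easier to review, the limits can be totaled per host and per NUMA node. The sketch below transcribes the `cpu_quota` and `memory_limit` values by hand (800% is treated as 8 cores, and so on). The helper names are hypothetical, and the even split of each host into two 27-core/64 GB NUMA nodes is an assumption, since the post does not give the per-node layout.

```python
from collections import defaultdict

# (component, host, numa_node or None, cpu_quota in cores, memory_limit in GB),
# transcribed by hand from the topology file in the post.
INSTANCES = [
    ("pd", "192.168.0.1", None, 8, 16),
    ("pd", "192.168.0.2", None, 8, 16),
    ("pd", "192.168.0.3", None, 8, 16),
    ("tidb", "192.168.0.1", 0, 20, 48),
    ("tidb", "192.168.0.2", 0, 20, 48),
    ("tikv", "192.168.0.1", 1, 20, 54),
    ("tikv", "192.168.0.2", 1, 20, 54),
    ("tikv", "192.168.0.3", 1, 20, 54),
    ("tiflash", "192.168.0.3", 0, 24, 56),
    ("monitoring", "192.168.0.1", 0, 4, 8),
    ("grafana", "192.168.0.2", 0, 4, 8),
]

HOST_CORES, HOST_MEM_GB = 54, 128
# Assumption (not stated in the post): the two NUMA nodes split each host evenly.
NODE_CORES, NODE_MEM_GB = HOST_CORES // 2, HOST_MEM_GB // 2


def totals_by(key_fn):
    """Sum (cores, mem_gb) over instances grouped by key_fn; skip keys that are None."""
    totals = defaultdict(lambda: [0, 0])
    for inst in INSTANCES:
        key = key_fn(inst)
        if key is None:
            continue
        totals[key][0] += inst[3]
        totals[key][1] += inst[4]
    return {key: tuple(value) for key, value in totals.items()}


def per_host():
    """Totals per host, including the PD instances that have no numa_node."""
    return totals_by(lambda inst: inst[1])


def per_numa():
    """Totals per (host, numa_node); instances without numa_node are excluded."""
    return totals_by(lambda inst: None if inst[2] is None else (inst[1], inst[2]))


if __name__ == "__main__":
    for host, (cores, mem) in sorted(per_host().items()):
        print(f"{host}: {cores}/{HOST_CORES} cores, {mem}/{HOST_MEM_GB} GB")
    for (host, node), (cores, mem) in sorted(per_numa().items()):
        print(f"{host} node {node}: {cores}/{NODE_CORES} cores, {mem}/{NODE_MEM_GB} GB")
```

By this count every host is limited to 52 of 54 cores and 126 of 128 GB. Under the even-split assumption, node 0 carries 24C/56G and node 1 carries 20C/54G on each host, while the PD instances (8C/16G each) have no `numa_node` and can be scheduled on either node. Note also that the topology sets the TiFlash `memory_limit` to 56G, whereas the planned allocation lists 54G.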

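Could the experts here take a look and tell me whether this plan is reasonable, and whether you have any suggestions for optimization or adjustment? Thanks.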