【TiDB Environment】Production / Test / PoC

Production

【TiDB Version】

v7.2.0

【Reproduction Path】What operations led to the problem

【Problem Encountered: Symptoms and Impact】

Problem 1: After setting TiFlash replicas at the database level, replication progress stalls and errors are logged

We planned to build TiFlash replicas for the whole database:

ALTER DATABASE db SET TIFLASH REPLICA 1;

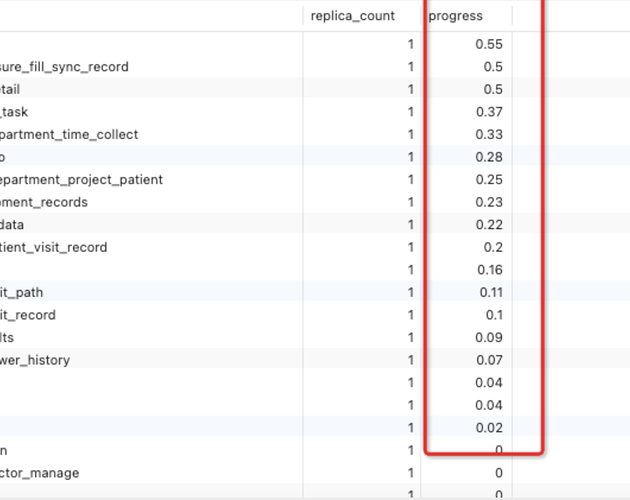

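We then used the following SQL to check replication progress. After about 40 minutes, roughly 57 tables had been replicated. Querying again after that showed that replication had stalled and the progress no longer moved.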

select count(*) from information_schema.tiflash_replica where progress !=1 ;

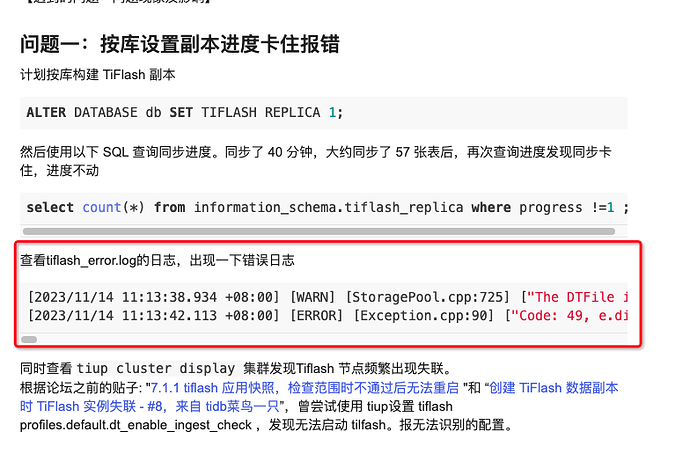

Checking tiflash_error.log showed the following errors:

[2023/11/14 11:13:38.934 +08:00] [WARN] [StoragePool.cpp:725] ["The DTFile is already exists, continute to acquire another ID. [call=std::tuple<String, PageIdU64> DB::DM::DeltaMergeStore::preAllocateIngestFile()][path=/tiflash-data/data/t_65415/stable] [id=2450]"] [source=db_7073.t_65415] [thread_id=857]

[2023/11/14 11:13:42.113 +08:00] [ERROR] [Exception.cpp:90] ["Code: 49, e.displayText() = DB::Exception: Check compare(range.getEnd(), ext_file.range.getEnd()) >= 0 failed, range.toDebugString() = [96104,98759), ext_file.range.toDebugString() = [96104,98760), e.what() = DB::Exception, Stack trace:\n\n\n 0x1c349ae\tDB::Exception::Exception(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, int) [tiflash+29575598]\n \tdbms/src/Common/Exception.h:46\n 0x73c771c\tDB::DM::DeltaMergeStore::ingestFiles(std::__1::shared_ptr<DB::DM::DMContext> const&, DB::DM::RowKeyRange const&, std::__1::vector<DB::DM::ExternalDTFileInfo, std::__1::allocator<DB::DM::ExternalDTFileInfo> > const&, bool) [tiflash+121403164]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeStore_Ingest.cpp:558\n 0x7ec2c34\tDB::DM::DeltaMergeStore::ingestFiles(DB::Context const&, DB::Settings const&, DB::DM::RowKeyRange const&, std::__1::vector<DB::DM::ExternalDTFileInfo, std::__1::allocator<DB::DM::ExternalDTFileInfo> > const&, bool) [tiflash+132918324]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeStore.h:312\n 0x7f6bfe0\tvoid DB::KVStore::checkAndApplyPreHandledSnapshot<DB::RegionPtrWithSnapshotFiles>(DB::RegionPtrWithSnapshotFiles const&, DB::TMTContext&) [tiflash+133611488]\n \tdbms/src/Storages/Transaction/ApplySnapshot.cpp:140\n 0x7f6a5a6\tvoid DB::KVStore::applyPreHandledSnapshot<DB::RegionPtrWithSnapshotFiles>(DB::RegionPtrWithSnapshotFiles const&, DB::TMTContext&) [tiflash+133604774]\n \tdbms/src/Storages/Transaction/ApplySnapshot.cpp:446\n 0x7fe6721\tApplyPreHandledSnapshot [tiflash+134113057]\n \tdbms/src/Storages/Transaction/ProxyFFI.cpp:664\n 0x7fc7cc26809f\t_$LT$engine_store_ffi..observer..TiFlashObserver$LT$T$C$ER$GT$$u20$as$u20$raftstore..coprocessor..ApplySnapshotObserver$GT$::post_apply_snapshot::h9cf73b076a6be5ca [libtiflash_proxy.so+24293535]\n 
0x7fc7cd284eed\traftstore::store::worker::region::Runner$LT$EK$C$R$C$T$GT$::handle_pending_applies::he8dd9bcf803128c3 [libtiflash_proxy.so+41189101]\n 0x7fc7cc876170\tyatp::task::future::RawTask$LT$F$GT$::poll::hd12828196a3b1f60 [libtiflash_proxy.so+30642544]\n 0x7fc7ce1cb08b\t_$LT$yatp..task..future..Runner$u20$as$u20$yatp..pool..runner..Runner$GT$::handle::h0559c073015890dd [libtiflash_proxy.so+57204875]\n 0x7fc7cc36da34\tstd::sys_common::backtrace::__rust_begin_short_backtrace::h0ad613d9614d6b0a [libtiflash_proxy.so+25365044]\n 0x7fc7cc3bd4a8\tcore::ops::function::FnOnce::call_once$u7b$$u7b$vtable.shim$u7d$$u7d$::h9e1cc15e5b5258a1 [libtiflash_proxy.so+25691304]\n 0x7fc7cd8fb7c5\tstd::sys::unix::thread::Thread::new::thread_start::hd2791a9cabec1fda [libtiflash_proxy.so+47966149]\n \t/rustc/96ddd32c4bfb1d78f0cd03eb068b1710a8cebeef/library/std/src/sys/unix/thread.rs:108\n 0x7fc7ca41eea5\tstart_thread [libpthread.so.0+32421]\n 0x7fc7c9d2db0d\tclone [libc.so.6+1043213]"] [source="void DB::ApplyPreHandledSnapshot(DB::EngineStoreServerWrap *, PreHandledSnapshot *) [PreHandledSnapshot = DB::PreHandledSnapshotWithFiles]"] [thread_id=860]
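To make the failed check in the ERROR line easier to read, here is a minimal Python sketch (not part of the original troubleshooting) that pulls the two ranges out of the message and compares their end bounds. The function names are hypothetical, and it assumes the bounds print as plain integers, as they do in this log.

```python
import re

# Excerpt of the ERROR message quoted from tiflash_error.log above.
MESSAGE = (
    "Check compare(range.getEnd(), ext_file.range.getEnd()) >= 0 failed, "
    "range.toDebugString() = [96104,98759), "
    "ext_file.range.toDebugString() = [96104,98760)"
)

# Matches "<name>.toDebugString() = [start,end)" for both ranges in the message.
RANGE_RE = re.compile(
    r"(ext_file\.range|range)\.toDebugString\(\) = \[(\d+),(\d+)\)"
)


def parse_ranges(message):
    """Return a dict mapping 'range' and 'ext_file.range' to (start, end)."""
    return {
        name: (int(start), int(end))
        for name, start, end in RANGE_RE.findall(message)
    }


def end_overshoot(message):
    """Return how far the ingested file's end bound exceeds the target range's end."""
    ranges = parse_ranges(message)
    return ranges["ext_file.range"][1] - ranges["range"][1]


print(parse_ranges(MESSAGE))
print("ext_file end exceeds range end by", end_overshoot(MESSAGE))
```

For the line above, the overshoot is 1: the ingested file's range ends at 98760 while the target range ends at 98759, which is why compare(range.getEnd(), ext_file.range.getEnd()) >= 0 does not hold.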

At the same time, tiup cluster display showed that the TiFlash node was frequently losing its connection to the cluster.

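Following two earlier forum threads, "7.1.1 tiflash 应用快照,检查范围时不通过后无法重启" (TiFlash 7.1.1 cannot restart after a range check fails while applying a snapshot) and "创建 TiFlash 数据副本时 TiFlash 实例失联 - #8,来自 tidb菜鸟一只" (TiFlash instance disconnects while creating a TiFlash replica, reply #8 by tidb菜鸟一只), we tried using tiup to set profiles.default.dt_enable_ingest_check for TiFlash. TiFlash then failed to start and reported an unrecognized configuration item.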

Problem 2: After cancelling replication for the database and restarting the TiFlash node, the node's storage filled up the next day

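After replication stalled with the errors above, we urgently cancelled it with the following SQL and then restarted the TiFlash node. The next day, monitoring showed that the TiFlash node's disk had filled up: more than 800 GB of one of the 1 TB TiFlash disks had been consumed. On inspection, the TiFlash deploy directory contained many core.xxx files, each about 1 GB in size.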

ALTER DATABASE saas SET TIFLASH REPLICA 0;
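To quantify how much space the core.xxx files take, a small Python sketch like the following could be run against the deploy directory. The function name is hypothetical, and the example path /data/tidb/tiflash-9000 is only inferred from the configuration paths in this post, so adjust it to the real deploy directory.

```python
from pathlib import Path


def summarize_core_files(directory):
    """Return (count, total_bytes) for regular files named core.* directly under directory."""
    files = [p for p in Path(directory).glob("core.*") if p.is_file()]
    return len(files), sum(p.stat().st_size for p in files)


# Example usage (the path is an assumption, see the note above):
#   count, total = summarize_core_files("/data/tidb/tiflash-9000")
#   print(f"{count} core files, {total / 1024**3:.1f} GiB")
```

The sketch only looks at the top level of the given directory and does not delete anything.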

【Resource Configuration】

The cluster has two PD nodes, one TiFlash node, five TiKV nodes, and four TiDB nodes.

TiFlash hardware: 32 cores, 64 GB of memory, and two 1 TB SSDs used as TiFlash data storage.

The database to be replicated has a little over 430 tables, two of which are on the order of 20 to 30 million rows.

tiflash.toml configuration file

default_profile = "default"

display_name = "TiFlash"

listen_host = "0.0.0.0"

tcp_port = 9000

tmp_path = "/tiflash-data/tmp"

[flash]

service_addr = "192.168.190.9:3930"

tidb_status_addr = "192.168.190.1:10080,192.168.190.2:10080,192.168.190.8:10080,192.168.190.9:10080,192.168.190.206:10080,192.168.190.207:10080"

[flash.flash_cluster]

cluster_manager_path = "/data/tidb/tiflash-9000/bin/tiflash/flash_cluster_manager"

log = "/data/tidb/tiflash-9000/logs/tiflash_cluster_manager.log"

master_ttl = 60

refresh_interval = 20

update_rule_interval = 5

[flash.proxy]

config = "/data/tidb/tiflash-9000/conf/tiflash-learner.toml"

[logger]

count = 20

errorlog = "/data/tidb/tiflash-9000/logs/tiflash_error.log"

level = "info"

log = "/data/tidb/tiflash-9000/logs/tiflash.log"

size = "1000M"

[profiles]

[profiles.default]

max_memory_usage = 0

[raft]

pd_addr = "192.168.190.1:2379,192.168.190.2:2379"

[status]

metrics_port = 8234

[storage]

[storage.main]

capacity = [912680550400, 912680550400]

dir = ["/tiflash-data", "/data/tidb/tiflash-9000/data"]

tiflash-learner.toml configuration file

log-file = "/data/tidb/tiflash-9000/logs/tiflash_tikv.log"

[raftstore]

apply-pool-size = 4

store-pool-size = 4

[rocksdb]

wal-dir = ""

[security]

ca-path = ""

cert-path = ""

key-path = ""

[server]

addr = "0.0.0.0:20170"

advertise-addr = "192.168.190.9:20170"

advertise-status-addr = "192.168.190.9:20292"

engine-addr = "192.168.190.9:3930"

status-addr = "0.0.0.0:20292"

[storage]

data-dir = "/tiflash-data/flash"

【Attachments: Screenshots / Logs / Monitoring】