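To make things more efficient, please provide as much detailed background as possible when asking a question. Clearly described questions get priority. Please try to include the following: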

- OS version & kernel version:

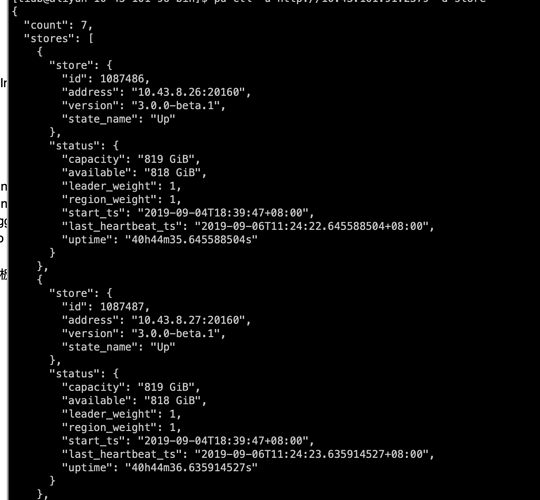

- TiDB version: v3.0.0-beta.1-133-g27a56180b

- Disk type: Alibaba Cloud local SSD

- Cluster node distribution: tidb: 4, pd: 3, tikv: 7

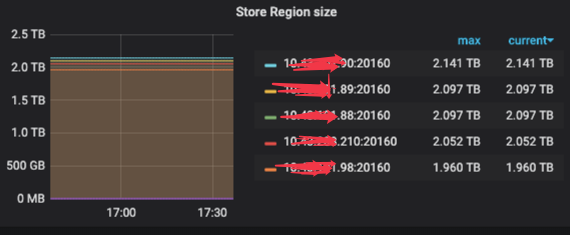

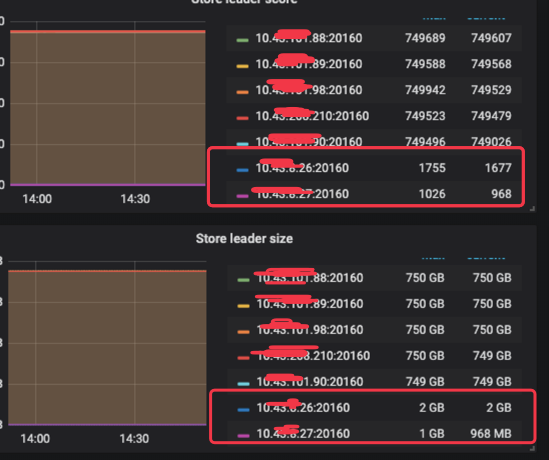

- Data volume, region count, replica count: 2.9T, 45466, 3

- Cluster QPS, .999 duration, read/write ratio: 2k, select: 1min, r1:w5

- Problem description (what I did):

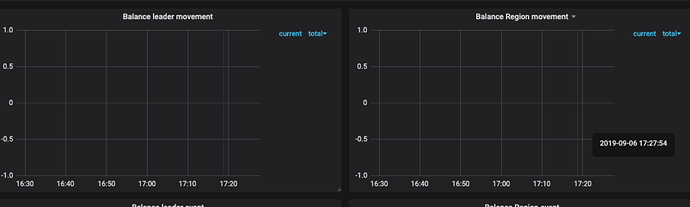

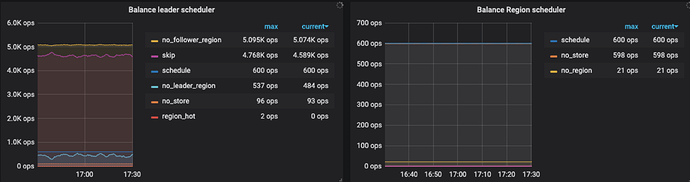

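I scaled out the cluster by adding 2 TiKV nodes. After the scale-out, the data was not rebalanced automatically, and the store count for the two new TiKV nodes is still 0.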
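
In case it helps anyone reproduce the check, here is a rough sketch of how the empty stores could be listed from PD's HTTP API instead of reading them off the screenshots. It assumes the `/pd/api/v1/stores` endpoint returns a `stores` array whose items carry `store.id`, `store.address` and `status.region_count`. The PD address and the sample data below are made up for illustration and are not taken from this cluster.

```python
import json
import urllib.request


def fetch_stores(pd_addr):
    """Fetch the store list from a PD node, e.g. pd_addr='http://127.0.0.1:2379'.

    The address is a placeholder; point it at one of your own PD nodes.
    """
    url = f"{pd_addr}/pd/api/v1/stores"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.load(resp)


def empty_stores(stores_doc):
    """Return (id, address) for every store that reports zero regions.

    A missing region_count is treated as 0, since the field may be
    omitted when it is zero (assumption about the response shape).
    """
    result = []
    for item in stores_doc.get("stores", []):
        status = item.get("status", {})
        if status.get("region_count", 0) == 0:
            store = item.get("store", {})
            result.append((store.get("id"), store.get("address")))
    return result


# Hypothetical sample shaped like the assumed PD response (not real data).
SAMPLE = {
    "count": 3,
    "stores": [
        {"store": {"id": 1, "address": "10.0.0.1:20160"},
         "status": {"region_count": 6495, "leader_count": 2165}},
        {"store": {"id": 8, "address": "10.0.0.8:20160"},
         "status": {"region_count": 0, "leader_count": 0}},
        {"store": {"id": 9, "address": "10.0.0.9:20160"},
         "status": {}},
    ],
}

if __name__ == "__main__":
    for store_id, address in empty_stores(SAMPLE):
        print(f"store {store_id} at {address} has no regions")
```

Against a live cluster, `empty_stores(fetch_stores("http://<pd-host>:2379"))` would replace the sample data.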