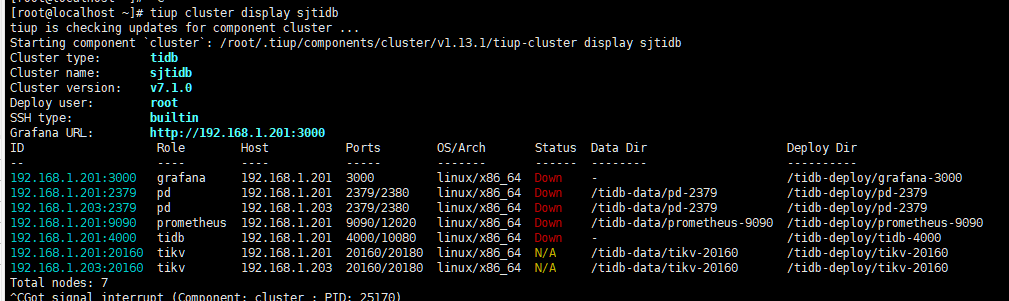

【TiDB environment】Production

【TiDB version】v7.1.0

【Steps to reproduce】Start the cluster

【Problem: symptoms and impact】

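The cluster was scaled in successfully, but starting it now fails with a TiKV error. The TiKV log shows TiKV sending requests to a PD node that was removed during the scale-in (192.168.1.202:2379), yet the cluster status no longer lists that node.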

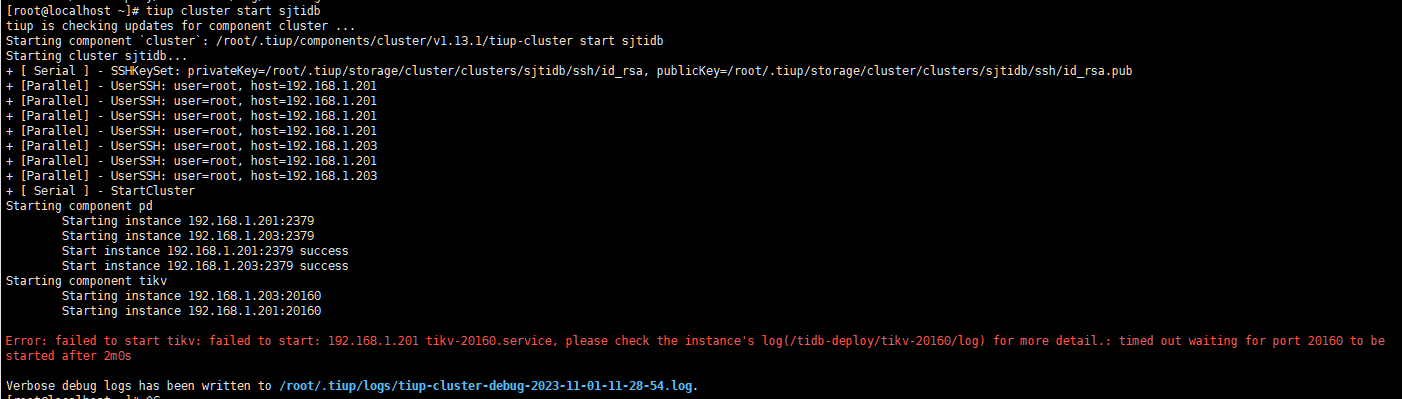

```
[root@localhost ~]# tiup cluster start sjtidb
tiup is checking updates for component cluster ...
Starting component cluster: /root/.tiup/components/cluster/v1.13.1/tiup-cluster start sjtidb
Starting cluster sjtidb...
+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/sjtidb/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/sjtidb/ssh/id_rsa.pub
+ [Parallel] - UserSSH: user=root, host=192.168.1.201
+ [Parallel] - UserSSH: user=root, host=192.168.1.201
+ [Parallel] - UserSSH: user=root, host=192.168.1.201
+ [Parallel] - UserSSH: user=root, host=192.168.1.201
+ [Parallel] - UserSSH: user=root, host=192.168.1.203
+ [Parallel] - UserSSH: user=root, host=192.168.1.201
+ [Parallel] - UserSSH: user=root, host=192.168.1.203
+ [ Serial ] - StartCluster
Starting component pd
Starting instance 192.168.1.201:2379
Starting instance 192.168.1.203:2379
Start instance 192.168.1.201:2379 success
Start instance 192.168.1.203:2379 success
Starting component tikv
Starting instance 192.168.1.203:20160
Starting instance 192.168.1.201:20160

Error: failed to start tikv: failed to start: 192.168.1.201 tikv-20160.service, please check the instance's log(/tidb-deploy/tikv-20160/log) for more detail.: timed out waiting for port 20160 to be started after 2m0s

Verbose debug logs has been written to /root/.tiup/logs/tiup-cluster-debug-2023-11-01-11-28-54.log.
```

```
[2023/11/01 11:26:53.044 +08:00] [INFO] [lib.rs:88] ["Welcome to TiKV"]
[2023/11/01 11:26:53.045 +08:00] [INFO] [lib.rs:93] ["Release Version: 7.1.0"]
[2023/11/01 11:26:53.045 +08:00] [INFO] [lib.rs:93] ["Edition: Community"]
[2023/11/01 11:26:53.045 +08:00] [INFO] [lib.rs:93] ["Git Commit Hash: 0c34464e386940a60f2a2ce279a4ef18c9c6c45b"]
[2023/11/01 11:26:53.045 +08:00] [INFO] [lib.rs:93] ["Git Commit Branch: heads/refs/tags/v7.1.0"]
[2023/11/01 11:26:53.045 +08:00] [INFO] [lib.rs:93] ["UTC Build Time: Unknown (env var does not exist when building)"]
[2023/11/01 11:26:53.045 +08:00] [INFO] [lib.rs:93] ["Rust Version: rustc 1.67.0-nightly (96ddd32c4 2022-11-14)"]
[2023/11/01 11:26:53.045 +08:00] [INFO] [lib.rs:93] ["Enable Features: pprof-fp jemalloc mem-profiling portable sse test-engine-kv-rocksdb test-engine-raft-raft-engine cloud-aws cloud-gcp cloud-azure"]
[2023/11/01 11:26:53.046 +08:00] [INFO] [lib.rs:93] ["Profile: dist_release"]
[2023/11/01 11:26:53.046 +08:00] [INFO] [mod.rs:80] ["cgroup quota: memory=Some(9223372036854771712), cpu=None, cores={13, 14, 5, 3, 15, 9, 2, 0, 4, 12, 1, 11, 7, 6, 8, 10}"]
[2023/11/01 11:26:53.046 +08:00] [INFO] [mod.rs:87] ["memory limit in bytes: 33547878400, cpu cores quota: 16"]
[2023/11/01 11:26:53.046 +08:00] [WARN] [lib.rs:544] ["environment variable TZ is missing, using /etc/localtime"]
[2023/11/01 11:26:53.046 +08:00] [WARN] [server.rs:1511] ["check: kernel"] [err="kernel parameters net.core.somaxconn got 128, expect 32768"]
[2023/11/01 11:26:53.046 +08:00] [WARN] [server.rs:1511] ["check: kernel"] [err="kernel parameters net.ipv4.tcp_syncookies got 1, expect 0"]
[2023/11/01 11:26:53.046 +08:00] [WARN] [server.rs:1511] ["check: kernel"] [err="kernel parameters vm.swappiness got 30, expect 0"]
[2023/11/01 11:26:53.057 +08:00] [INFO] [util.rs:604] ["connecting to PD endpoint"] [endpoints=192.168.1.201:2379]
[2023/11/01 11:26:53.060 +08:00] [INFO] [] ["TCP_USER_TIMEOUT is available. TCP_USER_TIMEOUT will be used thereafter"]
[2023/11/01 11:26:55.061 +08:00] [INFO] [util.rs:566] ["PD failed to respond"] [err="Grpc(RpcFailure(RpcStatus { code: 4-DEADLINE_EXCEEDED, message: "Deadline Exceeded", details: [] }))"] [endpoints=192.168.1.201:2379]
[2023/11/01 11:26:55.061 +08:00] [INFO] [util.rs:604] ["connecting to PD endpoint"] [endpoints=192.168.1.202:2379]
[2023/11/01 11:26:55.062 +08:00] [INFO] [] ["subchannel 0x7f39f364f000 {address=ipv4:192.168.1.202:2379, args=grpc.client_channel_factory=0x7f39f36979b0, grpc.default_authority=192.168.1.202:2379, grpc.initial_reconnect_backoff_ms=1000, grpc.internal.subchannel_pool=0x7f39f36389a0, grpc.keepalive_time_ms=10000, grpc.keepalive_timeout_ms=3000, grpc.max_receive_message_length=-1, grpc.max_reconnect_backoff_ms=5000, grpc.max_send_message_length=-1, grpc.primary_user_agent=grpc-rust/0.10.4, grpc.resource_quota=0x7f39f36b5cc0, grpc.server_uri=dns:///192.168.1.202:2379}: connect failed: {"created":"@1698809215.062233286","description":"Failed to connect to remote host: Connection refused","errno":111,"file":"/rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.10.3+1.44.0-patched/grpc/src/core/lib/iomgr/tcp_client_posix.cc","file_line":200,"os_error":"Connection refused","syscall":"connect","target_address":"ipv4:192.168.1.202:2379"}"]
[2023/11/01 11:26:55.062 +08:00] [INFO] [] ["subchannel 0x7f39f364f000 {address=ipv4:192.168.1.202:2379, args=grpc.client_channel_factory=0x7f39f36979b0, grpc.default_authority=192.168.1.202:2379, grpc.initial_reconnect_backoff_ms=1000, grpc.internal.subchannel_pool=0x7f39f36389a0, grpc.keepalive_time_ms=10000, grpc.keepalive_timeout_ms=3000, grpc.max_receive_message_length=-1, grpc.max_reconnect_backoff_ms=5000, grpc.max_send_message_length=-1, grpc.primary_user_agent=grpc-rust/0.10.4, grpc.resource_quota=0x7f39f36b5cc0, grpc.server_uri=dns:///192.168.1.202:2379}: Retry in 999 milliseconds"]
[2023/11/01 11:26:55.062 +08:00] [INFO] [util.rs:566] ["PD failed to respond"] [err="Grpc(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: "failed to connect to all addresses", details: [] }))"] [endpoints=192.168.1.202:2379]
[2023/11/01 11:26:55.062 +08:00] [INFO] [util.rs:604] ["connecting to PD endpoint"] [endpoints=192.168.1.203:2379]
[2023/11/01 11:26:55.063 +08:00] [INFO] [] ["subchannel 0x7f39f364f400 {address=ipv4:192.168.1.203:2379, args=grpc.client_channel_factory=0x7f39f36979b0, grpc.default_authority=192.168.1.203:2379, grpc.initial_reconnect_backoff_ms=1000, grpc.internal.subchannel_pool=0x7f39f36389a0, grpc.keepalive_time_ms=10000, grpc.keepalive_timeout_ms=3000, grpc.max_receive_message_length=-1, grpc.max_reconnect_backoff_ms=5000, grpc.max_send_message_length=-1, grpc.primary_user_agent=grpc-rust/0.10.4, grpc.resource_quota=0x7f39f36b5cc0, grpc.server_uri=dns:///192.168.1.203:2379}: connect failed: {"created":"@1698809215.063200446","description":"Failed to connect to remote host: Connection refused","errno":111,"file":"/rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.10.3+1.44.0-patched/grpc/src/core/lib/iomgr/tcp_client_posix.cc","file_line":200,"os_error":"Connection refused","syscall":"connect","target_address":"ipv4:192.168.1.203:2379"}"]
[2023/11/01 11:26:55.063 +08:00] [INFO] [] ["subchannel 0x7f39f364f400 {address=ipv4:192.168.1.203:2379, args=grpc.client_channel_factory=0x7f39f36979b0, grpc.default_authority=192.168.1.203:2379, grpc.initial_reconnect_backoff_ms=1000, grpc.internal.subchannel_pool=0x7f39f36389a0, grpc.keepalive_time_ms=10000, grpc.keepalive_timeout_ms=3000, grpc.max_receive_message_length=-1, grpc.max_reconnect_backoff_ms=5000, grpc.max_send_message_length=-1, grpc.primary_user_agent=grpc-rust/0.10.4, grpc.resource_quota=0x7f39f36b5cc0, grpc.server_uri=dns:///192.168.1.203:2379}: Retry in 1000 milliseconds"]
```
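In the TiKV log, TiKV tries 192.168.1.201, 192.168.1.202 and 192.168.1.203 in turn, while tiup started PD only on 201 and 203. A minimal sketch for comparing the PD endpoints TiKV actually tried with the ones its start script passes is below. It assumes the tiup-generated start script lives at `/tidb-deploy/tikv-20160/scripts/run_tikv.sh` and passes PD addresses as `--pd "host:port,..."`, and that the log file is `/tidb-deploy/tikv-20160/log/tikv.log`; both paths and both function names are hypothetical, not part of tiup.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the PD endpoints a tiup-generated TiKV start script
# passes via --pd, one per line. Assumes a line such as:
#   --pd "192.168.1.201:2379,192.168.1.203:2379" \
pd_endpoints_in_script() {
  local script="$1"
  # Take the first quoted --pd value, strip the flag and quotes, split on commas.
  grep -o -- '--pd "[^"]*"' "$script" | head -n 1 \
    | sed -e 's/^--pd "//' -e 's/"$//' | tr ',' '\n'
}

# Hypothetical helper: print the distinct PD endpoints TiKV tried to reach, in the
# order they first appear in its log (fields like endpoints=192.168.1.201:2379).
pd_endpoints_in_log() {
  local log="$1"
  grep -o 'endpoints=[0-9.]*:[0-9]*' "$log" | cut -d= -f2 | awk '!seen[$0]++'
}

# Example usage on the TiKV host (paths are assumptions):
#   pd_endpoints_in_script /tidb-deploy/tikv-20160/scripts/run_tikv.sh
#   pd_endpoints_in_log /tidb-deploy/tikv-20160/log/tikv.log
```

If the script still lists 192.168.1.202:2379 while `tiup cluster display sjtidb` no longer shows it, that would be consistent with the symptom described above. The live PD membership can also be checked with `tiup ctl:v7.1.0 pd -u http://192.168.1.201:2379 member`.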

【Resource configuration】Go to TiDB Dashboard > Cluster Info > Hosts and take a screenshot of this page

【Attachments: screenshots/logs/monitoring】