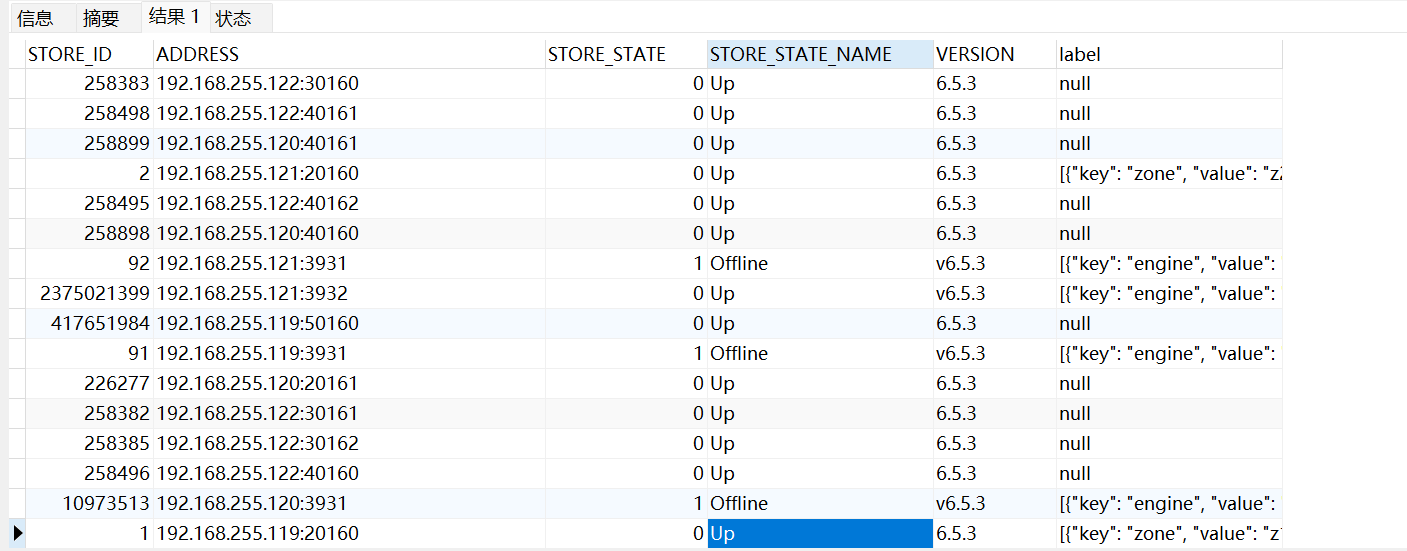

The scale-in did not remove the nodes.

In pd-ctl, many stores are shown in the `Offline` state:

```json
{
  "count": 19,
  "stores": [
    {
      "store": {
        "id": 91,
        "address": "192.168.255.119:3931",
        "labels": [
          {
            "key": "engine",
            "value": "tiflash"
          }
        ],
        "version": "v6.5.3",
        "peer_address": "192.168.255.119:20171",
        "status_address": "192.168.255.119:20293",
        "git_hash": "e63e24991079fff1e5afe03e859f743cbb6cf4a7",
        "start_timestamp": 1694990902,
        "deploy_path": "/deploy/tidb/tiflash-9001/bin/tiflash",
        "last_heartbeat": 1695016186000543038,
        "state_name": "Offline"
      },
      "status": {
        "capacity": "1.718TiB",
        "available": "1.07TiB",
        "used_size": "55.47GiB",
        "leader_count": 0,
        "leader_weight": 1,
        "leader_score": 0,
        "leader_size": 0,
        "region_count": 5088,
        "region_weight": 1,
        "region_score": 1402721.0927894693,
        "region_size": 1152681,
        "learner_count": 5088,
        "slow_score": 1,
        "start_ts": "2023-09-18T06:48:22+08:00",
        "last_heartbeat_ts": "2023-09-18T13:49:46.000543038+08:00",
        "uptime": "7h1m24.000543038s"
      }
    },
    {
      "store": {
        "id": 92,
        "address": "192.168.255.121:3931",
        "labels": [
          {
            "key": "engine",
            "value": "tiflash"
          }
        ],
        "version": "v6.5.3",
        "peer_address": "192.168.255.121:20171",
        "status_address": "192.168.255.121:20293",
        "git_hash": "e63e24991079fff1e5afe03e859f743cbb6cf4a7",
        "start_timestamp": 1694990955,
        "deploy_path": "/deploy/tidb/tiflash-9001/bin/tiflash",
        "last_heartbeat": 1695016328659292069,
        "state_name": "Offline"
      },
      "status": {
        "capacity": "1.718TiB",
        "available": "821.3GiB",
        "used_size": "50.54GiB",
        "leader_count": 0,
        "leader_weight": 1,
        "leader_score": 0,
        "leader_size": 0,
        "region_count": 4361,
        "region_weight": 1,
        "region_score": 1402379.9771483315,
        "region_size": 1111245,
        "learner_count": 4361,
        "slow_score": 1,
        "start_ts": "2023-09-18T06:49:15+08:00",
        "last_heartbeat_ts": "2023-09-18T13:52:08.659292069+08:00",
        "uptime": "7h2m53.659292069s"
      }
```
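Both stores shown are TiFlash stores (`engine: tiflash`) in the `Offline` state that still hold 5088 and 4361 Regions respectively, all of them learner peers. As far as we understand PD, a store only moves from `Offline` to `Tombstone` after all of its Region peers have been migrated elsewhere, so the remaining `region_count` per store is the number to watch. Below is a small Python sketch, assuming the JSON printed by pd-ctl's `store` command has been saved to a file; `offline_stores` and `summarize` are hypothetical helpers, not part of pd-ctl:

```python
import json
from typing import Any


def offline_stores(doc: dict[str, Any]) -> list[tuple[int, str, int]]:
    """Return (id, address, region_count) for every store whose state_name is Offline.

    Assumes the pd-ctl `store` output layout shown above:
    {"count": N, "stores": [{"store": {...}, "status": {...}}, ...]}
    """
    rows = []
    for entry in doc.get("stores", []):
        store = entry.get("store", {})
        status = entry.get("status", {})
        if store.get("state_name") == "Offline":
            rows.append((store["id"], store["address"], status.get("region_count", 0)))
    return rows


def summarize(raw: str) -> str:
    """Parse raw pd-ctl JSON text and report how many Regions each Offline store still holds."""
    rows = offline_stores(json.loads(raw))
    lines = [f"store {sid} ({addr}): {n} regions left" for sid, addr, n in rows]
    # Total across all Offline stores; scale-in is done only when this reaches 0.
    lines.append(f"total regions still on Offline stores: {sum(n for _, _, n in rows)}")
    return "\n".join(lines)
```

For example, `print(summarize(open("stores.json").read()))` (file name hypothetical); running it repeatedly shows whether the counts are still decreasing or the migration has stalled.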