【TiDB Environment】Production

【TiDB Version】5.0.4

【Steps to Reproduce】None

【Resource Configuration】

2 TiFlash machines: 32 256 GiB

TiFlash memory is not limited

tiflash:

logger.count: 5

logger.level: warning

profiles.default.batch_cop_pool_size: 32

profiles.default.cop_pool_size: 32

profiles.default.max_memory_usage: 0

profiles.default.max_memory_usage_for_all_queries: 0

Error during execution:

2023.09.08 11:12:50.149292 [ 27515 ] <Warning> TaskManager: Begin cancel query: 444109362219974897

2023.09.08 11:12:50.149721 [ 27515 ] <Warning> TaskManager: Remaining task in query 444109362219974897 are: [444109362219974897,1]

2023.09.08 11:12:50.150400 [ 27516 ] <Warning> task 1: Begin cancel task: [444109362219974897,1]

2023.09.08 11:12:50.158941 [ 27516 ] <Error> tunnel1+-1: Failed to close tunnel: tunnel1+-1: Code: 0, e.displayText() = DB::Exception: Failed to write err, e.what() = DB::Exception, Stack trace:

0. bin/tiflash/tiflash(StackTrace::StackTrace()+0x15) [0x369c535]

1. bin/tiflash/tiflash(DB::Exception::Exception(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, int)+0x25) [0x36930c5]

2. bin/tiflash/tiflash(DB::MPPTunnel::close(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&)+0x18d) [0x78aeb9d]

3. bin/tiflash/tiflash(DB::MPPTask::cancel(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&)+0x9c) [0x78aed4c]

4. bin/tiflash/tiflash() [0x86b18ee]

5. /lib64/libpthread.so.0(+0x7dd4) [0x7fb76bdc8dd4]

6. /lib64/libc.so.6(clone+0x6c) [0x7fb76b7efeac]

2023.09.08 11:12:50.159010 [ 27516 ] <Warning> task 1: Finish cancel task: [444109362219974897,1]

2023.09.08 11:12:50.159381 [ 27515 ] <Warning> TaskManager: Finish cancel query: 444109362219974897

2023.09.08 11:12:50.197413 [ 27517 ] <Error> task 1: task running meets error DB::Exception: write to tunnel which is already closed. Stack Trace : 0. bin/tiflash/tiflash(StackTrace::StackTrace()+0x15) [0x369c535]

1. bin/tiflash/tiflash(DB::Exception::Exception(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, int)+0x25) [0x36930c5]

2. bin/tiflash/tiflash(DB::MPPTunnel::write(mpp::MPPDataPacket const&, bool)+0x48a) [0x789ac2a]

3. bin/tiflash/tiflash(DB::MPPTunnelSet::write(tipb::SelectResponse&)+0x88) [0x789e598]

4. bin/tiflash/tiflash(DB::StreamingDAGResponseWriter<std::shared_ptr<DB::MPPTunnelSet> >::getEncodeTask(std::vector<DB::Block, std::allocator<DB::Block> >&, tipb::SelectResponse&) const::{lambda()#1}::operator()()+0x3e8) [0x789eca8]

5. bin/tiflash/tiflash(ThreadPool::worker()+0x166) [0x7a28d26]

6. bin/tiflash/tiflash() [0x86b18ee]

7. /lib64/libpthread.so.0(+0x7dd4) [0x7fb76bdc8dd4]

8. /lib64/libc.so.6(clone+0x6c) [0x7fb76b7efeac]

2023.09.08 11:12:50.204287 [ 27517 ] <Error> task 1: Failed to write error DB::Exception: write to tunnel which is already closed. to tunnel: tunnel1+-1: Code: 0, e.displayText() = DB::Exception: write to tunnel which is already closed., e.what() = DB::Exception, Stack trace:

0. bin/tiflash/tiflash(StackTrace::StackTrace()+0x15) [0x369c535]

1. bin/tiflash/tiflash(DB::Exception::Exception(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, int)+0x25) [0x36930c5]

2. bin/tiflash/tiflash(DB::MPPTunnel::write(mpp::MPPDataPacket const&, bool)+0x48a) [0x789ac2a]

3. bin/tiflash/tiflash(DB::MPPTask::writeErrToAllTunnel(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&)+0x9b) [0x78af24b]

4. bin/tiflash/tiflash(DB::MPPTask::runImpl()+0x1079) [0x78b0519]

5. bin/tiflash/tiflash() [0x86b18ee]

6. /lib64/libpthread.so.0(+0x7dd4) [0x7fb76bdc8dd4]

7. /lib64/libc.so.6(clone+0x6c) [0x7fb76b7efeac]

2023.09.08 11:12:50.204348 [ 27517 ] <Error> TaskManager: The task [444109362219974897,1] cannot be found and fail to unregister
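To make the ordering in the log above easier to follow, here is a minimal Python sketch that splits TiFlash log lines into timestamp, thread ID, level, and message, then groups them by thread. The regular expression is only an assumption based on the lines pasted in this post, not an official description of the TiFlash log format, and `parse_log` and `group_by_thread` are hypothetical helper names. In the excerpt, thread 27516 finishes cancelling the task at 11:12:50.159010 and thread 27517 reports "write to tunnel which is already closed" at 11:12:50.197413, so the write errors may be a side effect of the cancellation rather than its cause.

```python
import re
from collections import defaultdict

# Header layout assumed from the lines in this post, e.g.
# "2023.09.08 11:12:50.149292 [ 27515 ] <Warning> TaskManager: Begin cancel query: ..."
LOG_HEADER = re.compile(
    r"^(?P<ts>\d{4}\.\d{2}\.\d{2} \d{2}:\d{2}:\d{2}\.\d{6}) "
    r"\[ (?P<tid>\d+) \] <(?P<level>\w+)> (?P<msg>.*)$"
)


def parse_log(lines):
    """Parse log lines into entries; lines without a header (stack frames)
    are attached to the most recent entry."""
    entries = []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # the pasted log has a blank line between records
        match = LOG_HEADER.match(line)
        if match:
            entries.append({
                "ts": match.group("ts"),
                "thread": int(match.group("tid")),
                "level": match.group("level"),
                "msg": match.group("msg"),
                "frames": [],
            })
        elif entries:
            entries[-1]["frames"].append(line)
    return entries


def group_by_thread(entries):
    """Group parsed entries by thread ID, keeping their original order."""
    grouped = defaultdict(list)
    for entry in entries:
        grouped[entry["thread"]].append(entry)
    return dict(grouped)


# A few lines copied verbatim from the log in this post.
SAMPLE = [
    "2023.09.08 11:12:50.149292 [ 27515 ] <Warning> TaskManager: Begin cancel query: 444109362219974897",
    "",
    "2023.09.08 11:12:50.150400 [ 27516 ] <Warning> task 1: Begin cancel task: [444109362219974897,1]",
    "",
    "2023.09.08 11:12:50.159010 [ 27516 ] <Warning> task 1: Finish cancel task: [444109362219974897,1]",
    "",
    "2023.09.08 11:12:50.197413 [ 27517 ] <Error> task 1: task running meets error DB::Exception: write to tunnel which is already closed. Stack Trace : 0. bin/tiflash/tiflash(StackTrace::StackTrace()+0x15) [0x369c535]",
    "",
    "5. bin/tiflash/tiflash(ThreadPool::worker()+0x166) [0x7a28d26]",
]

if __name__ == "__main__":
    for tid, items in group_by_thread(parse_log(SAMPLE)).items():
        print(f"thread {tid}:")
        for item in items:
            print(f"  {item['ts']} <{item['level']}> {item['msg'][:60]}")
```

Running the same functions over the full `tiflash.log` (for example `parse_log(open(path))`, where `path` is wherever the log lives on your node) gives a per-thread timeline that can be compared against the time the query was cancelled on the TiDB side.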

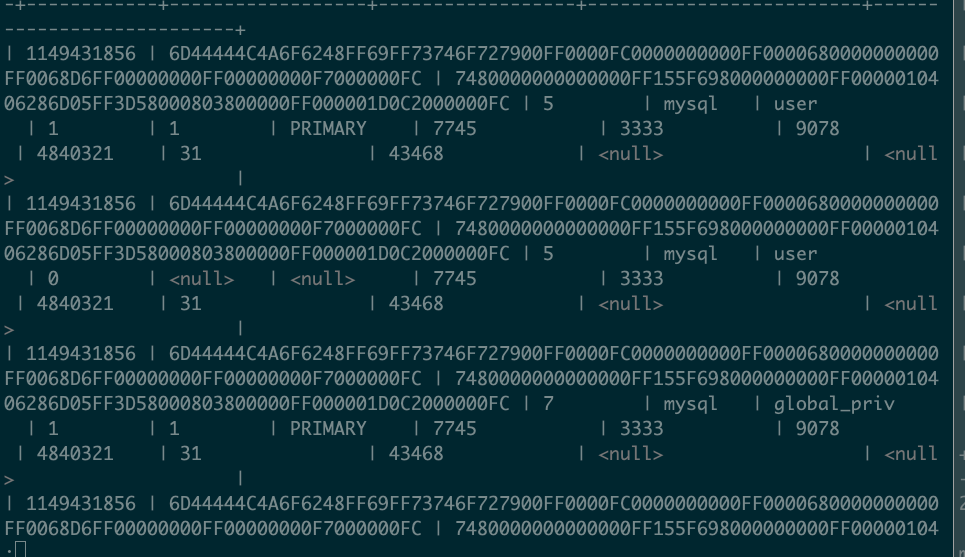

2023.09.08 11:20:42.872443 [ 28 ] <Warning> pingcap.tikv: region {1149431856,7745,3333} find error: peer is not leader for region 1149431856, leader may Some(id: 1679238770 store_id: 23)

2023.09.08 11:25:25.515659 [ 21 ] <Warning> pingcap.tikv: region {1149431856,7745,3333} find error: peer is not leader for region 1149431856, leader may Some(id: 1679241274 store_id: 22)