【 TiDB 使用环境】测试

【 TiDB 版本】6.5

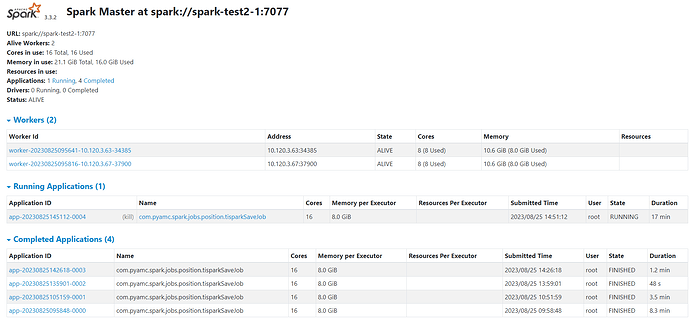

【复现路径】正在用spark改造现有业务,其中一个场景是spark将计算好的数据写入tidb,数据记录大约在800万左右,每条记录10个字段,spark直接写入tidb需要5分钟;寻思用tispark写入应该更快些,另搭建一个简单的spark standalone集群,一个master,2个worker,spark版本3.3.2,tispark的jar包是tispark-assembly-3.3_2.12-3.1.3.jar,写入同一个tidb测试库。

写入5万记录,用48秒;10万记录,1.2分;当数据量增加到50万时,写入报错了。

刚开始用tispak,不知道是哪里用导致的问题,还望有经验的大侠指点下。

【遇到的问题:问题现象及影响】50万数据,看日志分成了37个task,第20个task 报Error reading region,后续接连有其它任务报同样错误

23/08/25 14:53:36 WARN TaskSetManager: Lost task 20.0 in stage 0.0 (TID 20) (10.120.3.63 executor 1): com.pingcap.tikv.exception.TiClientInternalException: Error reading region:

at com.pingcap.tikv.operation.iterator.DAGIterator.doReadNextRegionChunks(DAGIterator.java:191)

at com.pingcap.tikv.operation.iterator.DAGIterator.readNextRegionChunks(DAGIterator.java:168)

at com.pingcap.tikv.operation.iterator.DAGIterator.hasNext(DAGIterator.java:114)

at org.apache.spark.sql.tispark.TiRowRDD$$anon$1.hasNext(TiRowRDD.scala:70)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.columnartorow_nextBatch_0$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anon$1.hasNext(WholeStageCodegenExec.scala:760)

at scala.collection.Iterator$SliceIterator.hasNext(Iterator.scala:268)

at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:460)

at org.apache.spark.shuffle.sort.BypassMergeSortShuffleWriter.write(BypassMergeSortShuffleWriter.java:140)

at org.apache.spark.shuffle.ShuffleWriteProcessor.write(ShuffleWriteProcessor.scala:59)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:99)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:52)

at org.apache.spark.scheduler.Task.run(Task.scala:136)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:548)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1504)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:551)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

Caused by: java.util.concurrent.ExecutionException: com.pingcap.tikv.exception.RegionTaskException: Handle region task failed:

at java.util.concurrent.FutureTask.report(FutureTask.java:122)

at java.util.concurrent.FutureTask.get(FutureTask.java:192)

at com.pingcap.tikv.operation.iterator.DAGIterator.doReadNextRegionChunks(DAGIterator.java:186)

… 20 more

Caused by: com.pingcap.tikv.exception.RegionTaskException: Handle region task failed:

at com.pingcap.tikv.operation.iterator.DAGIterator.process(DAGIterator.java:243)

at com.pingcap.tikv.operation.iterator.DAGIterator.lambda$submitTasks$1(DAGIterator.java:92)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

… 3 more

Caused by: com.pingcap.tikv.exception.GrpcException: retry is exhausted.

at com.pingcap.tikv.util.ConcreteBackOffer.doBackOffWithMaxSleep(ConcreteBackOffer.java:153)

at com.pingcap.tikv.util.ConcreteBackOffer.doBackOff(ConcreteBackOffer.java:124)

at com.pingcap.tikv.region.RegionStoreClient.handleCopResponse(RegionStoreClient.java:708)

at com.pingcap.tikv.region.RegionStoreClient.coprocess(RegionStoreClient.java:689)

at com.pingcap.tikv.operation.iterator.DAGIterator.process(DAGIterator.java:229)

… 7 more

Caused by: com.pingcap.tikv.exception.GrpcException: TiKV down or Network partition

… 10 more

23/08/25 14:53:36 INFO TaskSetManager: Lost task 18.0 in stage 0.0 (TID 18) on 10.120.3.67, executor 0: com.pingcap.tikv.exception.TiClientInternalException (Error reading region:) [duplicate 1]

23/08/25 14:53:36 INFO TaskSetManager: Starting task 18.1 in stage 0.0 (TID 26) (10.120.3.67, executor 0, partition 18, ANY, 9207 bytes) taskResourceAssignments Map()

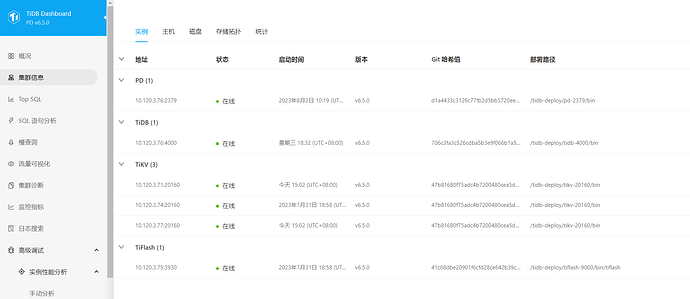

【资源配置】进入到 TiDB Dashboard -集群信息 (Cluster Info) -主机(Hosts) 截图此页面

【附件:截图/日志/监控】

tispark写入50万条记录日志.txt (146.7 KB)