【 TiDB 使用环境】测试

【 TiDB 版本】6.5.0

【复现路径】系统压力运行一段时间,PD出现故障,TIKV日志报错

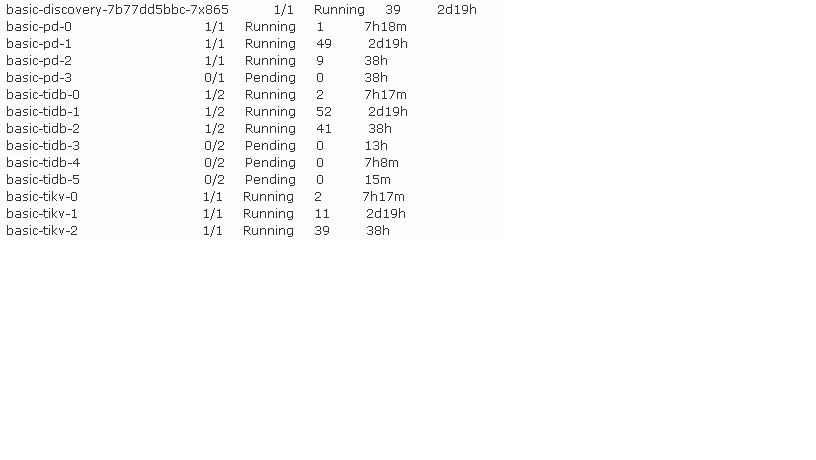

【遇到的问题:问题现象及影响】通过K8S kubelet查看出现TIDB Pending, PD Pending

【资源配置】34核 SATA盘

【附件:截图/日志/监控】

看下日志

- 猜测集群资源不足以支撑压力,导致 tidb/pd/tikv 不断重启,见截图重启次数

- 默认配置开启 auto failover,自动扩容的 pod 处于 pending 说明没有足够 node 资源,参考 https://docs.pingcap.com/zh/tidb-in-kubernetes/stable/deploy-failures#pod-处于-pending-状态

SATA盘 会不会埋没了Tidb 发挥不了优势

实际情况,我们分析的是K8S认为该POD正常,该POD处于running状态。 而基于TiOperator部署的tidb-controller-manager自动检测到业务实际不可用,于是触发POD重启,结果由于PV、PVC已经被绑定占用。 所以处于pending状态。

TiOperator 不会主动触发 pod 重启

其中一个验证环境,一个节点故障中,再次出现此问题:

[root@kylin-122 global]# kubectl get pods -Aowide | grep basic

my-space basic-discovery-8568cffbf9-fnxc6 1/1 Running 2 12d 100.84.119.105 kylin-121

my-space basic-pd-0 1/1 Running 500 12d 100.84.119.102 kylin-121

my-space basic-pd-1 0/1 Pending 0 2d9h

my-space basic-pd-2 1/1 Terminating 0 12d 100.109.240.226 kylin-123

my-space basic-pd-3 0/1 Pending 0 6d17h

my-space basic-tidb-0 1/2 Running 418 2d9h 100.68.55.65 kylin-122

my-space basic-tidb-1 1/2 Running 423 12d 100.84.119.115 kylin-121

my-space basic-tidb-2 2/2 Terminating 0 12d 100.109.240.218 kylin-123

my-space basic-tidb-3 0/2 Pending 0 6d17h

my-space basic-tikv-0 1/1 Running 2 12d 100.84.119.95 kylin-121

my-space basic-tikv-1 1/1 Terminating 0 12d 100.109.240.223 kylin-123

my-space basic-tikv-2 1/1 Running 0 2d9h 100.68.55.84 kylin-122

[root@kylin-122 global]# kubectl get pvc -n my-space -owide | grep tidb

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pd-basic-pd-0 Bound pv-local-tidb-pd-my-space-kylin-121 1Gi RWO tidb-pd-storage-my-space 16d

pd-basic-pd-1 Pending tidb-pd-storage-my-space 2d9h

pd-basic-pd-2 Bound pv-local-tidb-pd-my-space-kylin-123 1Gi RWO tidb-pd-storage-my-space 29d

pd-basic-pd-3 Pending tidb-pd-storage-my-space 16d

tikv-basic-tikv-0 Bound pv-local-tidb-kv-my-space-kylin-121 20Gi RWO tidb-kv-storage-my-space 29d

tikv-basic-tikv-1 Bound pv-local-tidb-kv-my-space-kylin-123 20Gi RWO tidb-kv-storage-my-space 29d

tikv-basic-tikv-2 Bound pv-local-tidb-kv-my-space-kylin-122 20Gi RWO tidb-kv-storage-my-space 29d

tikv-basic-tikv-3 Pending tidb-kv-storage-my-space 28d

[root@kylin-122 global]# kubectl get pv -owide | grep tidb

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-local-tidb-kv-my-space-kylin-121 20Gi RWO Retain Bound my-space/tikv-basic-tikv-0 tidb-kv-storage-my-space 29d

pv-local-tidb-kv-my-space-kylin-122 20Gi RWO Retain Bound my-space/tikv-basic-tikv-2 tidb-kv-storage-my-space 29d

pv-local-tidb-kv-my-space-kylin-123 20Gi RWO Retain Bound my-space/tikv-basic-tikv-1 tidb-kv-storage-my-space 29d

pv-local-tidb-pd-my-space-kylin-121 1Gi RWO Retain Bound my-space/pd-basic-pd-0 tidb-pd-storage-my-space 29d

pv-local-tidb-pd-my-space-kylin-122 1Gi RWO Retain Released my-space/pd-basic-pd-1 tidb-pd-storage-my-space 29d

pv-local-tidb-pd-my-space-kylin-123 1Gi RWO Retain Bound my-space/pd-basic-pd-2 tidb-pd-storage-my-space 29d

[root@kylin-122 global]# kubectl describe pvc -n my-space pd-basic-pd-1

Name: pd-basic-pd-1

Namespace: my-space

StorageClass: tidb-pd-storage-my-space

Status: Pending

Volume:

Labels: app.kubernetes.io/component=pd

app.kubernetes.io/instance=basic

app.kubernetes.io/managed-by=tidb-operator

app.kubernetes.io/name=tidb-cluster

Annotations:

Finalizers: [kubernetes.io/pvc-protection]

Capacity:

Access Modes:

VolumeMode: Filesystem

Used By: basic-pd-1

Events:

Type Reason Age From Message

Normal WaitForPodScheduled 54s (x13822 over 2d9h) persistentvolume-controller waiting for pod basic-pd-1 to be scheduled

[root@kylin-122 global]# kubectl describe pods -n my-space basic-pd-1

Name: basic-pd-1

Namespace: my-space

Priority: 0

Node:

Labels: app.kubernetes.io/component=pd

app.kubernetes.io/instance=basic

app.kubernetes.io/managed-by=tidb-operator

app.kubernetes.io/name=tidb-cluster

controller-revision-hash=basic-pd-648c74f97f

statefulset.kubernetes.io/pod-name=basic-pd-1

Annotations: kubernetes.io/limit-ranger: LimitRanger plugin set: cpu, memory request for container pd

prometheus.io/path: /metrics

prometheus.io/port: 2379

prometheus.io/scrape: true

Status: Pending

IP:

IPs:

Controlled By: StatefulSet/basic-pd

Containers:

pd:

Image: pingcap/pd:v4.0.0

Ports: 2380/TCP, 2379/TCP

Host Ports: 0/TCP, 0/TCP

Command:

/bin/sh

/usr/local/bin/pd_start_script.sh

Requests:

cpu: 50m

memory: 40Mi

Environment:

NAMESPACE: my-space (v1:metadata.namespace)

PEER_SERVICE_NAME: basic-pd-peer

SERVICE_NAME: basic-pd

SET_NAME: basic-pd

TZ: UTC

Mounts:

/etc/pd from config (ro)

/etc/podinfo from annotations (ro)

/usr/local/bin from startup-script (ro)

/var/lib/pd from pd (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-rncxm (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

pd:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: pd-basic-pd-1

ReadOnly: false

annotations:

Type: DownwardAPI (a volume populated by information about the pod)

Items:

metadata.annotations → annotations

config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: basic-pd

Optional: false

startup-script:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: basic-pd

Optional: false

default-token-rncxm:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-rncxm

Optional: false

QoS Class: Burstable

Node-Selectors: node-role.kubernetes.io/master=

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

Warning FailedScheduling 60s (x2308 over 2d9h) default-scheduler 0/3 nodes are available: 1 node(s) had taint {node.kubernetes.io/unreachable: }, that the pod didn’t tolerate, 2 node(s) didn’t find available persistent volumes to bind.

其中:

my-space basic-pd-2 1/1 Terminating 0 12d 100.109.240.226 kylin-123

my-space basic-tidb-2 2/2 Terminating 0 12d 100.109.240.218 kylin-123

my-space basic-tikv-1 1/1 Terminating 0 12d 100.109.240.223 kylin-123

这个节点是物理故障。

看起来是1个node 故障后,其他node 也没有可用的 volumne。可以先修复这个 node,再看看。

这违背了3节点集群的基本高可靠需求。 1个节点故障绝对不能影响集群的功能。 否则还用集群干嘛,浪费资源。

上面的问题,基本可以怀疑在绑定PVC、PV和解绑PVC、PV出现了问题。

PD 节点出现故障,你可以尝试重启 PD 节点