Testing on v7.2 gives the same result:

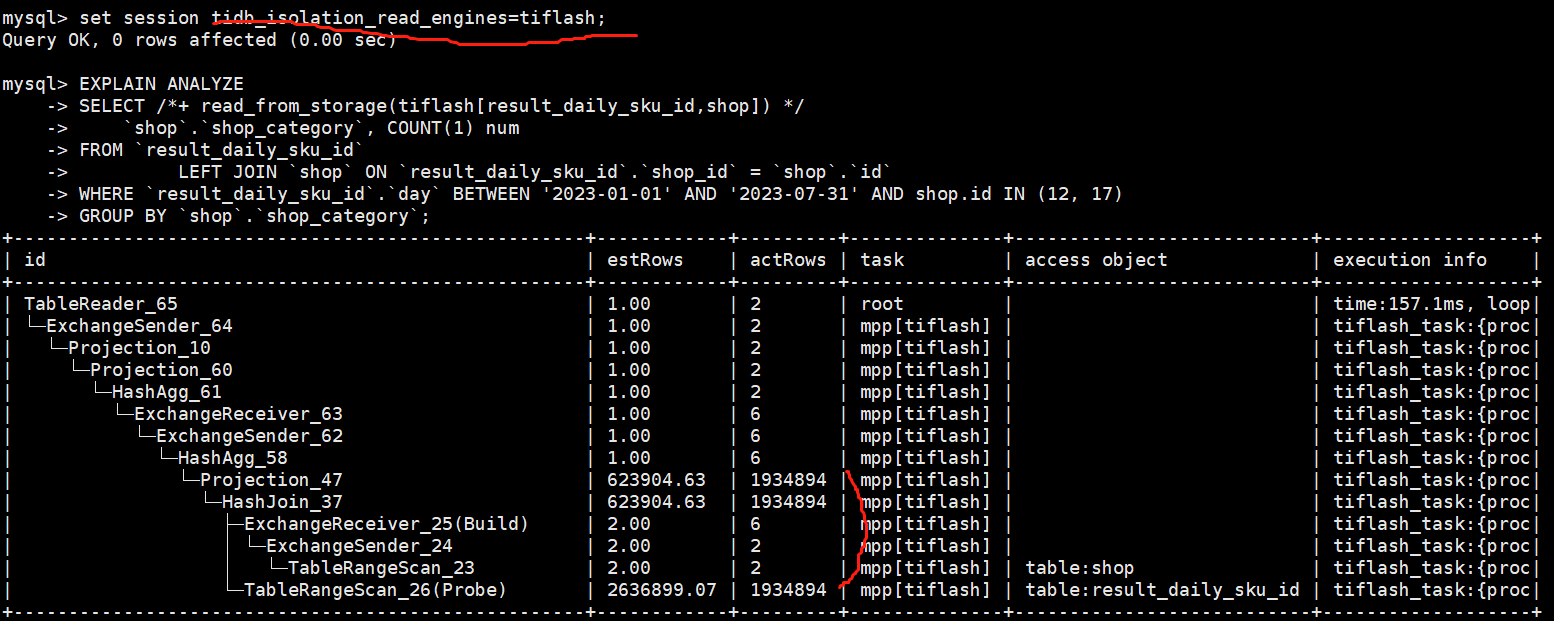

5.7.25-TiDB-v7.2.0 (root@shawnyan) [test] 09:31:02> set session tidb_isolation_read_engines=tiflash;
Query OK, 0 rows affected (0.001 sec)

5.7.25-TiDB-v7.2.0 (root@shawnyan) [test] 09:31:13> tidb_isolation_read_engines=\c

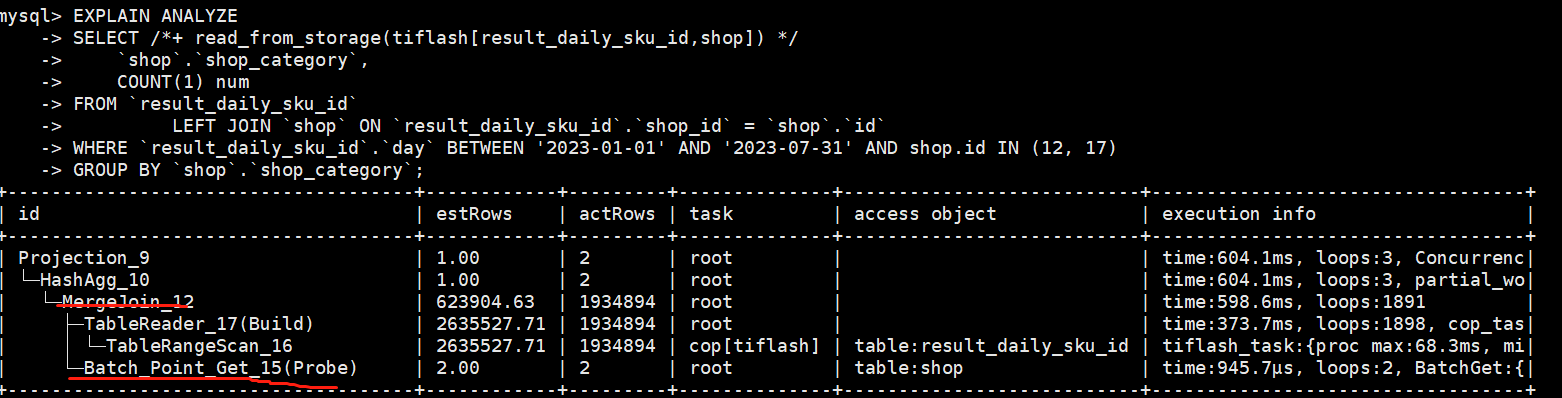

5.7.25-TiDB-v7.2.0 (root@shawnyan) [test] 09:31:17> EXPLAIN ANALYZE
-> SELECT /*+ read_from_storage(tiflash[result_daily_sku_id,shop]) */
-> `shop`.`shop_category`, COUNT(1) num
-> FROM `result_daily_sku_id`
-> LEFT JOIN `shop` ON `result_daily_sku_id`.`shop_id` = `shop`.`id`
-> WHERE `result_daily_sku_id`.`day` BETWEEN '2023-01-01' AND '2023-07-31' AND shop.id IN (12, 17)
-> GROUP BY `shop`.`shop_category`;

+------------------------------------------------+---------+---------+--------------+---------------------------+----------------------------------------------------------------------------------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------+-----------+------+
| id | estRows | actRows | task | access object | execution info | operator info | memory | disk |
+------------------------------------------------+---------+---------+--------------+---------------------------+----------------------------------------------------------------------------------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------+-----------+------+
| TableReader_58 | 1.00 | 0 | root | | time:25.7ms, loops:1, RU:0.000000, cop_task: {num: 1, max: 0s, proc_keys: 0, copr_cache_hit_ratio: 0.00} | MppVersion: 1, data:ExchangeSender_57 | 707 Bytes | N/A |
| └─ExchangeSender_57 | 1.00 | 0 | mpp[tiflash] | | tiflash_task:{time:21ms, loops:0, threads:4} | ExchangeType: PassThrough | N/A | N/A |
|   └─Projection_9 | 1.00 | 0 | mpp[tiflash] | | tiflash_task:{time:20ms, loops:0, threads:4} | test.shop.shop_category, Column#10 | N/A | N/A |
|     └─Projection_52 | 1.00 | 0 | mpp[tiflash] | | tiflash_task:{time:20ms, loops:0, threads:4} | Column#10, test.shop.shop_category | N/A | N/A |
|       └─HashAgg_50 | 1.00 | 0 | mpp[tiflash] | | tiflash_task:{time:20ms, loops:0, threads:4} | group by:test.shop.shop_category, funcs:count(1)->Column#10, funcs:firstrow(test.shop.shop_category)->test.shop.shop_category, stream_count: 4 | N/A | N/A |
|         └─ExchangeReceiver_36 | 0.62 | 0 | mpp[tiflash] | | tiflash_task:{time:20ms, loops:0, threads:4} | stream_count: 4 | N/A | N/A |
|           └─ExchangeSender_35 | 0.62 | 0 | mpp[tiflash] | | tiflash_task:{time:17.3ms, loops:0, threads:8} | ExchangeType: HashPartition, Compression: FAST, Hash Cols: [name: test.shop.shop_category, collate: utf8mb4_general_ci], stream_count: 4 | N/A | N/A |
|             └─HashJoin_34 | 0.62 | 0 | mpp[tiflash] | | tiflash_task:{time:16.3ms, loops:0, threads:8} | inner join, equal:[eq(test.result_daily_sku_id.shop_id, test.shop.id)] | N/A | N/A |
|               ├─ExchangeReceiver_22(Build) | 5.00 | 0 | mpp[tiflash] | | tiflash_task:{time:15.3ms, loops:0, threads:8} | | N/A | N/A |
|               │ └─ExchangeSender_21 | 5.00 | 0 | mpp[tiflash] | | tiflash_task:{time:9.11ms, loops:0, threads:1} | ExchangeType: Broadcast, Compression: FAST | N/A | N/A |
|               │   └─TableRangeScan_20 | 5.00 | 0 | mpp[tiflash] | table:result_daily_sku_id | tiflash_task:{time:9.11ms, loops:0, threads:1} | range:[12 2023-01-01,12 2023-07-31], [17 2023-01-01,17 2023-07-31], keep order:false, stats:pseudo | N/A | N/A |
|               └─TableRangeScan_23(Probe) | 2.00 | 0 | mpp[tiflash] | table:shop | tiflash_task:{time:14.3ms, loops:0, threads:1} | range:[12,12], [17,17], keep order:false, stats:pseudo | N/A | N/A |
+------------------------------------------------+---------+---------+--------------+---------------------------+----------------------------------------------------------------------------------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------+-----------+------+
12 rows in set (0.124 sec)
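
For anyone reproducing this, here is a rough sketch of statements that could be run in the same session to sanity-check the setup before comparing plans. It assumes the `test` schema and the two table names from the query above; the restore value at the end is the documented default engine list, which may differ if your cluster overrides it.

```sql
-- Confirm which storage engines the current session is allowed to read from.
SHOW VARIABLES LIKE 'tidb_isolation_read_engines';

-- Check that both tables have an available TiFlash replica
-- (schema `test` and the table names are taken from the transcript above).
SELECT TABLE_NAME, REPLICA_COUNT, AVAILABLE, PROGRESS
FROM information_schema.tiflash_replica
WHERE TABLE_SCHEMA = 'test'
  AND TABLE_NAME IN ('result_daily_sku_id', 'shop');

-- Restore the default engine list once the test is done
-- (assumption: the cluster uses the stock default).
SET SESSION tidb_isolation_read_engines = 'tikv,tiflash,tidb';
```

Note that `stats:pseudo` and `actRows` of 0 in the plan above suggest the test tables were empty and not analyzed, so the timings are only indicative.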