The screenshot below shows the error at startup:

The screenshot below shows the log under /tidb-deploy/tikv-2389/log:

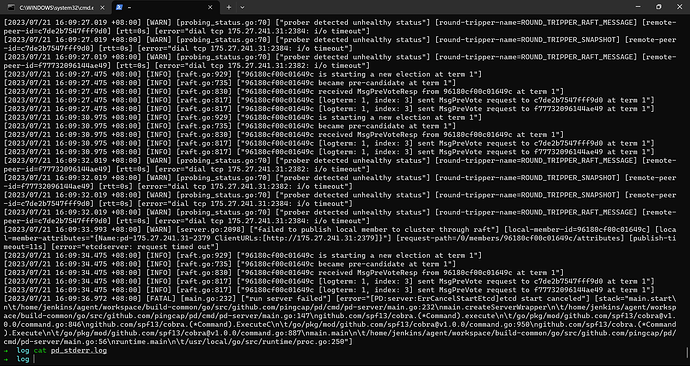

The screenshot below shows the PD log:

The configuration file is as follows:

# # Global variables are applied to all deployments and used as the default value of
# # the deployments if a specific deployment value is missing.
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/tidb-deploy"
  data_dir: "/tidb-data"

# # Monitored variables are applied to all the machines.
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115
  # deploy_dir: "/tidb-deploy/monitored-9100"
  # data_dir: "/tidb-data/monitored-9100"
  # log_dir: "/tidb-deploy/monitored-9100/log"

# # Server configs are used to specify the runtime configuration of TiDB components.
# # All configuration items can be found in TiDB docs:
# # - TiDB: https://docs.pingcap.com/zh/tidb/stable/tidb-configuration-file
# # - TiKV: https://docs.pingcap.com/zh/tidb/stable/tikv-configuration-file
# # - PD: https://docs.pingcap.com/zh/tidb/stable/pd-configuration-file
# # All configuration items use points to represent the hierarchy, e.g:
# #   readpool.storage.use-unified-pool
# #
# # You can overwrite this configuration via the instance-level `config` field.
server_configs:
  tidb:
    log.slow-threshold: 300
    binlog.enable: false
    binlog.ignore-error: false
  tikv:
    # server.grpc-concurrency: 4
    # raftstore.apply-pool-size: 2
    # raftstore.store-pool-size: 2
    # rocksdb.max-sub-compactions: 1
    # storage.block-cache.capacity: "16GB"
    # readpool.unified.max-thread-count: 12
    readpool.storage.use-unified-pool: false
    readpool.coprocessor.use-unified-pool: true
  pd:
    schedule.leader-schedule-limit: 4
    schedule.region-schedule-limit: 2048
    schedule.replica-schedule-limit: 64
    replication.location-labels:
      - host

pd_servers:
  - host: 175.27.241.31
    # ssh_port: 22
    # name: "pd-1"
    client_port: 2379
    peer_port: 2380
    deploy_dir: "/tidb-deploy/pd-2379"
    data_dir: "/tidb-data/pd-2379"
    log_dir: "/tidb-deploy/pd-2379/log"
    # numa_node: "0,1"
    # # The following configs are used to overwrite the `server_configs.pd` values.
    # config:
    #   schedule.max-merge-region-size: 20
    #   schedule.max-merge-region-keys: 200000
  - host: 175.27.241.31
    # ssh_port: 22
    # name: "pd-1"
    client_port: 2381
    peer_port: 2382
    deploy_dir: "/tidb-deploy/pd-2381"
    data_dir: "/tidb-data/pd-2381"
    log_dir: "/tidb-deploy/pd-2381/log"
    # numa_node: "0,1"
    # # The following configs are used to overwrite the `server_configs.pd` values.
    # config:
    #   schedule.max-merge-region-size: 20
    #   schedule.max-merge-region-keys: 200000
  - host: 175.27.241.31
    # ssh_port: 22
    # name: "pd-1"
    client_port: 2383
    peer_port: 2384
    deploy_dir: "/tidb-deploy/pd-2383"
    data_dir: "/tidb-data/pd-2383"
    log_dir: "/tidb-deploy/pd-2383/log"
    # numa_node: "0,1"
    # # The following configs are used to overwrite the `server_configs.pd` values.
    # config:
    #   schedule.max-merge-region-size: 20
    #   schedule.max-merge-region-keys: 200000

tidb_servers:
  - host: 175.27.241.31
    # ssh_port: 22
    port: 2385
    status_port: 2386
    deploy_dir: "/tidb-deploy/tidb-2385"
    log_dir: "/tidb-deploy/tidb-2385/log"
    # numa_node: "0,1"
    # # The following configs are used to overwrite the `server_configs.tidb` values.
    # config:
    #   log.slow-query-file: tidb-slow-overwrited.log
  - host: 175.27.241.31
    # ssh_port: 22
    port: 2387
    status_port: 2388
    deploy_dir: "/tidb-deploy/tidb-2387"
    log_dir: "/tidb-deploy/tidb-2387/log"
    # numa_node: "0,1"
    # # The following configs are used to overwrite the `server_configs.tidb` values.
    # config:
    #   log.slow-query-file: tidb-slow-overwrited.log

tikv_servers:
  - host: 175.27.241.31
    ssh_port: 22
    port: 2389
    status_port: 2390
    deploy_dir: "/tidb-deploy/tikv-2389"
    data_dir: "/tidb-data/tikv-2389"
    log_dir: "/tidb-deploy/tikv-2389/log"
    config:
      server.labels: { host: "175.27.241.31" }
  - host: 175.27.241.31
    ssh_port: 22
    port: 2391
    status_port: 2392
    deploy_dir: "/tidb-deploy/tikv-2391"
    data_dir: "/tidb-data/tikv-2391"
    log_dir: "/tidb-deploy/tikv-2391/log"
    config:
      server.labels: { host: "175.27.241.31" }
  - host: 175.27.241.31
    ssh_port: 22
    port: 2393
    status_port: 2394
    deploy_dir: "/tidb-deploy/tikv-2393"
    data_dir: "/tidb-data/tikv-2393"
    log_dir: "/tidb-deploy/tikv-2393/log"
    config:
      server.labels: { host: "175.27.241.31" }

monitoring_servers:
  - host: 175.27.241.31
    # ssh_port: 22
    # port: 9090
    # deploy_dir: "/tidb-deploy/prometheus-8249"
    # data_dir: "/tidb-data/prometheus-8249"
    # log_dir: "/tidb-deploy/prometheus-8249/log"

grafana_servers:
  - host: 175.27.241.31
    # port: 3000
    # deploy_dir: /tidb-deploy/grafana-3000

alertmanager_servers:
  - host: 175.27.241.31
    # ssh_port: 22
    # web_port: 9093
    # cluster_port: 9094
    # deploy_dir: "/tidb-deploy/alertmanager-9093"
    # data_dir: "/tidb-data/alertmanager-9093"
    # log_dir: "/tidb-deploy/alertmanager-9093/log"
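
Since every instance in this topology runs on the same host (175.27.241.31), one thing that may be worth ruling out is two instances claiming the same port. The sketch below is only an illustration and is not a diagnosis of the error in the screenshots. It hard-codes the ports that are explicitly set in the configuration above and reports any port that is claimed more than once. The names `INSTANCES` and `find_port_conflicts` are hypothetical helpers, not part of TiUP. The monitoring, Grafana, and Alertmanager entries are left out because their ports are commented out in the file.

```python
from collections import defaultdict

HOST = "175.27.241.31"

# (component, host, ports) copied by hand from the explicitly set values
# in the topology above; this list is an assumption of the sketch.
INSTANCES = [
    ("pd", HOST, [2379, 2380]),
    ("pd", HOST, [2381, 2382]),
    ("pd", HOST, [2383, 2384]),
    ("tidb", HOST, [2385, 2386]),
    ("tidb", HOST, [2387, 2388]),
    ("tikv", HOST, [2389, 2390]),
    ("tikv", HOST, [2391, 2392]),
    ("tikv", HOST, [2393, 2394]),
    ("monitored", HOST, [9100, 9115]),
]


def find_port_conflicts(instances):
    """Return {(host, port): [components]} for ports claimed more than once."""
    owners = defaultdict(list)
    for component, host, ports in instances:
        for port in ports:
            owners[(host, port)].append(component)
    return {key: comps for key, comps in owners.items() if len(comps) > 1}


if __name__ == "__main__":
    conflicts = find_port_conflicts(INSTANCES)
    if conflicts:
        for (host, port), comps in sorted(conflicts.items()):
            print(f"{host}:{port} is claimed by {', '.join(comps)}")
    else:
        print("no duplicate ports among the listed instances")
```

For the values listed here the check finds no duplicates, so if the startup failure is port related, it would more likely involve a process outside this topology that already holds one of these ports on the host.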