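【TiDB environment】Production / Test / PoC

Production

【TiDB version】

v7.1.0

【Reproduction path】What operations led to the problem

Adding an index

【Problem encountered: symptoms and impact】

The index cannot be added.

【Resource configuration】Go to TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page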

global:
  user: tidb
  ssh_port: 22
  ssh_type: builtin
  deploy_dir: /tidb-deploy
  data_dir: /tidb-data
  resource_control:
    memory_limit: 8G
  os: linux
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115
  deploy_dir: /tidb-deploy/monitor-9100
  data_dir: /tidb-data/monitor-9100
  log_dir: /tidb-deploy/monitor-9100/log
server_configs:
  tidb:
    log.slow-threshold: 300
  tikv:
    memory-usage-limit: 6G
    readpool.coprocessor.use-unified-pool: true
    readpool.storage.use-unified-pool: false
    storage.block-cache.capacity: 2G
  pd:
    replication.enable-placement-rules: true
    replication.location-labels:
    - host
  tidb_dashboard: {}
  tiflash:
    logger.level: info
  tiflash-learner: {}
  pump: {}
  drainer: {}
  cdc: {}
  kvcdc: {}
  grafana: {}
tidb_servers:
- host: 192.168.0.148
  ssh_port: 22
  port: 4000
  status_port: 10080
  deploy_dir: /tidb-deploy/tidb-4000
  log_dir: /tidb-deploy/tidb-4000/log
  arch: amd64
  os: linux
tikv_servers:
- host: 192.168.0.148
  ssh_port: 22
  port: 20160
  status_port: 20180
  deploy_dir: /tidb-deploy/tikv-20160
  data_dir: /tidb-data/tikv-20160
  log_dir: /tidb-deploy/tikv-20160/log
  config:
    server.labels:
      host: logic-host-1
  arch: amd64
  os: linux
tiflash_servers:
- host: 192.168.0.148
  ssh_port: 22
  tcp_port: 9000
  http_port: 8123
  flash_service_port: 3930
  flash_proxy_port: 20170
  flash_proxy_status_port: 20292
  metrics_port: 8234
  deploy_dir: /tidb-deploy/tiflash-9000
  data_dir: /tidb-data/tiflash-9000
  log_dir: /tidb-deploy/tiflash-9000/log
  arch: amd64
  os: linux
pd_servers:
- host: 192.168.0.148
  ssh_port: 22
  name: pd-192.168.0.148-2379
  client_port: 2379
  peer_port: 2380
  deploy_dir: /tidb-deploy/pd-2379
  data_dir: /tidb-data/pd-2379
  log_dir: /tidb-deploy/pd-2379/log
  arch: amd64
  os: linux
monitoring_servers: []

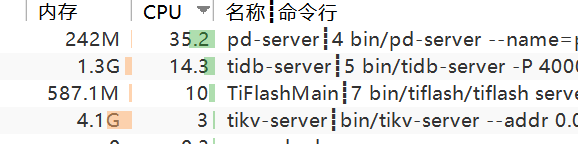

Resource usage

【Attachments: screenshots / logs / monitoring】

tidb.log:

[2023/07/18 16:55:52.747 +08:00] [WARN] [session.go:2197] ["compile SQL failed"] [conn=984320192580431043] [error="[schema:1146]Table 'supeng.resource_lock' doesn't exist"] [SQL="SELECT COUNT(1) FROM resource_lock where 1!=1"]

[2023/07/18 16:55:52.747 +08:00] [INFO] [conn.go:1184] ["command dispatched failed"] [conn=984320192580431043] [connInfo="id:984320192580431043, addr:192.168.0.148:59418 status:10, collation:utf8mb4_general_ci, user:root"] [command=Query] [status="inTxn:0, autocommit:1"] [sql="SELECT COUNT(1) FROM resource_lock where 1!=1"] [txn_mode=PESSIMISTIC] [timestamp=0] [err="[schema:1146]Table 'supeng.resource_lock' doesn't exist"]

[2023/07/18 16:55:52.861 +08:00] [INFO] [session.go:3852] ["CRUCIAL OPERATION"] [conn=984320192580431043] [schemaVersion=93] [cur_db=supeng] [sql="CREATE TABLE resource_lock(\nid BIGINT(64) AUTO_INCREMENT,\nresource VARCHAR(128),\nip VARCHAR(128),\ncreateTime DATETIME,\nupdateTime DATETIME,\nauthorId BIGINT(64),\nisDeleted BOOLEAN DEFAULT 0,\nPRIMARY KEY (id)\n) ENGINE=InnoDB CHARSET=utf8"] [user=root@%]

[2023/07/18 16:55:52.881 +08:00] [INFO] [ddl_worker.go:238] ["[ddl] add DDL jobs"] ["batch count"=1] [jobs="ID:127, Type:create table, State:queueing, SchemaState:none, SchemaID:88, TableID:126, RowCount:0, ArgLen:2, start time: 2023-07-18 16:55:52.842 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0; "] [table=true]

[2023/07/18 16:55:52.881 +08:00] [INFO] [ddl.go:1056] ["[ddl] start DDL job"] [job="ID:127, Type:create table, State:queueing, SchemaState:none, SchemaID:88, TableID:126, RowCount:0, ArgLen:2, start time: 2023-07-18 16:55:52.842 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0"] [query="CREATE TABLE resource_lock(\nid BIGINT(64) AUTO_INCREMENT,\nresource VARCHAR(128),\nip VARCHAR(128),\ncreateTime DATETIME,\nupdateTime DATETIME,\nauthorId BIGINT(64),\nisDeleted BOOLEAN DEFAULT 0,\nPRIMARY KEY (id)\n) ENGINE=InnoDB CHARSET=utf8"]

[2023/07/18 16:55:52.891 +08:00] [INFO] [ddl_worker.go:980] ["[ddl] run DDL job"] [worker="worker 1, tp general"] [job="ID:127, Type:create table, State:queueing, SchemaState:none, SchemaID:88, TableID:126, RowCount:0, ArgLen:0, start time: 2023-07-18 16:55:52.842 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0"]

[2023/07/18 16:55:52.915 +08:00] [INFO] [domain.go:240] ["diff load InfoSchema success"] [currentSchemaVersion=93] [neededSchemaVersion=94] ["start time"=1.446636ms] [gotSchemaVersion=94] [phyTblIDs="[126]"] [actionTypes="[3]"]

[2023/07/18 16:55:52.919 +08:00] [INFO] [domain.go:833] ["mdl gets lock, update to owner"] [jobID=127] [version=94]

[2023/07/18 16:55:52.957 +08:00] [INFO] [ddl_worker.go:1204] ["[ddl] wait latest schema version changed(get the metadata lock if tidb_enable_metadata_lock is true)"] [ver=94] ["take time"=53.013379ms] [job="ID:127, Type:create table, State:done, SchemaState:public, SchemaID:88, TableID:126, RowCount:0, ArgLen:2, start time: 2023-07-18 16:55:52.842 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0"]

[2023/07/18 16:55:52.971 +08:00] [INFO] [ddl_worker.go:601] ["[ddl] finish DDL job"] [worker="worker 1, tp general"] [job="ID:127, Type:create table, State:synced, SchemaState:public, SchemaID:88, TableID:126, RowCount:0, ArgLen:0, start time: 2023-07-18 16:55:52.842 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0"]

[2023/07/18 16:55:52.979 +08:00] [INFO] [ddl.go:1158] ["[ddl] DDL job is finished"] [jobID=127]

[2023/07/18 16:55:52.979 +08:00] [INFO] [callback.go:128] ["performing DDL change, must reload"]

[2023/07/18 16:55:52.979 +08:00] [INFO] [split_region.go:85] ["split batch regions request"] ["split key count"=1] ["batch count"=1] ["first batch, region ID"=14] ["first split key"=74800000000000007e]

[2023/07/18 16:55:52.981 +08:00] [INFO] [session.go:3852] ["CRUCIAL OPERATION"] [conn=984320192580431043] [schemaVersion=94] [cur_db=supeng] [sql="Create UNIQUE Index UX_resource_lock_resource ON resource_lock(resource)"] [user=root@%]

[2023/07/18 16:55:52.984 +08:00] [INFO] [split_region.go:187] ["batch split regions complete"] ["batch region ID"=14] ["first at"=74800000000000007e] ["first new region left"="{Id:1025 StartKey:7480000000000000ff7b00000000000000f8 EndKey:7480000000000000ff7e00000000000000f8 RegionEpoch:{ConfVer:1 Version:65} Peers:[id:1026 store_id:1 ] EncryptionMeta: IsInFlashback:false FlashbackStartTs:0}"] ["new region count"=1]

[2023/07/18 16:55:52.984 +08:00] [INFO] [split_region.go:236] ["split regions complete"] ["region count"=1] ["region IDs"="[1025]"]

[2023/07/18 16:55:52.988 +08:00] [INFO] [ddl_worker.go:238] ["[ddl] add DDL jobs"] ["batch count"=1] [jobs="ID:128, Type:add index, State:queueing, SchemaState:none, SchemaID:88, TableID:126, RowCount:0, ArgLen:6, start time: 2023-07-18 16:55:52.941 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0, UniqueWarnings:0; "] [table=true]

[2023/07/18 16:55:52.988 +08:00] [INFO] [ddl.go:1056] ["[ddl] start DDL job"] [job="ID:128, Type:add index, State:queueing, SchemaState:none, SchemaID:88, TableID:126, RowCount:0, ArgLen:6, start time: 2023-07-18 16:55:52.941 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0, UniqueWarnings:0"] [query="Create UNIQUE Index UX_resource_lock_resource ON resource_lock(resource)"]

[2023/07/18 16:55:52.998 +08:00] [INFO] [ddl_worker.go:980] ["[ddl] run DDL job"] [worker="worker 3, tp add index"] [job="ID:128, Type:add index, State:queueing, SchemaState:none, SchemaID:88, TableID:126, RowCount:0, ArgLen:0, start time: 2023-07-18 16:55:52.941 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0, UniqueWarnings:0"]

[2023/07/18 16:55:52.998 +08:00] [INFO] [index.go:620] ["[ddl] run add index job"] [job="ID:128, Type:add index, State:running, SchemaState:none, SchemaID:88, TableID:126, RowCount:0, ArgLen:6, start time: 2023-07-18 16:55:52.941 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0, UniqueWarnings:0"] [indexInfo="{\"id\":1,\"idx_name\":{\"O\":\"UX_resource_lock_resource\",\"L\":\"ux_resource_lock_resource\"},\"tbl_name\":{\"O\":\"\",\"L\":\"\"},\"idx_cols\":[{\"name\":{\"O\":\"resource\",\"L\":\"resource\"},\"offset\":1,\"length\":-1}],\"state\":0,\"backfill_state\":0,\"comment\":\"\",\"index_type\":1,\"is_unique\":true,\"is_primary\":false,\"is_invisible\":false,\"is_global\":false,\"mv_index\":false}"]

[2023/07/18 16:55:53.002 +08:00] [INFO] [backend_mgr.go:74] ["[ddl-ingest] ingest backfill is not available"] [error="cannot get disk capacity at /tmp/tidb/tmp_ddl-4000: no such file or directory"]

[2023/07/18 16:55:53.002 +08:00] [ERROR] [ddl_worker.go:942] ["[ddl] run DDL job error"] [worker="worker 3, tp add index"] [error="cannot get disk capacity at /tmp/tidb/tmp_ddl-4000: no such file or directory"]

[2023/07/18 16:55:53.013 +08:00] [INFO] [ddl_worker.go:825] ["[ddl] run DDL job failed, sleeps a while then retries it."] [worker="worker 3, tp add index"] [waitTime=1s] [error="cannot get disk capacity at /tmp/tidb/tmp_ddl-4000: no such file or directory"]

[2023/07/18 16:55:54.013 +08:00] [INFO] [ddl_worker.go:1184] ["[ddl] schema version doesn't change"]

[2023/07/18 16:55:54.019 +08:00] [INFO] [ddl_worker.go:980] ["[ddl] run DDL job"] [worker="worker 2, tp add index"] [job="ID:128, Type:add index, State:running, SchemaState:none, SchemaID:88, TableID:126, RowCount:0, ArgLen:0, start time: 2023-07-18 16:55:52.941 +0800 CST, Err:[ddl:-1]cannot get disk capacity at /tmp/tidb/tmp_ddl-4000: no such file or directory, ErrCount:1, SnapshotVersion:0, UniqueWarnings:0"]

[2023/07/18 16:55:54.020 +08:00] [INFO] [index.go:620] ["[ddl] run add index job"] [job="ID:128, Type:add index, State:running, SchemaState:none, SchemaID:88, TableID:126, RowCount:0, ArgLen:6, start time: 2023-07-18 16:55:52.941 +0800 CST, Err:[ddl:-1]cannot get disk capacity at /tmp/tidb/tmp_ddl-4000: no such file or directory, ErrCount:1, SnapshotVersion:0, UniqueWarnings:0"] [indexInfo="{\"id\":1,\"idx_name\":{\"O\":\"UX_resource_lock_resource\",\"L\":\"ux_resource_lock_resource\"},\"tbl_name\":{\"O\":\"\",\"L\":\"\"},\"idx_cols\":[{\"name\":{\"O\":\"resource\",\"L\":\"resource\"},\"offset\":1,\"length\":-1}],\"state\":0,\"backfill_state\":0,\"comment\":\"\",\"index_type\":1,\"is_unique\":true,\"is_primary\":false,\"is_invisible\":false,\"is_global\":false,\"mv_index\":false}"]
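
The repeated error in tidb.log above says the disk capacity of /tmp/tidb/tmp_ddl-4000 cannot be read because the path does not exist. A minimal sketch for checking this on the TiDB host follows. It is not part of TiDB, the helper name `check_ddl_temp_dir` is made up for illustration, and the path is simply copied from the error message. It only reports whether the directory is present and, if so, how much free space the filesystem has.

```python
import os
import shutil


def check_ddl_temp_dir(path):
    """Return (exists, free_bytes); free_bytes is None when the directory is missing.

    Hypothetical helper for illustration only, not a TiDB API.
    """
    if not os.path.isdir(path):
        return False, None
    # shutil.disk_usage reports total/used/free bytes of the filesystem holding path.
    return True, shutil.disk_usage(path).free


if __name__ == "__main__":
    # Path copied from the error message in tidb.log.
    path = "/tmp/tidb/tmp_ddl-4000"
    exists, free_bytes = check_ddl_temp_dir(path)
    if exists:
        print(f"{path} exists, free space: {free_bytes} bytes")
    else:
        print(f"{path} does not exist")
```

Running it as the `tidb` user on 192.168.0.148 should show whether the directory named in the error is actually missing for the account that runs tidb-server.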

pd.log:

[2023/07/18 16:55:52.980 +08:00] [INFO] [cluster_worker.go:145] ["alloc ids for region split"] [region-id=1025] [peer-ids="[1026]"]

[2023/07/18 16:55:52.984 +08:00] [INFO] [region.go:679] ["region Version changed"] [region-id=14] [detail="StartKey Changed:{7480000000000000FF7B00000000000000F8} -> {7480000000000000FF7E00000000000000F8}, EndKey:{748000FFFFFFFFFFFFF900000000000000F8}"] [old-version=64] [new-version=65]

[2023/07/18 16:55:52.984 +08:00] [INFO] [cluster_worker.go:237] ["region batch split, generate new regions"] [region-id=14] [origin="id:1025 start_key:\"7480000000000000FF7B00000000000000F8\" end_key:\"7480000000000000FF7E00000000000000F8\" region_epoch:<conf_ver:1 version:65 > peers:<id:1026 store_id:1 >"] [total=1]

[2023/07/18 16:56:14.991 +08:00] [INFO] [grpc_service.go:1345] ["update service GC safe point"] [service-id=gc_worker] [expire-at=-9223372035165105235] [safepoint=442936845910933504]

[2023/07/18 16:57:55.051 +08:00] [INFO] [grpc_service.go:1290] ["updated gc safe point"] [safe-point=442936845910933504]