[TiDB environment] Production

[TiDB version] v5.4.0

[Reproduction path] Shrinking the TiKV disk

[Problem: symptoms and impact]

[Attachments: screenshots/logs/monitoring]

Steps for shrinking the TiKV disk:

1. Stop TiKV first

2. Copy the data

3. Start TiKV again

1. Stop TiKV first

tiup cluster stop tidb-risk -N 10.0.0.17:20160

Checking the cluster status shows the node as Disconnected:

| ID | Role | Host | Ports | OS/Arch | Status | Data Dir | Deploy Dir |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 10.0.0.10:9093 | alertmanager | 10.0.0.10 | 9093/9094 | linux/x86_64 | Up | /data/tidb-data/alertmanager-9093 | /data/tidb-deploy/alertmanager-9093 |
| 10.0.0.10:3000 | grafana | 10.0.0.10 | 3000 | linux/x86_64 | Up | - | /data/tidb-deploy/grafana-3000 |
| 10.0.0.11:2379 | pd | 10.0.0.11 | 2379/2380 | linux/x86_64 | Up\|L | /data/tidb-data/pd-2379 | /data/tidb-deploy/pd-2379 |
| 10.0.0.12:2379 | pd | 10.0.0.12 | 2379/2380 | linux/x86_64 | Up | /data/tidb-data/pd-2379 | /data/tidb-deploy/pd-2379 |
| 10.0.0.13:2379 | pd | 10.0.0.13 | 2379/2380 | linux/x86_64 | Up\|UI | /data/tidb-data/pd-2379 | /data/tidb-deploy/pd-2379 |
| 10.0.0.10:9090 | prometheus | 10.0.0.10 | 9090/12020 | linux/x86_64 | Up | /data/tidb-data/prometheus-9090 | /data/tidb-deploy/prometheus-9090 |
| 10.0.0.14:4000 | tidb | 10.0.0.14 | 4000/10080 | linux/x86_64 | Up | - | /data/tidb-deploy/tidb-4000 |
| 10.0.0.15:4000 | tidb | 10.0.0.15 | 4000/10080 | linux/x86_64 | Up | - | /data/tidb-deploy/tidb-4000 |
| 10.0.0.16:4000 | tidb | 10.0.0.16 | 4000/10080 | linux/x86_64 | Up | - | /data/tidb-deploy/tidb-4000 |
| 10.0.0.17:20160 | tikv | 10.0.0.17 | 20160/20180 | linux/x86_64 | Disconnected | /data/tidb-data/tikv-20160 | /data/tidb-deploy/tikv-20160 |
| 10.0.0.18:20160 | tikv | 10.0.0.18 | 20160/20180 | linux/x86_64 | Up | /data/tidb-data/tikv-20160 | /data/tidb-deploy/tikv-20160 |
| 10.0.0.19:20160 | tikv | 10.0.0.19 | 20160/20180 | linux/x86_64 | Up | /data/tidb-data/tikv-20160 | /data/tidb-deploy/tikv-20160 |
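
The status check in this step can also be scripted. This is only a minimal sketch, assuming the raw `tiup cluster display` output keeps the column layout shown in this post (Status as the sixth whitespace-separated field). The function name `node_status` is hypothetical and not part of tiup.

```shell
#!/usr/bin/env bash
# Print the Status column for one node ID, reading raw
# `tiup cluster display` output on stdin.
# Assumption: Status is the 6th whitespace-separated field,
# as in the listing in this post.
node_status() {
  awk -v id="$1" '$1 == id { print $6 }'
}
```

For example, `tiup cluster display tidb-risk | node_status 10.0.0.17:20160` should print `Disconnected` for the state listed here. Polling it until it prints `Up` is one way to wait for the store to come back after the restart.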

2. Copy the data

cp -a /data /data1
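
Before starting TiKV on the copied data, it may be worth confirming that the copy is complete. The following is a sketch only, assuming GNU coreutils and findutils (`sha256sum`, `find -print0`, `sort -z`, `xargs -0 -r`). `tree_digest` and `same_tree` are hypothetical helpers. The directories to compare depend on where `cp -a` placed the copy: if `/data1` already existed as a directory, the copy ends up under `/data1/data`.

```shell
#!/usr/bin/env bash
# Print one digest for a directory tree: hash every regular file together
# with its path relative to the root, then hash the sorted list of hashes.
# Only regular-file paths and contents are compared; ownership, modes,
# symlinks and empty directories are not covered by this check.
tree_digest() {
  ( cd "$1" && find . -type f -print0 | sort -z | xargs -0 -r sha256sum | sha256sum | awk '{ print $1 }' )
}

# Succeed only when both arguments are directories whose regular files
# have identical relative paths and contents.
same_tree() {
  [ -d "$1" ] && [ -d "$2" ] && [ "$(tree_digest "$1")" = "$(tree_digest "$2")" ]
}
```

A call such as `same_tree /data/tidb-data/tikv-20160 /data1/data/tidb-data/tikv-20160 && echo "copy matches"` (the destination path is an assumption) only makes sense while TiKV is still stopped, since a running store keeps changing its files.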

3. Start TiKV

tiup cluster start tidb-risk -N 10.0.0.17:20160

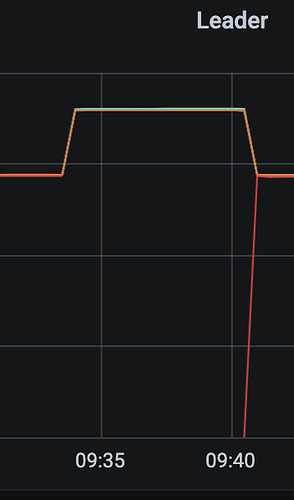

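From the TiKV-Details monitoring:

After one TiKV was stopped, leaders were re-elected; once the node recovered, leaders were rebalanced across the nodes again.

At that point the application received the following alert: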

com.mysql.cj.jdbc.exceptions.CommunicationsException: Communications link failure

The last packet successfully received from the server was 10,674 milliseconds ago. The last packet sent successfully to the server was 10,674 milliseconds ago.

at com.mysql.cj.jdbc.exceptions.SQLError.createCommunicationsException(SQLError.java:174)

at com.mysql.cj.jdbc.exceptions.SQLExceptionsMapping.translateException(SQLExceptionsMapping.java:64)

at com.mysql.cj.jdbc.ClientPreparedStatement.executeInternal(ClientPreparedStatement.java:953)

at com.mysql.cj.jdbc.ClientPreparedStatement.execute(ClientPreparedStatement.java:370)

at com.opay.realtime.etl.util.JdbcUtil.riskSink(JdbcUtil.scala:142)

at com.opay.risk.features.sink.TableSinkMappingBroadcastProcessExt$TableSinkMappingProcess.doProcess(TableSinkMappingBroadcastProcessExt.scala:34)

at com.opay.risk.features.sink.TableSinkMappingBroadcastProcessFunction$$anonfun$processElement$1.apply(TableSinkMappingBroadcastProcessFunction.scala:64)

at com.opay.risk.features.sink.TableSinkMappingBroadcastProcessFunction$$anonfun$processElement$1.apply(TableSinkMappingBroadcastProcessFunction.scala:63)

at scala.collection.immutable.Map$Map1.foreach(Map.scala:116)

at com.opay.risk.features.sink.TableSinkMappingBroadcastProcessFunction.processElement(TableSinkMappingBroadcastProcessFunction.scala:63)

at com.opay.risk.features.sink.TableSinkMappingBroadcastProcessFunction.processElement(TableSinkMappingBroadcastProcessFunction.scala:24)

at org.apache.flink.streaming.api.operators.co.CoBroadcastWithNonKeyedOperator.processElement1(CoBroadcastWithNonKeyedOperator.java:110)

at org.apache.flink.streaming.runtime.io.StreamTwoInputProcessorFactory.processRecord1(StreamTwoInputProcessorFactory.java:213)

at org.apache.flink.streaming.runtime.io.StreamTwoInputProcessorFactory.lambda$create$0(StreamTwoInputProcessorFactory.java:178)

at org.apache.flink.streaming.runtime.io.StreamTwoInputProcessorFactory$StreamTaskNetworkOutput.emitRecord(StreamTwoInputProcessorFactory.java:291)

at org.apache.flink.streaming.runtime.io.AbstractStreamTaskNetworkInput.processElement(AbstractStreamTaskNetworkInput.java:134)

at org.apache.flink.streaming.runtime.io.AbstractStreamTaskNetworkInput.emitNext(AbstractStreamTaskNetworkInput.java:105)

at org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:66)

at org.apache.flink.streaming.runtime.io.StreamTwoInputProcessor.processInput(StreamTwoInputProcessor.java:96)

at org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:423)

at org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:204)

at org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:684)

at org.apache.flink.streaming.runtime.tasks.StreamTask.executeInvoke(StreamTask.java:639)

at org.apache.flink.streaming.runtime.tasks.StreamTask.runWithCleanUpOnFail(StreamTask.java:650)

at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:623)

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:779)

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:566)

at java.lang.Thread.run(Thread.java:750)

Caused by: com.mysql.cj.exceptions.CJCommunicationsException: Communications link failure

The last packet successfully received from the server was 10,674 milliseconds ago. The last packet sent successfully to the server was 10,674 milliseconds ago.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.cj.exceptions.ExceptionFactory.createException(ExceptionFactory.java:61)

at com.mysql.cj.exceptions.ExceptionFactory.createException(ExceptionFactory.java:105)

at com.mysql.cj.exceptions.ExceptionFactory.createException(ExceptionFactory.java:151)

at com.mysql.cj.exceptions.ExceptionFactory.createCommunicationsException(ExceptionFactory.java:167)

at com.mysql.cj.protocol.a.NativeProtocol.readMessage(NativeProtocol.java:546)

at com.mysql.cj.protocol.a.NativeProtocol.checkErrorMessage(NativeProtocol.java:710)

at com.mysql.cj.protocol.a.NativeProtocol.sendCommand(NativeProtocol.java:649)

at com.mysql.cj.protocol.a.NativeProtocol.sendQueryPacket(NativeProtocol.java:948)

at com.mysql.cj.NativeSession.execSQL(NativeSession.java:1075)

at com.mysql.cj.jdbc.ClientPreparedStatement.executeInternal(ClientPreparedStatement.java:930)

… 25 more

Caused by: java.io.EOFException: Can not read response from server. Expected to read 4 bytes, read 0 bytes before connection was unexpectedly lost.

at com.mysql.cj.protocol.FullReadInputStream.readFully(FullReadInputStream.java:67)

at com.mysql.cj.protocol.a.SimplePacketReader.readHeader(SimplePacketReader.java:63)

at com.mysql.cj.protocol.a.SimplePacketReader.readHeader(SimplePacketReader.java:45)

at com.mysql.cj.protocol.a.TimeTrackingPacketReader.readHeader(TimeTrackingPacketReader.java:52)

at com.mysql.cj.protocol.a.TimeTrackingPacketReader.readHeader(TimeTrackingPacketReader.java:41)

at com.mysql.cj.protocol.a.MultiPacketReader.readHeader(MultiPacketReader.java:54)

at com.mysql.cj.protocol.a.MultiPacketReader.readHeader(MultiPacketReader.java:44)

at com.mysql.cj.protocol.a.NativeProtocol.readMessage(NativeProtocol.java:540)

… 30 more

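Connection parameters: jdbc:mysql://10.0.0.11:3306/orders?useSSL=false&rewriteBatchedStatements=true&autoReconnect=true

Port 3306 here is an HAProxy instance running on the PD node, proxying the three tidb-4000 nodes behind it.

My understanding is that operating on TiKV triggers leader elections and requests are then routed to the new stores, so this should not cause connection errors on the TiDB side. Why did it interrupt the connections to TiDB here?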
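
Because the JDBC connections go through HAProxy on port 3306, one thing that may be worth ruling out is whether the proxy's timeouts are shorter than the stall the client saw (about 10.7 s between the last packet and the disconnect in the trace). The sketch below lists the `timeout` directives from an HAProxy configuration read on stdin. The helper name `haproxy_timeouts` and the config path in the usage note are assumptions, and the result alone does not establish the cause.

```shell
#!/usr/bin/env bash
# List HAProxy timeout directives (for example "client 10s") from a
# configuration read on stdin. Commented-out lines are skipped because
# their first field is not exactly "timeout".
haproxy_timeouts() {
  awk '$1 == "timeout" { print $2, $3 }'
}
```

Usage might look like `haproxy_timeouts < /etc/haproxy/haproxy.cfg`, where the path is assumed and may differ on the PD host. If `timeout client` or `timeout server` turns out to be around 10 seconds, a statement that waits longer than that during the leader transfer could be cut off by the proxy rather than by TiDB.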