OS: Fedora Linux 38 (Workstation Edition) x86_64

Kernel: 6.3.7-200.fc38.x86_64

TiDB version: v7.1.0

Documentation followed: https://docs.pingcap.com/zh/tidb/dev/quick-start-with-tidb#在单机上模拟部署生产环境集群

Deployment configuration:

# # Global variables are applied to all deployments and used as the default value of
# # the deployments if a specific deployment value is missing.
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/data/tidb/deploy"
  data_dir: "/data/tidb/data"

# # Monitored variables are applied to all the machines.
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115

server_configs:
  tidb:
    log.slow-threshold: 300
  tikv:
    readpool.storage.use-unified-pool: false
    readpool.coprocessor.use-unified-pool: true
  pd:
    replication.enable-placement-rules: true
    replication.location-labels: ["host"]
  tiflash:
    logger.level: "info"

pd_servers:
  - host: 172.21.117.108

tidb_servers:
  - host: 172.21.117.108

tikv_servers:
  - host: 172.21.117.108
    port: 20160
    status_port: 20180
    config:
      server.labels: { host: "logic-host-1" }

  - host: 172.21.117.108
    port: 20161
    status_port: 20181
    config:
      server.labels: { host: "logic-host-2" }

  - host: 172.21.117.108
    port: 20162
    status_port: 20182
    config:
      server.labels: { host: "logic-host-3" }

tiflash_servers:
  - host: 172.21.117.108

monitoring_servers:
  - host: 172.21.117.108

grafana_servers:
  - host: 172.21.117.108

Command and output:

$ tiup cluster deploy tilocal v7.1.0 ./topo.yaml --user root -p

tiup is checking updates for component cluster ...

Starting component `cluster`: /home/user/.tiup/components/cluster/v1.12.3/tiup-cluster deploy tilocal v7.1.0 ./topo.yaml --user root -p

Input SSH password:

+ Detect CPU Arch Name

- Detecting node 172.21.117.108 Arch info ... Done

+ Detect CPU OS Name

- Detecting node 172.21.117.108 OS info ... Done

Please confirm your topology:

Cluster type: tidb

Cluster name: tilocal

Cluster version: v7.1.0

Role        Host            Ports                            OS/Arch       Directories
----        ----            -----                            -------       -----------
pd          172.21.117.108  2379/2380                        linux/x86_64  /data/tidb/deploy/pd-2379,/data/tidb/data/pd-2379
tikv        172.21.117.108  20160/20180                      linux/x86_64  /data/tidb/deploy/tikv-20160,/data/tidb/data/tikv-20160
tikv        172.21.117.108  20161/20181                      linux/x86_64  /data/tidb/deploy/tikv-20161,/data/tidb/data/tikv-20161
tikv        172.21.117.108  20162/20182                      linux/x86_64  /data/tidb/deploy/tikv-20162,/data/tidb/data/tikv-20162
tidb        172.21.117.108  4000/10080                       linux/x86_64  /data/tidb/deploy/tidb-4000
tiflash     172.21.117.108  9000/8123/3930/20170/20292/8234  linux/x86_64  /data/tidb/deploy/tiflash-9000,/data/tidb/data/tiflash-9000
prometheus  172.21.117.108  9090/12020                       linux/x86_64  /data/tidb/deploy/prometheus-9090,/data/tidb/data/prometheus-9090
grafana     172.21.117.108  3000                             linux/x86_64  /data/tidb/deploy/grafana-3000

Attention:

1. If the topology is not what you expected, check your yaml file.

2. Please confirm there is no port/directory conflicts in same host.

Do you want to continue? [y/N]: (default=N) y

+ Generate SSH keys ... Done

+ Download TiDB components

- Download pd:v7.1.0 (linux/amd64) ... Done

- Download tikv:v7.1.0 (linux/amd64) ... Done

- Download tidb:v7.1.0 (linux/amd64) ... Done

- Download tiflash:v7.1.0 (linux/amd64) ... Done

- Download prometheus:v7.1.0 (linux/amd64) ... Done

- Download grafana:v7.1.0 (linux/amd64) ... Done

- Download node_exporter: (linux/amd64) ... Done

- Download blackbox_exporter: (linux/amd64) ... Done

+ Initialize target host environments

- Prepare 172.21.117.108:22 ... Done

+ Deploy TiDB instance

- Copy pd -> 172.21.117.108 ... Error

- Copy tikv -> 172.21.117.108 ... Error

- Copy tikv -> 172.21.117.108 ... Error

- Copy tikv -> 172.21.117.108 ... Error

- Copy tidb -> 172.21.117.108 ... Error

- Copy tiflash -> 172.21.117.108 ... Error

- Copy prometheus -> 172.21.117.108 ... Error

- Copy grafana -> 172.21.117.108 ... Error

- Deploy node_exporter -> 172.21.117.108 ... Error

- Deploy blackbox_exporter -> 172.21.117.108 ... Error

Error: failed to scp /home/user/.tiup/storage/cluster/packages/tidb-v7.1.0-linux-amd64.tar.gz to 172.21.117.108:/data/tidb/deploy/tidb-4000/bin/tidb-v7.1.0-linux-amd64.tar.gz: failed to scp /home/user/.tiup/storage/cluster/packages/tidb-v7.1.0-linux-amd64.tar.gz to tidb@172.21.117.108:/data/tidb/deploy/tidb-4000/bin/tidb-v7.1.0-linux-amd64.tar.gz: Process exited with status 1

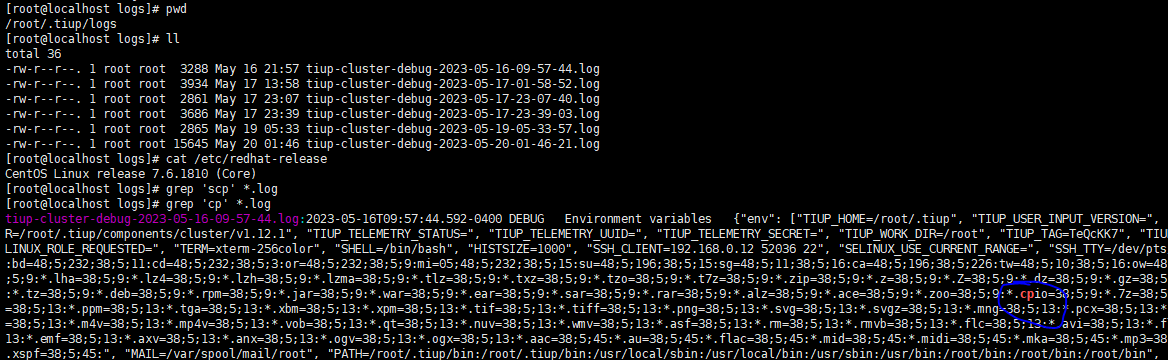

Verbose debug logs has been written to /home/user/.tiup/logs/tiup-cluster-debug-2023-06-16-16-15-25.log.

Log (last entry):

2023-06-16T16:15:25.300+0800 INFO Execute command finished {"code": 1, "error": "failed to scp /home/user/.tiup/storage/cluster/packages/tidb-v7.1.0-linux-amd64.tar.gz to 172.21.117.108:/data/tidb/deploy/tidb-4000/bin/tidb-v7.1.0-linux-amd64.tar.gz: failed to scp /home/user/.tiup/storage/cluster/packages/tidb-v7.1.0-linux-amd64.tar.gz to tidb@172.21.117.108:/data/tidb/deploy/tidb-4000/bin/tidb-v7.1.0-linux-amd64.tar.gz: Process exited with status 1", "errorVerbose": "Process exited with status 1\nfailed to scp /home/user/.tiup/storage/cluster/packages/tidb-v7.1.0-linux-amd64.tar.gz to tidb@172.21.117.108:/data/tidb/deploy/tidb-4000/bin/tidb-v7.1.0-linux-amd64.tar.gz\ngithub.com/pingcap/tiup/pkg/cluster/executor.(*EasySSHExecutor).Transfer\n\tgithub.com/pingcap/tiup/pkg/cluster/executor/ssh.go:207\ngithub.com/pingcap/tiup/pkg/cluster/executor.(*CheckPointExecutor).Transfer\n\tgithub.com/pingcap/tiup/pkg/cluster/executor/checkpoint.go:114\ngithub.com/pingcap/tiup/pkg/cluster/task.(*InstallPackage).Execute\n\tgithub.com/pingcap/tiup/pkg/cluster/task/install_package.go:45\ngithub.com/pingcap/tiup/pkg/cluster/task.(*CopyComponent).Execute\n\tgithub.com/pingcap/tiup/pkg/cluster/task/copy_component.go:64\ngithub.com/pingcap/tiup/pkg/cluster/task.(*Serial).Execute\n\tgithub.com/pingcap/tiup/pkg/cluster/task/task.go:86\ngithub.com/pingcap/tiup/pkg/cluster/task.(*StepDisplay).Execute\n\tgithub.com/pingcap/tiup/pkg/cluster/task/step.go:111\ngithub.com/pingcap/tiup/pkg/cluster/task.(*Parallel).Execute.func1\n\tgithub.com/pingcap/tiup/pkg/cluster/task/task.go:144\nruntime.goexit\n\truntime/asm_amd64.s:1594\nfailed to scp /home/user/.tiup/storage/cluster/packages/tidb-v7.1.0-linux-amd64.tar.gz to 172.21.117.108:/data/tidb/deploy/tidb-4000/bin/tidb-v7.1.0-linux-amd64.tar.gz"}

I then tried running the following command manually:

scp /home/user/.tiup/storage/cluster/packages/tidb-v7.1.0-linux-amd64.tar.gz tidb@172.21.117.108:/data/tidb/deploy/

but it prompts for a password.
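The password prompt on its own may not mean much. A plain scp as tidb@ does not use the key pair that tiup generated during "Generate SSH keys", so it most likely falls back to password authentication. The manual command also copies to /data/tidb/deploy/ rather than the tidb-4000/bin/ directory named in the error. Below is a rough sketch of a closer reproduction. It only prints the commands (a dry run). The key path is an assumption about where tiup stores the key for the cluster "tilocal" and should be confirmed on the control machine. The variable names (TIUP_DIR, KEY_PATH and so on) are made up for illustration.

```shell
#!/bin/sh
# Dry-run sketch: prints the commands instead of running them.

# Taken from the paths shown in the tiup output above.
TIUP_DIR="/home/user/.tiup"
PKG="$TIUP_DIR/storage/cluster/packages/tidb-v7.1.0-linux-amd64.tar.gz"
REMOTE="tidb@172.21.117.108"
REMOTE_DIR="/data/tidb/deploy/tidb-4000/bin"

# ASSUMPTION: tiup keeps the private key generated for this cluster here.
# This path does not appear in the output above; verify it before use.
CLUSTER_NAME="tilocal"
KEY_PATH="$TIUP_DIR/storage/cluster/clusters/$CLUSTER_NAME/ssh/id_rsa"

if [ ! -f "$KEY_PATH" ]; then
  echo "note: no key found at $KEY_PATH (the path is an assumption)" >&2
fi

# BatchMode=yes makes ssh/scp fail instead of prompting for a password,
# so an authentication problem shows up as an explicit error.
SSH_OPTS="-i $KEY_PATH -o BatchMode=yes"

# 1. Check that the target directory exists and who owns it.
CHECK_CMD="ssh $SSH_OPTS -p 22 $REMOTE ls -ld $REMOTE_DIR"
# 2. Repeat the copy that tiup attempted, to the same destination.
SCP_CMD="scp $SSH_OPTS -P 22 $PKG $REMOTE:$REMOTE_DIR/"

echo "$CHECK_CMD"
echo "$SCP_CMD"
```

Running the two printed commands by hand should show whether the failure is in authentication (the first command is rejected) or in the copy itself (the first succeeds but scp exits with status 1). Adding -v to the scp command gives more detail on the second case.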