补充:

按照先使用binlogctl offline-pump,再tiup scale in的方法也不行

执行命令以及结果:

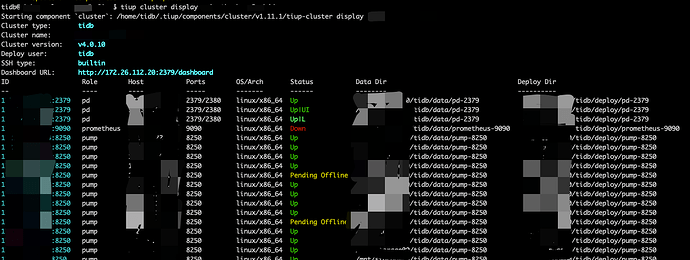

- 缩容pump节点xxx.xx.xx.xx:8250

binlogctl -pd-urls=http://xxx.xx.xx.xx:2379 -cmd offline-pump -node-id xxx.xx.xx.xx:8250

- 执行结果

[2023/06/06 17:40:56.477 +08:00] [INFO] [nodes.go:123] [“Apply action on node success”] [action=close] [NodeID=xxx.xx.xx.xx:8250]

- 查看pump状态

binlogctl -pd-urls=http://xxx.xx.xx.xx:2379 -cmd pumps |grep xxx.xx.xx.xx

- 执行结果

[2023/06/06 17:45:32.783 +08:00] [INFO] [nodes.go:53] [“query node”] [type=pump] [node=“{NodeID: xxx.xx.xx.xx:8250, Addr: xxx.xx.xx.xx:8250, State: online, MaxCommitTS: 441986437858984243, UpdateTime: 2023-06-06 17:40:55 +0800 CST}”]

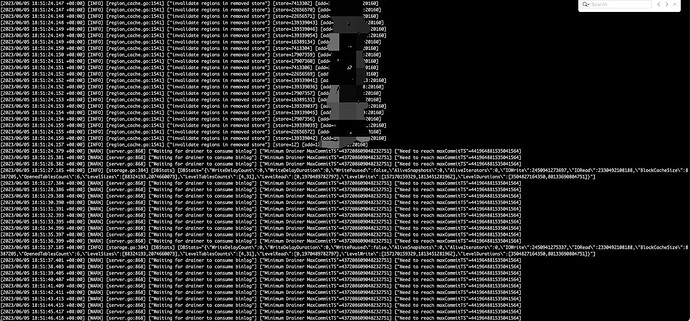

pump的运行日志(去除无关日志)

[2023/06/06 17:40:26.532 +08:00] [INFO] [server.go:567] [“server info tick”] [writeBinlogCount=5254631854] [alivePullerCount=2] [MaxCommitTS=441986430138843378]

[2023/06/06 17:40:26.532 +08:00] [INFO] [server.go:548] [“use gc ts to detect drainer checkpoint”] [“gc ts”=441826942830247936]

[2023/06/06 17:40:26.533 +08:00] [ERROR] [server.go:639] [“drainer’s checkpoint is older than pump gc ts, some binlogs are purged”] [drainer=xxx.xx.xx.xx:8249] [“gc ts”=441845817198247936] [“drainer checkpoint”=441511349769469954]

[2023/06/06 17:40:26.533 +08:00] [ERROR] [server.go:639] [“drainer’s checkpoint is older than pump gc ts, some binlogs are purged”] [drainer=xxx.xxx.xx.xx:8249] [“gc ts”=441845817198247936] [“drainer checkpoint”=437208609048232751]

[2023/06/06 17:40:30.695 +08:00] [INFO] [server.go:596] [“send gc request to storage”] [“request gc ts”=441827886548910080]

[2023/06/06 17:40:30.695 +08:00] [INFO] [storage.go:695] [“Starting GC”] [ts=441827880781742080]

[2023/06/06 17:40:30.695 +08:00] [INFO] [storage.go:752] [“New LevelDB iterator created for GC”] [ts=441827880781742080] [start=0] [limit=441827880781742081]

[2023/06/06 17:40:30.695 +08:00] [INFO] [vlog.go:482] [“GC vlog”] [ts=441827880781742080]

[2023/06/06 17:40:30.695 +08:00] [INFO] [storage.go:701] [“Finish VLog GC”] [ts=441827880781742080]

[2023/06/06 17:40:31.444 +08:00] [INFO] [storage.go:791] [“has delete”] [“delete num”=102400]

[2023/06/06 17:40:31.605 +08:00] [INFO] [storage.go:791] [“has delete”] [“delete num”=204800]

[2023/06/06 17:40:31.738 +08:00] [INFO] [storage.go:791] [“has delete”] [“delete num”=307200]

[2023/06/06 17:40:31.909 +08:00] [INFO] [storage.go:791] [“has delete”] [“delete num”=409600]

[2023/06/06 17:40:32.033 +08:00] [INFO] [storage.go:791] [“has delete”] [“delete num”=512000]

[2023/06/06 17:40:32.155 +08:00] [INFO] [storage.go:791] [“has delete”] [“delete num”=614400]

[2023/06/06 17:40:32.359 +08:00] [INFO] [storage.go:791] [“has delete”] [“delete num”=716800]

[2023/06/06 17:40:32.483 +08:00] [INFO] [storage.go:791] [“has delete”] [“delete num”=819200]

[2023/06/06 17:40:32.607 +08:00] [INFO] [storage.go:791] [“has delete”] [“delete num”=921600]

[2023/06/06 17:40:32.799 +08:00] [INFO] [storage.go:791] [“has delete”] [“delete num”=1024000]

[2023/06/06 17:40:32.892 +08:00] [INFO] [storage.go:783] [“Finish KV GC”] [ts=441827880781742080] [“delete num”=1115135]

[2023/06/06 17:40:36.532 +08:00] [INFO] [server.go:567] [“server info tick”] [writeBinlogCount=5254636083] [alivePullerCount=2] [MaxCommitTS=441986432760283250]

[2023/06/06 17:40:46.532 +08:00] [INFO] [server.go:567] [“server info tick”] [writeBinlogCount=5254640366] [alivePullerCount=2] [MaxCommitTS=441986435381723699]

[执行offline-pump命令]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:786] [“receive action”] [nodeID=xxx.xx.xx.xx:8250] [action=close]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:809] [“pump’s state change to closing”] [nodeID=xxx.xx.xx.xx:8250]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:908] [“begin to close pump server”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:541] [“detect drainer checkpoint routine exit”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:564] [“printServerInfo exit”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:517] [“genFakeBinlog exit”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [node.go:236] [“Heartbeat goroutine exited”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:582] [“gcBinlogFile exit”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:917] [“background goroutins are stopped”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:955] [“The max commit ts saved is less than expected commit ts”] [“max commit ts”=441986437976948822] [“expected commit ts”=441986437976948997]

[2023/06/06 17:40:56.443 +08:00] [WARN] [server.go:256] [“reject write binlog for not online state”] [state=closing]

[2023/06/06 17:40:56.445 +08:00] [WARN] [server.go:256] [“reject write binlog for not online state”] [state=closing]

[2023/06/06 17:40:56.446 +08:00] [WARN] [server.go:256] [“reject write binlog for not online state”] [state=closing]

[2023/06/06 17:40:32.892 +08:00] [INFO] [storage.go:783] [“Finish KV GC”] [ts=441827880781742080] [“delete num”=1115135]

[2023/06/06 17:40:36.530 +08:00] [INFO] [storage.go:384] [DBStats] [DBStats=“{"WriteDelayCount":0,"WriteDelayDuration":0,"WritePaused":false,"AliveSnapshots":0,"AliveIterators":0,"IOWrite":2462233661022,"IORead":2340245933251,"BlockCacheSi ze":8387624,"OpenedTablesCount":6,"LevelSizes":[87744451,2117234218],"LevelTablesCounts":[4,32],"LevelRead":[0,1979484705349],"LevelWrite":[157887247225,1821787228222],"LevelDurations":[3515638558788,80076360188282]}”]

[2023/06/06 17:40:36.532 +08:00] [INFO] [server.go:567] [“server info tick”] [writeBinlogCount=5254636083] [alivePullerCount=2] [MaxCommitTS=441986432760283250]

[2023/06/06 17:40:46.530 +08:00] [INFO] [storage.go:384] [DBStats] [DBStats=“{"WriteDelayCount":0,"WriteDelayDuration":0,"WritePaused":false,"AliveSnapshots":0,"AliveIterators":0,"IOWrite":2462233998683,"IORead":2340245933251,"BlockCacheSi ze":8387624,"OpenedTablesCount":6,"LevelSizes":[87744451,2117234218],"LevelTablesCounts":[4,32],"LevelRead":[0,1979484705349],"LevelWrite":[157887247225,1821787228222],"LevelDurations":[3515638558788,80076360188282]}”]

[2023/06/06 17:40:46.532 +08:00] [INFO] [server.go:567] [“server info tick”] [writeBinlogCount=5254640366] [alivePullerCount=2] [MaxCommitTS=441986435381723699]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:786] [“receive action”] [nodeID=xxx.xx.xx.xx:8250] [action=close]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:809] [“pump’s state change to closing”] [nodeID=xxx.xx.xx.xx:8250]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:908] [“begin to close pump server”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:541] [“detect drainer checkpoint routine exit”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:564] [“printServerInfo exit”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:517] [“genFakeBinlog exit”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [node.go:236] [“Heartbeat goroutine exited”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:582] [“gcBinlogFile exit”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:917] [“background goroutins are stopped”]

[2023/06/06 17:40:56.434 +08:00] [INFO] [server.go:955] [“The max commit ts saved is less than expected commit ts”] [“max commit ts”=441986437976948822] [“expected commit ts”=441986437976948997]

[2023/06/06 17:40:56.443 +08:00] [WARN] [server.go:256] [“reject write binlog for not online state”] [state=closing]

[2023/06/06 17:40:56.445 +08:00] [WARN] [server.go:256] [“reject write binlog for not online state”] [state=closing]

[2023/06/06 17:40:56.446 +08:00] [WARN] [server.go:256] [“reject write binlog for not online state”] [state=closing]

[2023/06/06 17:40:58.437 +08:00] [WARN] [server.go:868] [“Waiting for drainer to consume binlog”] [“Minimum Drainer MaxCommitTS”=437208609048232751] [“Need to reach maxCommitTS”=441986437976948997]

[2023/06/06 17:40:59.439 +08:00] [WARN] [server.go:868] [“Waiting for drainer to consume binlog”] [“Minimum Drainer MaxCommitTS”=437208609048232751] [“Need to reach maxCommitTS”=441986437976948997]

[2023/06/06 17:41:00.440 +08:00] [WARN] [server.go:868] [“Waiting for drainer to consume binlog”] [“Minimum Drainer MaxCommitTS”=437208609048232751] [“Need to reach maxCommitTS”=441986437976948997]

[2023/06/06 17:41:06.530 +08:00] [INFO] [storage.go:384] [DBStats] [DBStats=“{"WriteDelayCount":0,"WriteDelayDuration":0,"WritePaused":false,"AliveSnapshots":0,"AliveIterators":0,"IOWrite":2462234279219,"IORead":2340245933251,"BlockCacheSi ze":8387624,"OpenedTablesCount":6,"LevelSizes":[87744451,2117234218],"LevelTablesCounts":[4,32],"LevelRead":[0,1979484705349],"LevelWrite":[157887247225,1821787228222],"LevelDurations":[3515638558788,80076360188282]}”]

[2023/06/06 17:41:11.347 +08:00] [ERROR] [server.go:746] [“get pumps’ status failed”] [error=“context canceled”] [errorVerbose=“context canceled\ngithub.com/pingcap/errors.AddStack\n\t/home/tomwei7/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.202011261 02027-b0a155152ca3/errors.go:174\ngithub.com/pingcap/errors.Trace\n\t/home/tomwei7/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20201126102027-b0a155152ca3/juju_adaptor.go:15\ngithub.com/pingcap/tidb-binlog/pkg/etcd.(*Client).List\n\t/home/tomwei7/bili bili/repos/db-rd/tidb-binlog/pkg/etcd/etcd.go:164\ngithub.com/pingcap/tidb-binlog/pkg/node.(*EtcdRegistry).Nodes\n\t/home/tomwei7/bilibili/repos/db-rd/tidb-binlog/pkg/node/registry.go:75\ngithub.com/pingcap/tidb-binlog/pump.(*pumpNode).NodesStatus\n\t/ho me/tomwei7/bilibili/repos/db-rd/tidb-binlog/pump/node.go:228\ngithub.com/pingcap/tidb-binlog/pump.(*Server).PumpStatus\n\t/home/tomwei7/bilibili/repos/db-rd/tidb-binlog/pump/server.go:744\ngithub.com/pingcap/tidb-binlog/pump.(*Server).Status\n\t/home/tom wei7/bilibili/repos/db-rd/tidb-binlog/pump/server.go:683\nnet/http.HandlerFunc.ServeHTTP\n\t/usr/lib/go/src/net/http/server.go:2109\ngithub.com/gorilla/mux.(*Router).ServeHTTP\n\t/home/tomwei7/go/pkg/mod/github.com/gorilla/mux@v1.7.4/mux.go:210\nnet/http .(*ServeMux).ServeHTTP\n\t/usr/lib/go/src/net/http/server.go:2487\nnet/http.serverHandler.ServeHTTP\n\t/usr/lib/go/src/net/http/server.go:2947\nnet/http.(*conn).serve\n\t/usr/lib/go/src/net/http/server.go:1991\nruntime.goexit\n\t/usr/lib/go/src/runtime/a sm_amd64.s:1594”]