【TiDB Environment】Production

【TiDB Version】7.1.0

【Reproduction Steps】Operations performed before the problem occurred

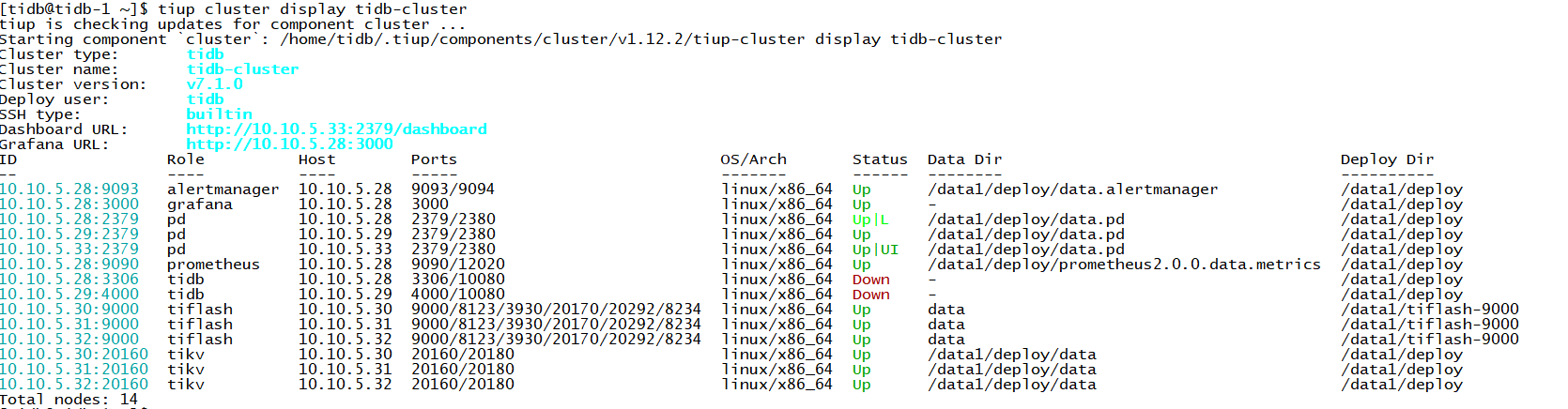

```shell
tiup cluster upgrade tidb-cluster v7.1.0
```

【Problem Encountered: Symptoms and Impact】

```
Upgrading component tidb
Restarting instance 10.10.5.28:3306
Error: failed to restart: 10.10.5.28 tidb-3306.service, please check the instance's log(/data1/deploy/log) for more detail.: timed out waiting for port 3306 to be started after 2m0s
Verbose debug logs has been written to /home/tidb/.tiup/logs/tiup-cluster-debug-2023-06-05-22-59-43.log.
```

【Resource Configuration】

【Attachments: Screenshots/Logs/Monitoring】

tidb.log:

```
[2023/06/05 22:57:43.848 +08:00] [INFO] [owner_daemon.go:81] ["begin running daemon"] [id=f6cc546c-de99-4c0d-b104-5bab6fc17e22] [daemon-id=LogBackup::Advancer]
[2023/06/05 22:57:43.855 +08:00] [INFO] [session.go:3852] ["CRUCIAL OPERATION"] [conn=0] [schemaVersion=130240] [cur_db=] [sql="ALTER TABLE mysql.stats_meta_history ADD COLUMN IF NOT EXISTS `source` varchar(40) NOT NULL after `version`;"] [user=]
[2023/06/05 22:57:43.856 +08:00] [INFO] [session.go:3852] ["CRUCIAL OPERATION"] [conn=0] [schemaVersion=130240] [cur_db=] [sql="CREATE TABLE IF NOT EXISTS mysql.tidb_ttl_task (\n\t\tjob_id varchar(64) NOT NULL,\n\t\ttable_id bigint(64) NOT NULL,\n\t\tscan_id int NOT NULL,\n\t\tscan_range_start BLOB,\n\t\tscan_range_end BLOB,\n\t\texpire_time timestamp NOT NULL,\n\t\towner_id varchar(64) DEFAULT NULL,\n\t\towner_addr varchar(64) DEFAULT NULL,\n\t\towner_hb_time timestamp DEFAULT NULL,\n\t\tstatus varchar(64) DEFAULT 'waiting',\n\t\tstatus_update_time timestamp NULL DEFAULT NULL,\n\t\tstate text,\n\t\tcreated_time timestamp NOT NULL,\n\t\tprimary key(job_id, scan_id),\n\t\tkey(created_time));"] [user=]
[2023/06/05 22:57:43.856 +08:00] [INFO] [session.go:3852] ["CRUCIAL OPERATION"] [conn=0] [schemaVersion=130240] [cur_db=] [sql="CREATE TABLE IF NOT EXISTS mysql.tidb_ttl_job_history (\n\t\tjob_id varchar(64) PRIMARY KEY,\n\t\ttable_id bigint(64) NOT NULL,\n parent_table_id bigint(64) NOT NULL,\n \ttable_schema varchar(64) NOT NULL,\n\t\ttable_name varchar(64) NOT NULL,\n \tpartition_name varchar(64) DEFAULT NULL,\n\t\tcreate_time timestamp NOT NULL,\n\t\tfinish_time timestamp NOT NULL,\n\t\tttl_expire timestamp NOT NULL,\n summary_text text,\n\t\texpired_rows bigint(64) DEFAULT NULL,\n \tdeleted_rows bigint(64) DEFAULT NULL,\n \terror_delete_rows bigint(64) DEFAULT NULL,\n \tstatus varchar(64) NOT NULL,\n \tkey(table_schema, table_name, create_time),\n \tkey(parent_table_id, create_time),\n \tkey(create_time)\n\t);"] [user=]
[2023/06/05 22:57:43.904 +08:00] [INFO] [ddl_worker.go:238] ["[ddl] add DDL jobs"] ["batch count"=1] [jobs="ID:123481, Type:create view, State:queueing, SchemaState:none, SchemaID:3, TableID:123480, RowCount:0, ArgLen:3, start time: 2023-06-05 22:57:43.999 +0800 CST, Err:<nil>, ErrCount:0, SnapshotVersion:0; "] [table=true]
[2023/06/05 22:57:43.906 +08:00] [INFO] [ddl.go:1056] ["[ddl] start DDL job"] [job="ID:123481, Type:create view, State:queueing, SchemaState:none, SchemaID:3, TableID:123480, RowCount:0, ArgLen:3, start time: 2023-06-05 22:57:43.999 +0800 CST, Err:<nil>, ErrCount:0, SnapshotVersion:0"] [query="CREATE OR REPLACE VIEW mysql.tidb_mdl_view as (\n\t\tSELECT job_id,\n\t\t\tdb_name,\n\t\t\ttable_name,\n\t\t\tquery,\n\t\t\tsession_id,\n\t\t\ttxnstart,\n\t\t\ttidb_decode_sql_digests(all_sql_digests, 4096) AS SQL_DIGESTS\n\t\tFROM information_schema.ddl_jobs,\n\t\t\tinformation_schema.cluster_tidb_trx,\n\t\t\tinformation_schema.cluster_processlist\n\t\tWHERE (ddl_jobs.state != 'synced' and ddl_jobs.state != 'cancelled')\n\t\t\tAND Find_in_set(ddl_jobs.table_id, cluster_tidb_trx.related_table_ids)\n\t\t\tAND cluster_tidb_trx.session_id = cluster_processlist.id\n\t);"]
[2023/06/05 22:57:45.843 +08:00] [INFO] [info.go:1189] [SetTiFlashGroupConfig]
[2023/06/05 22:58:06.347 +08:00] [WARN] [domain.go:215] ["failed to get schema version"] [error="There is no Write MVCC info for the schema version"] [version=130240]
[2023/06/05 22:58:28.847 +08:00] [WARN] [domain.go:215] ["failed to get schema version"] [error="There is no Write MVCC info for the schema version"] [version=130240]
[2023/06/05 22:58:51.347 +08:00] [WARN] [domain.go:215] ["failed to get schema version"] [error="There is no Write MVCC info for the schema version"] [version=130240]
[2023/06/05 22:59:13.847 +08:00] [WARN] [domain.go:215] ["failed to get schema version"] [error="There is no Write MVCC info for the schema version"] [version=130240]
[2023/06/05 22:59:36.348 +08:00] [WARN] [domain.go:215] ["failed to get schema version"] [error="There is no Write MVCC info for the schema version"] [version=130240]
[2023/06/05 22:59:58.847 +08:00] [WARN] [domain.go:215] ["failed to get schema version"] [error="There is no Write MVCC info for the schema version"] [version=130240]
[2023/06/05 23:00:21.347 +08:00] [WARN] [domain.go:215] ["failed to get schema version"] [error="There is no Write MVCC info for the schema version"] [version=130240]
[2023/06/05 23:00:43.847 +08:00] [WARN] [domain.go:215] ["failed to get schema version"] [error="There is no Write MVCC info for the schema version"] [version=130240]
[2023/06/05 23:01:06.347 +08:00] [WARN] [domain.go:215] ["failed to get schema version"] [error="There is no Write MVCC info for the schema version"] [version=130240]
```