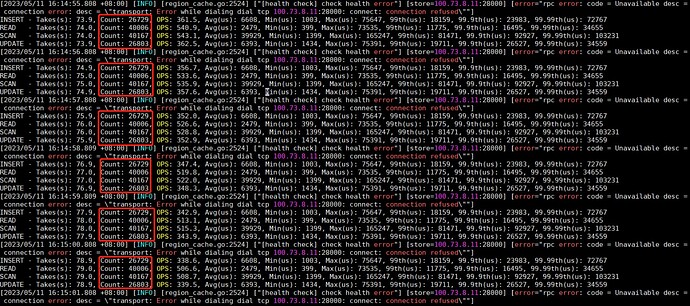

日志显示,kill TiKV 发生在 16:14:55

May 11, 2023 @ 16:14:55.810 [store.rs:2808] ["broadcasting <mark>unreachable</mark>"] [unreachable_store_id=1] [store_id=5]

May 11, 2023 @ 16:14:55.810 [store.rs:2808] ["broadcasting <mark>unreachable</mark>"] [unreachable_store_id=1] [store_id=4]

而 Raft 重新发起选举发生在 16:15:05 之后

May 11, 2023 @ 16:15:05.557 [raft.rs:1550] ["starting a new <mark>election</mark>"] [term=11] [raft_id=123] [region_id=120]

May 11, 2023 @ 16:15:06.578 [raft.rs:1550] ["starting a new <mark>election</mark>"] [term=13] [raft_id=11] [region_id=8]

May 11, 2023 @ 16:15:06.582 [raft.rs:1550] ["starting a new <mark>election</mark>"] [term=11] [raft_id=79] [region_id=76]

May 11, 2023 @ 16:15:07.579 [raft.rs:1550] ["starting a new <mark>election</mark>"] [term=9] [raft_id=35] [region_id=32]

May 11, 2023 @ 16:15:07.580 [raft.rs:1550] ["starting a new <mark>election</mark>"] [term=10] [raft_id=43] [region_id=40]

中间间隔超过 10s,是因为 TiKV 默认配置中 leader 心跳超时为 10s

如果要缩短中断时间,可以相应减少这个心跳超时。可以在 TiKV 配置中增加以下内容:

[raftstore]

raft-base-tick-interval = "1s"

raft-election-timeout-ticks = 3

raft-heartbeat-ticks = 1

pd-heartbeat-tick-interval = "5s"

raft-store-max-leader-lease = "2s"

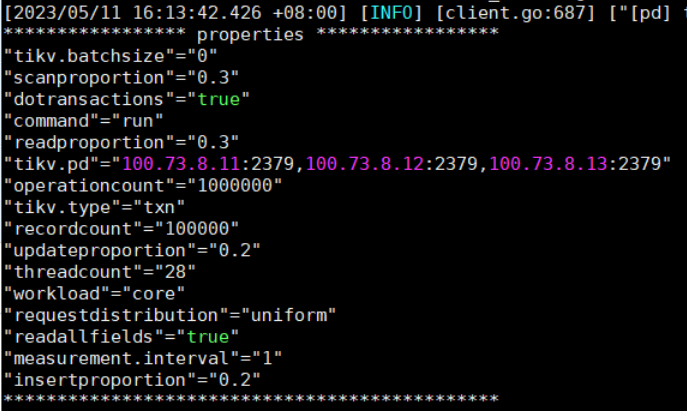

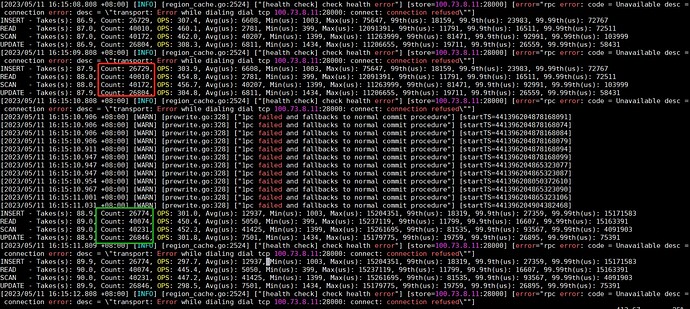

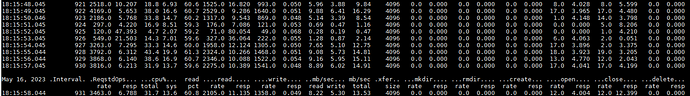

测试效果如下(注意 TOTAL 行。v6.5.2,PD x 1 + TiKV x 3):

Using request distribution 'uniform' a keyrange of [0 99999]

[2023/05/14 10:41:59.791 +08:00] [INFO] [client.go:392] ["[pd] create pd client with endpoints"] [pd-address="[127.0.0.1:33379]"]

[2023/05/14 10:41:59.796 +08:00] [INFO] [base_client.go:350] ["[pd] switch leader"] [new-leader=http://127.0.0.1:33379] [old-leader=]

[2023/05/14 10:41:59.796 +08:00] [INFO] [base_client.go:105] ["[pd] init cluster id"] [cluster-id=7232856776285670424]

[2023/05/14 10:41:59.797 +08:00] [INFO] [client.go:687] ["[pd] tso dispatcher created"] [dc-location=global]

***************** properties *****************

"requestdistribution"="uniform"

"operationcount"="100000000"

"updateproportion"="0.5"

"readallfields"="true"

"measurement.interval"="1"

"tikv.batchsize"="0"

"dotransactions"="true"

"command"="run"

"readproportion"="0.5"

"tikv.pd"="127.0.0.1:33379"

"tikv.type"="txn"

"workload"="core"

"insertproportion"="0"

"threadcount"="20"

"recordcount"="100000"

"scanproportion"="0"

**********************************************

...

...

READ - Takes(s): 10.9, Count: 76030, OPS: 6946.1, Avg(us): 746, Min(us): 360, Max(us): 92671, 50th(us): 677, 90th(us): 902, 95th(us): 1020, 99th(us): 2001, 99.9th(us): 5643, 99.99th(us): 55007

TOTAL - Takes(s): 10.9, Count: 151528, OPS: 13845.0, Avg(us): 1441, Min(us): 360, Max(us): 96319, 50th(us): 1537, 90th(us): 2305, 95th(us): 2589, 99th(us): 4227, 99.9th(us): 7951, 99.99th(us): 56575

UPDATE - Takes(s): 10.9, Count: 75498, OPS: 6909.5, Avg(us): 2141, Min(us): 1255, Max(us): 96319, 50th(us): 1993, 90th(us): 2555, 95th(us): 2915, 99th(us): 5743, 99.9th(us): 8631, 99.99th(us): 74815

UPDATE_ERROR - Takes(s): 9.9, Count: 8, OPS: 0.8, Avg(us): 2803, Min(us): 1452, Max(us): 7531, 50th(us): 2261, 90th(us): 3615, 95th(us): 7531, 99th(us): 7531, 99.9th(us): 7531, 99.99th(us): 7531

READ - Takes(s): 11.9, Count: 83421, OPS: 6983.2, Avg(us): 741, Min(us): 360, Max(us): 92671, 50th(us): 674, 90th(us): 897, 95th(us): 1014, 99th(us): 1973, 99.9th(us): 5615, 99.99th(us): 55007

TOTAL - Takes(s): 11.9, Count: 166370, OPS: 13928.4, Avg(us): 1432, Min(us): 360, Max(us): 96319, 50th(us): 1533, 90th(us): 2289, 95th(us): 2563, 99th(us): 4187, 99.9th(us): 7843, 99.99th(us): 56447

UPDATE - Takes(s): 11.9, Count: 82949, OPS: 6954.9, Avg(us): 2126, Min(us): 1255, Max(us): 96319, 50th(us): 1981, 90th(us): 2531, 95th(us): 2883, 99th(us): 5715, 99.9th(us): 8399, 99.99th(us): 74815

UPDATE_ERROR - Takes(s): 10.9, Count: 8, OPS: 0.7, Avg(us): 2803, Min(us): 1452, Max(us): 7531, 50th(us): 2261, 90th(us): 3615, 95th(us): 7531, 99th(us): 7531, 99.9th(us): 7531, 99.99th(us): 7531

[2023/05/14 10:42:12.335 +08:00] [INFO] [region_cache.go:2524] ["[health check] check health error"] [store=127.0.0.1:20162] [error="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial tcp 127.0.0.1:20162: connect: connection refused\""]

[2023/05/14 10:42:12.335 +08:00] [INFO] [region_request.go:794] ["mark store's regions need be refill"] [id=2] [addr=127.0.0.1:20162] [error="rpc error: code = Unavailable desc = error reading from server: read tcp 127.0.0.1:50720->127.0.0.1:20162: read: connection reset by peer"] [errorVerbose="rpc error: code = Unavailable desc = error reading from server: read tcp 127.0.0.1:50720->127.0.0.1:20162: read: connection reset by peer\ngithub.com/tikv/client-go/v2/tikvrpc.CallRPC\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/tikvrpc/tikvrpc.go:1064\ngithub.com/tikv/client-go/v2/internal/client.(*RPCClient).sendRequest\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/internal/client/client.go:524\ngithub.com/tikv/client-go/v2/internal/client.(*RPCClient).SendRequest\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/internal/client/client.go:533\ngithub.com/tikv/client-go/v2/internal/client.interceptedClient.SendRequest\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/internal/client/client_interceptor.go:42\ngithub.com/tikv/client-go/v2/internal/client.reqCollapse.SendRequest\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/internal/client/client_collapse.go:74\ngithub.com/tikv/client-go/v2/internal/locate.(*RegionRequestSender).sendReqToRegion\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/internal/locate/region_request.go:1184\ngithub.com/tikv/client-go/v2/internal/locate.(*RegionRequestSender).SendReqCtx\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/internal/locate/region_request.go:1017\ngithub.com/tikv/client-go/v2/txnkv/txnsnapshot.(*ClientHelper).SendReqCtx\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/txnkv/txnsnapshot/client_helper.go:146\ngithub.com/tikv/client-go/v2/txnkv/txnsnapshot.(*KVSnapshot).get\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/txnkv/txnsnapshot/snapshot.go:620\ngithub.com/tikv/client-go/v2/txnkv/txnsnapshot.(*KVSnapshot).Get\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/txnkv/txnsnapshot/snapshot.go:529\ngithub.com/tikv/client-go/v2/internal/unionstore.(*KVUnionStore).Get\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/internal/unionstore/union_store.go:102\ngithub.com/tikv/client-go/v2/txnkv/transaction.(*KVTxn).Get\n\t/disk1/home/pingyu/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220720064224-aa9ded37d17d/txnkv/transaction/txn.go:172\ngithub.com/pingcap/go-ycsb/db/tikv.(*txnDB).Read\n\t/disk1/home/pingyu/workspace/go-ycsb/db/tikv/txn.go:103\ngithub.com/pingcap/go-ycsb/pkg/client.DbWrapper.Read\n\t/disk1/home/pingyu/workspace/go-ycsb/pkg/client/dbwrapper.go:59\ngithub.com/pingcap/go-ycsb/pkg/workload.(*core).doTransactionRead\n\t/disk1/home/pingyu/workspace/go-ycsb/pkg/workload/core.go:429\ngithub.com/pingcap/go-ycsb/pkg/workload.(*core).DoTransaction\n\t/disk1/home/pingyu/workspace/go-ycsb/pkg/workload/core.go:366\ngithub.com/pingcap/go-ycsb/pkg/client.(*worker).run\n\t/disk1/home/pingyu/workspace/go-ycsb/pkg/client/client.go:129\ngithub.com/pingcap/go-ycsb/pkg/client.(*Client).Run.func2\n\t/disk1/home/pingyu/workspace/go-ycsb/pkg/client/client.go:215\nruntime.goexit\n\t/disk1/home/pingyu/opt/go-1.20.2/src/runtime/asm_amd64.s:1598"]

[2023/05/14 10:42:12.336 +08:00] [INFO] [region_cache.go:2524] ["[health check] check health error"] [store=127.0.0.1:20162] [error="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial tcp 127.0.0.1:20162: connect: connection refused\""]

READ - Takes(s): 12.9, Count: 85747, OPS: 6622.9, Avg(us): 740, Min(us): 360, Max(us): 92671, 50th(us): 673, 90th(us): 896, 95th(us): 1013, 99th(us): 1994, 99.9th(us): 5615, 99.99th(us): 54911

TOTAL - Takes(s): 12.9, Count: 170985, OPS: 13207.7, Avg(us): 1430, Min(us): 360, Max(us): 96319, 50th(us): 1530, 90th(us): 2283, 95th(us): 2559, 99th(us): 4203, 99.9th(us): 7887, 99.99th(us): 56447

UPDATE - Takes(s): 12.9, Count: 85238, OPS: 6594.1, Avg(us): 2123, Min(us): 1103, Max(us): 96319, 50th(us): 1978, 90th(us): 2527, 95th(us): 2879, 99th(us): 5711, 99.9th(us): 8623, 99.99th(us): 74687

UPDATE_ERROR - Takes(s): 11.9, Count: 8, OPS: 0.7, Avg(us): 2803, Min(us): 1452, Max(us): 7531, 50th(us): 2261, 90th(us): 3615, 95th(us): 7531, 99th(us): 7531, 99.9th(us): 7531, 99.99th(us): 7531

[2023/05/14 10:42:13.336 +08:00] [INFO] [region_cache.go:2524] ["[health check] check health error"] [store=127.0.0.1:20162] [error="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial tcp 127.0.0.1:20162: connect: connection refused\""]

READ - Takes(s): 13.9, Count: 85747, OPS: 6148.1, Avg(us): 740, Min(us): 360, Max(us): 92671, 50th(us): 673, 90th(us): 896, 95th(us): 1013, 99th(us): 1994, 99.9th(us): 5615, 99.99th(us): 54911

TOTAL - Takes(s): 13.9, Count: 170985, OPS: 12260.6, Avg(us): 1430, Min(us): 360, Max(us): 96319, 50th(us): 1530, 90th(us): 2283, 95th(us): 2559, 99th(us): 4203, 99.9th(us): 7887, 99.99th(us): 56447

UPDATE - Takes(s): 13.9, Count: 85238, OPS: 6120.8, Avg(us): 2123, Min(us): 1103, Max(us): 96319, 50th(us): 1978, 90th(us): 2527, 95th(us): 2879, 99th(us): 5711, 99.9th(us): 8623, 99.99th(us): 74687

UPDATE_ERROR - Takes(s): 12.9, Count: 8, OPS: 0.6, Avg(us): 2803, Min(us): 1452, Max(us): 7531, 50th(us): 2261, 90th(us): 3615, 95th(us): 7531, 99th(us): 7531, 99.9th(us): 7531, 99.99th(us): 7531

[2023/05/14 10:42:14.336 +08:00] [INFO] [region_cache.go:2524] ["[health check] check health error"] [store=127.0.0.1:20162] [error="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial tcp 127.0.0.1:20162: connect: connection refused\""]

READ - Takes(s): 14.9, Count: 85751, OPS: 5736.9, Avg(us): 822, Min(us): 360, Max(us): 2334719, 50th(us): 673, 90th(us): 896, 95th(us): 1014, 99th(us): 1995, 99.9th(us): 5643, 99.99th(us): 56447

TOTAL - Takes(s): 14.9, Count: 170993, OPS: 11440.1, Avg(us): 1471, Min(us): 360, Max(us): 2334719, 50th(us): 1530, 90th(us): 2285, 95th(us): 2559, 99th(us): 4207, 99.9th(us): 7891, 99.99th(us): 74047

UPDATE - Takes(s): 14.9, Count: 85242, OPS: 5709.7, Avg(us): 2123, Min(us): 1103, Max(us): 96319, 50th(us): 1978, 90th(us): 2527, 95th(us): 2879, 99th(us): 5711, 99.9th(us): 8623, 99.99th(us): 74687

UPDATE_ERROR - Takes(s): 13.9, Count: 8, OPS: 0.6, Avg(us): 2803, Min(us): 1452, Max(us): 7531, 50th(us): 2261, 90th(us): 3615, 95th(us): 7531, 99th(us): 7531, 99.9th(us): 7531, 99.99th(us): 7531

[2023/05/14 10:42:14.928 +08:00] [WARN] [prewrite.go:328] ["1pc failed and fallbacks to normal commit procedure"] [startTS=441458919238009000]

[2023/05/14 10:42:15.336 +08:00] [INFO] [region_cache.go:2524] ["[health check] check health error"] [store=127.0.0.1:20162] [error="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial tcp 127.0.0.1:20162: connect: connection refused\""]

READ - Takes(s): 15.9, Count: 88471, OPS: 5547.9, Avg(us): 1204, Min(us): 360, Max(us): 3330047, 50th(us): 672, 90th(us): 896, 95th(us): 1013, 99th(us): 2018, 99.9th(us): 5795, 99.99th(us): 2807807

TOTAL - Takes(s): 15.9, Count: 176332, OPS: 11058.2, Avg(us): 1804, Min(us): 360, Max(us): 3330047, 50th(us): 1522, 90th(us): 2279, 95th(us): 2555, 99th(us): 4231, 99.9th(us): 8175, 99.99th(us): 2334719

UPDATE - Takes(s): 15.9, Count: 87861, OPS: 5516.9, Avg(us): 2408, Min(us): 1103, Max(us): 3315711, 50th(us): 1975, 90th(us): 2521, 95th(us): 2871, 99th(us): 5715, 99.9th(us): 9039, 99.99th(us): 524031

UPDATE_ERROR - Takes(s): 14.9, Count: 9, OPS: 0.6, Avg(us): 2711, Min(us): 1452, Max(us): 7531, 50th(us): 2261, 90th(us): 3615, 95th(us): 7531, 99th(us): 7531, 99.9th(us): 7531, 99.99th(us): 7531

[2023/05/14 10:42:16.336 +08:00] [INFO] [region_cache.go:2524] ["[health check] check health error"] [store=127.0.0.1:20162] [error="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial tcp 127.0.0.1:20162: connect: connection refused\""]

READ - Takes(s): 16.9, Count: 96508, OPS: 5694.8, Avg(us): 1157, Min(us): 335, Max(us): 3330047, 50th(us): 665, 90th(us): 888, 95th(us): 1004, 99th(us): 2003, 99.9th(us): 5703, 99.99th(us): 2334719

TOTAL - Takes(s): 16.9, Count: 192374, OPS: 11353.1, Avg(us): 1756, Min(us): 335, Max(us): 3330047, 50th(us): 1487, 90th(us): 2261, 95th(us): 2531, 99th(us): 4199, 99.9th(us): 8023, 99.99th(us): 2328575

UPDATE - Takes(s): 16.9, Count: 95866, OPS: 5663.4, Avg(us): 2360, Min(us): 1103, Max(us): 3315711, 50th(us): 1955, 90th(us): 2501, 95th(us): 2851, 99th(us): 5619, 99.9th(us): 8727, 99.99th(us): 523263

UPDATE_ERROR - Takes(s): 15.9, Count: 9, OPS: 0.6, Avg(us): 2711, Min(us): 1452, Max(us): 7531, 50th(us): 2261, 90th(us): 3615, 95th(us): 7531, 99th(us): 7531, 99.9th(us): 7531, 99.99th(us): 7531

[2023/05/14 10:42:17.336 +08:00] [INFO] [region_cache.go:2524] ["[health check] check health error"] [store=127.0.0.1:20162] [error="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial tcp 127.0.0.1:20162: connect: connection refused\""]

READ - Takes(s): 17.9, Count: 104498, OPS: 5822.7, Avg(us): 1117, Min(us): 335, Max(us): 3330047, 50th(us): 659, 90th(us): 881, 95th(us): 997, 99th(us): 1994, 99.9th(us): 5643, 99.99th(us): 2334719

TOTAL - Takes(s): 17.9, Count: 208098, OPS: 11596.1, Avg(us): 1719, Min(us): 335, Max(us): 3330047, 50th(us): 1472, 90th(us): 2247, 95th(us): 2513, 99th(us): 4215, 99.9th(us): 7887, 99.99th(us): 529407

UPDATE - Takes(s): 17.9, Count: 103600, OPS: 5779.4, Avg(us): 2326, Min(us): 1103, Max(us): 3315711, 50th(us): 1941, 90th(us): 2485, 95th(us): 2835, 99th(us): 5607, 99.9th(us): 8519, 99.99th(us): 523263

UPDATE_ERROR - Takes(s): 16.9, Count: 9, OPS: 0.5, Avg(us): 2711, Min(us): 1452, Max(us): 7531, 50th(us): 2261, 90th(us): 3615, 95th(us): 7531, 99th(us): 7531, 99.9th(us): 7531, 99.99th(us): 7531

[2023/05/14 10:42:18.336 +08:00] [INFO] [region_cache.go:2524] ["[health check] check health error"] [store=127.0.0.1:20162] [error="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial tcp 127.0.0.1:20162: connect: connection refused\""]

READ - Takes(s): 18.9, Count: 112175, OPS: 5920.6, Avg(us): 1086, Min(us): 335, Max(us): 3330047, 50th(us): 655, 90th(us): 876, 95th(us): 991, 99th(us): 1995, 99.9th(us): 5611, 99.99th(us): 2334719

TOTAL - Takes(s): 18.9, Count: 223470, OPS: 11795.4, Avg(us): 1689, Min(us): 335, Max(us): 3330047, 50th(us): 1467, 90th(us): 2233, 95th(us): 2499, 99th(us): 4227, 99.9th(us): 7831, 99.99th(us): 527359

UPDATE - Takes(s): 18.9, Count: 111295, OPS: 5880.6, Avg(us): 2298, Min(us): 1103, Max(us): 3315711, 50th(us): 1930, 90th(us): 2469, 95th(us): 2819, 99th(us): 5631, 99.9th(us): 8383, 99.99th(us): 522751

UPDATE_ERROR - Takes(s): 17.9, Count: 10, OPS: 0.6, Avg(us): 2700, Min(us): 1452, Max(us): 7531, 50th(us): 2261, 90th(us): 3615, 95th(us): 7531, 99th(us): 7531, 99.9th(us): 7531, 99.99th(us): 7531

[2023/05/14 10:42:19.336 +08:00] [INFO] [region_cache.go:2524] ["[health check] check health error"] [store=127.0.0.1:20162] [error="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial tcp 127.0.0.1:20162: connect: connection refused\""]

READ - Takes(s): 19.9, Count: 119907, OPS: 6011.3, Avg(us): 1058, Min(us): 335, Max(us): 3330047, 50th(us): 651, 90th(us): 871, 95th(us): 986, 99th(us): 1994, 99.9th(us): 5567, 99.99th(us): 2328575

TOTAL - Takes(s): 19.9, Count: 238969, OPS: 11981.5, Avg(us): 1663, Min(us): 335, Max(us): 3330047, 50th(us): 1461, 90th(us): 2223, 95th(us): 2487, 99th(us): 4259, 99.9th(us): 7759, 99.99th(us): 523263

UPDATE - Takes(s): 19.9, Count: 119062, OPS: 5975.3, Avg(us): 2272, Min(us): 1103, Max(us): 3315711, 50th(us): 1920, 90th(us): 2455, 95th(us): 2809, 99th(us): 5647, 99.9th(us): 8215, 99.99th(us): 522751

UPDATE_ERROR - Takes(s): 18.9, Count: 12, OPS: 0.6, Avg(us): 2491, Min(us): 1385, Max(us): 7531, 50th(us): 1972, 90th(us): 3615, 95th(us): 3615, 99th(us): 7531, 99.9th(us): 7531, 99.99th(us): 7531

注意,修改后,Raft 相关开销会增加。可以结合实际业务需要调整配置。

此外,相关原理可以参考 专栏 - 高可用测试:KILL TiKV-Server,事务 TPS 掉零现象解读 | TiDB 社区