【TiDB Environment】Production

【TiDB Version】v6.1.2 on k8s

【Problem: symptoms and impact】

- Replaced the TiFlash machine by scaling in and then scaling out (the store ID changed from 1846400725 to 2751805262).

- After running `alter table XXX set tiflash replica 1`, the data is not replicated to TiFlash:


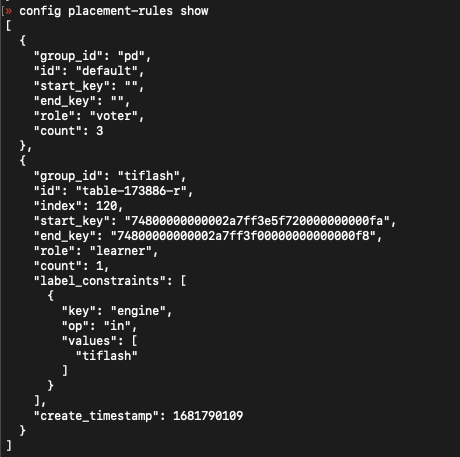
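One way to quantify the symptom from SQL is TiDB's `information_schema.tiflash_replica` table, which reports `REPLICA_COUNT`, `AVAILABLE` and `PROGRESS` per table. The sketch below is only an illustration: it assumes the rows were already fetched with the query shown in the comment, and the `ReplicaRow` type, the `unsynced_tables` helper and the sample rows are hypothetical.

```python
from typing import List, NamedTuple

# Rows are assumed to come from a query such as:
#   SELECT TABLE_SCHEMA, TABLE_NAME, REPLICA_COUNT, AVAILABLE, PROGRESS
#   FROM information_schema.tiflash_replica;


class ReplicaRow(NamedTuple):
    table_schema: str
    table_name: str
    replica_count: int
    available: int   # 1 once the TiFlash replica can serve queries
    progress: float  # replication progress in [0, 1]


def unsynced_tables(rows: List[ReplicaRow]) -> List[str]:
    """Return "schema.table" for each table whose TiFlash replica is not ready yet."""
    return [
        f"{r.table_schema}.{r.table_name}"
        for r in rows
        if r.replica_count > 0 and (r.available != 1 or r.progress < 1.0)
    ]


# Hypothetical rows: one table stuck at progress 0, one fully synced table.
rows = [
    ReplicaRow("db", "XXX", 1, 0, 0.0),
    ReplicaRow("db", "synced_table", 1, 1, 1.0),
]
print(unsynced_tables(rows))  # ['db.XXX']
```

A table that stays in this list with `PROGRESS` at 0 matches the "rule added but nothing replicated" situation described here.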

PD did add the placement rule successfully, but no operator is running, and the PD log contains the following:

```
[2023/04/14 15:06:46.145 +08:00] [WARN] [cluster.go:1296] ["store has been Tombstone"] [store-id=1846400725] [store-address=pre-bigdata-tidb-tiflash-0.pre-bigdata-tidb-tiflash-peer.pre-bigdata-tidb.svc:3930] [state=Up] [physically-destroyed=false]

[2023/04/14 15:07:32.041 +08:00] [WARN] [cluster.go:1145] ["not found the key match with the store label"] [store="id:2751805262 address:\"pre-bigdata-tidb-tiflash-0.pre-bigdata-tidb-tiflash-peer.pre-bigdata-tidb.svc:3930\" labels:<key:\"engine\" value:\"tiflash\" > version:\"v6.1.2\" peer_address:\"pre-bigdata-tidb-tiflash-0.pre-bigdata-tidb-tiflash-peer.pre-bigdata-tidb.svc:20170\" status_address:\"pre-bigdata-tidb-tiflash-0.pre-bigdata-tidb-tiflash-peer.pre-bigdata-tidb.svc:20292\" git_hash:\"2fa392de68269ac35827e2fd40f4aaef316e3316\" start_timestamp:1681456052 deploy_path:\"/tiflash\" "] [label-key=engine]
```
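For context on the second warning: as far as I understand PD's source around this version, PD compares every label key on a store against the `replication.location-labels` setting and logs "not found the key match with the store label" for any key not listed there (here `engine`). Under that understanding, the store is only rejected when `replication.strictly-match-label` is enabled. The Python below is a simplified re-implementation for illustration, not PD's code; the function name and the empty `location_labels` value are assumptions.

```python
from typing import Dict, List, Tuple


def check_store_labels(
    store_labels: Dict[str, str],
    location_labels: List[str],
    strictly_match_label: bool = False,
) -> Tuple[List[str], bool]:
    """Simplified illustration of PD's store-label key check (not PD's code).

    Returns the warnings that would be logged and whether the store would be
    rejected (which only happens when strictly_match_label is True).
    """
    warnings: List[str] = []
    rejected = False
    for key in store_labels:
        # A store label key missing from location-labels produces a warning;
        # it becomes an error only when strict matching is enabled.
        if key not in location_labels:
            warnings.append(f"not found the key match with the store label: {key}")
            rejected = rejected or strictly_match_label
    return warnings, rejected


# The new TiFlash store only carries engine=tiflash; assume location-labels is empty.
print(check_store_labels({"engine": "tiflash"}, []))
# → (['not found the key match with the store label: engine'], False)
```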

- The store information is shown below; nothing abnormal appears in the TiFlash log:

```json
{
  "store": {
    "id": 2751805262,
    "address": "pre-bigdata-tidb-tiflash-0.pre-bigdata-tidb-tiflash-peer.pre-bigdata-tidb.svc:3930",
    "labels": [
      {
        "key": "engine",
        "value": "tiflash"
      }
    ],
    "version": "v6.1.2",
    "peer_address": "pre-bigdata-tidb-tiflash-0.pre-bigdata-tidb-tiflash-peer.pre-bigdata-tidb.svc:20170",
    "status_address": "pre-bigdata-tidb-tiflash-0.pre-bigdata-tidb-tiflash-peer.pre-bigdata-tidb.svc:20292",
    "git_hash": "2fa392de68269ac35827e2fd40f4aaef316e3316",
    "start_timestamp": 1681460309,
    "deploy_path": "/tiflash",
    "last_heartbeat": 1681789934080047340,
    "state_name": "Up"
  },
  "status": {
    "capacity": "1.718TiB",
    "available": "1.608TiB",
    "used_size": "21.96MiB",
    "leader_count": 0,
    "leader_weight": 1,
    "leader_score": 0,
    "leader_size": 0,
    "region_count": 4,
    "region_weight": 1,
    "region_score": 4,
    "region_size": 4,
    "slow_score": 1,
    "start_ts": "2023-04-14T16:18:29+08:00",
    "last_heartbeat_ts": "2023-04-18T11:52:14.08004734+08:00",
    "uptime": "91h33m45.08004734s"
  }
}
```
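A TiFlash placement rule selects target stores through label constraints. In PD's rule format a constraint has a `key`, an `op` (`in`, `notIn`, `exists`, `notExists`) and optional `values`; for TiFlash replicas it is typically `engine in [tiflash]`. The sketch below, assuming that format, checks whether the store shown above satisfies such a constraint. It is a simplified illustration of the matching semantics rather than PD's implementation, and the constraint literal is an assumed example, not the rule actually created in this cluster.

```python
from typing import Dict, List


def match_constraint(labels: Dict[str, str], key: str, op: str, values: List[str]) -> bool:
    """Evaluate one label constraint against a store's labels (simplified)."""
    value = labels.get(key, "")
    if op == "in":
        return value != "" and value in values
    if op == "notIn":
        return value == "" or value not in values
    if op == "exists":
        return value != ""
    if op == "notExists":
        return value == ""
    raise ValueError(f"unknown op: {op}")


def match_store(store: dict, constraints: List[dict]) -> bool:
    """A store matches a rule only if every constraint matches."""
    labels = {lbl["key"]: lbl["value"] for lbl in store["store"]["labels"]}
    return all(
        match_constraint(labels, c["key"], c["op"], c.get("values", []))
        for c in constraints
    )


# Trimmed copy of the store info above.
store = {"store": {"id": 2751805262, "labels": [{"key": "engine", "value": "tiflash"}], "state_name": "Up"}}
# Assumed constraint of a TiFlash placement rule.
tiflash_rule_constraints = [{"key": "engine", "op": "in", "values": ["tiflash"]}]
print(match_store(store, tiflash_rule_constraints))  # True
```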


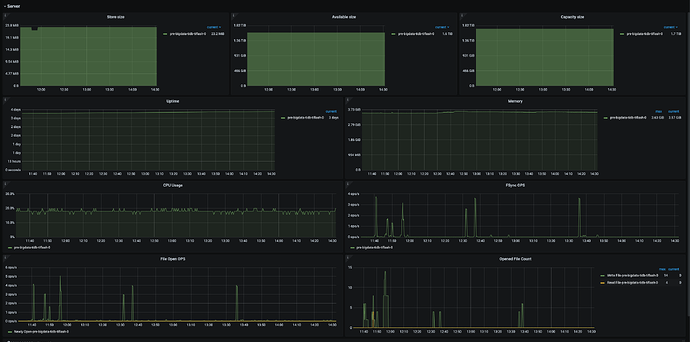

PD and TiFlash monitoring dashboards