【TiDB Environment】

Production environment deployed on Kubernetes (k8s), with more than 1 million tables

【TiDB Version】

v6.5.0

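【Steps to Reproduce】

Adjusted the CPU, memory, and replica settings of the tidb component.

The previous settings (CPU, memory, replicas) were 20, 50, 5, and I changed them to 15, 32, 3. The pods then failed to start for a long time and reported OOM errors, so I changed the settings back to 20, 50, 3.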

【Problem: Symptoms and Impact】

The tidb pods cannot start, so the configuration change failed.

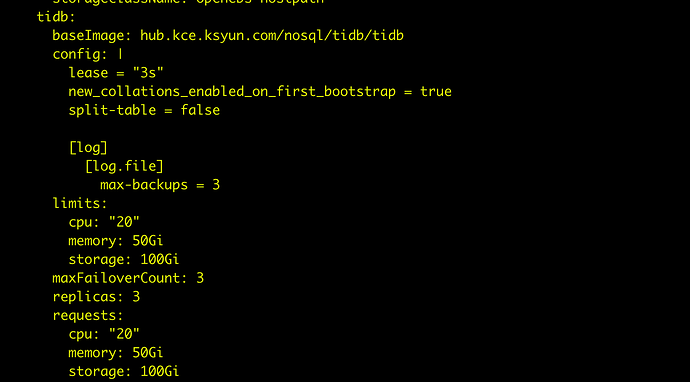

【Resource Configuration】

【Attachments: Screenshots/Logs/Monitoring】

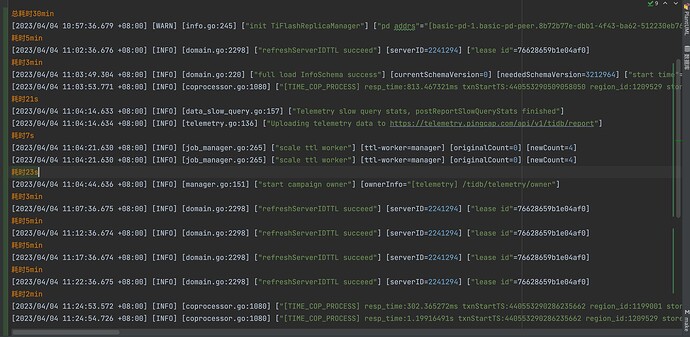

Logs:

aaa.log (17.4 KB)

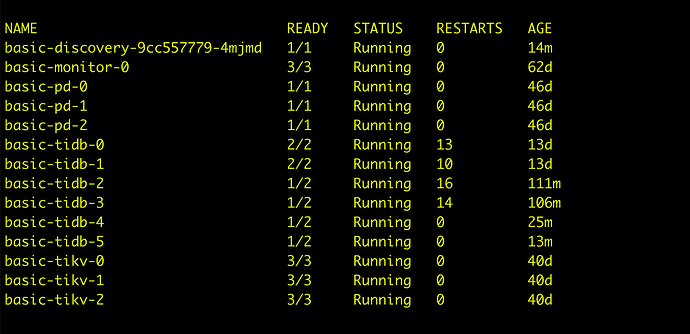

Current tidb status

Pod status

```
Name:           basic-tidb-5
Namespace:      8b72b77e-dbb1-4f43-ba62-512230eb7668
Node:           10.40.146.12/10.40.146.12
Start Time:     Mon, 03 Apr 2023 18:42:28 +0800
Labels:         app.kubernetes.io/component=tidb
                app.kubernetes.io/instance=basic
                app.kubernetes.io/managed-by=tidb-operator
                app.kubernetes.io/name=tidb-cluster
                controller-revision-hash=basic-tidb-86dd5f65
                statefulset.kubernetes.io/pod-name=basic-tidb-5
Annotations:    cni.projectcalico.org/podIP: 36.0.4.48/32
                prometheus.io/path: /metrics
                prometheus.io/port: 10080
                prometheus.io/scrape: true
Status:         Running
IP:             36.0.4.48
IPs:
  IP:           36.0.4.48
Controlled By:  StatefulSet/basic-tidb
Containers:
  slowlog:
    Container ID:  docker://d5e7b48abd7c95f9920d154f9fe96f6ed70da57deb13263ce17409414c21d81f
    Image:         hub.kce.ksyun.com/nosql/tidb/busybox:1.26.2
    Image ID:      docker-pullable://busybox@sha256:be3c11fdba7cfe299214e46edc642e09514dbb9bbefcd0d3836c05a1e0cd0642
    Port:
    Host Port:
    Command:
      sh
      -c
      touch /var/log/tidb/slowlog; tail -n0 -F /var/log/tidb/slowlog;
    State:          Running
      Started:      Mon, 03 Apr 2023 18:42:29 +0800
    Ready:          True
    Restart Count:  0
    Environment:
    Mounts:
      /var/log/tidb from slowlog (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-rdcrn (ro)
```

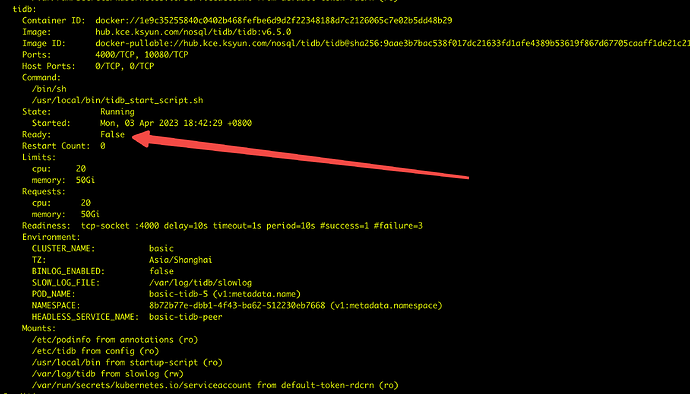

```
  tidb:
    Container ID:  docker://1e9c35255840c0402b468fefbe6d9d2f22348188d7c2126065c7e02b5dd48b29
    Image:         hub.kce.ksyun.com/nosql/tidb/tidb:v6.5.0
    Image ID:      docker-pullable://hub.kce.ksyun.com/nosql/tidb/tidb@sha256:9aae3b7bac538f017dc21633fd1afe4389b53619f867d67705caaff1de21c21e
    Ports:         4000/TCP, 10080/TCP
    Host Ports:    0/TCP, 0/TCP
    Command:
      /bin/sh
      /usr/local/bin/tidb_start_script.sh
    State:          Running
      Started:      Mon, 03 Apr 2023 18:42:29 +0800
    Ready:          False
    Restart Count:  0
    Limits:
      cpu:     20
      memory:  50Gi
    Requests:
      cpu:     20
      memory:  50Gi
    Readiness:  tcp-socket :4000 delay=10s timeout=1s period=10s #success=1 #failure=3
    Environment:
      CLUSTER_NAME:           basic
      TZ:                     Asia/Shanghai
      BINLOG_ENABLED:         false
      SLOW_LOG_FILE:          /var/log/tidb/slowlog
      POD_NAME:               basic-tidb-5 (v1:metadata.name)
      NAMESPACE:              8b72b77e-dbb1-4f43-ba62-512230eb7668 (v1:metadata.namespace)
      HEADLESS_SERVICE_NAME:  basic-tidb-peer
    Mounts:
      /etc/podinfo from annotations (ro)
      /etc/tidb from config (ro)
      /usr/local/bin from startup-script (ro)
      /var/log/tidb from slowlog (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-rdcrn (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  annotations:
    Type:  DownwardAPI (a volume populated by information about the pod)
    Items:
      metadata.annotations -> annotations
  config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      basic-tidb-3961643
    Optional:  false
  startup-script:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      basic-tidb-3961643
    Optional:  false
  slowlog:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:
    SizeLimit:
  default-token-rdcrn:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-rdcrn
    Optional:    false
QoS Class:       Burstable
Node-Selectors:
Tolerations:
Events:
  Type     Reason     Age                 From               Message
  Normal   Scheduled  18m                 default-scheduler  Successfully assigned 8b72b77e-dbb1-4f43-ba62-512230eb7668/basic-tidb-5 to 10.40.146.12
  Normal   Pulled     18m                 kubelet            Container image "hub.kce.ksyun.com/nosql/tidb/busybox:1.26.2" already present on machine
  Normal   Created    18m                 kubelet            Created container slowlog
  Normal   Started    18m                 kubelet            Started container slowlog
  Normal   Pulled     18m                 kubelet            Container image "hub.kce.ksyun.com/nosql/tidb/tidb:v6.5.0" already present on machine
  Normal   Created    18m                 kubelet            Created container tidb
  Normal   Started    18m                 kubelet            Started container tidb
  Warning  Unhealthy  3m (x90 over 17m)   kubelet            Readiness probe failed: dial tcp 36.0.4.48:4000: connect: connection refused
```
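
The readiness probe in the pod description is a plain TCP check on port 4000 with a 1s timeout, and the `Unhealthy` event shows the connection being refused. For anyone who wants to reproduce that check by hand, here is a minimal Python sketch of an equivalent test. The function name `tcp_ready` is my own (hypothetical); it only approximates what the kubelet does and is not the kubelet's actual implementation.

```python
import socket


def tcp_ready(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds.

    Roughly mirrors a `tcp-socket` readiness probe: success means something
    is listening on the port, not that the server is fully functional.
    """
    try:
        # create_connection resolves the address and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "connection refused", timeouts, and unreachable hosts.
        return False
```

Run from a machine that can reach the pod network, `tcp_ready("36.0.4.48", 4000)` should return `False` as long as the probe keeps failing. A refused connection (as opposed to a timeout) would suggest that the tidb-server process has not yet started listening on port 4000, rather than a network issue.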

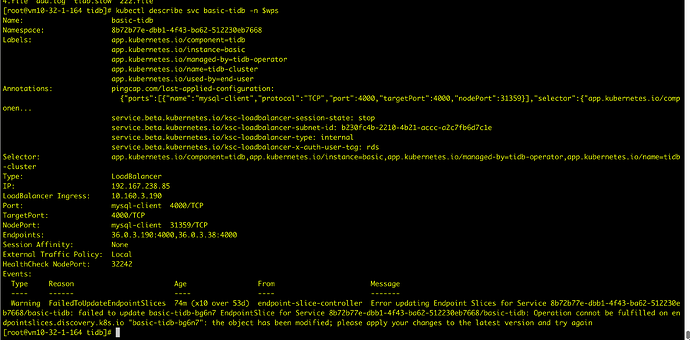

The status of the basic-tidb svc is as follows: