【TiDB Environment】Production

【TiDB Version】v6.5.1

【Steps to Reproduce】

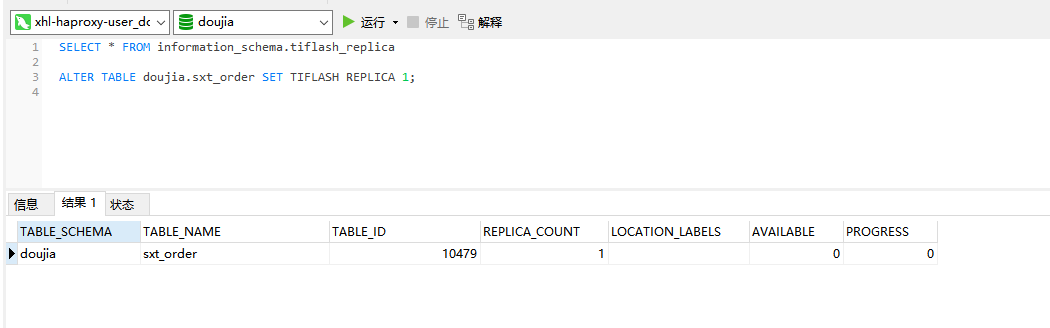

Execute ALTER TABLE sxt_order SET TIFLASH REPLICA 1;

A few minutes later, the cluster could no longer connect to TiFlash, and error logs appeared.

The log is as follows:

[2023/03/30 17:24:39.182 +08:00] [ERROR] [Exception.cpp:89] ["Code: 49, e.displayText() = DB::Exception: invalid flag 83 in write cf: physical_table_id=10479: (while preHandleSnapshot region_id=6513, index=45900, term=7), e.what() = DB::Exception, Stack trace:\n\n\n 0x17225ce\tDB::Exception::Exception(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, int) [tiflash+24257998]\n \tdbms/src/Common/Exception.h:46\n 0x6b08404\tDB::RegionCFDataBase<DB::RegionWriteCFDataTrait>::insert(DB::StringObject<true>&&, DB::StringObject<false>&&) [tiflash+112231428]\n \tdbms/src/Storages/Transaction/RegionCFDataBase.cpp:46\n 0x6a949db\tDB::DM::SSTFilesToBlockInputStream::read() [tiflash+111757787]\n \tdbms/src/Storages/DeltaMerge/SSTFilesToBlockInputStream.cpp:137\n 0x696ca15\tDB::DM::readNextBlock(std::__1::shared_ptr<DB::IBlockInputStream> const&) [tiflash+110545429]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeHelpers.h:253\n 0x6a97412\tDB::DM::PKSquashingBlockInputStream<true>::read() [tiflash+111768594]\n \tdbms/src/Storages/DeltaMerge/PKSquashingBlockInputStream.h:68\n 0x696ca15\tDB::DM::readNextBlock(std::__1::shared_ptr<DB::IBlockInputStream> const&) [tiflash+110545429]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeHelpers.h:253\n 0x16d57e5\tDB::DM::DMVersionFilterBlockInputStream<1>::initNextBlock() [tiflash+23943141]\n \tdbms/src/Storages/DeltaMerge/DMVersionFilterBlockInputStream.h:137\n 0x16d360c\tDB::DM::DMVersionFilterBlockInputStream<1>::read(DB::PODArray<unsigned char, 4096ul, Allocator<false>, 15ul, 16ul>*&, bool) [tiflash+23934476]\n \tdbms/src/Storages/DeltaMerge/DMVersionFilterBlockInputStream.cpp:51\n 0x6a96868\tDB::DM::BoundedSSTFilesToBlockInputStream::read() [tiflash+111765608]\n \tdbms/src/Storages/DeltaMerge/SSTFilesToBlockInputStream.cpp:307\n 0x16d9044\tDB::DM::SSTFilesToDTFilesOutputStream<std::__1::shared_ptr<DB::DM::BoundedSSTFilesToBlockInputStream> >::write() [tiflash+23957572]\n 
\tdbms/src/Storages/DeltaMerge/SSTFilesToDTFilesOutputStream.cpp:200\n 0x6a8d38f\tDB::KVStore::preHandleSSTsToDTFiles(std::__1::shared_ptr<DB::Region>, DB::SSTViewVec, unsigned long, unsigned long, DB::DM::FileConvertJobType, DB::TMTContext&) [tiflash+111727503]\n \tdbms/src/Storages/Transaction/ApplySnapshot.cpp:360\n 0x6a8ca64\tDB::KVStore::preHandleSnapshotToFiles(std::__1::shared_ptr<DB::Region>, DB::SSTViewVec, unsigned long, unsigned long, DB::TMTContext&) [tiflash+111725156]\n \tdbms/src/Storages/Transaction/ApplySnapshot.cpp:275\n 0x6ae7d66\tPreHandleSnapshot [tiflash+112098662]\n \tdbms/src/Storages/Transaction/ProxyFFI.cpp:388\n 0x7f693aa9a228\tengine_store_ffi::_$LT$impl$u20$engine_store_ffi..interfaces..root..DB..EngineStoreServerHelper$GT$::pre_handle_snapshot::hec57f9b0ef29a0bb [libtiflash_proxy.so+17646120]\n 0x7f693aa91d09\tengine_store_ffi::observer::pre_handle_snapshot_impl::h0b40090f59175b24 [libtiflash_proxy.so+17612041]\n 0x7f693aa84b86\tyatp::task::future::RawTask$LT$F$GT$::poll::hd3296fb5cae316b9 [libtiflash_proxy.so+17558406]\n 0x7f693c910dc3\t_$LT$yatp..task..future..Runner$u20$as$u20$yatp..pool..runner..Runner$GT$::handle::h0056e31c4da70e35 [libtiflash_proxy.so+49589699]\n 0x7f693c9036ac\tstd::sys_common::backtrace::__rust_begin_short_backtrace::h747afb2668c16dcb [libtiflash_proxy.so+49534636]\n 0x7f693c9041cc\tcore::ops::function::FnOnce::call_once$u7b$$u7b$vtable.shim$u7d$$u7d$::h83ec6721ad8db87f [libtiflash_proxy.so+49537484]\n 0x7f693c071555\tstd::sys::unix::thread::Thread::new::thread_start::hd2791a9cabec1fda [libtiflash_proxy.so+40547669]\n \t/rustc/96ddd32c4bfb1d78f0cd03eb068b1710a8cebeef/library/std/src/sys/unix/thread.rs:108\n 0x7f69397b1ea5\tstart_thread [libpthread.so.0+32421]\n 0x7f6938bb6b0d\tclone [libc.so.6+1043213]"] [source="DB::RawCppPtr DB::PreHandleSnapshot(DB::EngineStoreServerWrap *, DB::BaseBuffView, uint64_t, DB::SSTViewVec, uint64_t, uint64_t)"] [thread_id=30]
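
For anyone triaging a similar crash, here is a minimal sketch of pulling the key fields out of the exception message above. The function name `parse_tiflash_error` and the abbreviated `MESSAGE` constant are hypothetical helpers for illustration, not part of TiFlash or any TiDB tool. The only decoding it does is mapping the flag number to its ASCII character (83 is `S`); what that flag means inside the write CF is not stated in this post.

```python
import re

# Abbreviated copy of the exception message from the log above
# (the stack trace is omitted; this constant is for illustration only).
MESSAGE = (
    "Code: 49, e.displayText() = DB::Exception: invalid flag 83 in write cf: "
    "physical_table_id=10479: (while preHandleSnapshot region_id=6513, "
    "index=45900, term=7), e.what() = DB::Exception"
)


def parse_tiflash_error(message: str) -> dict:
    """Extract the numeric fields from an 'invalid flag' exception message."""
    fields = {}

    # Exception code, e.g. "Code: 49"
    code = re.search(r"Code: (\d+)", message)
    if code:
        fields["code"] = int(code.group(1))

    # The rejected flag byte, plus its ASCII character for readability
    flag = re.search(r"invalid flag (\d+) in write cf", message)
    if flag:
        fields["flag"] = int(flag.group(1))
        fields["flag_char"] = chr(fields["flag"])

    # key=value pairs that identify the table and the Raft snapshot
    pairs = re.findall(r"(physical_table_id|region_id|index|term)=(\d+)", message)
    for key, value in pairs:
        fields[key] = int(value)

    return fields


info = parse_tiflash_error(MESSAGE)
print(info)
```

The extracted `physical_table_id` and `region_id` identify which table and Region the failing snapshot belongs to, which can help confirm that the crash is tied to the table that just received the TiFlash replica.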

TiFlash error log:

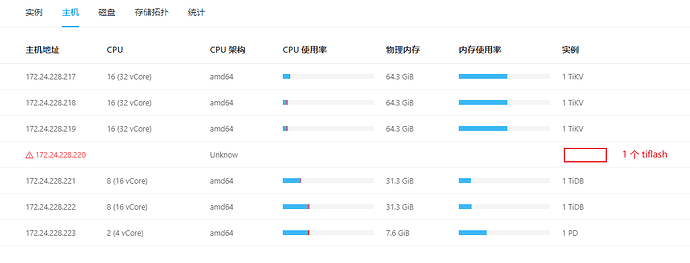

Cluster configuration:

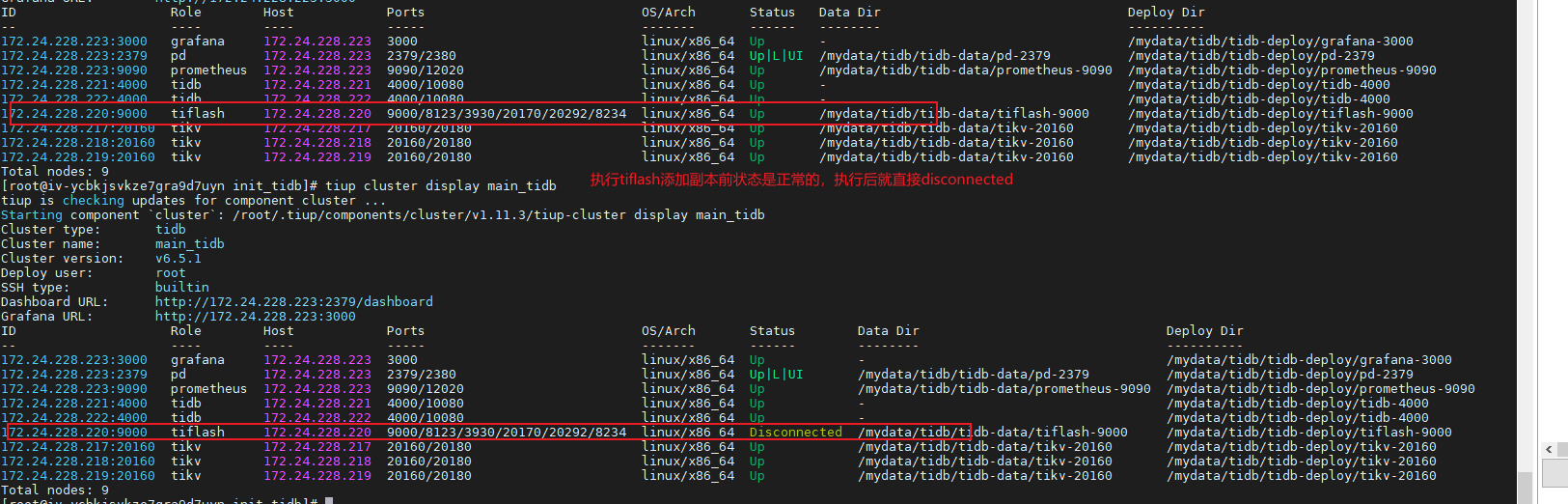

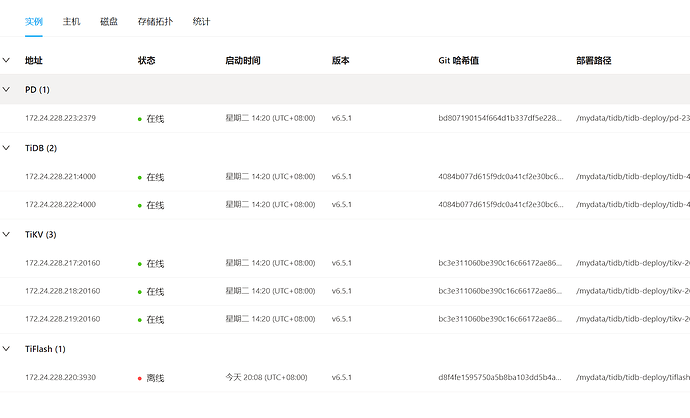

Before the statement was executed, the status was normal. After executing ALTER TABLE sxt_order SET TIFLASH REPLICA 1, TiFlash went to Disconnected.

I have already tried scaling TiFlash in and then out again, but that did not solve the problem.

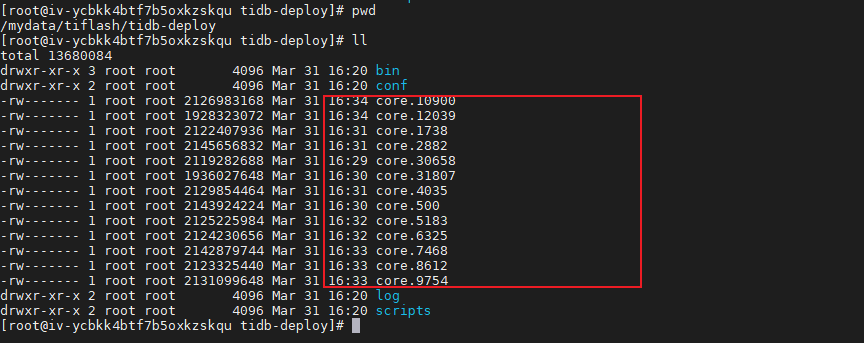

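After the error, files named core keep appearing in the TiFlash deployment directory, each about 1 GB in size. My first impression is that this looks like some kind of overflow.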

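I verified this bug. The result: whenever the downstream TiDB cluster has received data replicated from an upstream cluster via CDC, the downstream TiFlash always crashes, and no matter how many times the downstream TiFlash is scaled in and out again, it cannot start normally. This reproduces every time on v6.5.0, v6.5.1, and v7.0.0.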