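[TiDB environment] Production / Test / PoC

[TiDB version]

[Steps to reproduce] What operations led to the problem

[Problem encountered: symptoms and impact]

[Resource configuration]

[Attachments: screenshots / logs / monitoring]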

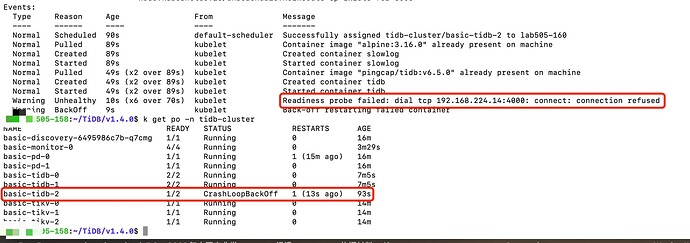

Describe the pod by name, then check its logs:

k describe po basic-tidb-2 -n tidb-cluster

Name: basic-tidb-2

Namespace: tidb-cluster

Priority: 0

Node: lab505-160/10.77.110.160

Start Time: Sun, 26 Mar 2023 10:17:27 +0800

Labels: app.kubernetes.io/component=tidb

app.kubernetes.io/instance=basic

app.kubernetes.io/managed-by=tidb-operator

app.kubernetes.io/name=tidb-cluster

controller-revision-hash=basic-tidb-7dcd7bc655

statefulset.kubernetes.io/pod-name=basic-tidb-2

tidb.pingcap.com/cluster-id=7214511500400454057

Annotations: cni.projectcalico.org/containerID: 8608f6d2a03f8592b7fdf7b3e49b9d91efea15d04001ed482a05f5733a5fcaa3

cni.projectcalico.org/podIP: 192.168.224.15/32

cni.projectcalico.org/podIPs: 192.168.224.15/32

prometheus.io/path: /metrics

prometheus.io/port: 10080

prometheus.io/scrape: true

Status: Running

IP: 192.168.224.15

IPs:

IP: 192.168.224.15

Controlled By: StatefulSet/basic-tidb

Containers:

slowlog:

Container ID: docker://ef7c79f5d4059c88671faedd93d5cf5535e62296bf7b7b88088a238bd9ad6ab9

Image: alpine:3.16.0

Image ID: docker-pullable://alpine@sha256:686d8c9dfa6f3ccfc8230bc3178d23f84eeaf7e457f36f271ab1acc53015037c

Port: <none>

Host Port: <none>

Command:

sh

-c

touch /var/log/tidb/slowlog; tail -n0 -F /var/log/tidb/slowlog;

State: Running

Started: Sun, 26 Mar 2023 10:17:28 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/log/tidb from slowlog (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-mmd7s (ro)

tidb:

Container ID: docker://bca0d3b6a46d76fd8498323d6c6d645fc95f8ae7459340a254e68d34146c19fb

Image: pingcap/tidb:v6.5.0

Image ID: docker-pullable://pingcap/tidb@sha256:64db0e93a911bf27518dd3d82b46dc3bea01e89140a9a71fd324c3cd06e89c6c

Ports: 4000/TCP, 10080/TCP

Host Ports: 0/TCP, 0/TCP

Command:

/bin/sh

/usr/local/bin/tidb_start_script.sh

State: Running

Started: Sun, 26 Mar 2023 10:18:04 +0800

Last State: Terminated

Reason: Error

Exit Code: 1

Started: Sun, 26 Mar 2023 10:17:28 +0800

Finished: Sun, 26 Mar 2023 10:18:04 +0800

Ready: False

Restart Count: 1

Limits:

cpu: 1

memory: 20Gi

Requests:

cpu: 200m

memory: 10Gi

Readiness: tcp-socket :4000 delay=10s timeout=1s period=10s #success=1 #failure=3

Environment:

CLUSTER_NAME: basic

TZ: UTC

BINLOG_ENABLED: false

SLOW_LOG_FILE: /var/log/tidb/slowlog

POD_NAME: basic-tidb-2 (v1:metadata.name)

NAMESPACE: tidb-cluster (v1:metadata.namespace)

HEADLESS_SERVICE_NAME: basic-tidb-peer

Mounts:

/etc/podinfo from annotations (ro)

/etc/tidb from config (ro)

/usr/local/bin from startup-script (ro)

/var/log/tidb from slowlog (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-mmd7s (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

annotations:

Type: DownwardAPI (a volume populated by information about the pod)

Items:

metadata.annotations -> annotations

config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: basic-tidb-6462366

Optional: false

startup-script:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: basic-tidb-6462366

Optional: false

slowlog:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

kube-api-access-mmd7s:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 55s default-scheduler Successfully assigned tidb-cluster/basic-tidb-2 to lab505-160

Normal Pulled 54s kubelet Container image "alpine:3.16.0" already present on machine

Normal Created 54s kubelet Created container slowlog

Normal Started 54s kubelet Started container slowlog

Normal Pulled 18s (x2 over 54s) kubelet Container image "pingcap/tidb:v6.5.0" already present on machine

Normal Created 18s (x2 over 54s) kubelet Created container tidb

Normal Started 18s (x2 over 54s) kubelet Started container tidb

Warning Unhealthy 5s (x3 over 35s) kubelet Readiness probe failed: dial tcp 192.168.224.15:4000: connect: connection refused

k logs basic-tidb-2 -n tidb-cluster tidb

[2023/03/26 02:19:25.158 +00:00] [INFO] [base_client.go:299] ["[pd] cannot update member from this address"] [address=http://basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379] [error="[PD:client:ErrClientGetMember]error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING: error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING"]

[2023/03/26 02:19:26.159 +00:00] [INFO] [base_client.go:299] ["[pd] cannot update member from this address"] [address=http://basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379] [error="[PD:client:ErrClientGetMember]error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING: error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING"]

[2023/03/26 02:19:26.159 +00:00] [ERROR] [base_client.go:144] ["[pd] failed updateMember"] [error="[PD:client:ErrClientGetLeader]get leader from [http://basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379 http://basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379] error"]

[2023/03/26 02:19:28.660 +00:00] [INFO] [base_client.go:299] ["[pd] cannot update member from this address"] [address=http://basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379] [error="[PD:client:ErrClientGetMember]error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING: error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING"]

[2023/03/26 02:19:29.661 +00:00] [INFO] [base_client.go:299] ["[pd] cannot update member from this address"] [address=http://basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379] [error="[PD:client:ErrClientGetMember]error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING: error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING"]

[2023/03/26 02:19:29.661 +00:00] [ERROR] [base_client.go:144] ["[pd] failed updateMember"] [error="[PD:client:ErrClientGetLeader]get leader from [http://basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379 http://basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379] error"]

[2023/03/26 02:19:32.161 +00:00] [INFO] [base_client.go:299] ["[pd] cannot update member from this address"] [address=http://basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379] [error="[PD:client:ErrClientGetMember]error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING: error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING"]

[2023/03/26 02:19:33.162 +00:00] [INFO] [base_client.go:299] ["[pd] cannot update member from this address"] [address=http://basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379] [error="[PD:client:ErrClientGetMember]error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING: error:rpc error: code = DeadlineExceeded desc = context deadline exceeded target:basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379 status:CONNECTING"]

[2023/03/26 02:19:33.162 +00:00] [ERROR] [base_client.go:144] ["[pd] failed updateMember"] [error="[PD:client:ErrClientGetLeader]get leader from [http://basic-pd-0.basic-pd-peer.tidb-cluster.svc:2379 http://basic-pd-1.basic-pd-peer.tidb-cluster.svc:2379] error"]

[2023/03/26 02:19:34.662 +00:00] [ERROR] [client.go:917] ["[pd] update connection contexts failed"] [dc=global] [error="rpc error: code = Canceled desc = context canceled"]

[2023/03/26 02:19:34.662 +00:00] [ERROR] [client.go:809] ["[pd] create tso stream error"] [dc-location=global] [error="[PD:client:ErrClientCreateTSOStream]create TSO stream failed, retry timeout"]

[2023/03/26 02:19:34.663 +00:00] [WARN] [store.go:83] ["new store with retry failed"] [error="[PD:client:ErrClientCreateTSOStream]create TSO stream failed, retry timeout"]

[2023/03/26 02:19:34.663 +00:00] [FATAL] [terror.go:300] ["unexpected error"] [error="[PD:client:ErrClientCreateTSOStream]create TSO stream failed, retry timeout"] [stack="github.com/pingcap/tidb/parser/terror.MustNil\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/parser/terror/terror.go:300\nmain.createStoreAndDomain\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:309\nmain.main\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:214\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:250"] [stack="github.com/pingcap/tidb/parser/terror.MustNil\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/parser/terror/terror.go:300\nmain.createStoreAndDomain\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:309\nmain.main\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:214\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:250"]
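The log above repeats the same failure against each PD endpoint until tidb-server gives up with `ErrClientCreateTSOStream`. To see at a glance which PD addresses are failing and how often, a small filter like the following may help. This is only a sketch: `pd_failures` is a hypothetical helper name, and it assumes the log format shown above (an `[address=...]` field on each "cannot update member from this address" line).

```shell
# Count "cannot update member" failures per PD address.
# Reads a TiDB log on stdin; pd_failures is a hypothetical helper name.
pd_failures() {
  grep -F 'cannot update member from this address' \
    | grep -o 'address=[^]]*' \
    | sort \
    | uniq -c
}
```

For example: `k logs basic-tidb-2 -n tidb-cluster tidb | pd_failures`. If every listed PD address shows up with a similar count, the problem is more likely on the PD side (pods not running, or no leader elected) than in a single broken endpoint.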

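- Please check whether resource usage looks abnormal, for example whether the node has enough memory.

- Which version is this? There is a similar issue, https://github.com/tikv/pd/issues/5207, but so far I have not found one where tidb panics.

You can check whether you are on an affected version, and try a different version if so.

If circumstances allow and resources are short, you could consider scaling PD down to a single instance. This is a graduation-project environment, so the requirements should not be that strict.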
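The suggestions above could be carried out roughly as follows. This is a sketch under assumptions, not a verified procedure: it assumes the TidbCluster resource is named `basic` (inferred from `CLUSTER_NAME` in the describe output), that the PD pods carry the label `app.kubernetes.io/component=pd` (by analogy with the `tidb` label shown above), and that the cluster is managed by TiDB Operator so replicas are changed through `spec.pd.replicas`. The function names are hypothetical.

```shell
# KUBECTL can be overridden (e.g. KUBECTL=echo) to print commands instead of running them.
KUBECTL="${KUBECTL:-kubectl}"
NS="tidb-cluster"   # namespace from the describe output above
TC="basic"          # assumed TidbCluster name, taken from CLUSTER_NAME

# 1. Are the PD pods running, and on which nodes?
check_pd_pods() {
  $KUBECTL get pods -n "$NS" -l app.kubernetes.io/component=pd -o wide
}

# 2. How much CPU and memory is already requested on a node?
#    Usage: check_node lab505-160
check_node() {
  $KUBECTL describe node "$1"
}

# 3. Ask TiDB Operator to run the given number of PD replicas.
#    Usage: scale_pd 1
scale_pd() {
  $KUBECTL patch tidbcluster "$TC" -n "$NS" --type merge \
    -p "{\"spec\":{\"pd\":{\"replicas\":$1}}}"
}
```

Running `check_pd_pods` first is worthwhile: if the PD pods are Pending or restarting, the tidb errors are a symptom rather than the cause. Scaling PD in is handled by the operator one member at a time, so it may not make progress if PD currently has no leader.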