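[TiDB environment] Production

[TiDB version]

[Reproduction path] After the machine went offline, the store entered the Offline state. I removed all peers on that store with pd-ctl operator add remove-peer, but three peers could not be removed; every attempt fails with cannot build operator for region which is in joint state. As a result, the StatefulSet pod cannot rejoin the cluster.

[Problem: symptoms and impact]

[Resource configuration]

[Attachments: screenshots/logs/monitoring]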

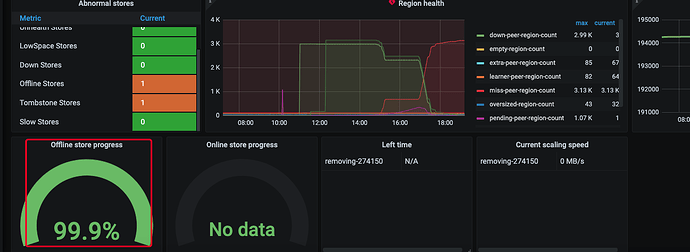

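The decommission progress is stuck at 99.99%.

Try rebuilding those regions with recreate-region.

The machine hosting one of the TiKV nodes was taken offline abnormally. Its pod cannot start, and tikv-ctl cannot connect to that node. The newly created pod keeps reporting: duplicated store address

The IP address conflicts. You have to delete the corresponding old store first.

I already ran pd-ctl store delete on the old store, but it stays in the Offline state and never finishes going offline.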

{
  "count": 3,
  "regions": [
    {
      "id": 162617,
      "start_key": "313939333432333538343735333430363435383A4D54544E657773436F6E74656E74",
      "end_key": "313939333632333030353936373134323033313A4D54544E657773536E6170",
      "epoch": { "conf_ver": 24, "version": 17 },
      "peers": [
        { "id": 162620, "store_id": 5, "role": 3, "role_name": "DemotingVoter" },
        { "id": 275614, "store_id": 274150, "role_name": "Voter" },
        { "id": 287072, "store_id": 274156, "role_name": "Voter" },
        { "id": 321305, "store_id": 274157, "role": 2, "role_name": "IncomingVoter" }
      ],
      "leader": { "id": 162620, "store_id": 5, "role": 3, "role_name": "DemotingVoter" },
      "down_peers": [
        { "down_seconds": 32596, "peer": { "id": 275614, "store_id": 274150, "role_name": "Voter" } }
      ],
      "written_bytes": 37703,
      "read_bytes": 330190,
      "written_keys": 18,
      "read_keys": 199,
      "approximate_size": 93,
      "approximate_keys": 0
    },
    {
      "id": 239166,
      "start_key": "32663062633333646638336639323631396266623039653133373739383863623A4D54545573657250726F66696C655633",
      "end_key": "32663062633333656638313363373365396238646436653133373739383863625F636F6D706C6574655F706C61793A4D545455736572416374696F6E3A766572",
      "epoch": { "conf_ver": 48, "version": 17 },
      "peers": [
        { "id": 239169, "store_id": 46, "role": 3, "role_name": "DemotingVoter" },
        { "id": 300223, "store_id": 274150, "role_name": "Voter" },
        { "id": 319711, "store_id": 274149, "role_name": "Voter" },
        { "id": 319794, "store_id": 274144, "role": 2, "role_name": "IncomingVoter" }
      ],
      "leader": { "id": 239169, "store_id": 46, "role": 3, "role_name": "DemotingVoter" },
      "down_peers": [
        { "down_seconds": 32583, "peer": { "id": 300223, "store_id": 274150, "role_name": "Voter" } }
      ],
      "pending_peers": [
        { "id": 300223, "store_id": 274150, "role_name": "Voter" }
      ],
      "written_bytes": 204126,
      "read_bytes": 361689,
      "written_keys": 114,
      "read_keys": 668,
      "approximate_size": 142,
      "approximate_keys": 0
    },
    {
      "id": 110081,
      "start_key": "393232303531363731313230313232313233303A4D54544E657773537461746963",
      "end_key": "393232303639323239323832383139313333383A4D54544E657773436F6E74656E74",
      "epoch": { "conf_ver": 58, "version": 21 },
      "peers": [
        { "id": 110082, "store_id": 33, "role": 3, "role_name": "DemotingVoter" },
        { "id": 110174, "store_id": 15, "role_name": "Voter" },
        { "id": 306531, "store_id": 274150, "role_name": "Voter" },
        { "id": 320541, "store_id": 274146, "role": 2, "role_name": "IncomingVoter" }
      ],
      "leader": { "id": 320541, "store_id": 274146, "role": 2, "role_name": "IncomingVoter" },
      "down_peers": [
        { "down_seconds": 32586, "peer": { "id": 306531, "store_id": 274150, "role_name": "Voter" } }
      ],
      "written_bytes": 57045,
      "read_bytes": 291690,
      "written_keys": 31,
      "read_keys": 194,
      "approximate_size": 50,
      "approximate_keys": 0
    }
  ]
}
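To make it easier to see which peers are holding these regions in joint state, here is a minimal Python sketch that scans a region dump like the one above and lists the peers whose role_name is IncomingVoter or DemotingVoter. The function name and the trimmed SAMPLE string are illustrative assumptions, not part of pd-ctl; only the field names and values are taken from the dump.

```python
import json

# Roles that only appear while a Raft joint-consensus membership change is in flight.
JOINT_ROLES = {"IncomingVoter", "DemotingVoter"}


def joint_state_regions(dump):
    """Return {region_id: [(store_id, role_name), ...]} for peers in a joint role."""
    result = {}
    for region in json.loads(dump).get("regions", []):
        joint = [
            (peer["store_id"], peer["role_name"])
            for peer in region.get("peers", [])
            if peer.get("role_name") in JOINT_ROLES
        ]
        if joint:
            result[region["id"]] = joint
    return result


# Trimmed copy of region 162617 from the dump (only the fields the sketch reads).
SAMPLE = """
{"count": 1, "regions": [{"id": 162617, "peers": [
  {"id": 162620, "store_id": 5, "role": 3, "role_name": "DemotingVoter"},
  {"id": 275614, "store_id": 274150, "role_name": "Voter"},
  {"id": 287072, "store_id": 274156, "role_name": "Voter"},
  {"id": 321305, "store_id": 274157, "role": 2, "role_name": "IncomingVoter"}
]}]}
"""

print(joint_state_regions(SAMPLE))
```

In practice the full JSON printed by region store 274150 could be passed in instead of SAMPLE.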

curl -X DELETE http://${HostIP}:2379/pd/api/v1/admin/cache/region/{region_id} clears the region information cached on the PD side. Try it on the remaining regions.
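For several regions, the same call can be prepared in a loop. The following is a rough Python sketch that only builds the DELETE requests for the endpoint quoted above; the helper name and the 127.0.0.1 host are placeholders I am assuming for illustration, and nothing is sent unless the commented-out line is enabled.

```python
import urllib.request


def build_cache_delete_requests(pd_host, region_ids, port=2379):
    """Build one DELETE request per region id for PD's region-cache endpoint."""
    return [
        urllib.request.Request(
            f"http://{pd_host}:{port}/pd/api/v1/admin/cache/region/{region_id}",
            method="DELETE",
        )
        for region_id in region_ids
    ]


# 127.0.0.1 is a placeholder; substitute the real PD address.
reqs = build_cache_delete_requests("127.0.0.1", [162617, 239166, 110081])
for req in reqs:
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req)  # uncomment to actually send the request
```

Keeping the send step separate makes it possible to review the exact URLs before touching a production PD.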

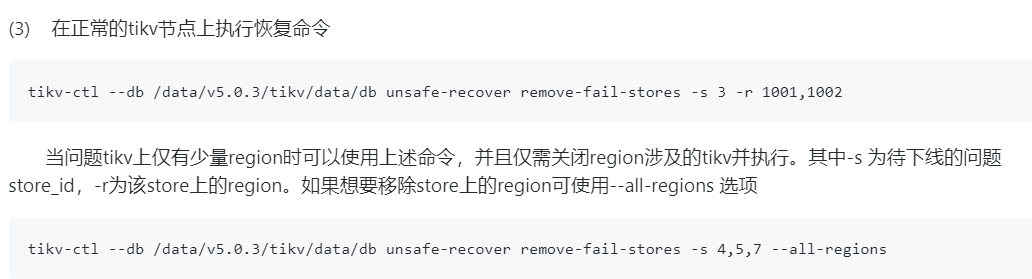

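Has the store finished going offline yet? If the delete does not succeed, you can fall back to the unsafe command.

These are all highly available clusters, so there should be no need to clean up regions, right?

The regions are healthy. They hold production data and have leaders on other peers. What needs to be cleaned up is the peer on the offline store, not the region, right?

The regions are no longer healthy. If the leader were healthy, remove-peer would have worked just as it did for the other peers. Clearing PD's region information does not delete the region; the region on the PD side is only a cache. Check whether the region leaders are healthy now.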

{
  "count": 3,
  "regions": [
    {
      "id": 162617,
      "start_key": "313939333432333538343735333430363435383A4D54544E657773436F6E74656E74",
      "end_key": "313939333632333030353936373134323033313A4D54544E657773536E6170",
      "epoch": { "conf_ver": 24, "version": 17 },
      "peers": [
        { "id": 162620, "store_id": 5, "role": 3, "role_name": "DemotingVoter" },
        { "id": 275614, "store_id": 274150, "role_name": "Voter" },
        { "id": 287072, "store_id": 274156, "role_name": "Voter" },
        { "id": 321305, "store_id": 274157, "role": 2, "role_name": "IncomingVoter" }
      ],
      "leader": { "id": 162620, "store_id": 5, "role": 3, "role_name": "DemotingVoter" },
      "down_peers": [
        { "down_seconds": 83409, "peer": { "id": 275614, "store_id": 274150, "role_name": "Voter" } }
      ],
      "written_bytes": 19028,
      "read_bytes": 340650,
      "written_keys": 12,
      "read_keys": 161,
      "approximate_size": 93,
      "approximate_keys": 0
    },
    {
      "id": 239166,
      "start_key": "32663062633333646638336639323631396266623039653133373739383863623A4D54545573657250726F66696C655633",
      "end_key": "32663062633333656638313363373365396238646436653133373739383863625F636F6D706C6574655F706C61793A4D545455736572416374696F6E3A766572",
      "epoch": { "conf_ver": 48, "version": 17 },
      "peers": [
        { "id": 239169, "store_id": 46, "role": 3, "role_name": "DemotingVoter" },
        { "id": 300223, "store_id": 274150, "role_name": "Voter" },
        { "id": 319711, "store_id": 274149, "role_name": "Voter" },
        { "id": 319794, "store_id": 274144, "role": 2, "role_name": "IncomingVoter" }
      ],
      "leader": { "id": 239169, "store_id": 46, "role": 3, "role_name": "DemotingVoter" },
      "down_peers": [
        { "down_seconds": 83396, "peer": { "id": 300223, "store_id": 274150, "role_name": "Voter" } }
      ],
      "pending_peers": [
        { "id": 300223, "store_id": 274150, "role_name": "Voter" }
      ],
      "written_bytes": 117365,
      "read_bytes": 52276,
      "written_keys": 43,
      "read_keys": 166,
      "approximate_size": 131,
      "approximate_keys": 160186
    },
    {
      "id": 110081,
      "start_key": "393232303531363731313230313232313233303A4D54544E657773537461746963",
      "end_key": "393232303639323239323832383139313333383A4D54544E657773436F6E74656E74",
      "epoch": { "conf_ver": 58, "version": 21 },
      "peers": [
        { "id": 110082, "store_id": 33, "role": 3, "role_name": "DemotingVoter" },
        { "id": 110174, "store_id": 15, "role_name": "Voter" },
        { "id": 306531, "store_id": 274150, "role_name": "Voter" },
        { "id": 320541, "store_id": 274146, "role": 2, "role_name": "IncomingVoter" }
      ],
      "leader": { "id": 320541, "store_id": 274146, "role": 2, "role_name": "IncomingVoter" },
      "down_peers": [
        { "down_seconds": 83398, "peer": { "id": 306531, "store_id": 274150, "role_name": "Voter" } }
      ],
      "written_bytes": 135,
      "read_bytes": 144720,
      "written_keys": 3,
      "read_keys": 79,
      "approximate_size": 54,
      "approximate_keys": 0
    }
  ]
}

After running curl -X DELETE on these three regions, each call returned The region is removed from server cache, but region store 274150 still returns the same three regions.

pd-ctl -u <pd_addr> unsafe remove-failed-stores 274150

pd-ctl -u <pd_addr> unsafe remove-failed-stores show

Afterwards, region store 274150 showed no real change.

[
  {
    "info": "Unsafe recovery enters collect report stage: failed stores 274150",
    "time": "2023-03-22 02:08:44.691"
  },
  {
    "info": "Unsafe recovery finished",
    "time": "2023-03-22 02:08:55.895"
  }
]
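If the show output needs to be checked from a script, a small Python sketch like the one below could read the history and report whether its last entry is the "Unsafe recovery finished" message seen above. The function name is hypothetical, and I am assuming the final entry always carries that text when the run completes; as this thread shows, a finished run does not by itself mean the stuck regions were repaired.

```python
import json


def recovery_finished(history_json):
    """Return True if the last history entry reports that unsafe recovery finished."""
    entries = json.loads(history_json)
    if not entries:
        return False
    return entries[-1].get("info", "").startswith("Unsafe recovery finished")


# History copied from the show output above.
HISTORY = """
[
  {"info": "Unsafe recovery enters collect report stage: failed stores 274150",
   "time": "2023-03-22 02:08:44.691"},
  {"info": "Unsafe recovery finished",
   "time": "2023-03-22 02:08:55.895"}
]
"""

print(recovery_finished(HISTORY))
```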

tikv-ctl recreate-region fails with:

[2023/03/22 02:26:45.596 +00:00] [WARN] [config.rs:648] ["compaction guard is disabled due to region info provider not available"]

[2023/03/22 02:26:45.596 +00:00] [WARN] [config.rs:756] ["compaction guard is disabled due to region info provider not available"]

[2023/03/22 02:26:45.611 +00:00] [ERROR] [executor.rs:1092] ["error while open kvdb: Storage Engine IO error: While lock file: /var/lib/tikv/db/LOCK: Resource temporarily unavailable"]

[2023/03/22 02:26:45.611 +00:00] [ERROR] [executor.rs:1095] ["LOCK file conflict indicates TiKV process is running. Do NOT delete the LOCK file and force the command to run. Doing so could cause data corruption."]

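Yes. These are Kubernetes pods, so I can't do that. The cluster is deployed with TiDB Operator.

Then the only option is to stop everything and run it afterwards.

That would make the production service unavailable, so I can't do that either. There is live read and write traffic and we can't take downtime. The impact of these regions is still relatively small.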

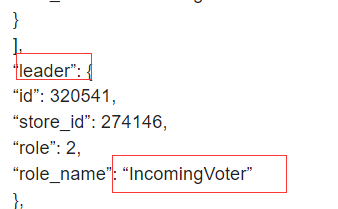

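Is there anything special about this cluster? Why are there IncomingVoter peers? I have never seen this kind of peer.

Is it a 3-replica cluster?

It's the intermediate state of a membership change: one voter is being demoted while another peer is being promoted to voter.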
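To illustrate that intermediate state, here is a hedged Python sketch of the vote counting used by Raft joint consensus, where a decision needs a majority in both the outgoing configuration (Voter plus DemotingVoter) and the incoming one (Voter plus IncomingVoter). The function name is my own, and the check covers vote counting only; it does not explain why PD refuses to build an operator for these regions.

```python
def has_joint_quorum(peers, down_store_ids):
    """peers: list of (store_id, role_name). True if both configs keep a majority."""
    down = set(down_store_ids)
    # Outgoing (old) config: unchanged voters plus the voter being demoted.
    old_cfg = {s for s, role in peers if role in ("Voter", "DemotingVoter")}
    # Incoming (new) config: unchanged voters plus the peer being promoted.
    new_cfg = {s for s, role in peers if role in ("Voter", "IncomingVoter")}

    def majority_alive(cfg):
        return len(cfg - down) > len(cfg) // 2

    return majority_alive(old_cfg) and majority_alive(new_cfg)


# Peers of region 162617 as reported earlier in the thread; store 274150 is down.
REGION_162617 = [
    (5, "DemotingVoter"),
    (274150, "Voter"),
    (274156, "Voter"),
    (274157, "IncomingVoter"),
]

print(has_joint_quorum(REGION_162617, {274150}))          # True
print(has_joint_quorum(REGION_162617, {274150, 274156}))  # False
```

Both configurations here contain three voters, which is consistent with a 3-replica setup caught in the middle of replacing one peer.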