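[TiDB environment] Test

[TiDB version] 6.5

[Reproduction path] Operations performed before the problem appeared

Deployed the cluster on a single machine.

The deploy step succeeded, but init (start with --init) failed.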

tiup cluster deploy cluster-test 6.5.0 ./cluster.yaml --user root -p

tiup is checking updates for component cluster …

Starting component cluster: /root/.tiup/components/cluster/v1.11.3/tiup-cluster deploy cluster-test 6.5.0 ./cluster.yaml --user root -p

Input SSH password:

- Detect CPU Arch Name

- Detecting node 10.0.2.15 Arch info … Done

- Detect CPU OS Name

- Detecting node 10.0.2.15 OS info … Done

Please confirm your topology:

Cluster type: tidb

Cluster name: cluster-test

Cluster version: v6.5.0

Role Host Ports OS/Arch Directories

pd 10.0.2.15 2379/2380 linux/x86_64 /tidb-deploy/pd-2379,/tidb-data/pd-2379

tikv 10.0.2.15 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160

tikv 10.0.2.15 20161/20181 linux/x86_64 /tidb-deploy/tikv-20161,/tidb-data/tikv-20161

tikv 10.0.2.15 20162/20182 linux/x86_64 /tidb-deploy/tikv-20162,/tidb-data/tikv-20162

tidb 10.0.2.15 4001/10080 linux/x86_64 /tidb-deploy/tidb-4001

tiflash 10.0.2.15 9000/8123/3930/20170/20292/8234 linux/x86_64 /tidb-deploy/tiflash-9000,/tidb-data/tiflash-9000

prometheus 10.0.2.15 9091/12020 linux/x86_64 /tidb-deploy/prometheus-9091,/tidb-data/prometheus-9091

grafana 10.0.2.15 3001 linux/x86_64 /tidb-deploy/grafana-3001

Attention:

1. If the topology is not what you expected, check your yaml file.

2. Please confirm there is no port/directory conflicts in same host.

Do you want to continue? [y/N]: (default=N) y

- Generate SSH keys … Done

- Download TiDB components

- Download pd:v6.5.0 (linux/amd64) … Done

- Download tikv:v6.5.0 (linux/amd64) … Done

- Download tidb:v6.5.0 (linux/amd64) … Done

- Download tiflash:v6.5.0 (linux/amd64) … Done

- Download prometheus:v6.5.0 (linux/amd64) … Done

- Download grafana:v6.5.0 (linux/amd64) … Done

- Download node_exporter: (linux/amd64) … Done

- Download blackbox_exporter: (linux/amd64) … Done

- Initialize target host environments

- Prepare 10.0.2.15:22 … Done

- Deploy TiDB instance

- Copy pd → 10.0.2.15 … Done

- Copy tikv → 10.0.2.15 … Done

- Copy tikv → 10.0.2.15 … Done

- Copy tikv → 10.0.2.15 … Done

- Copy tidb → 10.0.2.15 … Done

- Copy tiflash → 10.0.2.15 … Done

- Copy prometheus → 10.0.2.15 … Done

- Copy grafana → 10.0.2.15 … Done

- Deploy node_exporter → 10.0.2.15 … Done

- Deploy blackbox_exporter → 10.0.2.15 … Done

- Copy certificate to remote host

- Init instance configs

- Generate config pd → 10.0.2.15:2379 … Done

- Generate config tikv → 10.0.2.15:20160 … Done

- Generate config tikv → 10.0.2.15:20161 … Done

- Generate config tikv → 10.0.2.15:20162 … Done

- Generate config tidb → 10.0.2.15:4001 … Done

- Generate config tiflash → 10.0.2.15:9000 … Done

- Generate config prometheus → 10.0.2.15:9091 … Done

- Generate config grafana → 10.0.2.15:3001 … Done

- Init monitor configs

- Generate config node_exporter → 10.0.2.15 … Done

- Generate config blackbox_exporter → 10.0.2.15 … Done

Enabling component pd

Enabling instance 10.0.2.15:2379

Enable instance 10.0.2.15:2379 success

Enabling component tikv

Enabling instance 10.0.2.15:20162

Enabling instance 10.0.2.15:20160

Enabling instance 10.0.2.15:20161

Enable instance 10.0.2.15:20160 success

Enable instance 10.0.2.15:20162 success

Enable instance 10.0.2.15:20161 success

Enabling component tidb

Enabling instance 10.0.2.15:4001

Enable instance 10.0.2.15:4001 success

Enabling component tiflash

Enabling instance 10.0.2.15:9000

Enable instance 10.0.2.15:9000 success

Enabling component prometheus

Enabling instance 10.0.2.15:9091

Enable instance 10.0.2.15:9091 success

Enabling component grafana

Enabling instance 10.0.2.15:3001

Enable instance 10.0.2.15:3001 success

Enabling component node_exporter

Enabling instance 10.0.2.15

Enable 10.0.2.15 success

Enabling component blackbox_exporter

Enabling instance 10.0.2.15

Enable 10.0.2.15 success

Cluster `cluster-test` deployed successfully, you can start it with command: `tiup cluster start cluster-test --init`

[root@hanl config]# tiup cluster start cluster-test --init

tiup is checking updates for component cluster …

Starting component cluster: /root/.tiup/components/cluster/v1.11.3/tiup-cluster start cluster-test --init

Starting cluster cluster-test…

- [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/cluster-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/cluster-test/ssh/id_rsa.pub

- [Parallel] - UserSSH: user=tidb, host=10.0.2.15

- [Parallel] - UserSSH: user=tidb, host=10.0.2.15

- [Parallel] - UserSSH: user=tidb, host=10.0.2.15

- [Parallel] - UserSSH: user=tidb, host=10.0.2.15

- [Parallel] - UserSSH: user=tidb, host=10.0.2.15

- [Parallel] - UserSSH: user=tidb, host=10.0.2.15

- [Parallel] - UserSSH: user=tidb, host=10.0.2.15

- [Parallel] - UserSSH: user=tidb, host=10.0.2.15

- [ Serial ] - StartCluster

Starting component pd

Starting instance 10.0.2.15:2379

Start instance 10.0.2.15:2379 success

Starting component tikv

Starting instance 10.0.2.15:20162

Starting instance 10.0.2.15:20160

Starting instance 10.0.2.15:20161

Start instance 10.0.2.15:20161 success

Start instance 10.0.2.15:20160 success

Start instance 10.0.2.15:20162 success

Starting component tidb

Starting instance 10.0.2.15:4001

Error: failed to start tidb: failed to start: 10.0.2.15 tidb-4001.service, please check the instance’s log(/tidb-deploy/tidb-4001/log) for more detail.: timed out waiting for port 4001 to be started after 2m0s

Verbose debug logs has been written to /root/.tiup/logs/tiup-cluster-debug-2023-03-16-21-09-22.log.
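The error above says that port 4001 was not accepting connections within 2 minutes. To check independently whether tidb-server ever opens the port (for example while watching the log), a small poll along the following lines may help. This is only a sketch: the function name `wait_for_port` is made up for illustration, and it is not how tiup itself implements its check.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 120.0, interval: float = 1.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means some process is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: the port is not open yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

With the host and port from this post, the call would be `wait_for_port("10.0.2.15", 4001)`. A `False` result only confirms the symptom; the reason still has to come from the tidb log.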

[Problem encountered: symptoms and impact]

Error log:

tidb log:

[2023/03/16 21:15:48.072 +08:00] [INFO] [session.go:3723] ["CRUCIAL OPERATION"] [conn=0] [schemaVersion=19] [cur_db=mysql] [sql="CREATE TABLE IF NOT EXISTS mysql.bind_info (\n\t\toriginal_sql TEXT NOT NULL,\n\t\tbind_sql TEXT NOT NULL,\n\t\tdefault_db TEXT NOT NULL,\n\t\tstatus TEXT NOT NULL,\n\t\tcreate_time TIMESTAMP(3) NOT NULL,\n\t\tupdate_time TIMESTAMP(3) NOT NULL,\n\t\tcharset TEXT NOT NULL,\n\t\tcollation TEXT NOT NULL,\n\t\tsource VARCHAR(10) NOT NULL DEFAULT 'unknown',\n\t\tsql_digest varchar(64),\n\t\tplan_digest varchar(64),\n\t\tINDEX sql_index(original_sql(700),default_db(68)) COMMENT \"accelerate the speed when add global binding query\",\n\t\tINDEX time_index(update_time) COMMENT \"accelerate the speed when querying with last update time\"\n\t) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin;"] [user=]

[2023/03/16 21:15:52.909 +08:00] [INFO] [owner_daemon.go:56] ["daemon became owner"] [id=e94560d8-6b62-4796-a4db-955ca85bba2c] [daemon-id=LogBackup::Advancer]

[2023/03/16 21:17:03.073 +08:00] [INFO] [region_request.go:794] ["mark store's regions need be refill"] [id=1] [addr=10.0.2.15:20161] [error="context deadline exceeded"]

[2023/03/16 21:17:03.073 +08:00] [WARN] [session.go:998] ["can not retry txn"] [label=internal] [error="context deadline exceeded"] [IsBatchInsert=false] [IsPessimistic=false] [InRestrictedSQL=true] [tidb_retry_limit=10] [tidb_disable_txn_auto_retry=true]

[2023/03/16 21:17:03.073 +08:00] [WARN] [session.go:1014] ["commit failed"] ["finished txn"="Txn{state=invalid}"] [error="context deadline exceeded"]

[2023/03/16 21:17:03.074 +08:00] [WARN] [session.go:2218] ["run statement failed"] [schemaVersion=19] [error="context deadline exceeded"] [session="{\n \"currDBName\": \"mysql\",\n \"id\": 0,\n \"status\": 2,\n \"strictMode\": true,\n \"user\": null\n}"]

[2023/03/16 21:17:03.074 +08:00] [FATAL] [bootstrap.go:2429] ["mustExecute error"] [error="context deadline exceeded"] [stack="github.com/pingcap/tidb/session.mustExecute\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/bootstrap.go:2429\ngithub.com/pingcap/tidb/session.insertBuiltinBindInfoRow\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/bootstrap.go:1628\ngithub.com/pingcap/tidb/session.initBindInfoTable\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/bootstrap.go:1624\ngithub.com/pingcap/tidb/session.doDDLWorks\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/bootstrap.go:2271\ngithub.com/pingcap/tidb/session.bootstrap\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/bootstrap.go:526\ngithub.com/pingcap/tidb/session.runInBootstrapSession\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/session.go:3412\ngithub.com/pingcap/tidb/session.BootstrapSession\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/session.go:3262\nmain.createStoreAndDomain\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:314\nmain.main\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/tidb-server/main.go:214\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:250"]
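Because the interesting entries are buried among INFO lines, it can help to filter the log down to WARN, ERROR and FATAL entries. The sketch below assumes every entry starts with `[time] [LEVEL] [file:line] [message]`, as the lines above do. The names `LogEntry`, `parse_line` and `problems` are made up for this example, and the parser ignores the trailing key=value fields.

```python
import re
from typing import Iterable, Iterator, NamedTuple, Optional

# Leading "[time] [LEVEL] [file:line] [message]" part of a log line.
# The message is either a double-quoted string or any text without "]".
LOG_HEAD = re.compile(
    r'^\[(?P<time>[^\]]+)\] \[(?P<level>[A-Z]+)\] \[(?P<source>[^\]]+)\] '
    r'\[(?P<message>"(?:[^"\\]|\\.)*"|[^\]]*)\]'
)


class LogEntry(NamedTuple):
    time: str
    level: str
    source: str
    message: str


def parse_line(line: str) -> Optional[LogEntry]:
    """Parse one log line; return None if it does not look like a log entry."""
    m = LOG_HEAD.match(line.strip())
    if m is None:
        return None
    # Strip straight quotes, and typographic quotes that a forum may have substituted.
    message = m["message"].strip('"\u201c\u201d')
    return LogEntry(m["time"], m["level"], m["source"], message)


def problems(lines: Iterable[str], levels=("WARN", "ERROR", "FATAL")) -> Iterator[LogEntry]:
    """Yield only the entries whose level is in `levels`."""
    for line in lines:
        entry = parse_line(line)
        if entry is not None and entry.level in levels:
            yield entry
```

A possible use is `for e in problems(open(path)): print(e.time, e.level, e.source, e.message)`, where `path` points at the tidb log file under `/tidb-deploy/tidb-4001/log` (the file name `tidb.log` is an assumption based on the default).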

[Resource configuration]

cluster configuration:

# Global variables are applied to all deployments and used as the default value of

# the deployments if a specific deployment value is missing.

global:

user: “tidb”

ssh_port: 22

deploy_dir: “/tidb-deploy”

data_dir: “/tidb-data”

# Monitored variables are applied to all the machines.

monitored:

node_exporter_port: 9100

blackbox_exporter_port: 9115

server_configs:

tidb:

log.slow-threshold: 300

tikv:

readpool.storage.use-unified-pool: true

readpool.unified.max-thread-count: 1

readpool.coprocessor.use-unified-pool: true

storage.block-cache.capacity: 0.5G

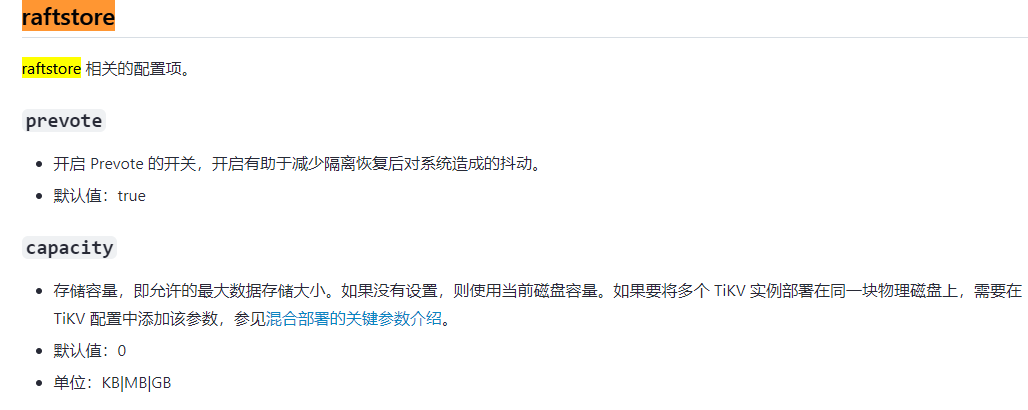

raftstore.capacity: 4G

pd:

replication.enable-placement-rules: true

replication.location-labels: [“host”]

tiflash:

logger.level: “info”

pd_servers:

- host: 10.0.2.15

tidb_servers:

-

host: 10.0.2.15

port: 4001

tikv_servers: -

host: 10.0.2.15

port: 20160

status_port: 20180

config:

server.labels: { host: “logic-host-1” } -

host: 10.0.2.15

port: 20161

status_port: 20181

config:

server.labels: { host: “logic-host-2” } -

host: 10.0.2.15

port: 20162

status_port: 20182

config:

server.labels: { host: “logic-host-3” }

tiflash_servers:

- host: 10.0.2.15

monitoring_servers:

- host: 10.0.2.15

port: 9091

grafana_servers:

- host: 10.0.2.15

port: 3001# Grafana deployment file, startup script, configuration file storage directory.

deploy_dir: /tidb-deploy/grafana-3001
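Since every instance in this configuration runs on the same host, one thing that can be ruled out quickly is a port clash between instances, which is what the tiup attention note asks to confirm. The sketch below hard-codes the ports from the topology that tiup printed for this cluster, plus the two exporter ports from the `monitored` section. The helper name `find_conflicts` is made up for illustration, and the check says nothing about ports already taken by unrelated processes on the machine.

```python
# Ports per instance, copied from the topology printed during deploy,
# plus node_exporter_port and blackbox_exporter_port from the config.
PORTS = {
    "pd": [2379, 2380],
    "tikv-20160": [20160, 20180],
    "tikv-20161": [20161, 20181],
    "tikv-20162": [20162, 20182],
    "tidb": [4001, 10080],
    "tiflash": [9000, 8123, 3930, 20170, 20292, 8234],
    "prometheus": [9091, 12020],
    "grafana": [3001],
    "node_exporter": [9100],
    "blackbox_exporter": [9115],
}


def find_conflicts(ports_by_instance):
    """Return {port: [instances]} for every port claimed by more than one instance."""
    owners = {}
    for instance, ports in ports_by_instance.items():
        for port in ports:
            owners.setdefault(port, []).append(instance)
    return {port: names for port, names in owners.items() if len(names) > 1}


print(find_conflicts(PORTS))  # prints {} because no port appears twice
```

An empty result only excludes a clash inside the topology itself; it does not explain the `context deadline exceeded` errors in the tidb log.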

[Attachments: screenshots/logs/monitoring]