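【TiDB environment】Production / Test / PoC

【TiDB version】v6.6.0

【Reproduction steps】What operations were performed before the problem appeared

【Problem encountered: symptoms and impact】

【Resource configuration】

【Attachments: screenshots / logs / monitoring】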

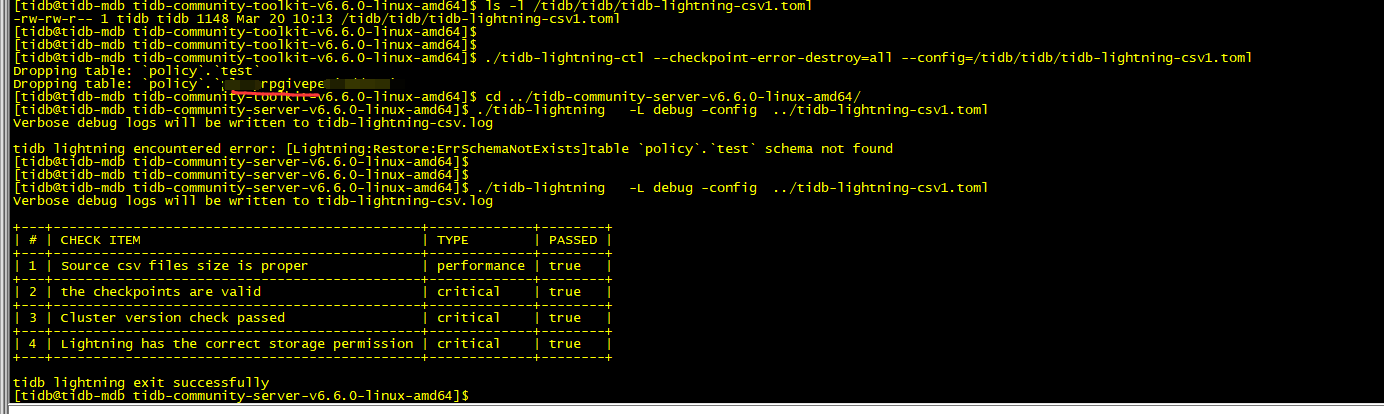

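I have tried several times, and the run always fails check item 2. Could someone share how this is supposed to be handled?

Detailed information follows.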


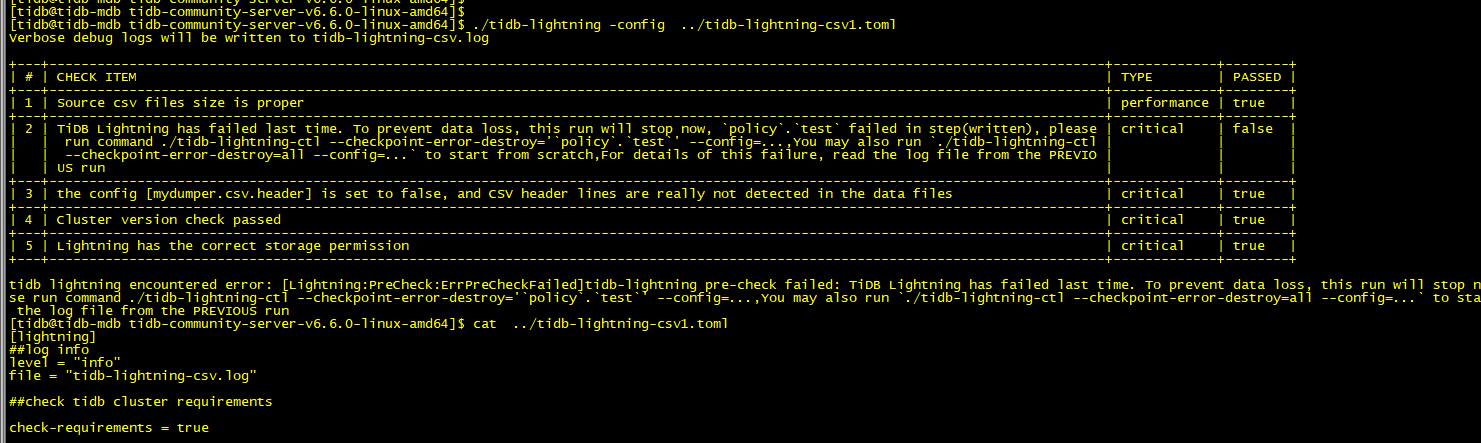

```
[tidb@tidb-mdb tidb-community-server-v6.6.0-linux-amd64]$ ./tidb-lightning -config …/tidb-lightning-csv1.toml
Verbose debug logs will be written to tidb-lightning-csv.log
```

| # | CHECK ITEM | TYPE | PASSED |
|---|------------|------|--------|
| 1 | Source csv files size is proper | performance | true |
| 2 | TiDB Lightning has failed last time. To prevent data loss, this run will stop now, policy.test failed in step(written), please run command ./tidb-lightning-ctl --checkpoint-error-destroy='policy.test' --config=…,You may also run ./tidb-lightning-ctl --checkpoint-error-destroy=all --config=... to start from scratch,For details of this failure, read the log file from the PREVIOUS run | critical | false |
| 3 | the config [mydumper.csv.header] is set to false, and CSV header lines are really not detected in the data files | critical | true |
| 4 | Cluster version check passed | critical | true |
| 5 | Lightning has the correct storage permission | critical | true |

```
tidb lightning encountered error: [Lightning:PreCheck:ErrPreCheckFailed]tidb-lightning pre-check failed: TiDB Lightning has failed last time. To prevent data loss, this run will stop now, policy.test failed in step(written), please run command ./tidb-lightning-ctl --checkpoint-error-destroy='policy.test' --config=…,You may also run ./tidb-lightning-ctl --checkpoint-error-destroy=all --config=... to start from scratch,For details of this failure, read the log file from the PREVIOUS run
```

```
[tidb@tidb-mdb tidb-community-server-v6.6.0-linux-amd64]$ cat …/tidb-lightning-csv1.toml
[lightning]
##log info
level = "info"
file = "tidb-lightning-csv.log"
##check tidb cluster requirements
check-requirements = true
##import mode, such as physical import mode and logical import mode.
# physical import mode : local
# logical import mode : tidb

[tikv-importer]
#backend = "local"
backend = "tidb"
# temporary directory for import key-value data, must be an empty directory.
sorted-kv-dir = "/data/sorted-kv-dir"
#logical import mode operation
# -replace
# -ignore
# -error
on-duplicate = "replace"

###source data directory
#[mydumper]
# csv file
###source data directory
[mydumper]
data-source-dir = "/data/my_database/csv/"

[mydumper.csv]
separator = "\t"
delimiter = ''
header = false
not-null = false
null = 'NULL'
backslash-escape = false
##ignore the default databases.
#filter = ['*.*', '!mysql.*', '!sys.*', '!INFORMATION_SCHEMA.*', '!PERFORMANCE_SCHEMA.*', '!METRICS_SCHEMA.*', '!INSPECTION_SCHEMA.*']

##target cluster info
[tidb]
host = "192.168.2.151"
port = 4000
user = "root"
password = "root"
status-port = 10080
pd-addr = "192.168.2.151:2379"
log-level = "error"
```

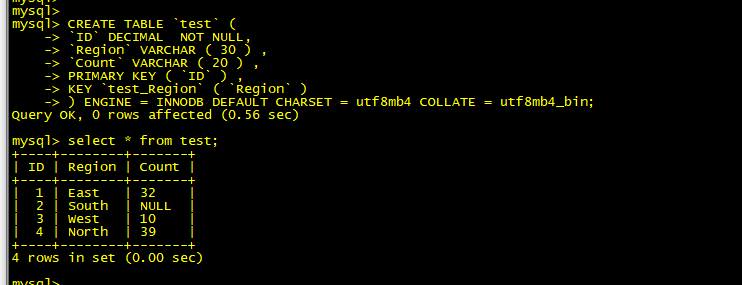

```
[tidb@tidb-mdb tidb-community-server-v6.6.0-linux-amd64]$ cat /data/my_database/csv/policy.test.CSV
1,"East",32
2,"South",\N
3,"West",10
4,"North",39
[tidb@tidb-mdb tidb-community-server-v6.6.0-linux-amd64]$
```
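Separately from the check-item-2 failure, note that the config sets `separator = "\t"` while `policy.test.CSV` above is comma-separated. The sketch below counts how many fields each sample row splits into under either separator. It assumes straight quotes in the real file, and it uses Python's `csv` module only as a rough stand-in for Lightning's own CSV parser; the `field_counts` helper is hypothetical.

```python
import csv
import io

# Sample rows copied from policy.test.CSV in this post
# (assumption: straight quotes in the real file; the forum showed curly ones).
SAMPLE = '1,"East",32\n2,"South",\\N\n3,"West",10\n4,"North",39\n'


def field_counts(text: str, separator: str) -> list[int]:
    """Return the number of fields per row when splitting on `separator`.

    Python's csv module only approximates Lightning's parser here.
    """
    reader = csv.reader(io.StringIO(text), delimiter=separator, quotechar='"')
    return [len(row) for row in reader]


if __name__ == "__main__":
    print("separator '\\t':", field_counts(SAMPLE, "\t"))
    print("separator ',' :", field_counts(SAMPLE, ","))
```

With `\t`, each of the four rows comes back as a single field; with `,`, every row has three fields.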