【TiDB Environment】Production / Test / PoC

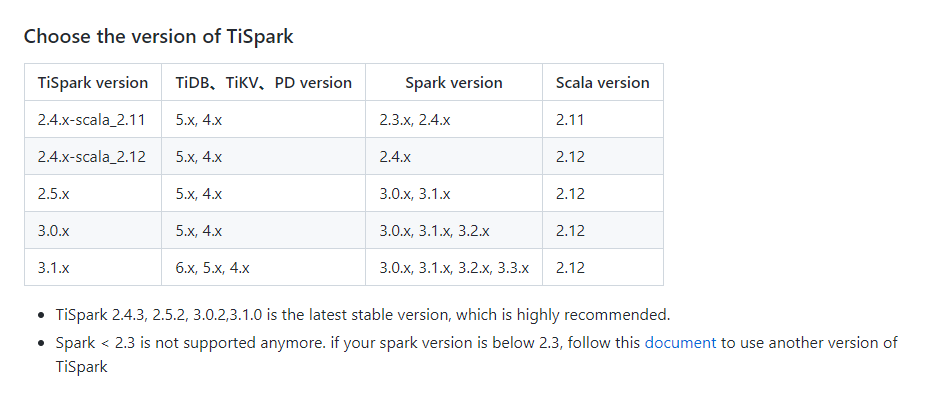

【TiDB Version】6.6

【Reproduction Steps】Operations performed before the problem appeared

tispark

Start spark-shell: `spark-shell --jars tispark-assembly-{version}.jar`

Run the following command in spark-shell:

`spark.sql("select ti_version()").collect`

【Problem Encountered: Symptoms and Impact】

【Resource Configuration】

【Attachments: Screenshots / Logs / Monitoring】

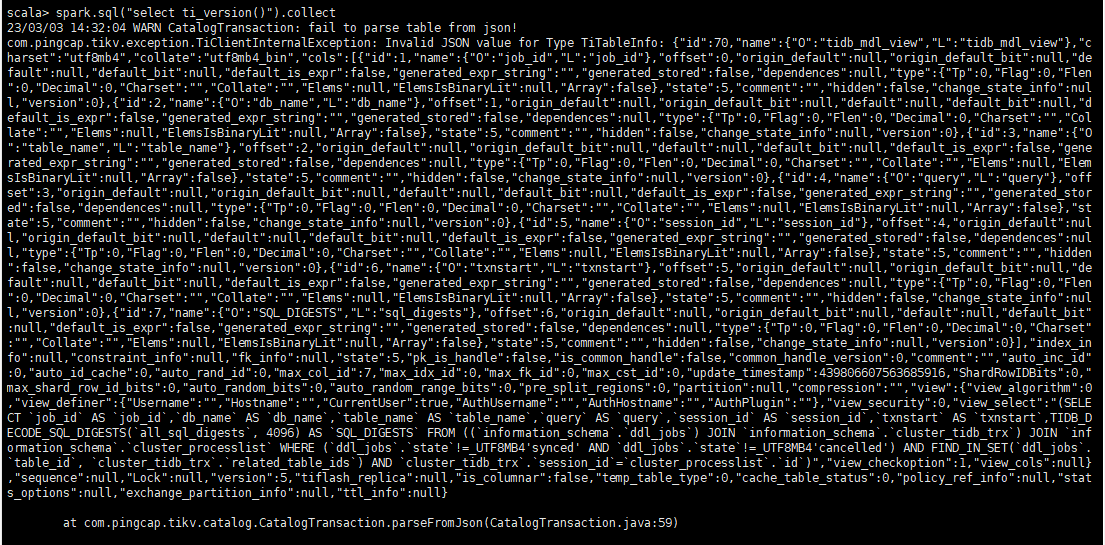

scala> spark.sql("select ti_version()").collect

23/03/03 14:32:04 WARN CatalogTransaction: fail to parse table from json!

com.pingcap.tikv.exception.TiClientInternalException: Invalid JSON value for Type TiTableInfo: {"id":70,"name":{"O":"tidb_mdl_view","L":"tidb_mdl_view"},"charset":"utf8mb4","collate":"utf8mb4_bin","cols":[{"id":1,"name":{"O":"job_id","L":"job_id"},"offset":0,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":2,"name":{"O":"db_name","L":"db_name"},"offset":1,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":3,"name":{"O":"table_name","L":"table_name"},"offset":2,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":4,"name":{"O":"query","L":"query"},"offset":3,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":5,"name":{"O":"session_id","L":"session_id"},"offset":4,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":6,"name":{"O":"txnstart","L":"txnstart"},"offset":5,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":7,"name":{"O":"SQL_DIGESTS","L":"sql_digests"},"offset":6,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0}],"index_info":null,"constraint_info":null,"fk_info":null,"state":5,"pk_is_handle":false,"is_common_handle":false,"common_handle_version":0,"comment":"","auto_inc_id":0,"auto_id_cache":0,"auto_rand_id":0,"max_col_id":7,"max_idx_id":0,"max_fk_id":0,"max_cst_id":0,"update_timestamp":439806607563685916,"ShardRowIDBits":0,"max_shard_row_id_bits":0,"auto_random_bits":0,"auto_random_range_bits":0,"pre_split_regions":0,"partition":null,"compression":"","view":{"view_algorithm":0,"view_definer":{"Username":"","Hostname":"","CurrentUser":true,"AuthUsername":"","AuthHostname":"","AuthPlugin":""},"view_security":0,"view_select":"(SELECT job_id AS job_id,db_name AS db_name,table_name AS table_name,query AS query,session_id AS session_id,txnstart AS txnstart,TIDB_DECODE_SQL_DIGESTS(all_sql_digests, 4096) AS SQL_DIGESTS FROM ((information_schema.ddl_jobs) JOIN information_schema.cluster_tidb_trx) JOIN information_schema.cluster_processlist WHERE (ddl_jobs.state!=_UTF8MB4'synced' AND ddl_jobs.state!=_UTF8MB4'cancelled') AND FIND_IN_SET(ddl_jobs.table_id, cluster_tidb_trx.related_table_ids) AND cluster_tidb_trx.session_id=cluster_processlist.id)","view_checkoption":1,"view_cols":null},"sequence":null,"Lock":null,"version":5,"tiflash_replica":null,"is_columnar":false,"temp_table_type":0,"cache_table_status":0,"policy_ref_info":null,"stats_options":null,"exchange_partition_info":null,"ttl_info":null}

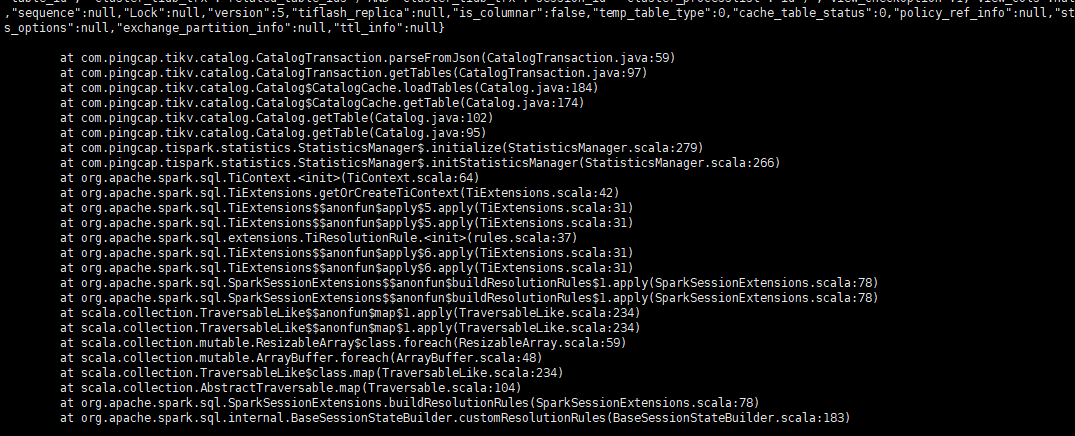

at com.pingcap.tikv.catalog.CatalogTransaction.parseFromJson(CatalogTransaction.java:59)

at com.pingcap.tikv.catalog.CatalogTransaction.getTables(CatalogTransaction.java:97)

at com.pingcap.tikv.catalog.Catalog$CatalogCache.loadTables(Catalog.java:184)

at com.pingcap.tikv.catalog.Catalog$CatalogCache.getTable(Catalog.java:174)

at com.pingcap.tikv.catalog.Catalog.getTable(Catalog.java:102)

at com.pingcap.tikv.catalog.Catalog.getTable(Catalog.java:95)

at com.pingcap.tispark.statistics.StatisticsManager$.initialize(StatisticsManager.scala:279)

at com.pingcap.tispark.statistics.StatisticsManager$.initStatisticsManager(StatisticsManager.scala:266)

at org.apache.spark.sql.TiContext.<init>(TiContext.scala:64)

at org.apache.spark.sql.TiExtensions.getOrCreateTiContext(TiExtensions.scala:42)

at org.apache.spark.sql.TiExtensions$$anonfun$apply$5.apply(TiExtensions.scala:31)

at org.apache.spark.sql.TiExtensions$$anonfun$apply$5.apply(TiExtensions.scala:31)

at org.apache.spark.sql.extensions.TiResolutionRule.<init>(rules.scala:37)

at org.apache.spark.sql.TiExtensions$$anonfun$apply$6.apply(TiExtensions.scala:31)

at org.apache.spark.sql.TiExtensions$$anonfun$apply$6.apply(TiExtensions.scala:31)

at org.apache.spark.sql.SparkSessionExtensions$$anonfun$buildResolutionRules$1.apply(SparkSessionExtensions.scala:78)

at org.apache.spark.sql.SparkSessionExtensions$$anonfun$buildResolutionRules$1.apply(SparkSessionExtensions.scala:78)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)

at scala.collection.AbstractTraversable.map(Traversable.scala:104)

at org.apache.spark.sql.SparkSessionExtensions.buildResolutionRules(SparkSessionExtensions.scala:78)

at org.apache.spark.sql.internal.BaseSessionStateBuilder.customResolutionRules(BaseSessionStateBuilder.scala:183)

at org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1.<init>(HiveSessionStateBuilder.scala:74)

at org.apache.spark.sql.hive.HiveSessionStateBuilder.analyzer(HiveSessionStateBuilder.scala:69)

at org.apache.spark.sql.internal.BaseSessionStateBuilder$$anonfun$build$2.apply(BaseSessionStateBuilder.scala:293)

at org.apache.spark.sql.internal.BaseSessionStateBuilder$$anonfun$build$2.apply(BaseSessionStateBuilder.scala:293)

at org.apache.spark.sql.internal.SessionState.analyzer$lzycompute(SessionState.scala:79)

at org.apache.spark.sql.internal.SessionState.analyzer(SessionState.scala:79)

at org.apache.spark.sql.execution.QueryExecution.analyzed$lzycompute(QueryExecution.scala:57)

at org.apache.spark.sql.execution.QueryExecution.analyzed(QueryExecution.scala:55)

at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:47)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:78)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:642)

at $line14.$read$$iw$$iw$$iw$$iw$$iw$$iw$$iw$$iw.<init>(<console>:24)

at $line14.$read$$iw$$iw$$iw$$iw$$iw$$iw$$iw.<init>(<console>:29)

at $line14.$read$$iw$$iw$$iw$$iw$$iw$$iw.<init>(<console>:31)

at $line14.$read$$iw$$iw$$iw$$iw$$iw.<init>(<console>:33)

at $line14.$read$$iw$$iw$$iw$$iw.<init>(<console>:35)

at $line14.$read$$iw$$iw$$iw.<init>(<console>:37)

at $line14.$read$$iw$$iw.<init>(<console>:39)

at $line14.$read$$iw.<init>(<console>:41)

at $line14.$read.<init>(<console>:43)

at $line14.$read$.<init>(<console>:47)

at $line14.$read$.<clinit>(<console>)

at $line14.$eval$.$print$lzycompute(<console>:7)

at $line14.$eval$.$print(<console>:6)

at $line14.$eval.$print(<console>)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at scala.tools.nsc.interpreter.IMain$ReadEvalPrint.call(IMain.scala:793)

at scala.tools.nsc.interpreter.IMain$Request.loadAndRun(IMain.scala:1054)

at scala.tools.nsc.interpreter.IMain$WrappedRequest$$anonfun$loadAndRunReq$1.apply(IMain.scala:645)

at scala.tools.nsc.interpreter.IMain$WrappedRequest$$anonfun$loadAndRunReq$1.apply(IMain.scala:644)

at scala.reflect.internal.util.ScalaClassLoader$class.asContext(ScalaClassLoader.scala:31)

at scala.reflect.internal.util.AbstractFileClassLoader.asContext(AbstractFileClassLoader.scala:19)

at scala.tools.nsc.interpreter.IMain$WrappedRequest.loadAndRunReq(IMain.scala:644)

at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:576)

at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:572)

at scala.tools.nsc.interpreter.ILoop.interpretStartingWith(ILoop.scala:819)

at scala.tools.nsc.interpreter.ILoop.command(ILoop.scala:691)

at scala.tools.nsc.interpreter.ILoop.processLine(ILoop.scala:404)

at scala.tools.nsc.interpreter.ILoop.loop(ILoop.scala:425)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1.apply$mcZ$sp(SparkILoop.scala:285)

at org.apache.spark.repl.SparkILoop.runClosure(SparkILoop.scala:159)

at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:182)

at org.apache.spark.repl.Main$.doMain(Main.scala:78)

at org.apache.spark.repl.Main$.main(Main.scala:58)

at org.apache.spark.repl.Main.main(Main.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:849)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: com.fasterxml.jackson.databind.exc.UnrecognizedPropertyException: Unrecognized field "AuthPlugin" (class com.pingcap.tikv.meta.TiUserIdentity), not marked as ignorable (5 known properties: "Hostname", "CurrentUser", "Username", "AuthHostname", "AuthUsername"])

at [Source: {"id":70,"name":{"O":"tidb_mdl_view","L":"tidb_mdl_view"},"charset":"utf8mb4","collate":"utf8mb4_bin","cols":[{"id":1,"name":{"O":"job_id","L":"job_id"},"offset":0,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":2,"name":{"O":"db_name","L":"db_name"},"offset":1,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":3,"name":{"O":"table_name","L":"table_name"},"offset":2,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":4,"name":{"O":"query","L":"query"},"offset":3,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":5,"name":{"O":"session_id","L":"session_id"},"offset":4,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":6,"name":{"O":"txnstart","L":"txnstart"},"offset":5,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0},{"id":7,"name":{"O":"SQL_DIGESTS","L":"sql_digests"},"offset":6,"origin_default":null,"origin_default_bit":null,"default":null,"default_bit":null,"default_is_expr":false,"generated_expr_string":"","generated_stored":false,"dependences":null,"type":{"Tp":0,"Flag":0,"Flen":0,"Decimal":0,"Charset":"","Collate":"","Elems":null,"ElemsIsBinaryLit":null,"Array":false},"state":5,"comment":"","hidden":false,"change_state_info":null,"version":0}],"index_info":null,"constraint_info":null,"fk_info":null,"state":5,"pk_is_handle":false,"is_common_handle":false,"common_handle_version":0,"comment":"","auto_inc_id":0,"auto_id_cache":0,"auto_rand_id":0,"max_col_id":7,"max_idx_id":0,"max_fk_id":0,"max_cst_id":0,"update_timestamp":439806607563685916,"ShardRowIDBits":0,"max_shard_row_id_bits":0,"auto_random_bits":0,"auto_random_range_bits":0,"pre_split_regions":0,"partition":null,"compression":"","view":{"view_algorithm":0,"view_definer":{"Username":"","Hostname":"","CurrentUser":true,"AuthUsername":"","AuthHostname":"","AuthPlugin":""},"view_security":0,"view_select":"(SELECT job_id AS job_id,db_name AS db_name,table_name AS table_name,query AS query,session_id AS session_id,txnstart AS txnstart,TIDB_DECODE_SQL_DIGESTS(all_sql_digests, 4096) AS SQL_DIGESTS FROM ((information_schema.ddl_jobs) JOIN information_schema.cluster_tidb_trx) JOIN information_schema.cluster_processlist WHERE (ddl_jobs.state!=_UTF8MB4'synced' AND ddl_jobs.state!=_UTF8MB4'cancelled') AND FIND_IN_SET(ddl_jobs.table_id, cluster_tidb_trx.related_table_ids) AND cluster_tidb_trx.session_id=cluster_processlist.id)","view_checkoption":1,"view_cols":null},"sequence":null,"Lock":null,"version":5,"tiflash_replica":null,"is_columnar":false,"temp_table_type":0,"cache_table_status":0,"policy_ref_info":null,"stats_options":null,"exchange_partition_info":null,"ttl_info":null}; line: 1, column: 3740] (through reference chain: com.pingcap.tikv.meta.TiTableInfo["view"]->com.pingcap.tikv.meta.TiViewInfo["view_definer"]->com.pingcap.tikv.meta.TiUserIdentity["AuthPlugin"])

at com.fasterxml.jackson.databind.exc.UnrecognizedPropertyException.from(UnrecognizedPropertyException.java:52)

at com.fasterxml.jackson.databind.DeserializationContext.reportUnknownProperty(DeserializationContext.java:839)

at com.fasterxml.jackson.databind.deser.std.StdDeserializer.handleUnknownProperty(StdDeserializer.java:1045)

at com.fasterxml.jackson.databind.deser.BeanDeserializerBase.handleUnknownProperty(BeanDeserializerBase.java:1352)

at com.fasterxml.jackson.databind.deser.BeanDeserializerBase.handleUnknownVanilla(BeanDeserializerBase.java:1330)

at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserialize(BeanDeserializer.java:228)

at com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeUsingPropertyBased(BeanDeserializer.java:402)

at com.fasterxml.jackson.databind.deser.BeanDeserializerBase.deserializeFromObjectUsingNonDefault(BeanDeserializerBase.java:1099)

at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserializeFromObject(BeanDeserializer.java:296)

at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserialize(BeanDeserializer.java:133)

at com.fasterxml.jackson.databind.deser.SettableBeanProperty.deserialize(SettableBeanProperty.java:520)

at com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeWithErrorWrapping(BeanDeserializer.java:463)

at com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeUsingPropertyBased(BeanDeserializer.java:379)

at com.fasterxml.jackson.databind.deser.BeanDeserializerBase.deserializeFromObjectUsingNonDefault(BeanDeserializerBase.java:1099)

at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserializeFromObject(BeanDeserializer.java:296)

at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserialize(BeanDeserializer.java:133)

at com.fasterxml.jackson.databind.deser.SettableBeanProperty.deserialize(SettableBeanProperty.java:520)

at com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeWithErrorWrapping(BeanDeserializer.java:463)

at com.fasterxml.jackson.databind.deser.BeanDeserializer._deserializeUsingPropertyBased(BeanDeserializer.java:379)

at com.fasterxml.jackson.databind.deser.BeanDeserializerBase.deserializeFromObjectUsingNonDefault(BeanDeserializerBase.java:1099)

at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserializeFromObject(BeanDeserializer.java:296)

at com.fasterxml.jackson.databind.deser.BeanDeserializer.deserialize(BeanDeserializer.java:133)

at com.fasterxml.jackson.databind.ObjectMapper._readMapAndClose(ObjectMapper.java:3736)

at com.fasterxml.jackson.databind.ObjectMapper.readValue(ObjectMapper.java:2726)

at com.pingcap.tikv.catalog.CatalogTransaction.parseFromJson(CatalogTransaction.java:54)

… 84 more
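The `Caused by` line names the actual mismatch, and it can be checked against the JSON in the log. The `view_definer` object of the `tidb_mdl_view` system view has six keys, but `com.pingcap.tikv.meta.TiUserIdentity` in this TiSpark build knows only the five listed in the message.

The sketch below is plain Scala with no TiSpark or Jackson dependency. `DefinerFieldCheck` and its helpers are hypothetical names. It extracts the keys with a simple regex, which works only for a flat object like this one and is not a JSON parser. It then contrasts two decoding modes:

- **Strict** rejects unknown keys, the way Jackson does by default (`DeserializationFeature.FAIL_ON_UNKNOWN_PROPERTIES` enabled).
- **Lenient** drops unknown keys, roughly what `@JsonIgnoreProperties(ignoreUnknown = true)` does on a Jackson-mapped class.

It illustrates the failure mode only and is not TiSpark's code.

```scala
object DefinerFieldCheck {
  // The view_definer object copied from the log above (quotes straightened).
  val viewDefinerJson: String =
    """{"Username":"","Hostname":"","CurrentUser":true,"AuthUsername":"","AuthHostname":"","AuthPlugin":""}"""

  // The "5 known properties" listed in the UnrecognizedPropertyException message.
  val knownProperties: Set[String] =
    Set("Hostname", "CurrentUser", "Username", "AuthHostname", "AuthUsername")

  // Collects every quoted name that is directly followed by ':'.
  // Only valid for a flat object like the one above; not a general JSON parser.
  def topLevelKeys(flatJson: String): Seq[String] =
    "\"([^\"]+)\"\\s*:".r.findAllMatchIn(flatJson).map(_.group(1)).toList

  // Keys present in the JSON that the target class does not declare.
  def unknownFields(json: String, known: Set[String]): Seq[String] =
    topLevelKeys(json).filterNot(known.contains)

  // strict = true: fail on any unknown key (like Jackson's default behaviour).
  // strict = false: silently keep only the known keys.
  def decode(json: String, known: Set[String], strict: Boolean): Seq[String] = {
    val unknown = unknownFields(json, known)
    if (strict && unknown.nonEmpty)
      throw new IllegalArgumentException(s"Unrecognized field(s): ${unknown.mkString(", ")}")
    topLevelKeys(json).filter(known.contains)
  }

  def main(args: Array[String]): Unit = {
    println(unknownFields(viewDefinerJson, knownProperties))
    println(decode(viewDefinerJson, knownProperties, strict = false))
  }
}
```

Running `main` prints `List(AuthPlugin)` followed by the five keys a lenient decoder keeps. This matches the reference chain `TiTableInfo["view"]->TiViewInfo["view_definer"]->TiUserIdentity["AuthPlugin"]` in the exception.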

[Stage 0:> (0 + 0) / 1]23/03/03 14:32:21 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:32:36 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:32:51 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:33:06 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

[Stage 0:> (0 + 0) / 1]23/03/03 14:33:21 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:33:36 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:33:51 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:34:06 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

[Stage 0:> (0 + 0) / 1]23/03/03 14:34:21 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:34:36 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:34:51 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:35:06 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

[Stage 0:> (0 + 0) / 1]23/03/03 14:35:21 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:35:36 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:35:51 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:36:06 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

[Stage 0:> (0 + 0) / 1]23/03/03 14:36:21 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

23/03/03 14:36:26 WARN Signaling: Cancelling all active jobs, this can take a while. Press Ctrl+C again to exit now.

org.apache.spark.SparkException: Job 0 cancelled as part of cancellation of all jobs

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1889)

at org.apache.spark.scheduler.DAGScheduler.handleJobCancellation(DAGScheduler.scala:1824)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$doCancelAllJobs$1.apply$mcVI$sp(DAGScheduler.scala:830)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$doCancelAllJobs$1.apply(DAGScheduler.scala:830)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$doCancelAllJobs$1.apply(DAGScheduler.scala:830)

at scala.collection.mutable.HashSet.foreach(HashSet.scala:78)

at org.apache.spark.scheduler.DAGScheduler.doCancelAllJobs(DAGScheduler.scala:830)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2082)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2059)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2048)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:737)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2061)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2082)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2101)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2126)

at org.apache.spark.rdd.RDD$$anonfun$collect$1.apply(RDD.scala:945)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)

at org.apache.spark.rdd.RDD.collect(RDD.scala:944)

at org.apache.spark.sql.execution.SparkPlan.executeCollect(SparkPlan.scala:299)

at org.apache.spark.sql.Dataset.org$apache$spark$sql$Dataset$$collectFromPlan(Dataset.scala:3383)

at org.apache.spark.sql.Dataset$$anonfun$collect$1.apply(Dataset.scala:2782)

at org.apache.spark.sql.Dataset$$anonfun$collect$1.apply(Dataset.scala:2782)

at org.apache.spark.sql.Dataset$$anonfun$53.apply(Dataset.scala:3364)

at org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:78)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

at org.apache.spark.sql.Dataset.withAction(Dataset.scala:3363)

at org.apache.spark.sql.Dataset.collect(Dataset.scala:2782)

… 49 elided
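The JSON parse failure is logged only at WARN level, and the query still reaches `[Stage 0:> (0 + 0) / 1]`. From then on the log shows only the `TaskSchedulerImpl` warning until the job is cancelled with Ctrl+C. That warning says no executor accepted the task. Together with its own hint ("ensure that workers are registered and have sufficient resources"), this appears to point at the Spark cluster rather than at the metadata parse.

The plain-Scala sketch below only does the arithmetic on the timestamps copied from the log; `StarvationTimeline` is a hypothetical name. The warnings are exactly 15 seconds apart, which is consistent with Spark's starvation-warning timer (`spark.starvation.timeout`, 15s by default). The job sat unscheduled for roughly four minutes before the cancel.

```scala
import java.time.{Duration, LocalTime}

object StarvationTimeline {
  // Timestamps of the 17 "Initial job has not accepted any resources" WARN lines.
  val warnTimes: Seq[LocalTime] = Seq(
    "14:32:21", "14:32:36", "14:32:51", "14:33:06", "14:33:21", "14:33:36",
    "14:33:51", "14:34:06", "14:34:21", "14:34:36", "14:34:51", "14:35:06",
    "14:35:21", "14:35:36", "14:35:51", "14:36:06", "14:36:21"
  ).map(s => LocalTime.parse(s))

  // Time of the "Cancelling all active jobs" line (Ctrl+C in spark-shell).
  val cancelTime: LocalTime = LocalTime.parse("14:36:26")

  // Seconds between each pair of consecutive warnings.
  def gapsSeconds(times: Seq[LocalTime]): Seq[Long] =
    times.zip(times.tail).map { case (a, b) => Duration.between(a, b).getSeconds }

  def main(args: Array[String]): Unit = {
    println(s"distinct gaps: ${gapsSeconds(warnTimes).distinct}")
    val waited = Duration.between(warnTimes.head, cancelTime).getSeconds
    println(s"first warning to cancel: $waited s")
  }
}
```

Running `main` prints `distinct gaps: List(15)` and `first warning to cancel: 245 s`.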