TiDB won't start either?

Originally only the 3 TiKV nodes shown above were down. Then I ran a stop followed by a restart, and after that nothing would come back up.

Start TiDB on its own and see what error it reports.

Error: failed to start tidb: failed to start: 10.26.51.137 tidb-4000.service, please check the instance’s log(/httx/data1/deploy/tidb-4000/log) for more detail.: timed out waiting for port 4000 to be started after 2m0s

Now even starting TiDB on its own fails. Ugh.

None of the three TiDB instances will start on its own? Take a look at the detailed logs.

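Suggested approach for now: 1. Start every instance except the failing TiKV nodes so that the service runs normally. 2. Before starting, pause the sync jobs and any other tasks. 3. Back up the database, then try to recover the TiKV instances by taking them offline or by some other means.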
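A minimal sketch of steps 1 and 3, assuming the cluster is managed with `tiup cluster`. It only builds the command lines and does not run them. The cluster name `my-cluster` and the node addresses are hypothetical placeholders, not values from this thread; the `-N`/`--node` flag takes a comma-separated list of node IDs in the `host:port` form shown by `tiup cluster display`.

```python
import shlex


def start_healthy_cmd(cluster, all_nodes, failed_nodes):
    """Build a `tiup cluster start` command for every node except the failed ones."""
    failed = set(failed_nodes)
    healthy = [node for node in all_nodes if node not in failed]
    return ["tiup", "cluster", "start", cluster, "-N", ",".join(healthy)]


def scale_in_cmd(cluster, failed_nodes):
    """Build a `tiup cluster scale-in` command that takes the failed nodes offline."""
    return ["tiup", "cluster", "scale-in", cluster, "-N", ",".join(failed_nodes)]


if __name__ == "__main__":
    # Hypothetical topology: replace with the node IDs from `tiup cluster display`.
    all_nodes = ["10.0.0.1:2379", "10.0.0.1:4000", "10.0.0.2:20160", "10.0.0.3:20160"]
    failed_nodes = ["10.0.0.3:20160"]

    # Print the commands for review instead of executing them.
    print(shlex.join(start_healthy_cmd("my-cluster", all_nodes, failed_nodes)))
    print(shlex.join(scale_in_cmd("my-cluster", failed_nodes)))
```

Printing the commands rather than running them leaves room to pause the sync jobs and take the backup (steps 2 and 3) before anything is scaled in.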

OK, thank you. I'll follow the approach you've laid out.

OK, one moment please.

On the TiKV node that hosts store 7, where the problem first appeared, I found the following log output from around 4 a.m.

[2023/02/28 04:08:29.782 +08:00] [INFO] [scheduler.rs:517] [“get snapshot failed”] [err=“Error(Request(message: "EpochNotMatch current epoch of region 310992 is conf_ver: 1325 version: 2705, but you sent conf_ver: 1325 version: 2704" epoch_not_match { current_regions { id: 310992 start_key: 7480000000000000FFAB5F698000000000FF0000020137393132FF32333734FF323137FF3138353338FF3333FF340000000000FA03FF8000000006246C53FF0000000000000000F7 end_key: 7480000000000000FFAB5F698000000000FF0000020137393333FF36393936FF353433FF3537343433FF3230FF000000000000F903FF800000001208B68DFF0000000000000000F7 region_epoch { conf_ver: 1325 version: 2705 } peers { id: 310993 store_id: 10 } peers { id: 310994 store_id: 1 } peers { id: 310995 store_id: 7 } } current_regions { id: 338008 start_key: 7480000000000000FFAB5F698000000000FF0000020137383539FF30323030FF343830FF3239353431FF3037FF000000000000F903FF8000000002A96741FF0000000000000000F7 end_key: 7480000000000000FFAB5F698000000000FF0000020137393132FF32333734FF323137FF3138353338FF3333FF340000000000FA03FF8000000006246C53FF0000000000000000F7 region_epoch { conf_ver: 1325 version: 2705 } peers { id: 338009 store_id: 10 } peers { id: 338010 store_id: 1 } peers { id: 338011 store_id: 7 } } }))”] [cid=50752110]

[2023/02/28 04:08:34.965 +08:00] [FATAL] [lib.rs:465] [“rocksdb background error. db: kv, reason: compaction, error: Corruption: block checksum mismatch: expected 1381501666, got 1130776720 in /httx/data1/data/tikv-20160/db/150586.sst offset 105625 size 11427”] [backtrace=" 0: tikv_util::set_panic_hook::{{closure}}\n at /home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tikv/components/tikv_util/src/lib.rs:464:18\n 1: std::panicking::rust_panic_with_hook\n at /rustc/2faabf579323f5252329264cc53ba9ff803429a3/library/std/src/panicking.rs:626:17\n 2: std::panicking::begin_panic_handler::{{closure}}\n at /rustc/2faabf579323f5252329264cc53ba9ff803429a3/library/std/src/panicking.rs:519:13\n 3: std::sys_common::backtrace::__rust_end_short_backtrace\n at /rustc/2faabf579323f5252329264cc53ba9ff803429a3/library/std/src/sys_common/backtrace.rs:141:18\n 4: rust_begin_unwind\n at /rustc/2faabf579323f5252329264cc53ba9ff803429a3/library/std/src/panicking.rs:515:5\n 5: std::panicking::begin_panic_fmt\n at /rustc/2faabf579323f5252329264cc53ba9ff803429a3/library/std/src/panicking.rs:457:5\n 6: <engine_rocks::event_listener::RocksEventListener as rocksdb::event_listener::EventListener>::on_background_error\n at /home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tikv/components/engine_rocks/src/event_listener.rs:119:13\n rocksdb::event_listener::on_background_error\n at /rust/git/checkouts/rust-rocksdb-a9a28e74c6ead8ef/7641ef5/src/event_listener.rs:315:5\n 7: _ZN24crocksdb_eventlistener_t17OnBackgroundErrorEN7rocksdb21BackgroundErrorReasonEPNS0_6StatusE\n at /rust/git/checkouts/rust-rocksdb-a9a28e74c6ead8ef/7641ef5/librocksdb_sys/crocksdb/c.cc:2352:24\n 8: _ZN7rocksdb12EventHelpers23NotifyOnBackgroundErrorERKSt6vectorISt10shared_ptrINS_13EventListenerEESaIS4_EENS_21BackgroundErrorReasonEPNS_6StatusEPNS_17InstrumentedMutexEPb\n at /rust/git/checkouts/rust-rocksdb-a9a28e74c6ead8ef/7641ef5/librocksdb_sys/rocksdb/db/event_helpers.cc:53:32\n 9: 
_ZN7rocksdb12ErrorHandler10SetBGErrorERKNS_6StatusENS_21BackgroundErrorReasonE\n at /rust/git/checkouts/rust-rocksdb-a9a28e74c6ead8ef/7641ef5/librocksdb_sys/rocksdb/db/error_handler.cc:219:42\n 10: _ZN7rocksdb6DBImpl20BackgroundCompactionEPbPNS_10JobContextEPNS_9LogBufferEPNS0_19PrepickedCompactionENS_3Env8PriorityE\n at /rust/git/checkouts/rust-rocksdb-a9a28e74c6ead8ef/7641ef5/librocksdb_sys/rocksdb/db/db_impl/db_impl_compaction_flush.cc:2799:30\n 11: _ZN7rocksdb6DBImpl24BackgroundCallCompactionEPNS0_19PrepickedCompactionENS_3Env8PriorityE\n at /rust/git/checkouts/rust-rocksdb-a9a28e74c6ead8ef/7641ef5/librocksdb_sys/rocksdb/db/db_impl/db_impl_compaction_flush.cc:2319:72\n 12: _ZN7rocksdb6DBImpl16BGWorkCompactionEPv\n at /rust/git/checkouts/rust-rocksdb-a9a28e74c6ead8ef/7641ef5/librocksdb_sys/rocksdb/db/db_impl/db_impl_compaction_flush.cc:2093:61\n 13: _ZNKSt8functionIFvvEEclEv\n at /opt/rh/devtoolset-8/root/usr/include/c++/8/bits/std_function.h:687:14\n _ZN7rocksdb14ThreadPoolImpl4Impl8BGThreadEm\n at /rust/git/checkouts/rust-rocksdb-a9a28e74c6ead8ef/7641ef5/librocksdb_sys/rocksdb/util/threadpool_imp.cc:266:9\n 14: _ZN7rocksdb14ThreadPoolImpl4Impl15BGThreadWrapperEPv\n at /rust/git/checkouts/rust-rocksdb-a9a28e74c6ead8ef/7641ef5/librocksdb_sys/rocksdb/util/threadpool_imp.cc:307:15\n 15: execute_native_thread_routine\n 16: start_thread\n 17: clone\n"] [location=components/engine_rocks/src/event_listener.rs:119] [thread_name=]

[2023/02/28 04:08:54.027 +08:00] [INFO] [lib.rs:81] [“Welcome to TiKV”]

[2023/02/28 04:08:54.028 +08:00] [INFO] [lib.rs:86] [“Release Version: 5.4.3”]
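The FATAL line above names the SST file that failed its checksum during compaction. As a rough sketch (the regular expression and the function name `find_corrupt_sst` are my own, not part of TiKV), the file path and checksum values can be pulled out of `tikv.log` like this, which makes it easier to check whether other nodes report the same kind of corruption:

```python
import re

# Matches the RocksDB message seen in the FATAL line above.
CORRUPTION_RE = re.compile(
    r"Corruption: block checksum mismatch: expected (?P<expected>\d+), "
    r"got (?P<got>\d+)\s+in (?P<path>\S+) offset (?P<offset>\d+) size (?P<size>\d+)"
)


def find_corrupt_sst(line):
    """Return details of a checksum-mismatch error in a log line, or None."""
    match = CORRUPTION_RE.search(line)
    if match is None:
        return None
    return {
        "path": match["path"],
        "expected": int(match["expected"]),
        "got": int(match["got"]),
        "offset": int(match["offset"]),
        "size": int(match["size"]),
    }


if __name__ == "__main__":
    # Shortened copy of the FATAL message from the log above.
    sample = (
        "rocksdb background error. db: kv, reason: compaction, error: "
        "Corruption: block checksum mismatch: expected 1381501666, "
        "got 1130776720 in /httx/data1/data/tikv-20160/db/150586.sst "
        "offset 105625 size 11427"
    )
    print(find_corrupt_sst(sample))
```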

Don't worry about that for now. Get the service up first, then recover TiKV.

OK, thank you.

Learned something new.

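Thank you very much. Following your approach, I successfully scaled in and took the three machines offline, then scaled the same three back out, along with a few other steps. The data now looks fine as well. Thanks so much.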

We learn from each other.


What caused this problem? Is there any conclusion?

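The cause is unknown. I wasn't doing anything unusual, just routine data synchronization, and the data volume wasn't large. I've synced the same way for the past several years. Who knows what happened this time. The logs don't contain anything useful either.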

After the scale-in and scale-out, it should stay stable for a while.

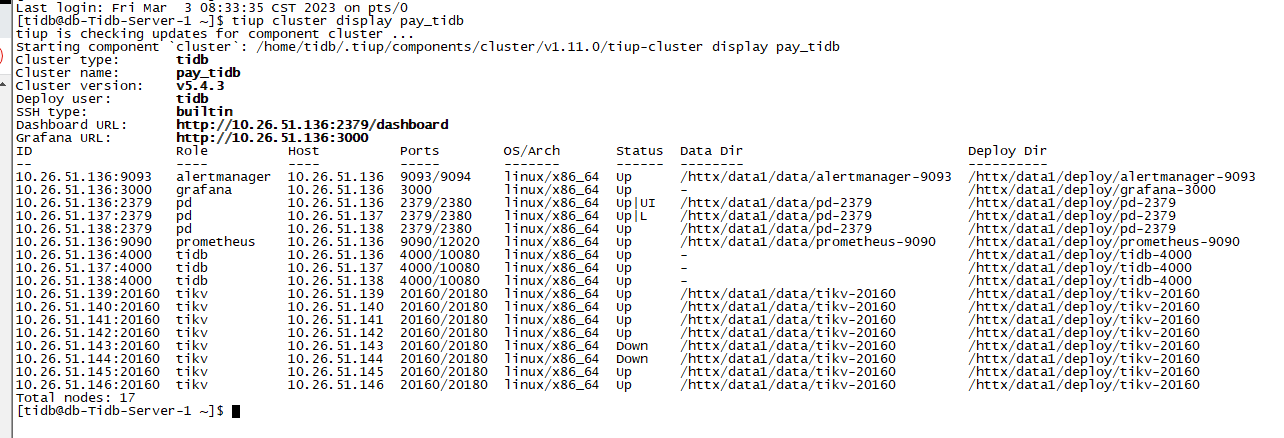

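The day before yesterday I scaled in the three broken machines, and TiDB went fully back to normal. I then scaled those three back out; at the time everything looked fine and the data was intact. But at 06:14 this morning two more nodes went down, and they are not among the original three. My setup is 3 TiDB servers, 3 PD instances co-located on the same machines as the 3 TiDB servers, and 8 TiKV nodes. The TiDB and TiKV machines each have 64 GB of memory and 24 CPU cores. The display output is as follows: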

Last time the failed nodes were 10.26.51.139, 10.26.51.141, and 10.26.51.142.

Today the failed nodes are 10.26.51.143 and 10.26.51.144.

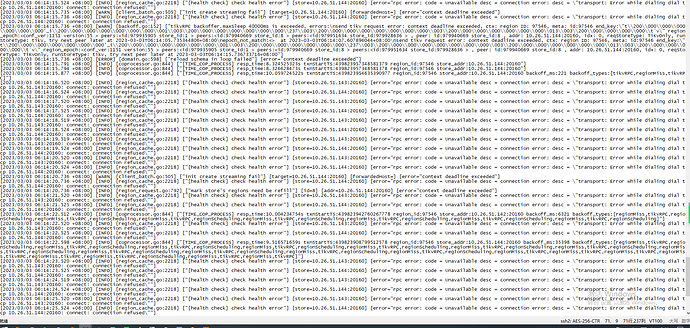

The error TiDB reported at the time was:

The log from one of the down TiKV nodes is attached below:

tikv.log.zip (18.6 MB)

Now I'm completely baffled, because no data was synced yesterday and nothing else was done either.

Check the network. node exporter has some monitoring information for it.

Something is wrong with TiKV.