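To help us respond efficiently, please provide the following information when asking a question. Clearly described problems are answered first.

- [TiDB version]: 5.7.25-TiDB-v2.1.17

Cluster information from the script output: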

System information

+-------+-----------------------+
| Host  | Release               |
+-------+-----------------------+
| tikv1 | 3.10.0-514.el7.x86_64 |
| tikv2 | 3.10.0-514.el7.x86_64 |
| tikv3 | 3.10.0-514.el7.x86_64 |
+-------+-----------------------+

TiDB cluster information

+---------------------+--------------+------+----+------+
| TiDB_version        | Clu_replicas | TiDB | PD | TiKV |
+---------------------+--------------+------+----+------+
| 5.7.25-TiDB-v2.1.17 | 3            | 2    | 3  | 3    |
+---------------------+--------------+------+----+------+

Cluster node information

+------------+--------------+
| Node_IP    | Server_info  |
+------------+--------------+
| instance_0 | tikv+pd      |
| instance_1 | pd+tikv+tidb |
| instance_2 | tikv+pd+tidb |
+------------+--------------+

Capacity & region count

+---------------------+-----------------+--------------+
| Storage_capacity_GB | Storage_used_GB | Region_count |
+---------------------+-----------------+--------------+
| 2598.73             | 67.14           | 1902         |
+---------------------+-----------------+--------------+

QPS

+---------+----------------+-----------------+
| Clu_QPS | Duration_99_MS | Duration_999_MS |
+---------+----------------+-----------------+
| 1638.84 | 1574.65        | 3838.43         |
+---------+----------------+-----------------+

Hot region information

+---------------+----------+-----------+
| Store         | Hot_read | Hot_write |
+---------------+----------+-----------+
| store-store_5 | 1        | 3         |
| store-store_4 | 0        | 3         |
| store-store_1 | 0        | 2         |
+---------------+----------+-----------+

Disk latency information

+--------+----------+-------------+--------------+
| Device | Instance | Read_lat_MS | Write_lat_MS |
+--------+----------+-------------+--------------+
+--------+----------+-------------+--------------+

- [Problem description]:

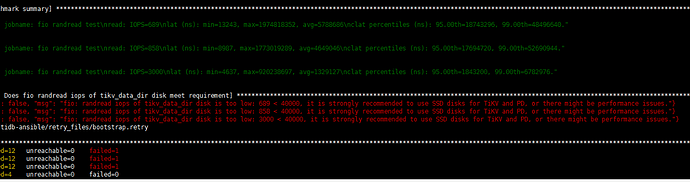

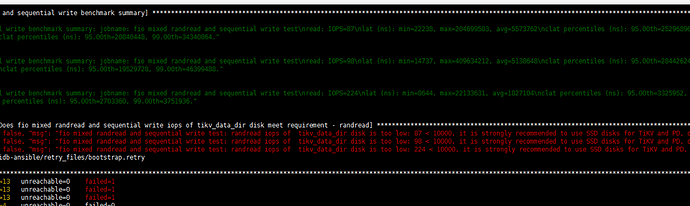

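1. Installation prerequisite: when installing with Ansible, I skipped the disk I/O check because the machines do not use SSDs. The disk IOPS measured during installation were as follows:

- While data is being written, iostat shows %util staying above 90%, while CPU resources are plentiful (32 cores).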
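For anyone who wants to log the same %util figure without keeping iostat open, here is a minimal sketch. It assumes a Linux host and relies on the `io_ticks` counter in `/proc/diskstats` (milliseconds the device spent doing I/O), which is the counter that disk utilisation is normally derived from. The function names and the device name `sda` are hypothetical and not taken from my cluster.

```python
import time


def read_io_ticks(device, path="/proc/diskstats"):
    """Return the io_ticks counter (ms spent doing I/O) for one block device."""
    with open(path) as f:
        for line in f:
            fields = line.split()
            # Layout: major, minor, name, then the per-device counters;
            # io_ticks is the 10th counter, i.e. index 12 of the split line.
            if len(fields) > 12 and fields[2] == device:
                return int(fields[12])
    raise ValueError(f"device {device!r} not found in {path}")


def util_percent(ticks_before, ticks_after, interval_ms):
    """Share of the interval during which the device was busy, capped at 100."""
    if interval_ms <= 0:
        raise ValueError("interval_ms must be positive")
    return min(100.0, (ticks_after - ticks_before) * 100.0 / interval_ms)


def sample_util(device, interval_s=1.0):
    """Take two samples interval_s apart and return the utilisation in percent."""
    before = read_io_ticks(device)
    start = time.monotonic()
    time.sleep(interval_s)
    after = read_io_ticks(device)
    elapsed_ms = (time.monotonic() - start) * 1000.0
    return util_percent(before, after, elapsed_ms)
```

Calling `sample_util("sda")` in a loop during the write workload should track what iostat reports for that device. It only measures how busy the disk is and says nothing about why.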

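Following the key settings described in the official TiKV performance tuning document, I changed my TiKV configuration as shown below (enabling compression for level 0 and level 1 data). I/O is still the bottleneck and CPU usage is still very low. How should the configuration be adjusted, or is there some other problem?

TiKV configuration: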

[server]
grpc-concurrency = 8
grpc-raft-conn-num = 24
labels = { }

[storage]
scheduler-worker-pool-size = 28

[pd]
# This section will be overwritten by command line parameters

[metric]
address = "10.0.60.107:9091"
interval = "15s"
job = "tikv"

[raftstore]
raftdb-path = ""
region-split-check-diff = "32MB"
sync-log = true

[coprocessor]
region-max-size = "384MB"
region-split-size = "256MB"

[rocksdb]
max-background-jobs = 28
wal-dir = ""

[rocksdb.defaultcf]
block-cache-size = "15GB"
compression-per-level = ["zlib", "zlib", "lz4", "lz4", "lz4", "zstd", "zstd"]
max-bytes-for-level-base = "128MB"
target-file-size-base = "32MB"

[rocksdb.lockcf]

[rocksdb.writecf]
block-cache-size = "9GB"
compression-per-level = ["zlib", "zlib", "lz4", "lz4", "lz4", "zstd", "zstd"]
max-bytes-for-level-base = "128MB"
target-file-size-base = "32MB"

[raftdb]

[raftdb.defaultcf]
block-cache-size = "2GB"
compression-per-level = ["zlib", "zlib", "lz4", "lz4", "lz4", "zstd", "zstd"]
max-bytes-for-level-base = "128MB"
target-file-size-base = "32MB"