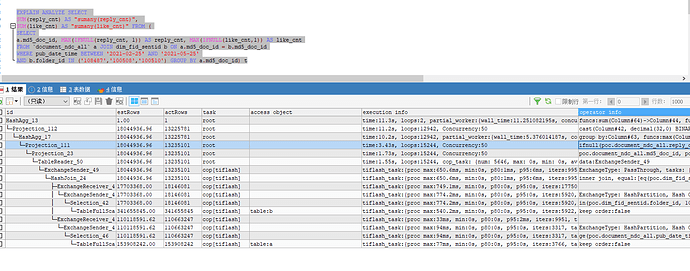

这个应该是没动过的

set @@tidb_hashagg_final_concurrency=30;

另外改写一下sql,减少 tidb 的工作量:

mysql> desc SELECT count(1) AS "countd(post_cnt)" FROM (SELECT 1 FROM poc.`document_ndc_all` a JOIN poc.dim_fid_sentid b ON a.md5_doc_id = b.md5_doc_id WHERE pub_date_time BETWEEN '2021-02-22' AND '2021-05-25' AND b.folder_id IN ('108487', '100508', '100510') GROUP BY a.md5_doc_id) tt;

+----------------------------------------------------+----------+-------------------+---------------+----------------------------------------------------------------------------------------------------------------------------------------+

| id | estRows | task | access object | operator info |

+----------------------------------------------------+----------+-------------------+---------------+----------------------------------------------------------------------------------------------------------------------------------------+

| HashAgg_68 | 1.00 | root | | funcs:count(Column#49)->Column#43 |

| └─TableReader_70 | 1.00 | root | | data:ExchangeSender_69 |

| └─ExchangeSender_69 | 1.00 | batchCop[tiflash] | | ExchangeType: PassThrough |

| └─HashAgg_15 | 1.00 | batchCop[tiflash] | | funcs:count(1)->Column#49 |

| └─Projection_63 | 37.46 | batchCop[tiflash] | | Column#45 |

| └─HashAgg_61 | 37.46 | batchCop[tiflash] | | group by:poc.document_ndc_all.md5_doc_id, funcs:firstrow(1)->Column#45 |

| └─ExchangeReceiver_46 | 37.46 | batchCop[tiflash] | | |

| └─ExchangeSender_45 | 37.46 | batchCop[tiflash] | | ExchangeType: HashPartition, Hash Cols: poc.document_ndc_all.md5_doc_id |

| └─Projection_42 | 37.46 | batchCop[tiflash] | | poc.document_ndc_all.md5_doc_id |

| └─HashJoin_44 | 37.46 | batchCop[tiflash] | | inner join, equal:[eq(poc.dim_fid_sentid.md5_doc_id, poc.document_ndc_all.md5_doc_id)] |

| ├─ExchangeReceiver_29(Build) | 29.97 | batchCop[tiflash] | | |

| │ └─ExchangeSender_28 | 29.97 | batchCop[tiflash] | | ExchangeType: Broadcast |

| │ └─Selection_27 | 29.97 | batchCop[tiflash] | | in(poc.dim_fid_sentid.folder_id, 108487, 100508, 100510), not(isnull(poc.dim_fid_sentid.md5_doc_id)) |

| │ └─TableFullScan_26 | 10000.00 | batchCop[tiflash] | table:b | keep order:false, stats:pseudo |

| └─Selection_31(Probe) | 250.00 | batchCop[tiflash] | | ge(poc.document_ndc_all.pub_date_time, 2021-02-22 00:00:00.000000), le(poc.document_ndc_all.pub_date_time, 2021-05-25 00:00:00.000000) |

| └─TableFullScan_30 | 10000.00 | batchCop[tiflash] | table:a | keep order:false, stats:pseudo |

+----------------------------------------------------+----------+-------------------+---------------+----------------------------------------------------------------------------------------------------------------------------------------+

16 rows in set (0.00 sec)tiflash 是在 clickhouse 上构建的 MPP,在大型 join/agg 的情况下肯定会更快。欢迎继续使用和提问题,我们一直持续迭代完善功能。

非常赞,改用group by 之后,也下推到tiflash执行了,直接缩减到2s执行完毕,非常非常赞

mysql> desc SELECT SUM(reply_cnt) AS "sumany(reply_cnt)", SUM(like_cnt) AS "sumany(like_cnt)" FROM ( SELECT a.md5_doc_id, MAX(IFNULL(reply_cnt, 1)) AS reply_cnt, MAX(IFNULL(like_cnt, 1)) AS like_cnt FROM `document_ndc_all` a JOIN dim_fid_sentid b ON a.md5_doc_id = b.md5_doc_id WHERE pub_date_time BETWEEN '2021-02-25' AND '2021-05-25' AND b.folder_id IN ('108487', '100508', '100510') GROUP BY a.md5_doc_id ) t;

+--------------------------------------------------------+----------+-------------------+---------------+--------------------------------------------------------------------------------------------------------------------------------------------+

| id | estRows | task | access object | operator info |

+--------------------------------------------------------+----------+-------------------+---------------+--------------------------------------------------------------------------------------------------------------------------------------------+

| HashAgg_68 | 1.00 | root | | funcs:sum(Column#52)->Column#44, funcs:sum(Column#53)->Column#45 |

| └─TableReader_70 | 1.00 | root | | data:ExchangeSender_69 |

| └─ExchangeSender_69 | 1.00 | batchCop[tiflash] | | ExchangeType: PassThrough |

| └─HashAgg_15 | 1.00 | batchCop[tiflash] | | funcs:sum(Column#61)->Column#52, funcs:sum(Column#62)->Column#53 |

| └─Projection_89 | 37.46 | batchCop[tiflash] | | cast(Column#42, decimal(32,0) BINARY)->Column#61, cast(Column#43, decimal(32,0) BINARY)->Column#62 |

| └─Projection_63 | 37.46 | batchCop[tiflash] | | Column#42, Column#43 |

| └─HashAgg_61 | 37.46 | batchCop[tiflash] | | group by:Column#60, funcs:max(Column#58)->Column#42, funcs:max(Column#59)->Column#43 |

| └─Projection_88 | 37.46 | batchCop[tiflash] | | ifnull(poc.document_ndc_all.reply_cnt, 1)->Column#58, ifnull(poc.document_ndc_all.like_cnt, 1)->Column#59, poc.document_ndc_all.md5_doc_id |

| └─ExchangeReceiver_46 | 37.46 | batchCop[tiflash] | | |

| └─ExchangeSender_45 | 37.46 | batchCop[tiflash] | | ExchangeType: HashPartition, Hash Cols: poc.document_ndc_all.md5_doc_id |

| └─Projection_42 | 37.46 | batchCop[tiflash] | | poc.document_ndc_all.md5_doc_id, poc.document_ndc_all.reply_cnt, poc.document_ndc_all.like_cnt |

| └─HashJoin_44 | 37.46 | batchCop[tiflash] | | inner join, equal:[eq(poc.dim_fid_sentid.md5_doc_id, poc.document_ndc_all.md5_doc_id)] |

| ├─ExchangeReceiver_29(Build) | 29.97 | batchCop[tiflash] | | |

| │ └─ExchangeSender_28 | 29.97 | batchCop[tiflash] | | ExchangeType: Broadcast |

| │ └─Selection_27 | 29.97 | batchCop[tiflash] | | in(poc.dim_fid_sentid.folder_id, 108487, 100508, 100510), not(isnull(poc.dim_fid_sentid.md5_doc_id)) |

| │ └─TableFullScan_26 | 10000.00 | batchCop[tiflash] | table:b | keep order:false, stats:pseudo |

| └─Selection_31(Probe) | 250.00 | batchCop[tiflash] | | ge(poc.document_ndc_all.pub_date_time, 2021-02-25 00:00:00.000000), le(poc.document_ndc_all.pub_date_time, 2021-05-25 00:00:00.000000) |

| └─TableFullScan_30 | 10000.00 | batchCop[tiflash] | table:a | keep order:false, stats:pseudo |

+--------------------------------------------------------+----------+-------------------+---------------+--------------------------------------------------------------------------------------------------------------------------------------------+

18 rows in set (0.00 sec)

我这边是可以下推的,符合预期。

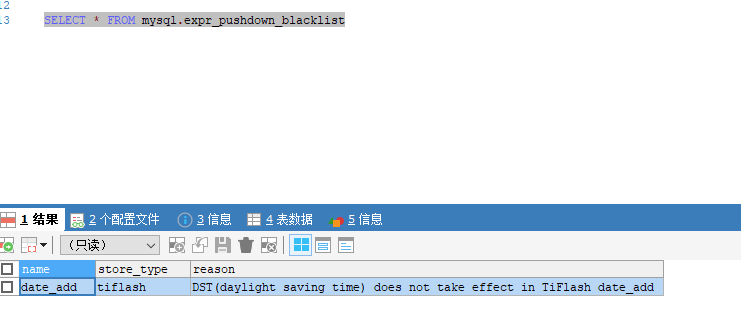

你检查一下 select * from mysql.expr_pushdown_blacklist; 看看 cast 是不是在里面,如果是,安装教程删除一下https://docs.pingcap.com/zh/tidb/stable/blocklist-control-plan

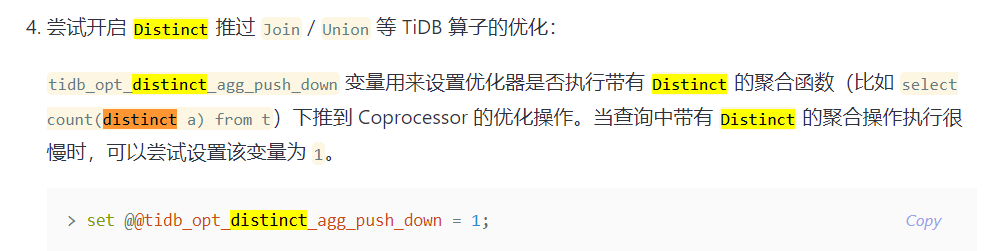

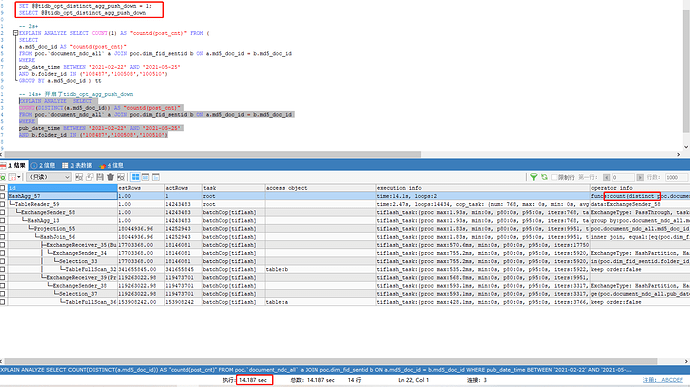

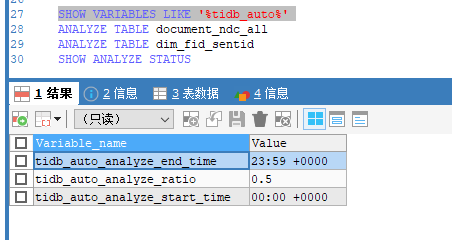

@Hacker_IslRgOns 可以查一下这个参数,有没有设置,感觉如果设置了这个参数,可能不用改为 group by? 可以试试这个文档里的参数。

https://docs.pingcap.com/zh/tidb/stable/tune-tiflash-performance#tidb-相关参数调优

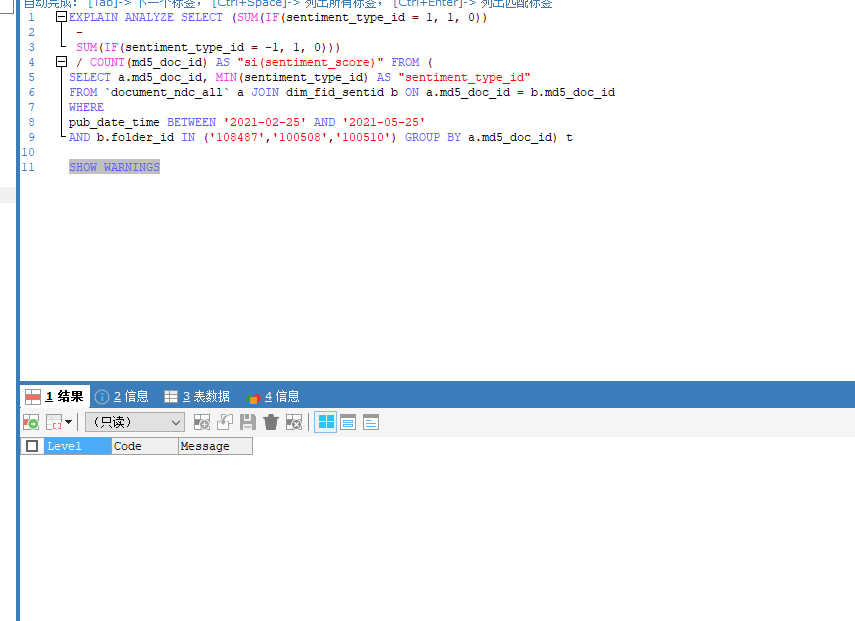

这个计划是不是也有 warnings? show warnings 看看

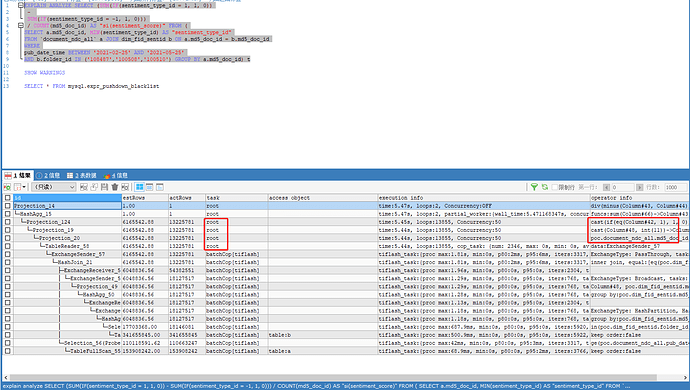

这个 sql 跟上面的不一样,把这个sql 的文本给我吧,我本地看看

analyze-sql.sql (13.4 KB)

@fzhedu

上面那个sql是:

SELECT

SUM(reply_cnt) AS “sumany(reply_cnt)”,

SUM(like_cnt) AS “sumany(like_cnt)” FROM (

SELECT

a.md5_doc_id, MAX(IFNULL(reply_cnt,1)) AS reply_cnt, MAX(IFNULL(like_cnt,1)) AS like_cnt

FROM document_ndc_all a JOIN dim_fid_sentid b ON a.md5_doc_id = b.md5_doc_id

WHERE pub_date_time BETWEEN ‘2021-02-25’ AND ‘2021-05-25’

AND b.folder_id IN (‘108487’,‘100508’,‘100510’) GROUP BY a.md5_doc_id) t

这个文件里的是另一个,都是groupby 没有下推的

经研究,是由于优化器的代码模型有点问题,导致没有选择最优的 2-phase agg。我可以打个 patch,避免这个问题,如果你需要的话。

打patch,是要升级集群5.0.2到一个临时版本?我目前是在测试阶段,倒是不着急,下个release会有正式修复吗

对,就是替换 tidb组件的二进制就行,很简单。

估计得下下个 release 会修好,因为下个 release 即将发布了

只是替换tidb组件的二进制的话,可以的,还请麻烦发我一下,我测试下

你们用的什么机器平台?

aws ec2机器, centos 7系统, tiup安装的