【Overview】 tiup scale-in fails with `failed to scale in: cannot find node id 'ip:port' in topology`

【Background】 Several nodes were scaled in from a shell script, which was run with `sh`. The script contains:

```shell
tiup cluster scale-in -y tidb --node 172.24.0.22:2379
tiup cluster scale-in -y tidb --node 172.24.0.5:2379
tiup cluster scale-in -y tidb --node 172.24.0.22:4000
tiup cluster scale-in -y tidb --node 172.24.0.5:4000
```
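Because each line of the script runs regardless of whether the previous one succeeded, a later command can target a node that an earlier (possibly partially failed) run has already removed; in the attached `tiup cluster display` output, no instance on 172.24.0.22 or 172.24.0.5 is listed any more. A minimal sketch of a more defensive script follows. It is not a TiUP feature: the function names `topology_has_node` and `scale_in_nodes` are hypothetical, and it assumes the first field of each row of `tiup cluster display` output is the node ID, as in the attached output.

```shell
#!/bin/sh
# Hypothetical helpers (not part of TiUP) for scaling in several nodes from a script.

# topology_has_node NODE_ID
# Reads `tiup cluster display` output on stdin and succeeds only if NODE_ID
# appears as the first field (the ID column) of some line.
topology_has_node() {
  awk -v id="$1" '$1 == id { found = 1 } END { exit !found }'
}

# scale_in_nodes CLUSTER NODE_ID...
# Scales in one node at a time, skips nodes that are no longer in the
# topology, and stops at the first failed tiup call.
scale_in_nodes() {
  local cluster="$1"
  shift
  local node topo
  for node in "$@"; do
    # Re-read the topology before every node, since each scale-in changes it.
    topo=$(tiup cluster display "$cluster") || return 1
    if ! printf '%s\n' "$topo" | topology_has_node "$node"; then
      echo "skip $node: not in the topology of $cluster" >&2
      continue
    fi
    tiup cluster scale-in -y "$cluster" --node "$node" || return 1
  done
}
```

With these functions defined in the script, the four commands above could be replaced by `scale_in_nodes tidb 172.24.0.22:2379 172.24.0.5:2379 172.24.0.22:4000 172.24.0.5:4000`. Skipping a missing node is a design choice; if a missing node should be treated as an error, replace `continue` with `return 1`.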

【Symptom】 The scale-in fails with the following errors:

```
Starting component cluster: /home/tidb/.tiup/components/cluster/v1.4.1/tiup-cluster scale-in -y tidb --node 172.24.0.5:4000
- [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/tidb/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/tidb/ssh/id_rsa.pub
- [Parallel] - UserSSH: user=tidb, host=172.24.0.12
- [Parallel] - UserSSH: user=tidb, host=172.24.0.20
- [Parallel] - UserSSH: user=tidb, host=172.24.0.20
- [Parallel] - UserSSH: user=tidb, host=172.24.0.23
- [Parallel] - UserSSH: user=tidb, host=172.24.0.21
- [Parallel] - UserSSH: user=tidb, host=172.24.0.23
- [Parallel] - UserSSH: user=tidb, host=172.24.0.5
- [Parallel] - UserSSH: user=tidb, host=172.24.0.21
- [Parallel] - UserSSH: user=tidb, host=172.24.0.22
- [Parallel] - UserSSH: user=tidb, host=172.24.0.15
- [Parallel] - UserSSH: user=tidb, host=172.24.0.12
- [Parallel] - UserSSH: user=tidb, host=172.24.0.19
- [Parallel] - UserSSH: user=tidb, host=172.24.0.17
- [Parallel] - UserSSH: user=tidb, host=172.24.0.12
- [ Serial ] - ClusterOperate: operation=ScaleInOperation, options={Roles:[] Nodes:[172.24.0.5:4000] Force:false SSHTimeout:5 OptTimeout:120 APITimeout:300 IgnoreConfigCheck:false NativeSSH:false SSHType: CleanupData:false CleanupLog:false RetainDataRoles:[] RetainDataNodes:[] Operation:StartOperation}
Stopping component tidb
Stopping instance 172.24.0.5
Failed to stop tidb-4000.service: Unit tidb-4000.service not loaded.
Stop tidb 172.24.0.5:4000 success
Destroying component tidb
Destroying instance 172.24.0.5
Destroy 172.24.0.5 success
- Destroy tidb paths: [/data/tidb-deploy/tidb-4000/log /data/tidb-deploy/tidb-4000 /etc/systemd/system/tidb-4000.service]
Stopping component node_exporter
Stopping component blackbox_exporter
Failed to stop blackbox_exporter-9115.service: Unit blackbox_exporter-9115.service not loaded.
Destroying monitored 172.24.0.5
Destroying instance 172.24.0.5
172.24.0.5 failed to destroy blackbox exportoer: timed out waiting for port 9115 to be stopped after 2m0s
Error: failed to scale in: failed to destroy monitor: 172.24.0.5 failed to destroy blackbox exportoer: timed out waiting for port 9115 to be stopped after 2m0s: timed out waiting for port 9115 to be stopped after 2m0s
Verbose debug logs has been written to /home/tidb/.tiup/logs/tiup-cluster-debug-2021-06-16-17-57-20.log.
Error: run /home/tidb/.tiup/components/cluster/v1.4.1/tiup-cluster (wd:/home/tidb/.tiup/data/SaURssu) failed: exit status 1
```

```
Starting component cluster: /home/tidb/.tiup/components/cluster/v1.4.1/tiup-cluster scale-in -y tidb --node 172.24.0.22:4000
- [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/tidb/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/tidb/ssh/id_rsa.pub
- [Parallel] - UserSSH: user=tidb, host=172.24.0.12
- [Parallel] - UserSSH: user=tidb, host=172.24.0.19
- [Parallel] - UserSSH: user=tidb, host=172.24.0.20
- [Parallel] - UserSSH: user=tidb, host=172.24.0.23
- [Parallel] - UserSSH: user=tidb, host=172.24.0.20
- [Parallel] - UserSSH: user=tidb, host=172.24.0.21
- [Parallel] - UserSSH: user=tidb, host=172.24.0.23
- [Parallel] - UserSSH: user=tidb, host=172.24.0.12
- [Parallel] - UserSSH: user=tidb, host=172.24.0.12
- [Parallel] - UserSSH: user=tidb, host=172.24.0.21
- [Parallel] - UserSSH: user=tidb, host=172.24.0.15
- [Parallel] - UserSSH: user=tidb, host=172.24.0.17
- [ Serial ] - ClusterOperate: operation=ScaleInOperation, options={Roles:[] Nodes:[172.24.0.22:4000] Force:false SSHTimeout:5 OptTimeout:120 APITimeout:300 IgnoreConfigCheck:false NativeSSH:false SSHType: CleanupData:false CleanupLog:false RetainDataRoles:[] RetainDataNodes:[] Operation:StartOperation}
Error: failed to scale in: cannot find node id '172.24.0.22:4000' in topology
Verbose debug logs has been written to /home/tidb/.tiup/logs/tiup-cluster-debug-2021-06-16-17-57-20.log.
Error: run /home/tidb/.tiup/components/cluster/v1.4.1/tiup-cluster (wd:/home/tidb/.tiup/data/SaUSPp0) failed: exit status 1
```
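In the first run, the tidb instance on 172.24.0.5 was stopped and destroyed successfully, and the command then timed out waiting for port 9115 (blackbox_exporter) to be released. To check on 172.24.0.5 whether anything is still listening on that port, a minimal sketch follows, assuming `ss` from iproute2 is installed on that host and that the fourth column of `ss -ltn` output is `Local Address:Port`; the function name `port_listening` is hypothetical.

```shell
#!/bin/sh
# port_listening PORT
# Reads `ss -ltn` output on stdin (first line is the header) and succeeds
# only if some listening socket's local address ends in ":PORT".
port_listening() {
  awk -v port="$1" 'NR > 1 && $4 ~ (":" port "$") { found = 1 } END { exit !found }'
}
```

On 172.24.0.5, `ss -ltn | port_listening 9115 && echo "port 9115 is still in use"` reports whether the port is still held; running `ss -ltnp` as root additionally shows which process owns the socket.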

【Business impact】 The scale-in does not complete.

【TiDB version】 TiDB v5.0.1

【Attachments】

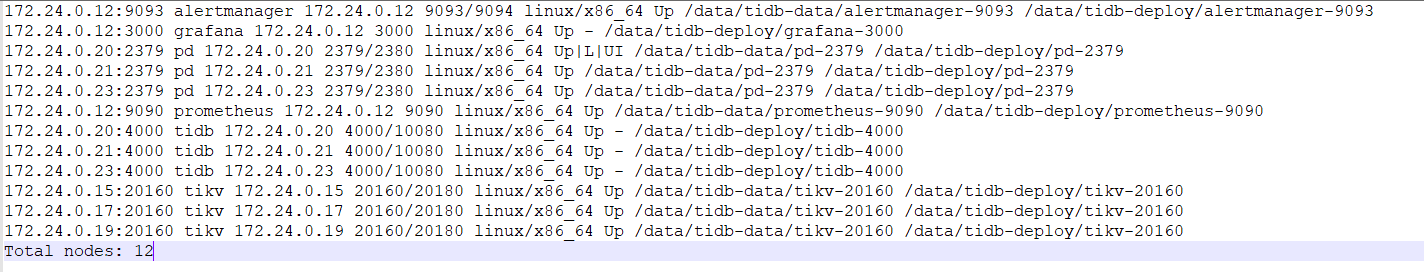

- TiUP Cluster Display output: normal

```
Starting component cluster: /home/tidb/.tiup/components/cluster/v1.4.1/tiup-cluster display tidb
Cluster type: tidb
Cluster name: tidb
Cluster version: v5.0.1
SSH type: builtin
Dashboard URL: http://172.24.0.20:2379/dashboard
ID                 Role          Host         Ports        OS/Arch       Status   Data Dir                           Deploy Dir
172.24.0.12:9093   alertmanager  172.24.0.12  9093/9094    linux/x86_64  Up       /data/tidb-data/alertmanager-9093  /data/tidb-deploy/alertmanager-9093
172.24.0.12:3000   grafana       172.24.0.12  3000         linux/x86_64  Up       -                                  /data/tidb-deploy/grafana-3000
172.24.0.20:2379   pd            172.24.0.20  2379/2380    linux/x86_64  Up|L|UI  /data/tidb-data/pd-2379            /data/tidb-deploy/pd-2379
172.24.0.21:2379   pd            172.24.0.21  2379/2380    linux/x86_64  Up       /data/tidb-data/pd-2379            /data/tidb-deploy/pd-2379
172.24.0.23:2379   pd            172.24.0.23  2379/2380    linux/x86_64  Up       /data/tidb-data/pd-2379            /data/tidb-deploy/pd-2379
172.24.0.12:9090   prometheus    172.24.0.12  9090         linux/x86_64  Up       /data/tidb-data/prometheus-9090    /data/tidb-deploy/prometheus-9090
172.24.0.20:4000   tidb          172.24.0.20  4000/10080   linux/x86_64  Up       -                                  /data/tidb-deploy/tidb-4000
172.24.0.21:4000   tidb          172.24.0.21  4000/10080   linux/x86_64  Up       -                                  /data/tidb-deploy/tidb-4000
172.24.0.23:4000   tidb          172.24.0.23  4000/10080   linux/x86_64  Up       -                                  /data/tidb-deploy/tidb-4000
172.24.0.15:20160  tikv          172.24.0.15  20160/20180  linux/x86_64  Up       /data/tidb-data/tikv-20160         /data/tidb-deploy/tikv-20160
172.24.0.17:20160  tikv          172.24.0.17  20160/20180  linux/x86_64  Up       /data/tidb-data/tikv-20160         /data/tidb-deploy/tikv-20160
172.24.0.19:20160  tikv          172.24.0.19  20160/20180  linux/x86_64  Up       /data/tidb-data/tikv-20160         /data/tidb-deploy/tikv-20160
Total nodes: 12
```

- TiUP Cluster Edit Config output: normal