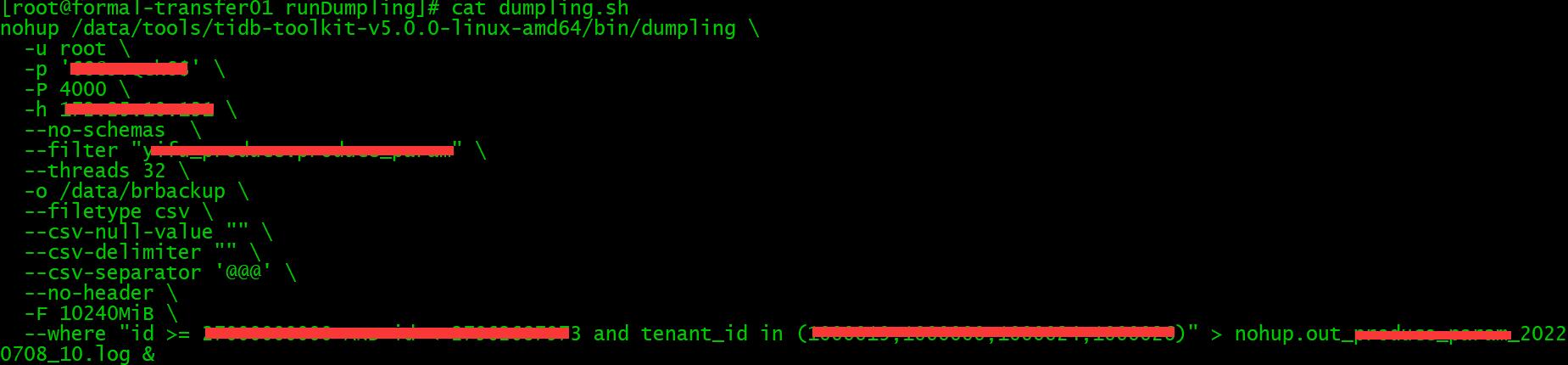

Figure 1: Export command

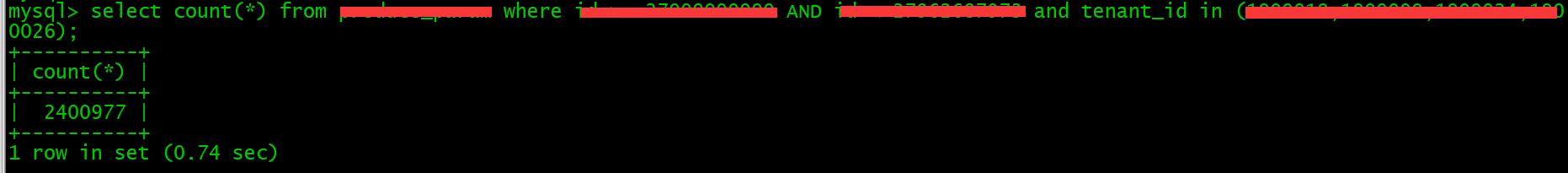

Figure 2: Volume of data to export

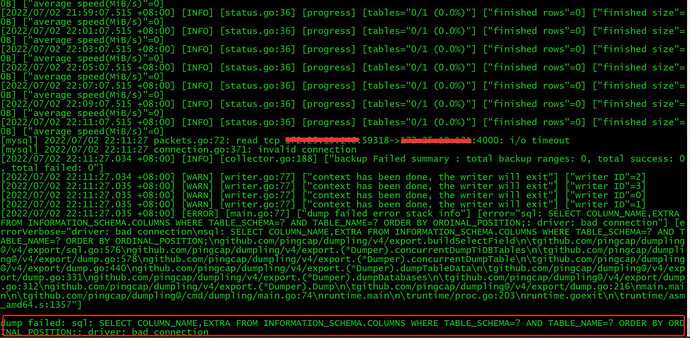

Figure 3: Error log for the export command:

nohup.out_produce_param_20220708_10.log (8.8 KB)

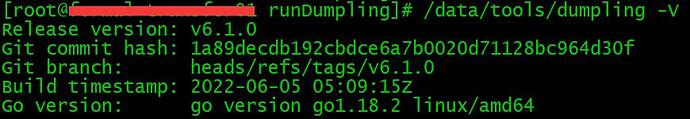

```
Release version: v5.0.0
Git commit hash: 463e4cc96078fa532baeea05624d9b5ceb044fb1
Git branch: heads/refs/tags/v5.0.0
Build timestamp: 2021-04-06 04:42:26Z
Go version: go version go1.13 linux/amd64
[2022/07/08 17:04:13.134 +08:00] [INFO] [versions.go:55] ["Welcome to dumpling"] ["Release Version"=v5.0.0] ["Git Commit Hash"=463e4cc96078fa532baeea05624d9b5ceb044fb1] ["Git Branch"=heads/refs/tags/v5.0.0] ["Build timestamp"="2021-04-06 04:42:26"] ["Go Version"="go version go1.13 linux/amd64"]
[2022/07/08 17:04:13.138 +08:00] [INFO] [config.go:599] ["detect server type"] [type=TiDB]
[2022/07/08 17:04:13.138 +08:00] [INFO] [config.go:618] ["detect server version"] [version=5.1.0]
[2022/07/08 17:04:13.148 +08:00] [INFO] [client.go:193] ["[pd] create pd client with endpoints"] [pd-address="[x.x.x.134:2379,x.x.x.135:2379,x.x.x.136:2379]"]
[2022/07/08 17:04:13.150 +08:00] [INFO] [base_client.go:308] ["[pd] switch leader"] [new-leader=http://x.x.x.136:2379] [old-leader=]
[2022/07/08 17:04:13.150 +08:00] [INFO] [base_client.go:112] ["[pd] init cluster id"] [cluster-id=6989862638718844439]
[2022/07/08 17:04:13.151 +08:00] [INFO] [dump.go:921] ["generate dumpling gc safePoint id"] [id=dumpling_1657271053151646318]
[2022/07/08 17:04:13.156 +08:00] [INFO] [dump.go:82] ["begin to run Dump"] [conf="{"s3":{"endpoint":"","region":"","storage-class":"","sse":"","sse-kms-key-id":"","acl":"","access-key":"","secret-access-key":"","provider":"","force-path-style":true,"use-accelerate-endpoint":false},"gcs":{"endpoint":"","storage-class":"","predefined-acl":"","credentials-file":""},"AllowCleartextPasswords":false,"SortByPk":true,"NoViews":true,"NoHeader":true,"NoSchemas":true,"NoData":false,"CompleteInsert":false,"TransactionalConsistency":true,"EscapeBackslash":true,"DumpEmptyDatabase":true,"PosAfterConnect":false,"CompressType":0,"Host":"x.x.x.131","Port":4000,"Threads":32,"User":"root","Security":{"CAPath":"","CertPath":"","KeyPath":""},"LogLevel":"info","LogFile":"","LogFormat":"text","OutputDirPath":"/data/brbackup","StatusAddr":":8281","Snapshot":"434443662949875740","Consistency":"snapshot","CsvNullValue":"","SQL":"","CsvSeparator":"@@@","CsvDelimiter":"","Databases":[],"Where":"id \u003e= 27000000000 AND id \u003c 27962687973 and tenant_id in (1000019,1000000,1000024,1000026)","FileType":"csv","ServerInfo":{"ServerType":3,"ServerVersion":"5.1.0"},"Rows":0,"ReadTimeout":900000000000,"TiDBMemQuotaQuery":0,"FileSize":10737418240,"StatementSize":1000000,"SessionParams":{"tidb_snapshot":"434443662949875740"},"Tables":null}"]
[2022/07/08 17:06:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:08:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:10:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:12:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:14:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:16:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:18:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:20:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:22:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:24:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:26:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:28:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:30:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:32:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:34:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:36:13.356 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:38:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:40:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:42:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[2022/07/08 17:44:13.355 +08:00] [INFO] [status.go:36] [progress] [tables="0/1 (0.0%)"] ["finished rows"=0] ["finished size"=0B] ["average speed(MiB/s)"=0]
[mysql] 2022/07/08 17:44:43 packets.go:72: read tcp x.x.x.146:32882->x.x.x.131:4000: i/o timeout
[mysql] 2022/07/08 17:44:43 connection.go:371: invalid connection
[2022/07/08 17:44:43.298 +08:00] [INFO] [collector.go:188] ["backup Failed summary : total backup ranges: 0, total success: 0, total failed: 0"]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=2]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=31]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=30]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=29]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=27]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=1]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=28]
[2022/07/08 17:44:43.300 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=19]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=15]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=14]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=13]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=0]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=12]
[2022/07/08 17:44:43.299 +08:00] [WARN] [writer.go:77] ["context has been done, the writer will exit"] ["writer ID"=7]
[2022/07/08 17:44:43.299 +08:00] [ERROR] [main.go:77] ["dump failed error stack info"] [error="sql: SELECT COLUMN_NAME,EXTRA FROM INFORMATION_SCHEMA.COLUMNS WHERE TABLE_SCHEMA=? AND TABLE_NAME=? ORDER BY ORDINAL_POSITION;: driver: bad connection"] [errorVerbose="driver: bad connection
sql: SELECT COLUMN_NAME,EXTRA FROM INFORMATION_SCHEMA.COLUMNS WHERE TABLE_SCHEMA=? AND TABLE_NAME=? ORDER BY ORDINAL_POSITION;
github.com/pingcap/dumpling/v4/export.buildSelectField
\tgithub.com/pingcap/dumpling@/v4/export/sql.go:576
github.com/pingcap/dumpling/v4/export.(*Dumper).concurrentDumpTiDBTables
\tgithub.com/pingcap/dumpling@/v4/export/dump.go:578
github.com/pingcap/dumpling/v4/export.(*Dumper).concurrentDumpTable
\tgithub.com/pingcap/dumpling@/v4/export/dump.go:440
github.com/pingcap/dumpling/v4/export.(*Dumper).buildConcatTask.func1
\tgithub.com/pingcap/dumpling@/v4/export/dump.go:340
runtime.goexit
\truntime/asm_amd64.s:1357"]
dump failed: sql: SELECT COLUMN_NAME,EXTRA FROM INFORMATION_SCHEMA.COLUMNS WHERE TABLE_SCHEMA=? AND TABLE_NAME=? ORDER BY ORDINAL_POSITION;: driver: bad connection
```

Figure 4: TiDB1 log

131.log (2.6 MB)

Figure 5: TiDB2 log

132.log (1.0 MB)