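【TiDB Environment】Production, test, or POC

【TiDB Version】

【Problem Encountered】

Cluster version: 4.0.6

After setting up TiCDC, rows inserted into the upstream table never appear downstream, and I cannot tell where the problem is.

My configuration steps were as follows: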

1. Create the database and table upstream

create database cdcdb;

use cdcdb;

create table test(id int not null primary key, name varchar(100));

2. Create the database and table downstream

create database cdcdb;

use cdcdb;

create table test(id int not null primary key, name varchar(100));

3. Add a replication user on the downstream database

mysql> create user 'root'@'xxx.xxx.xxx.136' identified by 'admin';

Query OK, 0 rows affected (0.03 sec)

mysql> grant all on *.* to 'root'@'xxx.xxx.xxx.136';

Query OK, 0 rows affected (0.02 sec)

Test: connecting from the upstream host to the downstream database works.

[tidb@TIDBjsjdsjkxt01 ~]$ /home/tidb/tidb_init/mysql-5.7.26-el7-x86_64/bin/mysql -h xxx.xxx.xxx.128 -uroot -P 4000 -p

4. Create the replication task from the command line, without a configuration file

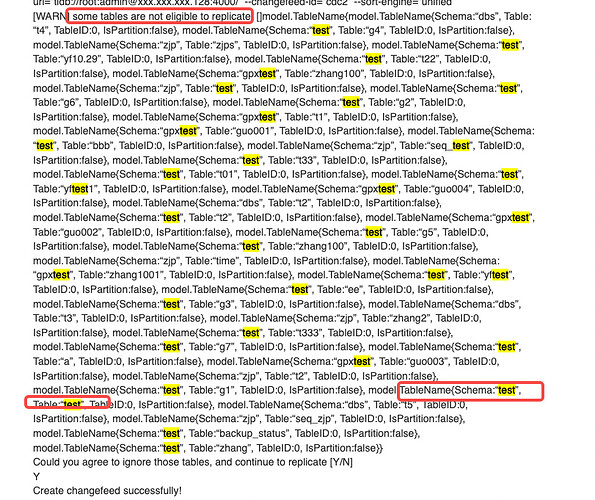

[tidb@TIDBjsjdsjkxt01 ~]$ cdc cli changefeed create --pd=http://xxx.xxx.xxx.136:2379 --sink-uri="tidb://root:admin@xxx.xxx.xxx.128:4000/" --changefeed-id="cdc2" --sort-engine="unified"

[WARN] some tables are not eligible to replicate, model.TableName{model.TableName{Schema:"dbs", Table:"t4", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"g4", TableID:0, IsPartition:false}, model.TableName{Schema:"zjp", Table:"zjps", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"yf10.29", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"t22", TableID:0, IsPartition:false}, model.TableName{Schema:"gpxtest", Table:"zhang100", TableID:0, IsPartition:false}, model.TableName{Schema:"zjp", Table:"test", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"g6", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"g2", TableID:0, IsPartition:false}, model.TableName{Schema:"gpxtest", Table:"t1", TableID:0, IsPartition:false}, model.TableName{Schema:"gpxtest", Table:"guo001", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"bbb", TableID:0, IsPartition:false}, model.TableName{Schema:"zjp", Table:"seq_test", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"t33", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"t01", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"yftest1", TableID:0, IsPartition:false}, model.TableName{Schema:"gpxtest", Table:"guo004", TableID:0, IsPartition:false}, model.TableName{Schema:"dbs", Table:"t2", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"t2", TableID:0, IsPartition:false}, model.TableName{Schema:"gpxtest", Table:"guo002", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"g5", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"zhang100", TableID:0, IsPartition:false}, model.TableName{Schema:"zjp", Table:"time", TableID:0, IsPartition:false}, model.TableName{Schema:"gpxtest", Table:"zhang1001", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"yftest", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"ee", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"g3", TableID:0, IsPartition:false}, model.TableName{Schema:"dbs", Table:"t3", TableID:0, IsPartition:false}, model.TableName{Schema:"zjp", Table:"zhang2", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"t333", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"g7", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"a", TableID:0, IsPartition:false}, model.TableName{Schema:"gpxtest", Table:"guo003", TableID:0, IsPartition:false}, model.TableName{Schema:"zjp", Table:"t2", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"g1", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"test", TableID:0, IsPartition:false}, model.TableName{Schema:"dbs", Table:"t5", TableID:0, IsPartition:false}, model.TableName{Schema:"zjp", Table:"seq_zjp", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"backup_status", TableID:0, IsPartition:false}, model.TableName{Schema:"test", Table:"zhang", TableID:0, IsPartition:false}}

Could you agree to ignore those tables, and continue to replicate [Y/N]

Y

Create changefeed successfully!

ID: cdc2

Info: {"sink-uri":"tidb://root:admin@xxx.xxx.xxx.128:4000/","opts":{},"create-time":"2022-06-22T11:24:42.813427811+08:00","start-ts":434075935120556033,"target-ts":0,"admin-job-type":0,"sort-engine":"unified","sort-dir":".","config":{"case-sensitive":true,"enable-old-value":false,"filter":{"rules":["*.*"],"ignore-txn-start-ts":null,"ddl-allow-list":null},"mounter":{"worker-num":16},"sink":{"dispatchers":null,"protocol":"default"},"cyclic-replication":{"enable":false,"replica-id":0,"filter-replica-ids":null,"id-buckets":0,"sync-ddl":false},"scheduler":{"type":"table-number","polling-time":-1}},"state":"normal","history":null,"error":null}

5. Check the replication status

[tidb@TIDBjsjdsjkxt01 ~]$ cdc cli changefeed list --pd=http://xxx.xxx.xxx.136:2379

[
  {
    "id": "cdc2",
    "summary": {
      "state": "normal",
      "tso": 434075935120556033,
      "checkpoint": "2022-06-22 11:24:42.778",
      "error": null
    }
  }
]
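One detail in this output: the `tso` shown by `changefeed list` is identical to the `start-ts` printed when the changefeed was created. That could simply mean the list was queried right after creation, or it could mean the checkpoint is not advancing. A minimal sketch for decoding the value, relying on the TiDB TSO layout (physical time in milliseconds in the high bits, an 18-bit logical counter in the low bits) and assuming bash with GNU `date`:

```shell
#!/usr/bin/env bash
# Decode a TiDB TSO into its physical (Unix ms) and logical parts.
tso=434075935120556033                     # value copied from the output above

physical_ms=$(( tso >> 18 ))               # high bits: Unix time in milliseconds
logical=$(( tso & ((1 << 18) - 1) ))       # low 18 bits: logical counter

echo "physical_ms=${physical_ms} logical=${logical}"
# prints: physical_ms=1655868282778 logical=1

# Human-readable form in UTC (GNU date syntax is an assumption here).
date -u -d "@$(( physical_ms / 1000 ))" '+%Y-%m-%d %H:%M:%S UTC'
```

The decoded time is 03:24:42 UTC, i.e. 11:24:42 at +08:00, which matches the `checkpoint` field. Running `changefeed list` again after inserting rows and decoding the new `tso` the same way would show whether the checkpoint has moved.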

6. Insert test data upstream; the rows do not show up downstream.

I found no error messages in the related cdc log; see the attachment.

cdc.log (9.9 KB)
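Since the log shows no errors, further state could be collected from the `cdc cli` subcommands. The sketch below is only a suggestion of what to gather, not a confirmed diagnosis; the PD address is the masked placeholder from this post, and the variable names are made up for illustration:

```shell
#!/usr/bin/env bash
# Collect TiCDC state for the changefeed created in step 4.
PD_ADDR="http://xxx.xxx.xxx.136:2379"   # masked PD endpoint from this post
CHANGEFEED_ID="cdc2"

if command -v cdc >/dev/null 2>&1; then
  # Which TiCDC server processes (captures) are registered with PD.
  cdc cli capture list --pd="$PD_ADDR"
  # Detailed status of the changefeed, including its checkpoint.
  cdc cli changefeed query --pd="$PD_ADDR" --changefeed-id="$CHANGEFEED_ID"
  # Which processors are running replication work.
  cdc cli processor list --pd="$PD_ADDR"
else
  echo "cdc binary not found in PATH" >&2
fi
```

Comparing the checkpoint reported by `changefeed query` before and after an upstream insert into `cdcdb.test` would show whether the task is consuming changes at all.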

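【Reproduction Steps】What operations led to the problem

【Symptoms and Impact】

【Attachments】

Please provide the version information of each component, such as cdc/tikv, which can be obtained by running cdc version / tikv-server --version.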