pd-ctl output:

{
  "count": 4,
  "stores": [
    {
      "store": {
        "id": 1,
        "address": "172.21.23.138:20160",
        "labels": [
          {
            "key": "host",
            "value": "kv-host-138"
          }
        ],
        "version": "4.0.8",
        "status_address": "0.0.0.0:20180",
        "git_hash": "83091173e960e5a0f5f417e921a0801d2f6635ae",
        "start_timestamp": 1611222452,
        "deploy_path": "/data/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1614043787479839137,
        "state_name": "Up"
      },
      "status": {
        "capacity": "251.9GiB",
        "available": "218.1GiB",
        "used_size": "1.585GiB",
        "leader_count": 194,
        "leader_weight": 1,
        "leader_score": 194,
        "leader_size": 2155,
        "region_count": 564,
        "region_weight": 1,
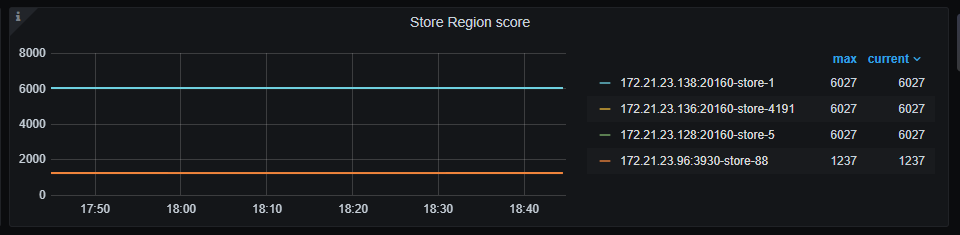

        "region_score": 6027,
        "region_size": 6027,
        "start_ts": "2021-01-21T17:47:32+08:00",
        "last_heartbeat_ts": "2021-02-23T09:29:47.479839137+08:00",
        "uptime": "783h42m15.479839137s"
      }
    },
    {
      "store": {
        "id": 5,
        "address": "172.21.23.128:20160",
        "labels": [
          {
            "key": "host",
            "value": "kv-host-128"
          }
        ],
        "version": "4.0.8",
        "status_address": "0.0.0.0:20180",
        "git_hash": "83091173e960e5a0f5f417e921a0801d2f6635ae",
        "start_timestamp": 1611222432,
        "deploy_path": "/data/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1614043780657840226,
        "state_name": "Up"
      },
      "status": {
        "capacity": "251.9GiB",
        "available": "202.6GiB",
        "used_size": "1.614GiB",
        "leader_count": 186,
        "leader_weight": 1,
        "leader_score": 186,
        "leader_size": 2211,
        "region_count": 564,
        "region_weight": 1,
        "region_score": 6027,
        "region_size": 6027,
        "start_ts": "2021-01-21T17:47:12+08:00",
        "last_heartbeat_ts": "2021-02-23T09:29:40.657840226+08:00",
        "uptime": "783h42m28.657840226s"
      }
    },
    {
      "store": {
        "id": 88,
        "address": "172.21.23.96:3930",
        "state": 1,
        "labels": [
          {
            "key": "engine",
            "value": "tiflash"
          }
        ],
        "version": "v4.0.8",
        "peer_address": "172.21.23.96:20170",
        "status_address": "172.21.23.96:20292",
        "git_hash": "f0a78d93e440dac7c7935ea7e67c656b1bb5f913",
        "start_timestamp": 1611222351,
        "deploy_path": "/data/tidb-deploy/tiflash-9000/bin/tiflash",
        "last_heartbeat": 1614043788032387822,
        "state_name": "Offline"
      },
      "status": {
        "capacity": "196.7GiB",
        "available": "196.6GiB",
        "used_size": "167.8MiB",
        "leader_count": 0,
        "leader_weight": 1,
        "leader_score": 0,
        "leader_size": 0,
        "region_count": 66,
        "region_weight": 1,
        "region_score": 1237,
        "region_size": 1237,
        "start_ts": "2021-01-21T17:45:51+08:00",
        "last_heartbeat_ts": "2021-02-23T09:29:48.032387822+08:00",
        "uptime": "783h43m57.032387822s"
      }
    },
    {
      "store": {
        "id": 4191,
        "address": "172.21.23.136:20160",
        "labels": [
          {
            "key": "host",
            "value": "kv-host-136"
          }
        ],
        "version": "4.0.8",
        "status_address": "0.0.0.0:20180",
        "git_hash": "83091173e960e5a0f5f417e921a0801d2f6635ae",
        "start_timestamp": 1613982253,
        "deploy_path": "/data/tidb-deploy/tikv-20160/bin",
        "last_heartbeat": 1614043790057614330,
        "state_name": "Up"
      },
      "status": {
        "capacity": "251.9GiB",
        "available": "248.2GiB",
        "used_size": "914.6MiB",
        "leader_count": 184,
        "leader_weight": 1,
        "leader_score": 184,
        "leader_size": 1661,
        "region_count": 564,
        "region_weight": 1,
        "region_score": 6027,
        "region_size": 6027,
        "start_ts": "2021-02-22T16:24:13+08:00",
        "last_heartbeat_ts": "2021-02-23T09:29:50.05761433+08:00",
        "uptime": "17h5m37.05761433s"
      }
    }
  ]
}
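A minimal Python (3.9+) sketch for scanning output like this. It embeds a trimmed excerpt of the JSON above, flags stores whose `state_name` is not `Up` (here the TiFlash store 88, which is `Offline`), and recomputes uptime from the integer fields `start_timestamp` (seconds) and `last_heartbeat` (nanoseconds). For this output the recomputed values match the `uptime` strings pd-ctl prints, truncated to whole seconds. The helper names `summarize` and `format_uptime` are hypothetical, and treating a store without an `engine` label as TiKV is an assumption of the sketch, not something pd-ctl reports.

```python
import json

# Trimmed excerpt of the pd-ctl output above: only the fields this sketch reads are kept.
PD_CTL_OUTPUT = """
{"count": 4, "stores": [
  {"store": {"id": 1, "address": "172.21.23.138:20160", "state_name": "Up",
             "start_timestamp": 1611222452, "last_heartbeat": 1614043787479839137},
   "status": {"leader_count": 194, "region_count": 564}},
  {"store": {"id": 5, "address": "172.21.23.128:20160", "state_name": "Up",
             "start_timestamp": 1611222432, "last_heartbeat": 1614043780657840226},
   "status": {"leader_count": 186, "region_count": 564}},
  {"store": {"id": 88, "address": "172.21.23.96:3930", "state_name": "Offline",
             "labels": [{"key": "engine", "value": "tiflash"}],
             "start_timestamp": 1611222351, "last_heartbeat": 1614043788032387822},
   "status": {"leader_count": 0, "region_count": 66}},
  {"store": {"id": 4191, "address": "172.21.23.136:20160", "state_name": "Up",
             "start_timestamp": 1613982253, "last_heartbeat": 1614043790057614330},
   "status": {"leader_count": 184, "region_count": 564}}
]}
"""


def format_uptime(seconds: int) -> str:
    """Render whole seconds in the h/m/s form pd-ctl uses (fractional part dropped)."""
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours}h{minutes}m{secs}s"


def summarize(raw: str) -> list[dict]:
    """Hypothetical helper: one summary row per store in a pd-ctl store listing."""
    data = json.loads(raw)
    rows = []
    for entry in data["stores"]:
        store, status = entry["store"], entry["status"]
        labels = {lbl["key"]: lbl["value"] for lbl in store.get("labels", [])}
        # last_heartbeat is in nanoseconds, start_timestamp in seconds.
        uptime_s = store["last_heartbeat"] // 1_000_000_000 - store["start_timestamp"]
        rows.append({
            "id": store["id"],
            "address": store["address"],
            # Assumption: a store without an "engine" label is a TiKV store.
            "engine": labels.get("engine", "tikv"),
            "state": store["state_name"],
            "leaders": status["leader_count"],
            "regions": status["region_count"],
            "uptime": format_uptime(uptime_s),
        })
    return rows


for row in summarize(PD_CTL_OUTPUT):
    flag = "" if row["state"] == "Up" else "  <-- not Up"
    print(f'{row["id"]:>5} {row["engine"]:<8} {row["state"]:<8} {row["uptime"]}{flag}')
```

Summing `leader_count` across stores gives 564 (194 + 186 + 184), equal to the `region_count` of each TiKV store. That is consistent with every Region having exactly one leader and a peer on each of the three TiKV stores, while the TiFlash store holds no leaders.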