Cluster version: v3.0.19

Scale: TiKV: 102, PD: 3, TiDB: 10

The cluster is currently seeing slow writes during the evening peak. Details from the TiKV log:

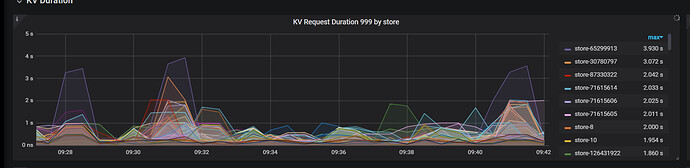

Based on the max value shown in the monitoring panel "kv request duration 999 by store", I checked the TiKV log on that store:

[err="Request(message: "EpochNotMatch current epoch of region 31028983 is conf_ver: 40061 version: 66708, but you sent conf_ver: 40061 version: 66707" epoch_not_match { current_regions { id: 31028983 start_key: 7480000000000010FFFE5F7280000002F5FFDE5EFF0000000000FA end_key: 7480000000000011FF0000000000000000F8 region_epoch { conf_ver: 40061 version: 66708 } peers { id: 129516334 store_id: 65299913 } peers { id: 129516688 store_id: 66997891 } peers { id: 129517743 store_id: 71615608 } } current_regions { id: 129581721 start_key: 7480000000000010FFFE5F7280000002F5FFDAA0F10000000000FA end_key: 7480000000000010FFFE5F7280000002F5FFDE5EFF0000000000FA region_epoch { conf_ver: 40061 version: 66708 } peers { id: 129581722 store_id: 65299913 } peers { id: 129581723 store_id: 66997891 } peers { id: 129581724 store_id: 71615608 } } })"] [cid=9036593]

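The request duration stayed at around 30s for several minutes and writes were very slow. After I restarted this node, writes recovered.

Every time this happens, the abnormal store's response time stays above 30s, and the TiKV logs on those nodes all show the same region ID.

I suspect there may be a hotspot.

Please follow the documentation below to confirm whether there is a hotspot.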

https://book.tidb.io/session4/chapter7/hotspot-resolved.html#722-定位热点表--索引

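OK. My understanding is that a single region cannot appear on different nodes at the same time.

Could you post the complete command and its output instead of only the screenshot?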

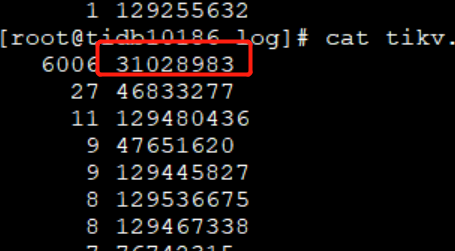

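1. The ranking of abnormal regions filtered from the log matches what the monitoring shows.

2. On the abnormal node, I ran this command: cat tikv.log | grep "EpochNotMatch" | awk -F '[' '{print $6}' | awk -F '[ ]' '{print $7}' | grep -v "region" | sort -rn | uniq -c | sort -nr

curl http://tidb-server:10080/regions/{regionID}

3. Running pd-ctl region {regionID} shows that the current leader is the same as the one in the log.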

» region 31028983   ### this was run just now
{
  "id": 31028983,
  "start_key": "7480000000000010FFFE5F728000000303FF52267A0000000000FA",
  "end_key": "7480000000000011FF0000000000000000F8",
  "epoch": {
    "conf_ver": 40088,
    "version": 67635
  },
  "peers": [
    {
      "id": 129516334,
      "store_id": 65299913
    },
    {
      "id": 129790779,
      "store_id": 34896008
    },
    {
      "id": 129800463,
      "store_id": 9
    }
  ],
  "leader": {
    "id": 129516334,
    "store_id": 65299913
  },
  "approximate_size": 100,
  "approximate_keys": 222444
}
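To tie a region back to a table, the start_key and end_key printed by pd-ctl can be decoded by hand. Below is a minimal Python sketch, assuming the usual TiDB key layout (`t` + 8-byte table ID, then `_r` + row ID or `_i` + index ID) wrapped in TiKV's memcomparable byte encoding (8-byte groups, each followed by a marker byte equal to 0xFF minus the number of padding bytes). The function names are hypothetical and not part of any TiDB tool.

```python
SIGN_BIT = 1 << 63


def decode_memcomparable(hex_key: str) -> bytes:
    """Strip the 8-byte-group padding from a hex-encoded region key."""
    raw = bytes.fromhex(hex_key)
    if len(raw) % 9 != 0:
        raise ValueError("encoded key length must be a multiple of 9 bytes")
    out = bytearray()
    for i in range(0, len(raw), 9):
        group, marker = raw[i:i + 8], raw[i + 8]
        pad = 0xFF - marker          # number of padding bytes in this group
        if pad > 8:
            raise ValueError("invalid marker byte")
        out += group[:8 - pad]
        if pad:                      # a padded group is always the last one
            break
    return bytes(out)


def decode_int64(b: bytes) -> int:
    """Comparable int64: big-endian with the sign bit flipped."""
    value = int.from_bytes(b, "big") ^ SIGN_BIT
    return value - (1 << 64) if value >= SIGN_BIT else value


def describe_key(hex_key: str) -> dict:
    """Return the table ID and, if present, the row ID or index ID."""
    key = decode_memcomparable(hex_key)
    if key[:1] != b"t" or len(key) < 9:
        return {"kind": "non-table"}
    info = {"table_id": decode_int64(key[1:9])}
    tail = key[9:]
    if tail[:2] == b"_r" and len(tail) >= 10:
        info["kind"] = "row"
        info["row_id"] = decode_int64(tail[2:10])
    elif tail[:2] == b"_i" and len(tail) >= 10:
        info["kind"] = "index"
        info["index_id"] = decode_int64(tail[2:10])
    else:
        info["kind"] = "table-boundary"
    return info


if __name__ == "__main__":
    # start_key of region 31028983 as it appears in the EpochNotMatch log line
    print(describe_key("7480000000000010FFFE5F7280000002F5FFDE5EFF0000000000FA"))
    # end_key of the same region
    print(describe_key("7480000000000011FF0000000000000000F8"))
```

If several keys from the slow store decode to the same table ID with steadily increasing row IDs, that would be consistent with an append-style write hotspot on that table. The table ID can then be looked up in TiDB's schema metadata.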

Log:

[2021/02/08 20:40:12.907 +08:00] [INFO] [process.rs:176] ["get snapshot failed"] [err="Request(message: "EpochNotMatch current epoch of region 31028983 is conf_ver: 40061 version: 66708, but you sent conf_ver: 40061 version: 66707" epoch_not_match { current_regions { id: 31028983 start_key: 7480000000000010FFFE5F7280000002F5FFDE5EFF0000000000FA end_key: 7480000000000011FF0000000000000000F8 region_epoch { conf_ver: 40061 version: 66708 } peers { id: 129516334 store_id: 65299913 } peers { id: 129516688 store_id: 66997891 } peers { id: 129517743 store_id: 71615608 } } current_regions { id: 129581721 start_key: 7480000000000010FFFE5F7280000002F5FFDAA0F10000000000FA end_key: 7480000000000010FFFE5F7280000002F5FFDE5EFF0000000000FA region_epoch { conf_ver: 40061 version: 66708 } peers { id: 129581722 store_id: 65299913 } peers { id: 129581723 store_id: 66997891 } peers { id: 129581724 store_id: 71615608 } } })"] [cid=9036036]

[2021/02/08 20:40:13.061 +08:00] [INFO] [process.rs:176] ["get snapshot failed"] [err="Request(message: "EpochNotMatch current epoch of region 31028983 is conf_ver: 40061 version: 66708, but you sent conf_ver: 40061 version: 66707" epoch_not_match { current_regions { id: 31028983 start_key: 7480000000000010FFFE5F7280000002F5FFDE5EFF0000000000FA end_key: 7480000000000011FF0000000000000000F8 region_epoch { conf_ver: 40061 version: 66708 } peers { id: 129516334 store_id: 65299913 } peers { id: 129516688 store_id: 66997891 } peers { id: 129517743 store_id: 71615608 } } current_regions { id: 129581721 start_key: 7480000000000010FFFE5F7280000002F5FFDAA0F10000000000FA end_key: 7480000000000010FFFE5F7280000002F5FFDE5EFF0000000000FA region_epoch { conf_ver: 40061 version: 66708 } peers { id: 129581722 store_id: 65299913 } peers { id: 129581723 store_id: 66997891 } peers { id: 129581724 store_id: 71615608 } } })"] [cid=9037204]
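The grep/awk pipeline in step 2 depends on field positions in the log line, which can shift between versions. As an alternative, here is a small Python sketch that matches the EpochNotMatch message text directly and counts occurrences per region. The regular expression is based only on the message format visible in the log lines above, and the function names are hypothetical.

```python
import re
from collections import Counter

# Pattern taken from the message text in the log lines quoted in this thread.
EPOCH_RE = re.compile(
    r"EpochNotMatch current epoch of region (\d+) is "
    r"conf_ver: (\d+) version: (\d+), "
    r"but you sent conf_ver: (\d+) version: (\d+)"
)


def parse_epoch_not_match(line):
    """Return region ID and how far the sent epoch lags, or None if no match."""
    m = EPOCH_RE.search(line)
    if m is None:
        return None
    region, cur_conf, cur_ver, sent_conf, sent_ver = map(int, m.groups())
    return {
        "region": region,
        # version changes on split/merge, conf_ver on peer membership changes
        "version_behind": cur_ver - sent_ver,
        "conf_ver_behind": cur_conf - sent_conf,
    }


def count_by_region(lines):
    """Count EpochNotMatch lines per region, most frequent first."""
    counts = Counter()
    for line in lines:
        info = parse_epoch_not_match(line)
        if info is not None:
            counts[info["region"]] += 1
    return counts.most_common()
```

Passing an open file handle for tikv.log to count_by_region gives the same kind of ranking as the shell pipeline. In the two log lines above only the version differs (66707 sent, 66708 current), which points at a region split or merge rather than a peer change.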

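One more thing: this table has no primary key but does have a unique index, and it currently holds about 20 billion rows. Would you recommend setting SHARD_ROW_ID_BITS?

If the CPU is not under particularly heavy pressure, you can consider using SHARD_ROW_ID_BITS to resolve the hotspot.

The region scheduling errors may be caused by read/write conflicts.

Could you use pd-ctl hot read to check the hotspots per store?

OK. The log above is from the most recent evening peak. Here is the current top 10 for hot reads: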

» region topread 10
{
  "count": 10,
  "regions": [
    {
      "id": 43954914,
      "start_key": "7480000000000000FF5F5F698000000000FF0000130380000000FF0004112E03800000FF0000000000038000FF125F33F7A99403B4FF6E62E00001CFE103FF8000000026C61010FF0000000000000000F7",
      "end_key": "7480000000000000FF5F5F698000000000FF0000130380000000FF0004112E03800000FF0004900B52038000FF125D1BD22348038EFF6D646C57E4A00003FF80000000215DBDCCFF0000000000000000F7",
      "epoch": {
        "conf_ver": 683,
        "version": 666
      },
      "peers": [
        {
          "id": 118272931,
          "store_id": 31918594
        },
        {
          "id": 123893732,
          "store_id": 68996523
        },
        {
          "id": 124427226,
          "store_id": 66453472
        }
      ],
      "leader": {
        "id": 118272931,
        "store_id": 31918594
      },
      "written_bytes": 902,
      "read_bytes": 1343333602,
      "written_keys": 10,
      "read_keys": 13169947,
      "approximate_size": 89,
      "approximate_keys": 913376
    },
    {
      "id": 128472339,
      "start_key": "7480000000000000FF295F728000000000FFE5C3730000000000FA",
      "end_key": "7480000000000000FF295F728000000000FFE5C7550000000000FA",
      "epoch": {
        "conf_ver": 4943,
        "version": 2648
      },
      "peers": [
        {
          "id": 129263530,
          "store_id": 87330329
        },
        {
          "id": 129428074,
          "store_id": 13
        },
        {
          "id": 129563159,
          "store_id": 129470554
        }
      ],
      "leader": {
        "id": 129263530,
        "store_id": 87330329
      },
      "written_bytes": 488093,
      "read_bytes": 768231467,
      "written_keys": 83,
      "read_keys": 102753,
      "approximate_size": 115,
      "approximate_keys": 115
    },
    {
      "id": 129462972,
      "start_key": "7480000000000000FF295F728000000000FFE5C7550000000000FA",
      "end_key": "7480000000000000FF295F728000000000FFE8897B0000000000FA",
      "epoch": {
        "conf_ver": 4019,
        "version": 2695
      },
      "peers": [
        {
          "id": 129462974,
          "store_id": 41325555
        },
        {
          "id": 129462975,
          "store_id": 30121991
        },
        {
          "id": 129704918,
          "store_id": 128317130
        }
      ],
      "leader": {
        "id": 129462974,
        "store_id": 41325555
      },
      "written_bytes": 177714,
      "read_bytes": 354279603,
      "written_keys": 30,
      "read_keys": 67030,
      "approximate_size": 56,
      "approximate_keys": 53
    },
    {
      "id": 78812561,
      "start_key": "7480000000000001FFD95F698000000000FF0000060380000000FF00040F80038E624AFFD21424D000038000FF000007F0D0AC0000FD",
      "end_key": "7480000000000001FFD95F698000000000FF0000060380000000FF0004112E03B46E60FFE800015848038000FF0000083D59370000FD",
      "epoch": {
        "conf_ver": 632,
        "version": 1561
      },
      "peers": [
        {
          "id": 113799145,
          "store_id": 71615605
        },
        {
          "id": 127336356,
          "store_id": 68996529
        },
        {
          "id": 128436425,
          "store_id": 66453472
        }
      ],
      "leader": {
        "id": 128436425,
        "store_id": 66453472
      },
      "written_bytes": 3069,
      "read_bytes": 310349235,
      "written_keys": 42,
      "read_keys": 4138073,
      "approximate_size": 103,
      "approximate_keys": 1423269
    },
    {
      "id": 40641048,
      "start_key": "7480000000000001FFD95F698000000000FF0000060380000000FF0004112E03B46E60FFE800015848038000FF0000083D59370000FD",
      "end_key": "7480000000000001FFD95F698000000000FF0000060380000000FF0004125C03B4C372FF280002416F038000FF000008E6181F0000FD",
      "epoch": {
        "conf_ver": 632,
        "version": 1561
      },
      "peers": [
        {
          "id": 126205275,
          "store_id": 9
        },
        {
          "id": 126209951,
          "store_id": 56479442
        },
        {
          "id": 126449104,
          "store_id": 2228312
        }
      ],
      "leader": {
        "id": 126205275,
        "store_id": 9
      },
      "written_bytes": 2408,
      "read_bytes": 273298751,
      "written_keys": 33,
      "read_keys": 3646973,
      "approximate_size": 54,
      "approximate_keys": 745463
    },
    {
      "id": 112022225,
      "start_key": "7480000000000000FF295F728000000000FFE5BE2F0000000000FA",
      "end_key": "7480000000000000FF295F728000000000FFE5C3730000000000FA",
      "epoch": {
        "conf_ver": 2491,
        "version": 2661
      },
      "peers": [
        {
          "id": 129232407,
          "store_id": 87330325
        },
        {
          "id": 129264321,
          "store_id": 1932003
        },
        {
          "id": 129540894,
          "store_id": 87330324
        }
      ],
      "leader": {
        "id": 129264321,
        "store_id": 1932003
      },
      "written_bytes": 1031230,
      "read_bytes": 235318465,
      "written_keys": 161,
      "read_keys": 44726,
      "approximate_size": 112,
      "approximate_keys": 1444
    },
    {
      "id": 33359280,
      "start_key": "7480000000000000FFC65F698000000000FF0000080380000000FF0001367503800000FF00048B4EEF038000FF00000133F0800132FF30313831313230FFFF3230353832343133FFFF37333531303538FF36FF303231350000FF0000FB0380000001FF63EFF62A00000000FB",
      "end_key": "7480000000000000FFC65F698000000000FF0000080380000000FF0001367503800000FF00048B4EF0038000FF0000013416070132FF30313930373237FFFF3133313034393133FFFF37333531303536FF35FF303331350000FF0000FB0380000003FFAE530BE500000000FB",
      "epoch": {
        "conf_ver": 561,
        "version": 651
      },
      "peers": [
        {
          "id": 125442448,
          "store_id": 38206033
        },
        {
          "id": 127416484,
          "store_id": 110931663
        },
        {
          "id": 128268849,
          "store_id": 87550945
        }
      ],
      "leader": {
        "id": 128268849,
        "store_id": 87550945
      },
      "read_bytes": 231476399,
      "read_keys": 1841308,
      "approximate_size": 98,
      "approximate_keys": 792603
    },
    {
      "id": 53412688,
      "start_key": "7480000000000000FFC65F698000000000FF0000080380000000FF0001367503800000FF00048B2844038000FF000001343D6F0132FF30323030383135FFFF3130343535313133FFFF37333533353530FF35FF303138340000FF0000FB0380000008FF10DB0D0E00000000FB",
      "end_key": "7480000000000000FFC65F698000000000FF0000080380000000FF0001367503800000FF00048B29C5038000FF0000013414C70132FF30313930343037FFFF3138303533313133FFFF37333533353337FF36FF303135330000FF0000FB0380000002FF9877D93D00000000FB",
      "epoch": {
        "conf_ver": 586,
        "version": 641
      },
      "peers": [
        {
          "id": 124963804,
          "store_id": 56141586
        },
        {
          "id": 127574262,
          "store_id": 16
        },
        {
          "id": 129324452,
          "store_id": 38206034
        }
      ],
      "leader": {
        "id": 124963804,
        "store_id": 56141586
      },
      "read_bytes": 204162273,
      "read_keys": 1616335,
      "approximate_size": 36,
      "approximate_keys": 293555
    },
    {
      "id": 100852839,
      "start_key": "7480000000000002FF085F728000000000FF5C76220000000000FA",
      "end_key": "7480000000000002FF085F728000000000FF600BBF0000000000FA",
      "epoch": {
        "conf_ver": 874,
        "version": 1890
      },
      "peers": [
        {
          "id": 120101316,
          "store_id": 39829522
        },
        {
          "id": 123122367,
          "store_id": 34896009
        },
        {
          "id": 124742184,
          "store_id": 31918592
        }
      ],
      "leader": {
        "id": 123122367,
        "store_id": 34896009
      },
      "read_bytes": 200485550,
      "read_keys": 840608,
      "approximate_size": 95,
      "approximate_keys": 211414
    },
    {
      "id": 122851206,
      "start_key": "7480000000000002FF085F728000000000FF6344E30000000000FA",
      "end_key": "7480000000000002FF085F728000000000FF69F7040000000000FA",
      "epoch": {
        "conf_ver": 838,
        "version": 1892
      },
      "peers": [
        {
          "id": 122851207,
          "store_id": 66997891
        },
        {
          "id": 123591157,
          "store_id": 66453472
        },
        {
          "id": 128410315,
          "store_id": 9
        }
      ],
      "leader": {
        "id": 128410315,
        "store_id": 9
      },
      "read_bytes": 200450114,
      "read_keys": 836496,
      "approximate_size": 97,
      "approximate_keys": 204800
    }
  ]
}
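Since the question was about hotspots per store, it can help to roll the per-region numbers up by leader store. The sketch below assumes the JSON shape shown in the output above (a "regions" list whose entries carry "leader.store_id" and an optional "read_bytes"). The sample is trimmed to three of the ten regions and to the fields that matter here, and the function name is hypothetical.

```python
import json
from collections import defaultdict

# Trimmed copy of three entries from the topread output in this thread.
SAMPLE_TOPREAD = """
{
  "regions": [
    {"id": 43954914,  "leader": {"id": 118272931, "store_id": 31918594}, "read_bytes": 1343333602},
    {"id": 40641048,  "leader": {"id": 126205275, "store_id": 9},        "read_bytes": 273298751},
    {"id": 122851206, "leader": {"id": 128410315, "store_id": 9},        "read_bytes": 200450114}
  ]
}
"""


def read_bytes_by_leader_store(topread_json: str):
    """Sum read_bytes per leader store, largest total first."""
    data = json.loads(topread_json)
    totals = defaultdict(int)
    for region in data["regions"]:
        store_id = region["leader"]["store_id"]
        # read_bytes may be absent for a region, so default to 0
        totals[store_id] += region.get("read_bytes", 0)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for store_id, total in read_bytes_by_leader_store(SAMPLE_TOPREAD):
        print(store_id, total)
```

Running the same function over topwrite output with "written_bytes" substituted would give the write-side view, which is the more relevant one for a slow-write problem.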

I suggest two ways to address this:

- Consider scattering the table's regions:

curl -X POST http://{TiDBIP}:10080/tables/{db}/{table}/scatter

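- Use SHARD_ROW_ID_BITS to resolve the hotspot.

What value is generally recommended? I currently have 51 machines, each running 2 TiKV nodes.

Increasing SHARD_ROW_ID_BITS increases the number of RPC requests, which in turn raises CPU usage and network overhead.

I suggest starting with SHARD_ROW_ID_BITS = 6, which gives 2^6 = 64 shards.

If that does not help enough, you can raise it to SHARD_ROW_ID_BITS = 8, as long as the CPU can handle it.

In addition, SHARD_ROW_ID_BITS can be changed dynamically; each change only affects newly written data.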
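To make the 2^6 = 64 arithmetic concrete, here is a rough Python sketch of the idea behind SHARD_ROW_ID_BITS: a few high bits of the implicit row ID (below the sign bit) are filled with a per-transaction shard number, so consecutive inserts land in different key ranges. This is an illustration under stated assumptions, not TiDB's implementation: the hash is a stand-in (blake2b from the standard library), and the function names are hypothetical.

```python
import hashlib


def shard_count(shard_bits: int) -> int:
    """Number of distinct row-ID prefixes for a given SHARD_ROW_ID_BITS."""
    return 1 << shard_bits


def sharded_row_id(seq: int, txn_start_ts: int, shard_bits: int) -> int:
    """Place a shard number in the top bits (below the sign bit) of a row ID.

    seq          -- the auto-allocated, monotonically increasing part
    txn_start_ts -- value the shard is derived from (assumed: one per transaction)
    """
    digest = hashlib.blake2b(txn_start_ts.to_bytes(8, "big"), digest_size=8).digest()
    shard = int.from_bytes(digest, "big") & ((1 << shard_bits) - 1)
    return (shard << (63 - shard_bits)) | seq


if __name__ == "__main__":
    print(shard_count(6), shard_count(8))
    for seq in range(1, 6):
        row_id = sharded_row_id(seq, txn_start_ts=1000 + seq, shard_bits=6)
        # the top 6 bits below the sign bit select one of 64 ranges
        print(seq, row_id >> 57)
```

The setting itself is applied to the table with a statement of the form ALTER TABLE t SHARD_ROW_ID_BITS = 6. As noted above, rows written before the change keep their existing row IDs.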

OK, I'll make the change and observe. Thanks.
