Check whether this TiKV node's directory has been regenerated, and upload the log file.

Yes, it has (sob).

Our current plan is that if the node can't be recovered directly, we will scale out a new node.

The directory has been regenerated. The log is attached.

tikv2.log (61 MB)

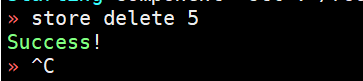

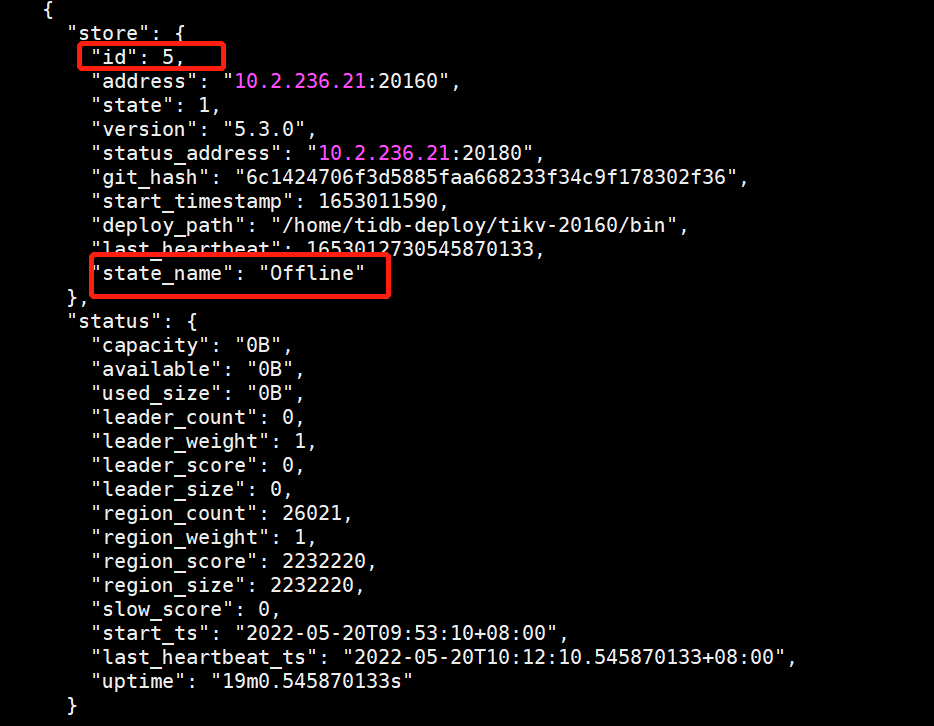

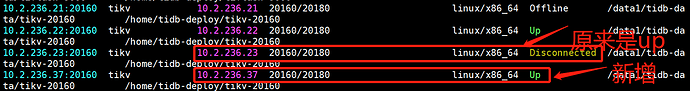

Judging from the log, the store ID is duplicated. Did deleting the store ID in step 9 succeed? Check which stores exist right now.

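Hello. If the majority of Region replicas are still intact, I recommend scaling in and then scaling out. Since the data on that node has already been deleted, Unsafe Recovery would not gain you much.

References: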

https://docs.pingcap.com/zh/tidb/stable/scale-tidb-using-tiup#缩容-tidbpdtikv-节点

https://docs.pingcap.com/zh/tidb/stable/scale-tidb-using-tiup#扩容-tidbpdtikv-节点

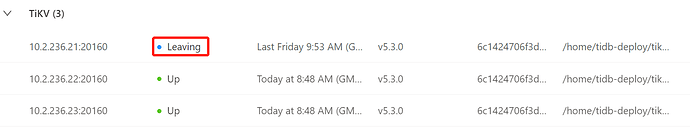

We currently have 3 TiKV nodes, and 1 of them is damaged. Should we scale out by adding 1 TiKV node first, and then scale in the damaged node?

First scale in the node whose data was deleted by mistake, clean up its data, and then scale out.

The instance is no longer running, right? Then just prune it: tiup cluster prune

After running tiup cluster prune <cluster-name>, can the damaged node be scaled out again without changing its IP?

tiup cluster scale-out <cluster-name> scale-out.yaml [-p] [-i /home/root/.ssh/gcp_rsa]

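Yes. Clean out its original deploy directory and data directory completely, then go through the regular scale-in and scale-out procedure.

With 1 of 3 nodes down, only a minority of replicas is lost, so there is no need to use ctl for unsafe-recover. Just scale in the TiKV and TiFlash instances on that server (you can try adding --force), and then scale them out again.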
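The sequence suggested above can be sketched as a small dry-run script. This is only an illustration under assumptions: the cluster name is a placeholder, the node address is taken from the error log posted in this thread, and `scale-out.yaml` is assumed to already describe the TiKV instance to add back. By default it only prints the commands; set `DRY_RUN=0` to actually run them.

```shell
#!/usr/bin/env bash
# Sketch: scale in the broken TiKV node, prune it, then scale it back out.
# CLUSTER is a placeholder; NODE is the address seen in this thread's log.
CLUSTER="${CLUSTER:-<cluster-name>}"
NODE="${NODE:-10.2.236.21:20160}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only, 0 = execute them

# Print a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

recover_steps() {
  # 1. Look at the current topology and node status.
  run tiup cluster display "$CLUSTER" &&
  # 2. Remove the broken node; --force skips waiting for a clean offline.
  run tiup cluster scale-in "$CLUSTER" --node "$NODE" --force &&
  # 3. Clean up any node left in Tombstone state.
  run tiup cluster prune "$CLUSTER" &&
  # 4. Add the node back using the topology file.
  run tiup cluster scale-out "$CLUSTER" scale-out.yaml
}

recover_steps
```

Before step 4, the old deploy and data directories on the server should already be cleaned out, as mentioned above. Whether `--force` is needed depends on how the earlier scale-in ended, so review each printed command before running it for real.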

It reports an error:

[2022/05/23 17:07:48.598 +08:00] [ERROR] [util.rs:460] ["request failed"] [err_code=KV:PD:gRPC] [err="Grpc(RpcFailure(RpcStatus { code: 2-UNKNOWN, message: "duplicated store address: id:1151064 address:\"10.2.236.21:20160\" version:\"5.3.0\" status_address:\"10.2.236.21:20180\" git_hash:\"6c1424706f3d5885faa668233f34c9f178302f36\" start_timestamp:1653296863 deploy_path:\"/home/tidb-deploy/tikv-20160/bin\" , already registered by id:5 address:\"10.2.236.21:20160\" state:Offline version:\"5.3.0\" status_address:\"10.2.236.21:20180\" git_hash:\"6c1424706f3d5885faa668233f34c9f178302f36\" start_timestamp:1653011590 deploy_path:\"/home/tidb-deploy/tikv-20160/bin\" last_heartbeat:1653012730545870133 ", details: [] }))"]

[2022/05/23 17:07:48.598 +08:00] [FATAL] [server.rs:843] ["failed to start node: Grpc(RpcFailure(RpcStatus { code: 2-UNKNOWN, message: "duplicated store address: id:1151064 address:\"10.2.236.21:20160\" version:\"5.3.0\" status_address:\"10.2.236.21:20180\" git_hash:\"6c1424706f3d5885faa668233f34c9f178302f36\" start_timestamp:1653296863 deploy_path:\"/home/tidb-deploy/tikv-20160/bin\" , already registered by id:5 address:\"10.2.236.21:20160\" state:Offline version:\"5.3.0\" status_address:\"10.2.236.21:20180\" git_hash:\"6c1424706f3d5885faa668233f34c9f178302f36\" start_timestamp:1653011590 deploy_path:\"/home/tidb-deploy/tikv-20160/bin\" last_heartbeat:1653012730545870133 ", details: [] }))"]
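To make the error easier to read, here is a minimal sketch that pulls out the ID and state of the store that PD says already owns the address. The function name `conflicting_store` and the file name `tikv.log` are made up for illustration, and the sample line is abridged from the log above.

```shell
#!/usr/bin/env bash
# Sketch: print "<store-id> <state>" for the store that already holds the
# address, based on a "duplicated store address" log line read from stdin.
conflicting_store() {
  sed -n 's/.*already registered by id:\([0-9][0-9]*\) .* state:\([A-Za-z]*\).*/\1 \2/p'
}

# Abridged sample of the message shown in this thread.
sample='duplicated store address: id:1151064 address:\"10.2.236.21:20160\" , already registered by id:5 address:\"10.2.236.21:20160\" state:Offline version:\"5.3.0\"'
printf '%s\n' "$sample" | conflicting_store   # prints: 5 Offline

# Against a real log file (the path is hypothetical):
#   grep 'duplicated store address' tikv.log | tail -n 1 | conflicting_store
# The store could then be inspected with pd-ctl, for example:
#   tiup ctl:v5.3.0 pd -u http://<pd-address>:2379 store 5
```

Here the old store 5 is still in the `Offline` state, not `Tombstone`, so PD still treats the address `10.2.236.21:20160` as taken. That would be consistent with the new store 1151064 being rejected at startup.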

Did you run tiup cluster prune?

Check the log to see whether the node has been restarted.

The newly added TiKV node has only synced a few dozen GB of data after a whole night. How can we check that the data is correct?