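We use DM to merge 64 sharded tables into a single TiDB table. In every source table `id` is an auto-increment primary key, but because of the merge, `id` in the target table is only a regular index. Which approach would work here to avoid or resolve the problem this causes?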

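This is a hotspot issue, and the official docs cover it, so searching there is enough. Any of the documented ways to locate and resolve it will do. Hotspot visualization (Key Visualizer) is in TiDB Dashboard; search the official docs for the keyword to see how to use it.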
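A toy model of why a merged table without an integer primary key tends to develop a write hotspot, and why `SHARD_ROW_ID_BITS` helps. Assumptions: TiDB gives such a table an implicit, sequentially allocated row ID (`_tidb_rowid`), and `SHARD_ROW_ID_BITS = S` places a shard number in the S bits just below the sign bit. The sketch picks the shard round-robin, which is not how TiDB chooses it, and splits the key space into an arbitrary 16 equal-width "regions".

```python
from collections import Counter

SIGNED_BITS = 63  # row IDs are signed 64-bit; the sign bit stays 0


def row_id(seq: int, shard_bits: int) -> int:
    """Toy row ID: shard number in the top `shard_bits` bits below the sign bit.

    The shard is chosen round-robin from `seq` purely for illustration;
    TiDB derives it differently. Assumes seq < 2**(63 - shard_bits).
    """
    if shard_bits == 0:
        return seq
    shard = seq % (1 << shard_bits)
    return (shard << (SIGNED_BITS - shard_bits)) | seq


def region_of(rid: int, num_regions: int) -> int:
    """Index of the equal-width key range that `rid` falls into."""
    return rid // ((1 << SIGNED_BITS) // num_regions)


def write_distribution(num_writes: int, shard_bits: int, num_regions: int = 16) -> Counter:
    """How many of `num_writes` consecutive inserts hit each region."""
    return Counter(
        region_of(row_id(seq, shard_bits), num_regions)
        for seq in range(1, num_writes + 1)
    )


if __name__ == "__main__":
    print("sequential:", dict(write_distribution(1000, shard_bits=0)))
    print("4 shard bits:", dict(write_distribution(1000, shard_bits=4)))
```

With no shard bits every insert falls into a single range; with 4 shard bits the 1000 inserts spread over all 16. In a real cluster the range receiving appends is the last one rather than the first, but the effect is the same: one region leader takes all the writes.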

table-regions.py: when the table holds too much data, the script fails to return any results.

Updating the script fixed it.
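The Leaders Distribution blocks that table-regions.py prints come down to counting region leaders per store and dividing by the total. A minimal sketch of just that aggregation, assuming the region metadata has already been fetched from PD and reduced to a hypothetical list of leader store IDs (one per region); it does not show how the script queries PD or what the update changed.

```python
from collections import Counter
from typing import Iterable, List


def leader_distribution(leader_store_ids: Iterable[int]) -> List[str]:
    """Format per-store leader counts in the same shape as the script output.

    `leader_store_ids` is a hypothetical input: one store ID per region,
    naming the store that holds that region's leader.
    """
    counts = Counter(leader_store_ids)
    total = sum(counts.values())
    lines = [f"total leader count: {total}"]
    for store, n in counts.items():  # stores appear in first-seen order
        pct = n / total * 100
        lines.append(f"store: {store}, num_leaders: {n}, percentage: {pct:.2f}%")
    return lines


if __name__ == "__main__":
    # Toy input, not real cluster data.
    for line in leader_distribution([26085, 26380, 26085, 122029]):
        print(line)
```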

[RECORD - mars_k_order.inquiry_order_new] - Leaders Distribution:

total leader count: 2229

store: 26085, num_leaders: 380, percentage: 17.05%

store: 26380, num_leaders: 406, percentage: 18.21%

store: 122029, num_leaders: 344, percentage: 15.43%

store: 91029, num_leaders: 358, percentage: 16.06%

store: 90871, num_leaders: 400, percentage: 17.95%

store: 26584, num_leaders: 341, percentage: 15.30%

[RECORD - mars_k_order.inquiry_order_new] - Peers Distribution:

total peers count: 8916

store: 80417, num_peers(num_learners): 2229( 2229), percentage: 25.00%

store: 26085, num_peers(num_learners): 1058( 0), percentage: 11.87%

store: 26380, num_peers(num_learners): 1134( 0), percentage: 12.72%

store: 122029, num_peers(num_learners): 1106( 0), percentage: 12.40%

store: 91029, num_peers(num_learners): 1112( 0), percentage: 12.47%

store: 90871, num_peers(num_learners): 1180( 0), percentage: 13.23%

store: 26584, num_peers(num_learners): 1097( 0), percentage: 12.30%

[INDEX - uq_inquiry_order_no] - Leaders Distribution:

total leader count: 973

store: 26085, num_leaders: 130, percentage: 13.36%

store: 26380, num_leaders: 157, percentage: 16.14%

store: 122029, num_leaders: 165, percentage: 16.96%

store: 91029, num_leaders: 179, percentage: 18.40%

store: 90871, num_leaders: 165, percentage: 16.96%

store: 26584, num_leaders: 177, percentage: 18.19%

[INDEX - idx_provider_app_id] - Leaders Distribution:

total leader count: 983

store: 26085, num_leaders: 173, percentage: 17.60%

store: 26380, num_leaders: 150, percentage: 15.26%

store: 122029, num_leaders: 154, percentage: 15.67%

store: 91029, num_leaders: 164, percentage: 16.68%

store: 90871, num_leaders: 145, percentage: 14.75%

store: 26584, num_leaders: 197, percentage: 20.04%

[INDEX - create_citycode_create_idx] - Leaders Distribution:

total leader count: 969

store: 26085, num_leaders: 177, percentage: 18.27%

store: 26380, num_leaders: 158, percentage: 16.31%

store: 122029, num_leaders: 143, percentage: 14.76%

store: 91029, num_leaders: 183, percentage: 18.89%

store: 90871, num_leaders: 136, percentage: 14.04%

store: 26584, num_leaders: 172, percentage: 17.75%

[INDEX - idx_order_no] - Leaders Distribution:

total leader count: 967

store: 26085, num_leaders: 146, percentage: 15.10%

store: 26380, num_leaders: 150, percentage: 15.51%

store: 122029, num_leaders: 182, percentage: 18.82%

store: 91029, num_leaders: 159, percentage: 16.44%

store: 90871, num_leaders: 147, percentage: 15.20%

store: 26584, num_leaders: 183, percentage: 18.92%

[INDEX - idx_provider_id] - Leaders Distribution:

total leader count: 954

store: 26085, num_leaders: 175, percentage: 18.34%

store: 26380, num_leaders: 143, percentage: 14.99%

store: 122029, num_leaders: 157, percentage: 16.46%

store: 91029, num_leaders: 146, percentage: 15.30%

store: 90871, num_leaders: 144, percentage: 15.09%

store: 26584, num_leaders: 189, percentage: 19.81%

[INDEX - id] - Leaders Distribution:

total leader count: 943

store: 26085, num_leaders: 144, percentage: 15.27%

store: 26380, num_leaders: 133, percentage: 14.10%

store: 122029, num_leaders: 139, percentage: 14.74%

store: 91029, num_leaders: 176, percentage: 18.66%

store: 90871, num_leaders: 166, percentage: 17.60%

store: 26584, num_leaders: 185, percentage: 19.62%

[INDEX - uq_inquiry_order_no] - Peers Distribution:

total peers count: 2919

store: 26085, num_peers(num_learners): 456( 0), percentage: 15.62%

store: 26380, num_peers(num_learners): 432( 0), percentage: 14.80%

store: 122029, num_peers(num_learners): 525( 0), percentage: 17.99%

store: 91029, num_peers(num_learners): 552( 0), percentage: 18.91%

store: 90871, num_peers(num_learners): 473( 0), percentage: 16.20%

store: 26584, num_peers(num_learners): 481( 0), percentage: 16.48%

[INDEX - idx_provider_app_id] - Peers Distribution:

total peers count: 2949

store: 26085, num_peers(num_learners): 487( 0), percentage: 16.51%

store: 26380, num_peers(num_learners): 434( 0), percentage: 14.72%

store: 122029, num_peers(num_learners): 499( 0), percentage: 16.92%

store: 91029, num_peers(num_learners): 517( 0), percentage: 17.53%

store: 90871, num_peers(num_learners): 446( 0), percentage: 15.12%

store: 26584, num_peers(num_learners): 566( 0), percentage: 19.19%

[INDEX - create_citycode_create_idx] - Peers Distribution:

total peers count: 2907

store: 26085, num_peers(num_learners): 479( 0), percentage: 16.48%

store: 26380, num_peers(num_learners): 440( 0), percentage: 15.14%

store: 122029, num_peers(num_learners): 466( 0), percentage: 16.03%

store: 91029, num_peers(num_learners): 543( 0), percentage: 18.68%

store: 90871, num_peers(num_learners): 468( 0), percentage: 16.10%

store: 26584, num_peers(num_learners): 511( 0), percentage: 17.58%

[INDEX - idx_order_no] - Peers Distribution:

total peers count: 2901

store: 26085, num_peers(num_learners): 457( 0), percentage: 15.75%

store: 26380, num_peers(num_learners): 439( 0), percentage: 15.13%

store: 122029, num_peers(num_learners): 533( 0), percentage: 18.37%

store: 91029, num_peers(num_learners): 514( 0), percentage: 17.72%

store: 90871, num_peers(num_learners): 462( 0), percentage: 15.93%

store: 26584, num_peers(num_learners): 496( 0), percentage: 17.10%

[INDEX - idx_provider_id] - Peers Distribution:

total peers count: 2862

store: 26085, num_peers(num_learners): 503( 0), percentage: 17.58%

store: 26380, num_peers(num_learners): 441( 0), percentage: 15.41%

store: 122029, num_peers(num_learners): 505( 0), percentage: 17.65%

store: 91029, num_peers(num_learners): 464( 0), percentage: 16.21%

store: 90871, num_peers(num_learners): 452( 0), percentage: 15.79%

store: 26584, num_peers(num_learners): 497( 0), percentage: 17.37%

[INDEX - id] - Peers Distribution:

total peers count: 2829

store: 26085, num_peers(num_learners): 467( 0), percentage: 16.51%

store: 26380, num_peers(num_learners): 410( 0), percentage: 14.49%

store: 122029, num_peers(num_learners): 483( 0), percentage: 17.07%

store: 91029, num_peers(num_learners): 488( 0), percentage: 17.25%

store: 90871, num_peers(num_learners): 484( 0), percentage: 17.11%

store: 26584, num_peers(num_learners): 497( 0), percentage: 17.57%

From what I can see, the distribution is fairly even.
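To put a number on "fairly even", a small sketch that pulls the `store`/`num_leaders` pairs out of one Leaders Distribution block and reports the ratio of the busiest store to the least busy one. The sample is the `[RECORD - mars_k_order.inquiry_order_new]` leader block from above; what ratio counts as balanced is a judgment call, not a TiDB threshold.

```python
import re
from typing import Dict

# Matches lines like "store: 26085, num_leaders: 380, percentage: 17.05%".
LEADER_LINE = re.compile(r"store:\s*(\d+),\s*num_leaders:\s*(\d+)")


def parse_leaders(text: str) -> Dict[int, int]:
    """Extract {store_id: num_leaders} from one Leaders Distribution block."""
    return {int(s): int(n) for s, n in LEADER_LINE.findall(text)}


def imbalance(leaders: Dict[int, int]) -> float:
    """Busiest store's leader count divided by the least busy store's."""
    return max(leaders.values()) / min(leaders.values())


SAMPLE = """
store: 26085, num_leaders: 380, percentage: 17.05%
store: 26380, num_leaders: 406, percentage: 18.21%
store: 122029, num_leaders: 344, percentage: 15.43%
store: 91029, num_leaders: 358, percentage: 16.06%
store: 90871, num_leaders: 400, percentage: 17.95%
store: 26584, num_leaders: 341, percentage: 15.30%
"""

if __name__ == "__main__":
    leaders = parse_leaders(SAMPLE)
    print(sum(leaders.values()), round(imbalance(leaders), 2))
```

For that block this gives 2229 leaders in total and a ratio of about 1.19 (406 vs. 341). Balanced leader counts alone do not rule out a write hotspot, though: sequential inserts can still all land in one region, which is what the Dashboard heatmap would show.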

Try scattering the hotspot on this table and check again.
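One way to scatter writes on a table whose rows use the implicit row ID (as here, since `id` is not the primary key) is to enable `SHARD_ROW_ID_BITS` and pre-split the row-ID range. The helper below only assembles the SQL text; the shard-bit and region counts are arbitrary examples, and the syntax should be checked against the docs for your TiDB version. As far as I know, changing `SHARD_ROW_ID_BITS` only affects row IDs allocated afterwards, so existing data is not moved.

```python
from typing import List

MAX_ROW_ID = (1 << 63) - 1  # largest signed 64-bit row ID


def scatter_statements(table: str, shard_bits: int, regions: int) -> List[str]:
    """Build SQL that spreads implicit-row-ID writes across regions.

    `shard_bits` and `regions` are illustrative values, not tuned ones.
    With shard bits enabled, row IDs stay non-negative, so the split
    range starts at 0.
    """
    if shard_bits < 1 or regions < 1:
        raise ValueError("shard_bits and regions must be positive")
    return [
        f"ALTER TABLE {table} SHARD_ROW_ID_BITS = {shard_bits};",
        f"SPLIT TABLE {table} BETWEEN (0) AND ({MAX_ROW_ID}) REGIONS {regions};",
    ]


if __name__ == "__main__":
    for stmt in scatter_statements("mars_k_order.inquiry_order_new", 4, 16):
        print(stmt)
```

Choosing 16 regions for 4 shard bits means each pre-split region lines up with one shard prefix, so new writes should start out spread across all of them.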

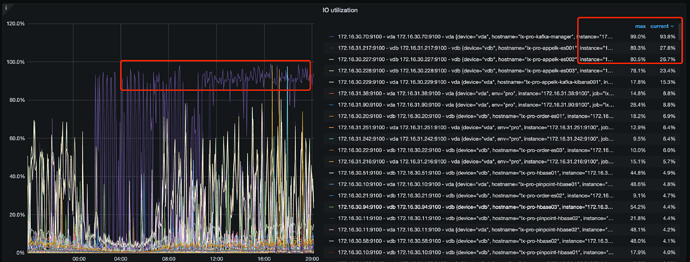

That screenshot is from the ES servers, not the TiDB servers.

I scattered the hot table, but it made no noticeable difference.

1. Please post your cluster monitoring graphs again. In the ones you shared, no charts are visible, only the expressions.

Does DM have a way to process writes in batches, i.e. batch inserts?

After setting `batch: 100`, why does TiDB still show single-row inserts?
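As I understand DM's task configuration, `batch` sets how many DML statements the sync unit groups into one downstream transaction; it does not fold rows into a multi-row INSERT, so TiDB would still see one INSERT per row. Newer DM releases document `compact` and `multiple-rows` syncer options for merging changes; check whether your version has them. The sketch only contrasts the two statement shapes; the table and column names are hypothetical, and values are left as driver placeholders.

```python
from typing import List, Sequence, Tuple

Row = Tuple[object, ...]


def per_row_batch(table: str, columns: Sequence[str], rows: Sequence[Row]) -> List[str]:
    """Shape of a statement batch: one single-row INSERT per row in one transaction.

    Row values would be bound separately by the driver; only placeholders appear.
    """
    cols = ", ".join(columns)
    marks = ", ".join(["%s"] * len(columns))
    body = [f"INSERT INTO {table} ({cols}) VALUES ({marks});" for _ in rows]
    return ["BEGIN;", *body, "COMMIT;"]


def multi_row_insert(table: str, columns: Sequence[str], rows: Sequence[Row]) -> str:
    """Shape of a merged write: all rows in one INSERT with several VALUES tuples."""
    cols = ", ".join(columns)
    tuple_marks = "(" + ", ".join(["%s"] * len(columns)) + ")"
    values = ", ".join([tuple_marks] * len(rows))
    return f"INSERT INTO {table} ({cols}) VALUES {values};"


if __name__ == "__main__":
    rows = [(1, "a"), (2, "b"), (3, "c")]
    print("\n".join(per_row_batch("t", ["id", "order_no"], rows)))
    print(multi_row_insert("t", ["id", "order_no"], rows))
```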

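Which stage do you mean exactly: full import or incremental replication? As for whether the configuration parameters are correct, I'd suggest describing the problem in detail, attaching your configuration file, and asking in a new DM thread. Thanks.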

The incremental replication stage.

Writes into the database are just extremely slow.

At this point I'm close to giving up on TiDB.

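To find the cause of the slowness, first check whether the downstream TiDB shows any anomalies, or whether the concurrency is simply too low. Both require looking at the monitoring. The monitoring information above is too messy; please reorganize it and include only the monitoring related to the TiDB cluster.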
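A back-of-the-envelope check for "concurrency is too low": if each of W workers keeps one transaction of B statements in flight and each transaction takes L seconds, throughput is at most about W·B/L rows per second (a Little's-law style estimate that ignores conflicts and queueing). W and B would come from `worker-count` and `batch` in the DM task config, and L from the TiDB latency graphs; the numbers below are placeholders, not values from this thread.

```python
import math


def estimated_rows_per_sec(workers: int, batch: int, txn_latency_s: float) -> float:
    """Rough ceiling: each worker keeps one `batch`-statement transaction in flight."""
    if workers < 1 or batch < 1 or txn_latency_s <= 0:
        raise ValueError("workers and batch must be >= 1, latency must be > 0")
    return workers * batch / txn_latency_s


def workers_needed(target_rows_per_sec: float, batch: int, txn_latency_s: float) -> int:
    """Smallest worker count whose estimate reaches the target rate."""
    if batch < 1 or txn_latency_s <= 0:
        raise ValueError("batch must be >= 1, latency must be > 0")
    return math.ceil(target_rows_per_sec * txn_latency_s / batch)


if __name__ == "__main__":
    # Placeholder inputs: read the real ones from the DM task config and
    # the TiDB transaction-duration panels.
    print(estimated_rows_per_sec(workers=16, batch=100, txn_latency_s=0.25))
    print(workers_needed(target_rows_per_sec=20000, batch=100, txn_latency_s=0.25))
```

The useful part is the shape of the relation: if observed throughput sits well below this ceiling while latency stays flat, DM concurrency is a likely suspect; if latency climbs as workers are added, the bottleneck is more likely on the TiDB side.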