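To help us respond efficiently, please provide the following information when asking a question. Clearly described problems may be answered first.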

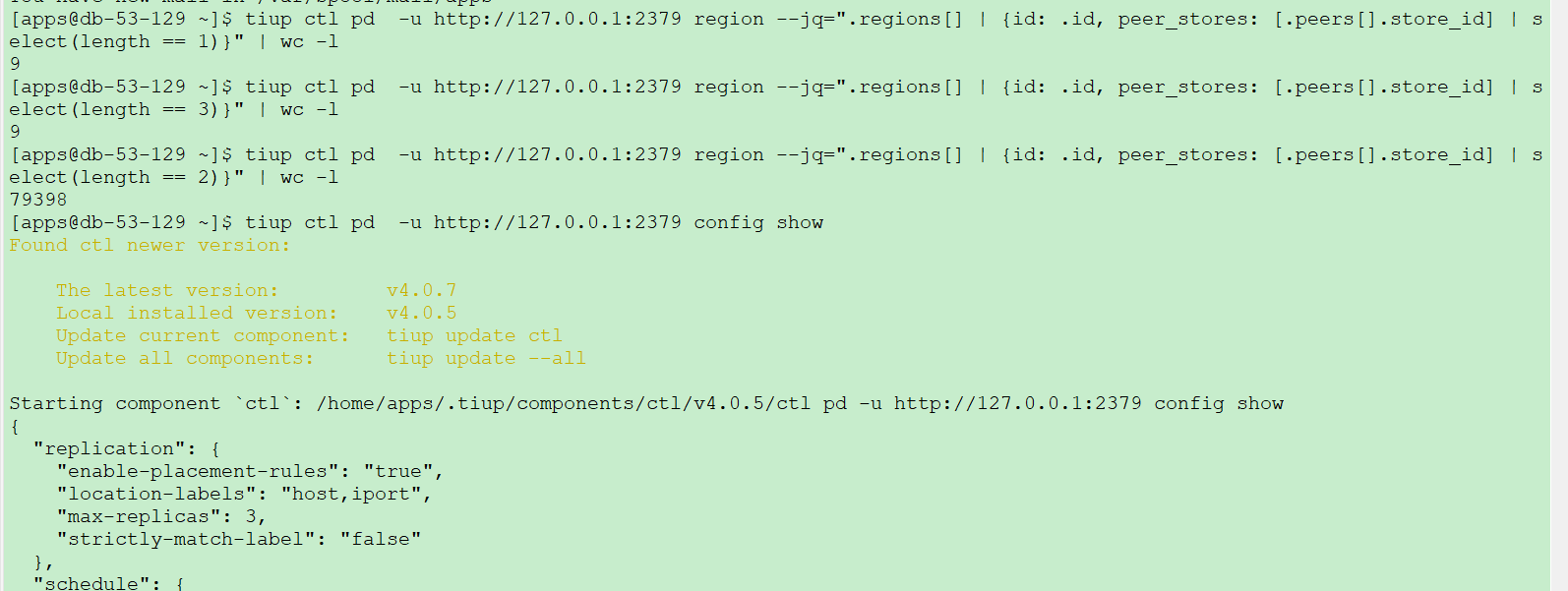

- [TiDB version]: 4.0.5

- [Problem description]:

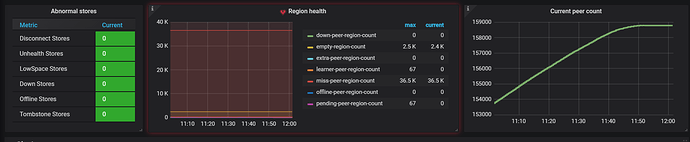

The cluster has 6 machines with two TiKV instances deployed on each. After one machine failed and went down, the entire TiDB cluster became unavailable. After the failed machine was rebooted, the two TiKV instances on it could not start:

[2020/10/26 20:48:43.223 +08:00] [FATAL] [server.rs:591] ["failed to start node: EngineTraits(Other("[components/raftstore/src/store/fsm/store.rs:837]: \"[components/raftstore/src/store/peer_storage.rs:385]: [region 13024] entry at apply index 4531624 doesn\\\'t exist, may lose data.\""))"]
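When collecting such FATAL lines from several TiKV logs, it can help to pull out the Region ID and apply index programmatically. The following is a minimal Python sketch; the helper name `parse_missing_entry` and the regular expression are illustrative assumptions based only on the message text quoted above, not on any TiKV tooling.

```python
import re

# Assumed pattern, derived from the single log message quoted in this post.
LOG_PATTERN = re.compile(r"\[region (\d+)\] entry at apply index (\d+) doesn")


def parse_missing_entry(line: str):
    """Return (region_id, apply_index) if the line matches, else None."""
    match = LOG_PATTERN.search(line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


# Message fragment copied from the log line in this post.
sample = "[region 13024] entry at apply index 4531624 doesn't exist, may lose data."
print(parse_missing_entry(sample))
```

The extracted Region ID is the value one would then look up in PD when investigating which peers of that Region are still healthy.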

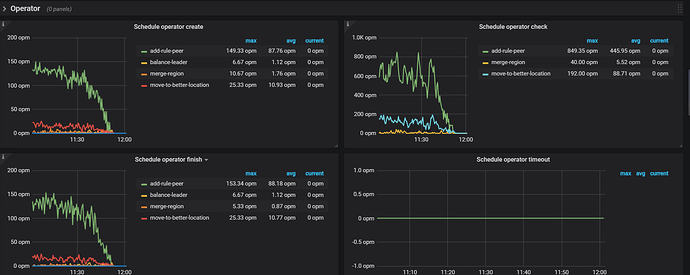

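Scheduling does not seem to have taken effect: Region leaders were not transferred away from the failed node. Taking the failed node offline with tiup scale-in had no effect, and restarting the whole cluster did not help either.

sync-log setting: raftstore.sync-log: false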

pdctl config show all:

    » config show all
    {
      "client-urls": "http://0.0.0.0:2379",
      "peer-urls": "http://172.20.53.141:2380",
      "advertise-client-urls": "http://172.20.53.141:2379",
      "advertise-peer-urls": "http://172.20.53.141:2380",
      "name": "pd-53-141-2379",
      "data-dir": "/apps/dba/data/tidb/pd_2379",
      "force-new-cluster": false,
      "enable-grpc-gateway": true,
      "initial-cluster": "pd-53-129-2379=http://172.20.53.129:2380,pd-53-141-2379=http://172.20.53.141:2380,pd-53-138-2379=http://172.20.53.138:2380",
      "initial-cluster-state": "new",
      "join": "",
      "lease": 3,
      "log": {
        "level": "info",
        "format": "text",
        "disable-timestamp": false,
        "file": {
          "filename": "/apps/dba/logs/tidb/pd_2379/pd.log",
          "max-size": 100,
          "max-days": 30,
          "max-backups": 7
        },
        "development": false,
        "disable-caller": false,
        "disable-stacktrace": false,
        "disable-error-verbose": true,
        "sampling": null
      },
      "tso-save-interval": "3s",
      "metric": {
        "job": "pd-53-141-2379",
        "address": "",
        "interval": "15s"
      },
      "schedule": {
        "max-snapshot-count": 3,
        "max-pending-peer-count": 16,
        "max-merge-region-size": 20,
        "max-merge-region-keys": 200000,
        "split-merge-interval": "1h0m0s",
        "enable-one-way-merge": "false",
        "enable-cross-table-merge": "false",
        "patrol-region-interval": "100ms",
        "max-store-down-time": "3m0s",
        "leader-schedule-limit": 64,
        "leader-schedule-policy": "count",
        "region-schedule-limit": 4096,
        "replica-schedule-limit": 64,
        "merge-schedule-limit": 16,
        "hot-region-schedule-limit": 0,
        "hot-region-cache-hits-threshold": 3,
        "store-limit": {
          "1": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "12": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "15": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "2": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "20": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "21": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "22": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "23": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "24": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "3": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "5": {
            "add-peer": 15,
            "remove-peer": 15
          },
          "8": {
            "add-peer": 15,
            "remove-peer": 15
          }
        },
        "tolerant-size-ratio": 0,
        "low-space-ratio": 0.9,
        "high-space-ratio": 0.8,
        "scheduler-max-waiting-operator": 5,
        "enable-remove-down-replica": "true",
        "enable-replace-offline-replica": "true",
        "enable-make-up-replica": "true",
        "enable-remove-extra-replica": "true",
        "enable-location-replacement": "true",
        "enable-debug-metrics": "false",
        "schedulers-v2": [
          {
            "type": "balance-region",
            "args": null,
            "disable": false,
            "args-payload": ""
          },
          {
            "type": "balance-leader",
            "args": null,
            "disable": false,
            "args-payload": ""
          },
          {
            "type": "hot-region",
            "args": null,
            "disable": false,
            "args-payload": ""
          },
          {
            "type": "label",
            "args": null,
            "disable": false,
            "args-payload": ""
          }
        ],
        "schedulers-payload": {
          "balance-hot-region-scheduler": null,
          "balance-leader-scheduler": {
            "name": "balance-leader-scheduler",
            "ranges": [
              {
                "end-key": "",
                "start-key": ""
              }
            ]
          },
          "balance-region-scheduler": {
            "name": "balance-region-scheduler",
            "ranges": [
              {
                "end-key": "",
                "start-key": ""
              }
            ]
          },
          "label-scheduler": {
            "name": "label-scheduler",
            "ranges": [
              {
                "end-key": "",
                "start-key": ""
              }
            ]
          }
        },
        "store-limit-mode": "manual"
      },
      "replication": {
        "max-replicas": 2,
        "location-labels": "host,iport",
        "strictly-match-label": "false",
        "enable-placement-rules": "true"
      },
      "pd-server": {
        "use-region-storage": "true",
        "max-gap-reset-ts": "24h0m0s",
        "key-type": "table",
        "runtime-services": "",
        "metric-storage": "http://172.20.51.225:9090",
        "dashboard-address": "http://172.20.53.129:2379",
        "trace-region-flow": "false"
      },
      "cluster-version": "4.0.5",
      "quota-backend-bytes": "8GiB",
      "auto-compaction-mode": "periodic",
      "auto-compaction-retention-v2": "1h",
      "TickInterval": "500ms",
      "ElectionInterval": "15s",
      "PreVote": true,
      "security": {
        "cacert-path": "",
        "cert-path": "",
        "key-path": "",
        "cert-allowed-cn": null
      },
      "label-property": {},
      "WarningMsgs": [
        "disable-telemetry in conf/pd.toml is deprecated, use enable-telemetry instead",
        "Config contains undefined item: enable-dynamic-config, use-region-storage"
      ],
      "DisableStrictReconfigCheck": false,
      "HeartbeatStreamBindInterval": "1m0s",
      "LeaderPriorityCheckInterval": "1m0s",
      "dashboard": {
        "tidb-cacert-path": "",
        "tidb-cert-path": "",
        "tidb-key-path": "",
        "public-path-prefix": "",
        "internal-proxy": true,
        "enable-telemetry": false,
        "disable-telemetry": true
      },
      "replication-mode": {
        "replication-mode": "majority",
        "dr-auto-sync": {
          "label-key": "",
          "primary": "",
          "dr": "",
          "primary-replicas": 0,
          "dr-replicas": 0,
          "wait-store-timeout": "1m0s",
          "wait-sync-timeout": "1m0s"
        }
      }
    }
    »
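One setting in the PD output that may be relevant to the outage is `max-replicas`, which is 2 here. Each Region in TiKV is a Raft group, and a Raft group needs a majority of its peers to elect a leader and commit writes. The sketch below only illustrates that majority arithmetic; the function names are hypothetical, and it is not a diagnosis of this particular cluster.

```python
def raft_quorum(replicas: int) -> int:
    """Smallest number of peers that forms a majority of `replicas`."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """How many peers can be lost while a majority is still reachable."""
    return replicas - raft_quorum(replicas)


for n in (2, 3, 5):
    print(f"replicas={n} quorum={raft_quorum(n)} tolerated={tolerated_failures(n)}")
```

With 2 replicas the majority is 2, so a Region with a peer on the failed machine cannot elect a leader until that peer returns, and leader transfer by the scheduler cannot help in that case. With 3 replicas one peer can be lost.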

tiup cluster display:

Found cluster newer version:

The latest version: v1.2.1

Local installed version: v1.1.1

Update current component: tiup update cluster

Update all components: tiup update --all

Starting component cluster: /home/apps/.tiup/components/cluster/v1.1.1/tiup-cluster display tidblepro

tidb Cluster: tidblepro

tidb Version: v4.0.5

| ID | Role | Host | Ports | OS/Arch | Status | Data Dir | Deploy Dir |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 172.20.51.89:9093 | alertmanager | 172.20.51.89 | 9093/9094 | linux/x86_64 | inactive | /apps/dba/data/tidb/alert_9093 | /apps/dba/svr/tidb/alert_9093 |
| 172.20.51.89:3000 | grafana | 172.20.51.89 | 3000 | linux/x86_64 | inactive | - | /apps/dba/svr/tidb/grafana_3000 |
| 172.20.53.129:2379 | pd | 172.20.53.129 | 2379/2380 | linux/x86_64 | Up\|UI | /apps/dba/data/tidb/pd_2379 | /apps/dba/svr/tidb/pd_2379 |
| 172.20.53.138:2379 | pd | 172.20.53.138 | 2379/2380 | linux/x86_64 | Up\|L | /apps/dba/data/tidb/pd_2379 | /apps/dba/svr/tidb/pd_2379 |
| 172.20.53.141:2379 | pd | 172.20.53.141 | 2379/2380 | linux/x86_64 | Up | /apps/dba/data/tidb/pd_2379 | /apps/dba/svr/tidb/pd_2379 |
| 172.20.51.225:9090 | prometheus | 172.20.51.225 | 9090 | linux/x86_64 | inactive | /data/dba/data/tidb/prometheus_9090 | /data/dba/svr/tidb/prometheus_9090 |
| 172.20.53.131:4000 | tidb | 172.20.53.131 | 4000/10080 | linux/x86_64 | Down | - | /apps/dba/svr/tidb/tidb_4000 |
| 172.20.53.133:4000 | tidb | 172.20.53.133 | 4000/10080 | linux/x86_64 | Down | - | /apps/dba/svr/tidb/tidb_4000 |
| 172.20.53.135:4000 | tidb | 172.20.53.135 | 4000/10080 | linux/x86_64 | Down | - | /apps/dba/svr/tidb/tidb_4000 |
| 172.20.53.141:4000 | tidb | 172.20.53.141 | 4000/10080 | linux/x86_64 | Down | - | /apps/dba/svr/tidb/tidb_4000 |
| 172.20.55.100:20160 | tikv | 172.20.55.100 | 20160/20180 | linux/x86_64 | Up | /apps/dba/data/tidb/tikv_20160 | /apps/dba/svr/tidb/tikv_20160 |
| 172.20.55.100:20161 | tikv | 172.20.55.100 | 20161/20181 | linux/x86_64 | Up | /apps/dba/data/tidb/tikv_20161 | /apps/dba/svr/tidb/tikv_20161 |
| 172.20.55.101:20160 | tikv | 172.20.55.101 | 20160/20180 | linux/x86_64 | Up | /apps/dba/data/tidb/tikv_20160 | /apps/dba/svr/tidb/tikv_20160 |
| 172.20.55.101:20161 | tikv | 172.20.55.101 | 20161/20181 | linux/x86_64 | Up | /apps/dba/data/tidb/tikv_20161 | /apps/dba/svr/tidb/tikv_20161 |
| 172.20.55.102:20160 | tikv | 172.20.55.102 | 20160/20180 | linux/x86_64 | Pending Offline | /apps/dba/data/tidb/tikv_20160 | /apps/dba/svr/tidb/tikv_20160 |
| 172.20.55.102:20161 | tikv | 172.20.55.102 | 20161/20181 | linux/x86_64 | Pending Offline | /apps/dba/data/tidb/tikv_20161 | /apps/dba/svr/tidb/tikv_20161 |
| 172.20.55.103:20160 | tikv | 172.20.55.103 | 20160/20180 | linux/x86_64 | Up | /apps/dba/data/tidb/tikv_20160 | /apps/dba/svr/tidb/tikv_20160 |
| 172.20.55.103:20161 | tikv | 172.20.55.103 | 20161/20181 | linux/x86_64 | Up | /apps/dba/data/tidb/tikv_20161 | /apps/dba/svr/tidb/tikv_20161 |
| 172.20.55.104:20160 | tikv | 172.20.55.104 | 20160/20180 | linux/x86_64 | Up | /apps/dba/data/tidb/tikv_20160 | /apps/dba/svr/tidb/tikv_20160 |
| 172.20.55.104:20161 | tikv | 172.20.55.104 | 20161/20181 | linux/x86_64 | Up | /apps/dba/data/tidb/tikv_20161 | /apps/dba/svr/tidb/tikv_20161 |
| 172.20.55.105:20160 | tikv | 172.20.55.105 | 20160/20180 | linux/x86_64 | Up | /apps/dba/data/tidb/tikv_20160 | /apps/dba/svr/tidb/tikv_20160 |
| 172.20.55.105:20161 | tikv | 172.20.55.105 | 20161/20181 | linux/x86_64 | Up | /apps/dba/data/tidb/tikv_20161 | /apps/dba/svr/tidb/tikv_20161 |
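To make the state of the cluster easier to see at a glance, the display output can be summarized by role and status. The Python sketch below hard-codes a few (ID, role, status) rows copied from the output above; the `ROWS` list and both helper functions are illustrative assumptions, not part of tiup.

```python
from collections import Counter

# A few (id, role, status) rows copied by hand from the display output above.
ROWS = [
    ("172.20.53.129:2379", "pd", "Up|UI"),
    ("172.20.53.138:2379", "pd", "Up|L"),
    ("172.20.53.131:4000", "tidb", "Down"),
    ("172.20.55.100:20160", "tikv", "Up"),
    ("172.20.55.102:20160", "tikv", "Pending Offline"),
    ("172.20.55.102:20161", "tikv", "Pending Offline"),
]


def unhealthy(rows):
    """Return the IDs whose status does not start with 'Up'."""
    return [node_id for node_id, _role, status in rows if not status.startswith("Up")]


def status_by_role(rows):
    """Count (role, status) pairs, dropping suffixes such as '|L' or '|UI'."""
    return Counter((role, status.split("|")[0]) for _id, role, status in rows)


print(unhealthy(ROWS))
print(status_by_role(ROWS))
```

In this sample, both TiKV instances that are not `Up` sit on the same host, 172.20.55.102, which matches the machine that went down.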